1) Deployment environment

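- Deploy node x1: the node that runs these ansible playbooks

- etcd nodes x3: the etcd cluster should have an odd number of members (1, 3, 5, 7...)

- master node x1: runs the main cluster components

- worker nodes x3: the nodes where applications are actually deployed; add machines or resources as needed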
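The odd-number rule comes from etcd's majority quorum: a cluster of n members needs floor(n/2)+1 of them available, so it keeps working with at most floor((n-1)/2) failed members. The small bash sketch below (function names are illustrative, not part of the playbooks) computes both values and shows that an even member count adds no fault tolerance over the odd count just below it.

```bash
#!/usr/bin/env bash
# Majority quorum for an etcd cluster of n members: floor(n/2) + 1.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# Members that may fail while a quorum still survives: floor((n-1)/2).
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

# Print a small table for 1..7 members.
for n in 1 2 3 4 5 6 7; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

With 3 members, as in this lab, the quorum is 2 and one member may fail; adding a 4th raises the quorum to 3 but still tolerates only one failure.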

2) Download and install docker

wget download.yunwei.edu/shell/docker.tar.gz
ls
tar zxvf docker.tar.gz
ls
cd docker/
ls
bash docker.sh

3) Set up passwordless SSH login and configure hostname resolution

172.16.254.20 reg.yunwei.edu     # needed to reach the address the docker-based ansible resources are fetched from
192.168.253.9 cicd               # one deploy node plus three cluster nodes; node1 is also the master
192.168.253.14 node1
192.168.253.11 node2
192.168.253.10 node3
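Both halves of this step can be scripted. The bash sketch below assumes it runs as root on the deploy node (cicd), that root SSH logins are allowed on the nodes, and that the standard OpenSSH tools `ssh-keygen` and `ssh-copy-id` are installed; `add_host_entries` and `push_ssh_key` are hypothetical helpers, not part of the original scripts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" entries to a hosts file unless NAME
# already appears on a non-comment line, so re-running it is harmless.
add_host_entries() {
  local file=$1; shift
  local entry ip name
  for entry in "$@"; do
    ip=${entry%% *}
    name=${entry#* }
    if ! awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
      printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
  done
}

# Hypothetical helper: create a passphrase-less RSA key once, then install
# the public key on each host (ssh-copy-id asks for each root password).
push_ssh_key() {
  mkdir -p ~/.ssh && chmod 700 ~/.ssh
  [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa
  local host
  for host in "$@"; do
    ssh-copy-id "root@$host"
  done
}

# Usage, as root on the deploy node:
#   add_host_entries /etc/hosts "172.16.254.20 reg.yunwei.edu" "192.168.253.9 cicd" \
#     "192.168.253.14 node1" "192.168.253.11 node2" "192.168.253.10 node3"
#   push_ssh_key cicd node1 node2 node3
```

Skipping names that are already mapped keeps /etc/hosts free of duplicate entries when the step is repeated on several machines.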

4) Download and run the docker-based ansible

[root@cicd ~]# docker run -itd -v /etc/ansible:/etc/ansible -v /etc/kubernetes/:/etc/kubernetes/ -v /root/.kube:/root/.kube -v /usr/local/bin/:/usr/local/bin/ 1acb4fd5df5b /bin/sh
WARNING: IPv4 forwarding is disabled. Networking will not work.

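Fix for the warning above: add the line below to /usr/lib/sysctl.d/00-system.conf, then restart the network service.

[root@cicd ~]# cat /usr/lib/sysctl.d/00-system.conf
net.ipv4.ip_forward=1
[root@cicd ~]# systemctl restart network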
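A sketch that makes this edit idempotent and applies it without restarting the network, assuming GNU sed (as on CentOS 7) and root privileges; `enable_ip_forward` is a hypothetical helper. `sysctl --system` reloads every sysctl.d file; systemd gives a file in /etc/sysctl.d/ precedence over a same-named file in /usr/lib/sysctl.d/, so /etc is generally the safer place for local changes. Containers started while forwarding was off may need to be restarted afterwards.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ensure CONF contains exactly one "net.ipv4.ip_forward=1".
enable_ip_forward() {
  local conf=$1
  if grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=' "$conf" 2>/dev/null; then
    # rewrite an existing (possibly "= 0") setting in place
    sed -i -E 's/^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=.*/net.ipv4.ip_forward=1/' "$conf"
  else
    echo 'net.ipv4.ip_forward=1' >> "$conf"
  fi
}

# Usage (as root), then reload all sysctl.d files and verify:
#   enable_ip_forward /usr/lib/sysctl.d/00-system.conf
#   sysctl --system
#   sysctl net.ipv4.ip_forward     # expect: net.ipv4.ip_forward = 1
```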

5) Enter the ansible container just started.

[root@cicd ~]# docker exec -it 36aac0dee157 /bin/sh
/ # ansible all -m ping
 [WARNING]: Could not match supplied host pattern, ignoring: all
 [WARNING]: provided hosts list is empty, only localhost is available
 [WARNING]: No hosts matched, nothing to do

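The ping fails because no host groups (inventory) have been configured yet and the host_key_checking line in ansible.cfg has not been uncommented.

Because the container's directories are mapped to the host, the following steps can be done directly on the host.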
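Once ansible.cfg is in place under /etc/ansible (step 7), the host-key part is a one-line edit. A sketch, assuming the file contains the commented line `#host_key_checking = False` and that GNU sed is available on the host; the helper name is hypothetical. The inventory part is handled by the hosts file in step 8.

```bash
#!/usr/bin/env bash
# Hypothetical helper: uncomment "#host_key_checking = False" in ansible.cfg
# so ansible does not stop at the SSH host-key prompt for new nodes.
uncomment_host_key_checking() {
  local cfg=${1:-/etc/ansible/ansible.cfg}
  sed -i -E 's/^[[:space:]]*#[[:space:]]*(host_key_checking[[:space:]]*=[[:space:]]*False)/\1/' "$cfg"
  # succeed only if the setting is now active
  grep -Eq '^host_key_checking[[:space:]]*=[[:space:]]*False' "$cfg"
}

# Usage on the host (the directory is shared with the container):
#   uncomment_host_key_checking /etc/ansible/ansible.cfg
```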

6) Upload the k8s archive, extract it, and enter the kubernetes directory.

wget http://download.yunwei.edu/shell/docker.tar.gz

[root@cicd kubernetes]# ls

bash ca.tar.gz harbor-offline-installer-v1.4.0.tgz image image.tar.gz k8s197.tar.gz kube-yunwei-197.tar.gz scope.yaml sock-shop

7) Extract kube-yunwei-197.tar.gz and move everything inside the extracted directory to /etc/ansible/.

Extract k8s197.tar.gz and move all extracted files to /etc/ansible/bin/.
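Both moves follow the same pattern, sketched below as a bash function. It assumes each archive contains exactly one top-level directory (it refuses to run otherwise) and that the destination does not yet hold same-named subdirectories; `extract_into` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract ARCHIVE into a temporary directory and move the
# contents of its single top-level directory into DEST (created if missing).
extract_into() {
  local archive=$1 dest=$2 tmp top rc
  tmp=$(mktemp -d) || return 1
  tar -xzf "$archive" -C "$tmp" || { rm -rf "$tmp"; return 1; }
  # Assumption: exactly one top-level directory inside the archive.
  top=$(find "$tmp" -mindepth 1 -maxdepth 1 -type d)
  if [ -z "$top" ] || [ "$(printf '%s\n' "$top" | wc -l)" -ne 1 ]; then
    echo "expected one top-level directory in $archive" >&2
    rm -rf "$tmp"
    return 1
  fi
  mkdir -p "$dest"
  # dotglob so hidden files are moved as well
  ( shopt -s dotglob; mv "$top"/* "$dest"/ )
  rc=$?
  rm -rf "$tmp"
  return $rc
}

# Usage, in the kubernetes directory on the host:
#   extract_into kube-yunwei-197.tar.gz /etc/ansible
#   extract_into k8s197.tar.gz /etc/ansible/bin
```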

[root@cicd ansible]# ls
01.prepare.retry 02.etcd.yml 04.kube-master.yml 06.network.yml ansible.cfg example manifests tools 01.prepare.yml 03.docker.yml 05.kube-node.yml 99.clean.yml bin hosts roles

[root@cicd bin]# ls

bridge cfssljson docker-containerd-ctr docker-proxy flannel kubectl loopback

calicoctl docker docker-containerd-shim docker-runc host-local kubelet portmap

cfssl docker-compose dockerd etcd kube-apiserver kube-proxy VERSION.md

cfssl-certinfo docker-containerd docker-init etcdctl kube-controller-manager kube-scheduler

8) Create a hosts file under /etc/ansible/ and copy the sample file from the example directory into it, then adjust it for your nodes:

[deploy]
192.168.253.9    # deploy node IP

# For the etcd cluster, provide the NODE_NAME and NODE_IP variables below; the etcd cluster should have an odd number of members (1, 3, 5, 7...)
[etcd]
192.168.253.14 NODE_NAME=etcd1 NODE_IP="192.168.253.14"
192.168.253.11 NODE_NAME=etcd2 NODE_IP="192.168.253.11"
192.168.253.10 NODE_NAME=etcd3 NODE_IP="192.168.253.10"

[kube-master]
192.168.253.14 NODE_IP="192.168.253.14"

[kube-node]
192.168.253.14 NODE_IP="192.168.253.14"
192.168.253.11 NODE_IP="192.168.253.11"
192.168.253.10 NODE_IP="192.168.253.10"

[all:vars]
# --------- Main cluster parameters ---------------
# Cluster deployment mode: allinone, single-master, multi-master
DEPLOY_MODE=single-master

# Cluster MASTER IP
MASTER_IP="192.168.253.14"

# Cluster APISERVER (on the master node IP)
KUBE_APISERVER="https://192.168.253.14:6443"

# Token used for TLS Bootstrapping, generated with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '
BOOTSTRAP_TOKEN="d18f94b5fa585c7110f56803d925d2e7"

# Cluster network plugin; calico and flannel are currently supported
CLUSTER_NETWORK="calico"

# Selected calico settings; for the full configuration customize roles/calico/templates/calico.yaml.j2
# Setting CALICO_IPV4POOL_IPIP="off" can improve network performance; for its constraints see 05.安装calico网络组件.md
CALICO_IPV4POOL_IPIP="always"

# Host IP used by calico-node; BGP peers connect via this address. Specify an interface manually ("interface=eth0") or use auto-detection as below
IP_AUTODETECTION_METHOD="can-reach=113.5.5.5"

# Selected flannel settings; see roles/flannel/templates/kube-flannel.yaml.j2
FLANNEL_BACKEND="vxlan"

# Service CIDR: not routable before deployment; after deployment reachable inside the cluster via IP:Port
SERVICE_CIDR="10.68.0.0/16"

# Pod CIDR (Cluster CIDR): not routable before deployment, routable **after** deployment
CLUSTER_CIDR="172.20.0.0/16"

# Service port range (NodePort Range)
NODE_PORT_RANGE="20000-40000"

# kubernetes service IP (pre-allocated, normally the first IP in SERVICE_CIDR)
CLUSTER_KUBERNETES_SVC_IP="10.68.0.1"

# Cluster DNS service IP (pre-allocated from SERVICE_CIDR)
CLUSTER_DNS_SVC_IP="10.68.0.2"

# Cluster DNS domain
CLUSTER_DNS_DOMAIN="cluster.local."

# IPs and ports for etcd peer communication, **set according to the actual etcd members**
ETCD_NODES="etcd1=https://192.168.253.14:2380,etcd2=https://192.168.253.11:2380,etcd3=https://192.168.253.10:2380"

# etcd client endpoints, **set according to the actual etcd members**
ETCD_ENDPOINTS="https://192.168.253.14:2379,https://192.168.253.11:2379,https://192.168.253.10:2379"

# Username and password for cluster basic auth
BASIC_AUTH_USER="admin"
BASIC_AUTH_PASS="admin"

# --------- Additional parameters --------------------
# Default binary directory (changed from /root to /usr)
bin_dir="/usr/local/bin"

# Certificate directory
ca_dir="/etc/kubernetes/ssl"

# Deployment directory, i.e. the ansible working directory
base_dir="/etc/ansible"
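ETCD_NODES and ETCD_ENDPOINTS must list the same members as the [etcd] group, and BOOTSTRAP_TOKEN is best generated fresh rather than copied. A bash sketch, assuming members are named etcd1, etcd2, ... in the same order as the [etcd] group, with peer port 2380 and client port 2379 as in the file above; the function names are hypothetical, and `gen_bootstrap_token` simply wraps the command given in the file's own comment.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build ETCD_NODES ("etcdN=https://IP:2380,...")
# from the etcd member IPs, numbered in the order given.
etcd_nodes() {
  local i=1 ip out=""
  for ip in "$@"; do
    out+="${out:+,}etcd$i=https://$ip:2380"
    i=$((i + 1))
  done
  echo "$out"
}

# Hypothetical helper: build ETCD_ENDPOINTS ("https://IP:2379,...").
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    out+="${out:+,}https://$ip:2379"
  done
  echo "$out"
}

# Same command as the BOOTSTRAP_TOKEN comment: 16 random bytes as 32 hex digits.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' '
}

# Usage:
#   etcd_nodes 192.168.253.14 192.168.253.11 192.168.253.10
#   etcd_endpoints 192.168.253.14 192.168.253.11 192.168.253.10
#   gen_bootstrap_token
```

For the three IPs above, the first two calls reproduce the ETCD_NODES and ETCD_ENDPOINTS values in this hosts file exactly.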

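9) Disable the firewall and restart docker

Enter the container again and cd to /etc/ansible.

The playbook files (01-06) and several other files are now visible: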

[root@cicd ansible]# docker exec -it ad5e6744151e /bin/sh
/ # cd /etc/ansible/
/etc/ansible # ls
01.prepare.retry 05.kube-node.yml example 01.prepare.yml 06.network.yml hosts 02.etcd.yml 99.clean.yml manifests 03.docker.yml ansible.cfg roles 04.kube-master.yml bin tools

10) Run playbooks 01-05 inside the container

/etc/ansible # ansible-playbook 01.prepare.yml

/etc/ansible # ansible-playbook 02.etcd.yml

/etc/ansible # ansible-playbook 03.docker.yml

/etc/ansible # ansible-playbook 04.kube-master.yml

/etc/ansible # ansible-playbook 05.kube-node.yml
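Each playbook builds on the previous one, so it is worth stopping at the first failure instead of running the rest against a half-prepared cluster. A minimal sketch; `run_playbooks` and the ANSIBLE_PLAYBOOK override (handy for a dry run with `echo`) are hypothetical, and beyond POSIX sh it only uses `local`, so it should also work in a minimal container shell that supports `local`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run the given playbooks in order and stop at the
# first failure. ANSIBLE_PLAYBOOK may name another command for a dry run;
# it defaults to ansible-playbook.
run_playbooks() {
  local runner=${ANSIBLE_PLAYBOOK:-ansible-playbook} pb
  for pb in "$@"; do
    echo ">>> $pb"
    "$runner" "$pb" || { echo "failed: $pb" >&2; return 1; }
  done
}

# Usage, inside the container:
#   cd /etc/ansible
#   run_playbooks 01.prepare.yml 02.etcd.yml 03.docker.yml 04.kube-master.yml 05.kube-node.yml
```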

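11) Open a second session on the deploy node. On the other three nodes, create a directory named image to receive the image archives sent from the deploy node.

Run the following commands on the deploy node, then send every image under the image directory to node1, node2 and node3.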

[root@cicd ~]# cd kubernetes
[root@cicd kubernetes]# ls
bash ca.tar.gz harbor-offline-installer-v1.4.0.tgz image image.tar.gz k8s197.tar.gz kube-yunwei-197.tar.gz scope.yaml sock-shop
[root@cicd kubernetes]# cd image
[root@cicd image]# ls
bash-completion-2.1-6.el7.noarch.rpm coredns-1.0.6.tar.gz heapster-v1.5.1.tar kubernetes-dashboard-amd64-v1.8.3.tar.gz calico grafana-v4.4.3.tar influxdb-v1.3.3.tar pause-amd64-3.1.tar
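The copy command itself is not shown above. One way to do it is sketched below, assuming the passwordless root SSH from step 3 and that the calico/ subdirectory holds the calico-*.tar archives that appear directly in node2's image/ listing in the next step. `distribute_images` and its DRY_RUN switch are hypothetical; with DRY_RUN=1 it only prints the ssh/scp commands. The remote `mkdir -p` also makes creating image/ by hand optional.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the image archives (plus the bash-completion rpm
# and the calico/*.tar files, flattened) from SRC to ~/image/ on each node.
distribute_images() {
  local src=$1; shift
  local run="" node
  [ "${DRY_RUN:-0}" = 1 ] && run=echo
  for node in "$@"; do
    $run ssh "root@$node" mkdir -p image || return 1
    $run scp "$src"/*.tar "$src"/*.tar.gz "$src"/*.rpm "$src"/calico/*.tar \
      "root@$node:image/" || return 1
  done
}

# Usage, on the deploy node:
#   DRY_RUN=1 distribute_images ~/kubernetes/image node1 node2 node3   # preview
#   distribute_images ~/kubernetes/image node1 node2 node3
```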

12) On each node, enter the image directory and load all images (the session below is from node2)

[root@node2 ~]# cd image/
[root@node2 image]# ls
bash-completion-2.1-6.el7.noarch.rpm grafana-v4.4.3.tar calico-cni-v2.0.5.tar heapster-v1.5.1.tar calico-kube-controllers-v2.0.4.tar influxdb-v1.3.3.tar calico-node-v3.0.6.tar kubernetes-dashboard-amd64-v1.8.3.tar.gz coredns-1.0.6.tar.gz pause-amd64-3.1.tar
[root@node2 image]# for f in *.tar *.tar.gz; do docker load -i "$f"; done

Only the .tar and .tar.gz archives are passed to docker load; the bash-completion rpm is not an image.

13) Check that the images were loaded

[root@node2 image]# docker images
REPOSITORY                                          TAG       IMAGE ID       CREATED          SIZE
mariadb                                             latest    3ab4bbd66f97   8 hours ago      344MB
zabbix/zabbix-agent                                 latest    bc21cdfcc381   6 days ago       14.9MB
calico/node                                         v3.0.6    15f002a49ae8   14 months ago    248MB
calico/kube-controllers                             v2.0.4    f8e683e673ec   14 months ago    55.1MB
calico/cni                                          v2.0.5    b5e5532af766   14 months ago    69.1MB
coredns/coredns                                     1.0.6     d4b7466213fe   16 months ago    39.9MB
mirrorgooglecontainers/kubernetes-dashboard-amd64   v1.8.3    0c60bcf89900   16 months ago    102MB
mirrorgooglecontainers/heapster-amd64               v1.5.1    c41e77c31c91   16 months ago    75.3MB
mirrorgooglecontainers/pause-amd64                  3.1       da86e6ba6ca1   18 months ago    742kB
mirrorgooglecontainers/heapster-influxdb-amd64      v1.3.3    577260d221db   21 months ago    12.5MB
mirrorgooglecontainers/heapster-grafana-amd64       v4.4.3    8cb3de219af7   21 months ago    152MB
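Scanning this table by eye works for a handful of images; the sketch below checks for specific repository:tag pairs instead. It relies on the `--format` Go-template option of `docker images`; `check_images` and the DOCKER override (used for testing) are hypothetical. The required list in the usage comment is copied from the listing above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "missing: REPO:TAG" for every required image that
# `docker images` does not report; return 1 if anything is missing.
check_images() {
  local present img rc=0
  present=$("${DOCKER:-docker}" images --format '{{.Repository}}:{{.Tag}}') || return 2
  for img in "$@"; do
    if ! grep -qxF "$img" <<<"$present"; then
      echo "missing: $img"
      rc=1
    fi
  done
  return $rc
}

# Usage, on each node:
#   check_images calico/node:v3.0.6 calico/kube-controllers:v2.0.4 calico/cni:v2.0.5 \
#     coredns/coredns:1.0.6 mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.8.3 \
#     mirrorgooglecontainers/heapster-amd64:v1.5.1 mirrorgooglecontainers/pause-amd64:3.1 \
#     mirrorgooglecontainers/heapster-influxdb-amd64:v1.3.3 \
#     mirrorgooglecontainers/heapster-grafana-amd64:v4.4.3
```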

Repeat the same steps on the other two nodes.

14) Run playbook 06 inside the container on the deploy node

/etc/ansible # ansible-playbook 06.network.yml

15) Go to the manifests/coredns/ directory and run:

kubectl create -f .

/etc/ansible # cd manifests/
/etc/ansible/manifests # ls
coredns dashboard efk heapster ingress kubedns
/etc/ansible/manifests # cd coredns/
/etc/ansible/manifests/coredns # ls
coredns.yaml
/etc/ansible/manifests/coredns # kubectl create -f .
serviceaccount "coredns" created
clusterrole "system:coredns" created
clusterrolebinding "system:coredns" created
configmap "coredns" created
deployment "coredns" created
service "coredns" created

16) On the deploy node's host (not inside the container), run:

kubectl get node -o wide

[root@cicd image]# kubectl get node -o wide
NAME             STATUS                     ROLES     AGE       VERSION   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
192.168.253.10   Ready                      <none>    10m       v1.9.7    <none>        CentOS Linux 7 (Core)   3.10.0-693.el7.x86_64   docker://18.3.0
192.168.253.11   Ready                      <none>    10m       v1.9.7    <none>        CentOS Linux 7 (Core)   3.10.0-693.el7.x86_64   docker://18.3.0
192.168.253.14   Ready,SchedulingDisabled   <none>    12m       v1.9.7    <none>        CentOS Linux 7 (Core)   3.10.0-693.el7.x86_64   docker://18.3.0
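All three nodes report Ready. The master, 192.168.253.14, also shows SchedulingDisabled, meaning it is marked unschedulable, so regular workloads land on the other two nodes (consistent with where the coredns pods run in the next step). For a scripted check, the hypothetical bash helper below reads `kubectl get node` output on stdin, prints every node whose first STATUS condition is not Ready, and returns non-zero if there are any.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list nodes whose STATUS does not start with "Ready".
# "Ready,SchedulingDisabled" counts as ready; "NotReady" does not.
not_ready_nodes() {
  awk 'NR == 1 && $1 == "NAME" { next }
       { split($2, s, ","); if (s[1] != "Ready") { print $1; bad = 1 } }
       END { exit bad }'
}

# Usage, on the deploy node host:
#   kubectl get node | not_ready_nodes && echo "all nodes Ready"
```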

17) List the namespaces

Then view the details of the pods in the kube-system namespace:

[root@cicd image]# kubectl get ns
NAME          STATUS    AGE
default       Active    14m
kube-public   Active    14m
kube-system   Active    14m
[root@cicd image]# kubectl get pod -n kube-system -o wide
NAME                                       READY     STATUS    RESTARTS   AGE       IP               NODE
calico-kube-controllers-754c88ccc8-86rht   1/1       Running   0          4m        192.168.253.11   192.168.253.11
calico-node-cvhcn                          2/2       Running   0          4m        192.168.253.10   192.168.253.10
calico-node-j5d66                          2/2       Running   0          4m        192.168.253.14   192.168.253.14
calico-node-qfm66                          2/2       Running   0          4m        192.168.253.11   192.168.253.11
coredns-6ff7588dc6-mbg9x                   1/1       Running   0          2m        172.20.104.1     192.168.253.11
coredns-6ff7588dc6-vzvbt                   1/1       Running   0          2m        172.20.135.1     192.168.253.10
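Every kube-system pod above is Running with all of its containers ready (READY shows equal numbers, e.g. 2/2 for each calico-node). The matching check for pods is sketched below; `unhealthy_pods` is hypothetical and assumes the default column order NAME READY STATUS ..., so a pod that finished normally (e.g. Completed) would also be reported.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "NAME READY STATUS" for pods that are not Running
# or whose ready/total container counts differ; return 1 if any were found.
unhealthy_pods() {
  awk 'NR == 1 && $1 == "NAME" { next }
       { split($2, r, "/")
         if ($3 != "Running" || r[1] != r[2]) { print $1, $2, $3; bad = 1 } }
       END { exit bad }'
}

# Usage, on the deploy node host:
#   kubectl get pod -n kube-system | unhealthy_pods && echo "all kube-system pods healthy"
```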

18) From one node, ping the pod IPs running on the other nodes

[root@node1 image]# ping 172.20.135.1

If the ping succeeds, the cross-node pod network is working, which shows the k8s lab deployment succeeded.

19) cd into the dashboard directory and run

kubectl create -f .

/etc/ansible/manifests # cd dashboard/
/etc/ansible/manifests/dashboard # ls
1.6.3 kubernetes-dashboard.yaml ui-read-rbac.yaml admin-user-sa-rbac.yaml ui-admin-rbac.yaml
/etc/ansible/manifests/dashboard # kubectl create -f .
serviceaccount "admin-user" created
clusterrolebinding "admin-user" created
secret "kubernetes-dashboard-certs" created
serviceaccount "kubernetes-dashboard" created
role "kubernetes-dashboard-minimal" created
rolebinding "kubernetes-dashboard-minimal" created
deployment "kubernetes-dashboard" created
service "kubernetes-dashboard" created
clusterrole "ui-admin" created
rolebinding "ui-admin-binding" created
clusterrole "ui-read" created
rolebinding "ui-read-binding" created

20) Run the following again:

kubectl get pod -n kube-system -o wide

One more pod, kubernetes-dashboard, now appears:

[root@cicd image]# kubectl get pod -n kube-system -o wide
NAME                                       READY     STATUS    RESTARTS   AGE       IP               NODE
calico-kube-controllers-754c88ccc8-86rht   1/1       Running   0          7m        192.168.253.11   192.168.253.11
calico-node-cvhcn                          2/2       Running   0          7m        192.168.253.10   192.168.253.10
calico-node-j5d66                          2/2       Running   0          7m        192.168.253.14   192.168.253.14
calico-node-qfm66                          2/2       Running   0          7m        192.168.253.11   192.168.253.11
coredns-6ff7588dc6-mbg9x                   1/1       Running   0          5m        172.20.104.1     192.168.253.11
coredns-6ff7588dc6-vzvbt                   1/1       Running   0          5m        172.20.135.1     192.168.253.10
kubernetes-dashboard-545b66db97-cbh9w      1/1       Running   0          1m        172.20.135.2     192.168.253.10

On node1, ping the dashboard pod at 172.20.135.2:

[root@node1 image]# ping 172.20.135.2

PING 172.20.135.2 (172.20.135.2) 56(84) bytes of data.

64 bytes from 172.20.135.2: icmp_seq=1 ttl=63 time=0.813 ms

64 bytes from 172.20.135.2: icmp_seq=2 ttl=63 time=0.696 ms

If the ping succeeds, run the following command:

/etc/ansible/manifests/dashboard # kubectl cluster-info
Kubernetes master is running at https://192.168.253.14:6443
CoreDNS is running at https://192.168.253.14:6443/api/v1/namespaces/kube-system/services/coredns:dns/proxy
kubernetes-dashboard is running at https://192.168.253.14:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

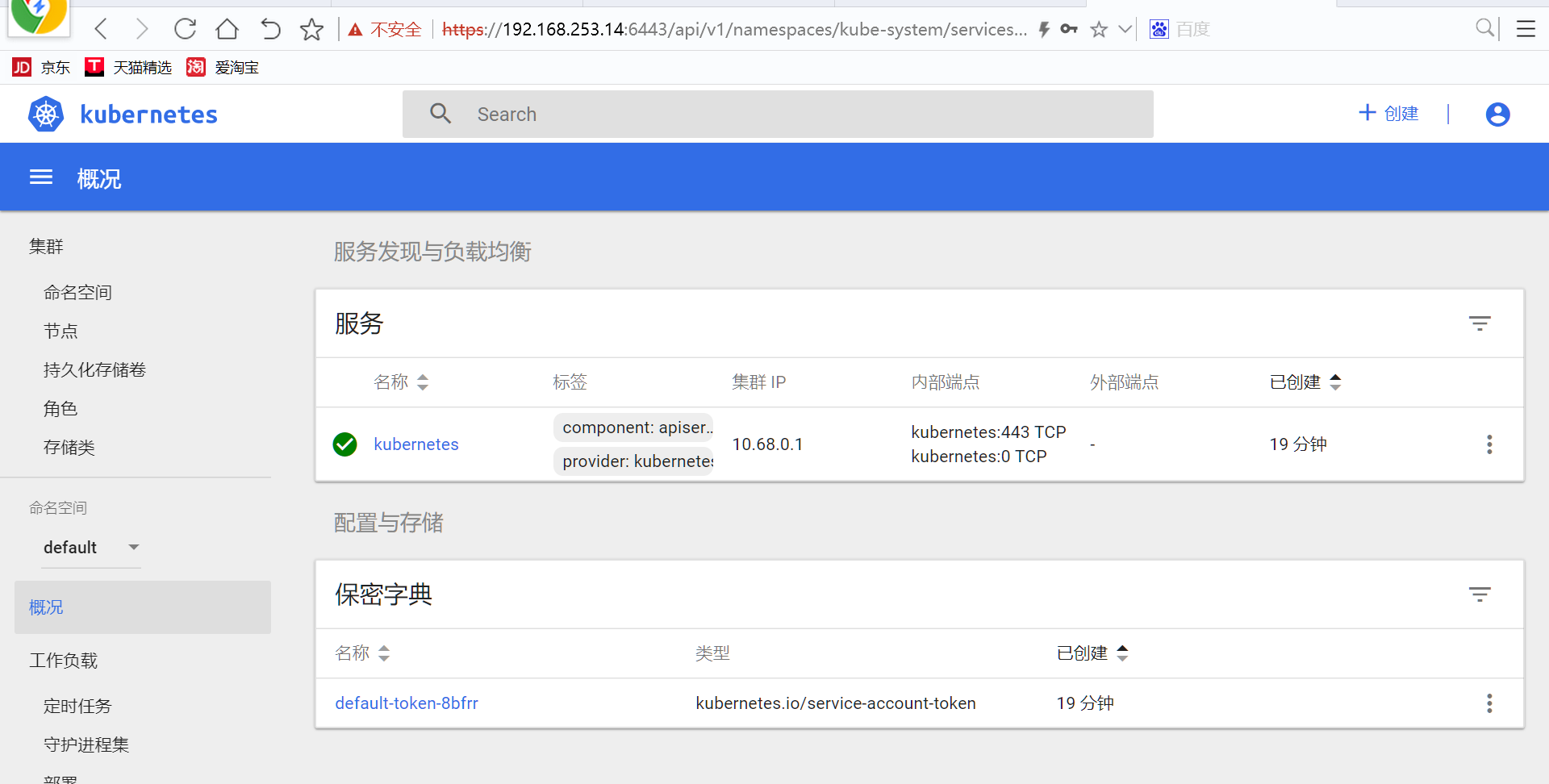

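21) Open the kubernetes-dashboard URL from the output above (the link shown in bold) in a browser.

After logging in with the basic-auth username and password (BASIC_AUTH_USER / BASIC_AUTH_PASS from the hosts file), select Token, then run the following command to obtain the token: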

[root@cicd image]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret|grep admin-user|awk '{print $1}')
Name:         admin-user-token-q46rv
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name=admin-user
              kubernetes.io/service-account.uid=b31a5f5c-9283-11e9-a6e3-000c2966ee14

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1359 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXE0NnJ2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiMzFhNWY1Yy05MjgzLTExZTktYTZlMy0wMDBjMjk2NmVlMTQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.O5gqGeUNlwUYYDSL2bSolvCUoGmWjipaiqwrfBCbCroQ2SanUdP0w3SbJ0Vhb1Ddv6QswC2HeaGeHV_ideoH03RalKlJoJmCIaz0OpbvWQ6KaeDKLHqBxGyT4S-AHncF908aHr84JfY6GVIfJJL7P68EzMrFpOI24YC_PDfZvBm7zud7uhiFClWs8MMMmSt0YAWoakVQ4sPfyhlBX9rZLE1-2LobMio6g0vue9PJxzmJhZ7GrcOqHu__IpbmtPpwj-VHnvhDkU8dhC8617HyVU7xDsajZ89HmqSIVFtXrpiDTChDwBfMlx7QRmzu7bNNXyesQqDoyBJJgReW3M0evw
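Copying the token out of the full describe output is error-prone. The hypothetical helper below prints only the value on the `token:` line of `kubectl describe secret` output read from stdin, so it can be piped straight into a clipboard tool or a file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of the "token:" line from
# `kubectl describe secret` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}

# Usage, on the deploy node host:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/admin-user/ {print $1}')" | extract_token
```

Alternatively, `kubectl -n kube-system get secret <secret-name> -o jsonpath='{.data.token}' | base64 -d` should yield the same token, since Secret data is stored base64-encoded.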

Paste the token above into the input field and sign in; reaching the following page means the deployment succeeded.