This walkthrough follows the article below:

http://www.shareditor.com/blogshow/?blogId=96

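Machine learning, data mining, and other large-scale data processing all depend on open-source distributed systems:

Hadoop provides distributed storage (HDFS) and map-reduce computation,

Spark is a general-purpose distributed compute engine that is widely used for machine learning,

Hive is a data warehouse that offers SQL-style queries over data stored in HDFS,

HBase is a distributed key-value store.

They look unrelated, yet they all build on the same HDFS storage, and the compute engines rely on YARN for resource management.

This post deploys the whole stack step by step, so that you can look inside each system and understand the distributed architecture and how the pieces relate to each other.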

Download Hadoop 2.7.3 (http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz).

Copy the tarball to the following directory on the host (logged in as work@gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com):

/home/work/data/installed/

After extraction, the directory is:

/home/work/data/installed/hadoop-2.7.3/

Edit /home/work/data/installed/hadoop-2.7.3/etc/hadoop/core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:8390</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>
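All of the *-site.xml files in this post share the same shape: a configuration element holding property entries, each with a name and a value. As a minimal sketch (not part of Hadoop; the helper names build_site_xml and parse_site_xml are made up for illustration), the same file could be generated and read back with Python's standard library:

```python
import xml.etree.ElementTree as ET


def build_site_xml(properties):
    """Render a dict of name -> value as a Hadoop-style <configuration> document."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(root, encoding="unicode")


def parse_site_xml(xml_text):
    """Read a <configuration> document back into a dict of strings."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.findall("property")}


# The two properties from the core-site.xml shown above.
core_site = {
    "fs.defaultFS": "hdfs://localhost:8390",
    "io.file.buffer.size": 131072,
}
xml_text = build_site_xml(core_site)

if __name__ == "__main__":
    print(xml_text)
    print(parse_site_xml(xml_text))
```

Generating the files from one dict per service makes it easier to keep hosts and ports consistent across core-site.xml, hdfs-site.xml, yarn-site.xml, and mapred-site.xml.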

hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/work/data/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/work/data/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.datanode.fsdataset.volume.choosing.policy</name>

<value>org.apache.hadoop.hdfs.server.datanode.fsdataset.AvailableSpaceVolumeChoosingPolicy</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>127.0.0.1:8305</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>127.0.0.1:8310</value>

</property>

</configuration>

etc/hadoop/yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>127.0.0.1</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>127.0.0.1:8320</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>864000</value>

</property>

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>86400</value>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/YarnApp/Logs</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://127.0.0.1:8325/jobhistory/logs/</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/work/data/hadoop/dfs/tmp/</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>5000</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4.1</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

Create etc/hadoop/mapred-site.xml from the template:

cp etc/hadoop/mapred-site.xml.template etc/hadoop/mapred-site.xml

Then change its contents to the following:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>Execution framework set to Hadoop YARN.</description>

</property>

<property>

<name>yarn.app.mapreduce.am.staging-dir</name>

<value>/home/work/data/hadoop/tmp/hadoop-yarn/staging</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>127.0.0.1:8330</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>127.0.0.1:8331</value>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>${yarn.app.mapreduce.am.staging-dir}/history/done</value>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate</value>

</property>

<property>

<name>mapreduce.jobhistory.joblist.cache.size</name>

<value>1000</value>

</property>

<property>

<name>mapreduce.tasktracker.map.tasks.maximum</name>

<value>8</value>

</property>

<property>

<name>mapreduce.tasktracker.reduce.tasks.maximum</name>

<value>8</value>

</property>

<property>

<name>mapreduce.jobtracker.maxtasks.perjob</name>

<value>5</value>

<description>The maximum number of tasks for a single job.

A value of -1 indicates that there is no maximum.

</description>

</property>

</configuration>

Set JAVA_HOME in etc/hadoop/hadoop-env.sh:

export JAVA_HOME=/home/work/.jumbo/opt/sun-java8

Then format HDFS:

./bin/hdfs namenode -format myclustername

A name/ directory now appears under ~/data/hadoop/dfs/.

Start the NameNode:

$ ./sbin/hadoop-daemon.sh --script hdfs start namenode

starting namenode, logging to /home/work/data/installed/hadoop-2.7.3/logs/hadoop-work-namenode-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Then start the DataNode:

$ ./sbin/hadoop-daemon.sh --script hdfs start datanode

starting datanode, logging to /home/work/data/installed/hadoop-2.7.3/logs/hadoop-work-datanode-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Start the YARN ResourceManager:

$ ./sbin/yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /home/work/data/installed/hadoop-2.7.3/logs/yarn-work-resourcemanager-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Start the NodeManager:

$ ./sbin/yarn-daemon.sh start nodemanager

starting nodemanager, logging to /home/work/data/installed/hadoop-2.7.3/logs/yarn-work-nodemanager-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Start the MapReduce JobHistory Server:

$ ./sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /home/work/data/installed/hadoop-2.7.3/logs/mapred-work-historyserver-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Then open:

http://10.117.146.12:8320/

The page fails to load.

The fix:

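Replace every 127.0.0.1 in the configuration files above with 10.117.146.12 (a service bound to 127.0.0.1 only accepts connections from the local machine), then stop the services in the reverse of the start order and start them again.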
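The address swap can be scripted instead of edited by hand. The sketch below is only an illustration under my own assumptions: rebind_addresses is a made-up helper, it works on the XML text of one config file, and it touches only the contents of value elements so that property names are never altered.

```python
import re

VALUE_PATTERN = re.compile(r"(<value>)(.*?)(</value>)", re.DOTALL)


def rebind_addresses(xml_text, old_host="127.0.0.1", new_host="10.117.146.12"):
    """Replace old_host with new_host inside <value>...</value> only."""

    def swap(match):
        opening, body, closing = match.groups()
        return opening + body.replace(old_host, new_host) + closing

    return VALUE_PATTERN.sub(swap, xml_text)


if __name__ == "__main__":
    sample = (
        "<property><name>dfs.namenode.http-address</name>"
        "<value>127.0.0.1:8305</value></property>"
    )
    print(rebind_addresses(sample))
```

To apply it, read each of hdfs-site.xml, yarn-site.xml, and mapred-site.xml, pass the text through the function, and write it back before restarting the daemons.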

After that:

http://10.117.146.12:8305 (etc/hadoop/hdfs-site.xml: dfs.namenode.http-address) shows the state of the NameNode.

Once all the services above are running, http://10.117.146.12:8320 (etc/hadoop/yarn-site.xml: yarn.resourcemanager.webapp.address) shows the state of the ResourceManager.

http://10.117.146.12:8332/jobhistory shows the job history (etc/hadoop/mapred-site.xml: mapreduce.jobhistory.webapp.address). The port in this URL has to match that setting; the listing above shows 8331.

At this point Hadoop is fully deployed.

Try a few commands:

$ ./bin/hadoop fs -mkdir /input

$ cat data

1

2

3

4

$ ./bin/hadoop fs -put data /input

$ ./bin/hadoop fs -ls /input

Found 1 items

-rw-r--r-- 3 work supergroup 8 2016-11-03 16:56 /input/data

The DataNode's local data directory, however, shows no visible change at the top level (block files are written beneath current/):

$ pwd

/home/work/data/hadoop/dfs/data

$ ls

current in_use.lock

The Overview page of the NameNode UI does reflect the change:

Configured Capacity: 2.62 TB

DFS Used: 48 KB (0%)

Non DFS Used: 3.99 GB

DFS Remaining: 2.62 TB (99.85%)

Now run a Hadoop streaming job:

./bin/hadoop jar ./share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar -input /input -output /output -mapper cat -reducer wc

When it finishes (the detailed run log appears further down), the output is there:

$ ./bin/hadoop fs -ls /output

Found 2 items

-rw-r--r-- 3 work supergroup 0 2016-11-03 17:17 /output/_SUCCESS

-rw-r--r-- 3 work supergroup 25 2016-11-03 17:17 /output/part-00000

The job history is visible in two web UIs:

http://10.117.146.12:8320/cluster

http://10.117.146.12:8332/jobhistory

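So what is the difference between the two?

The cluster page shows the history of every application. It is the management page of YARN (the ResourceManager),

so MapReduce jobs and any other kind of job that runs on YARN all show up there.

A MapReduce job has Application Type MAPREDUCE; other jobs carry their own types. The jobhistory page, by contrast, only shows MapReduce jobs.

The result of the job: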

$ ./bin/hadoop fs -ls /output

Found 2 items

-rw-r--r-- 3 work supergroup 0 2016-11-03 17:17 /output/_SUCCESS

-rw-r--r-- 3 work supergroup 25 2016-11-03 17:17 /output/part-00000

$ ./bin/hadoop fs -cat /output/part-00000

4 4 12

Change the data file to 5 lines (and upload the new version to /input), then run the command again.

$ ./bin/hadoop jar ./share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar -input /input -output /output -mapper cat -reducer wc

packageJobJar: [/tmp/hadoop-unjar8999320636947334567/] [] /tmp/streamjob5312455304801278269.jar tmpDir=null

16/11/03 18:26:41 INFO client.RMProxy: Connecting to ResourceManager at /10.117.146.12:8032

16/11/03 18:26:42 INFO client.RMProxy: Connecting to ResourceManager at /10.117.146.12:8032

16/11/03 18:26:42 ERROR streaming.StreamJob: Error Launching job : Output directory hdfs://localhost:8390/output already exists

Streaming Command Failed!

The job refuses to overwrite an existing output directory, so delete it and try again:

$ ./bin/hadoop fs -rm -r /output

16/11/03 18:27:59 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /output

./bin/hadoop jar ./share/hadoop/tools/lib/hadoop-streaming-2.7.3.jar -input /input -output /output -mapper cat -reducer wc

packageJobJar: [/tmp/hadoop-unjar5736062033650056389/] [] /tmp/streamjob584533256903330770.jar tmpDir=null

16/11/03 18:28:07 INFO client.RMProxy: Connecting to ResourceManager at /10.117.146.12:8032

16/11/03 18:28:07 INFO client.RMProxy: Connecting to ResourceManager at /10.117.146.12:8032

16/11/03 18:28:07 INFO mapred.FileInputFormat: Total input paths to process : 1

16/11/03 18:28:07 INFO mapreduce.JobSubmitter: number of splits:2

16/11/03 18:28:08 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1478162780323_0002

16/11/03 18:28:08 INFO impl.YarnClientImpl: Submitted application application_1478162780323_0002

16/11/03 18:28:08 INFO mapreduce.Job: The url to track the job: http://gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com:8320/proxy/application_1478162780323_0002/

16/11/03 18:28:08 INFO mapreduce.Job: Running job: job_1478162780323_0002

16/11/03 18:28:14 INFO mapreduce.Job: Job job_1478162780323_0002 running in uber mode : false

16/11/03 18:28:14 INFO mapreduce.Job: map 0% reduce 0%

16/11/03 18:28:19 INFO mapreduce.Job: map 100% reduce 0%

16/11/03 18:28:24 INFO mapreduce.Job: map 100% reduce 100%

16/11/03 18:28:24 INFO mapreduce.Job: Job job_1478162780323_0002 completed successfully

16/11/03 18:28:24 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=31

FILE: Number of bytes written=363298

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=183

HDFS: Number of bytes written=25

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=4696

Total time spent by all reduces in occupied slots (ms)=2476

Total time spent by all map tasks (ms)=4696

Total time spent by all reduce tasks (ms)=2476

Total vcore-milliseconds taken by all map tasks=4696

Total vcore-milliseconds taken by all reduce tasks=2476

Total megabyte-milliseconds taken by all map tasks=4808704

Total megabyte-milliseconds taken by all reduce tasks=2535424

Map-Reduce Framework

Map input records=5

Map output records=5

Map output bytes=15

Map output materialized bytes=37

Input split bytes=168

Combine input records=0

Combine output records=0

Reduce input groups=5

Reduce shuffle bytes=37

Reduce input records=5

Reduce output records=1

Spilled Records=10

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=174

CPU time spent (ms)=2970

Physical memory (bytes) snapshot=733491200

Virtual memory (bytes) snapshot=6347313152

Total committed heap usage (bytes)=538968064

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=15

File Output Format Counters

Bytes Written=25

16/11/03 18:28:24 INFO streaming.StreamJob: Output directory: /output
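The counters above also reveal how much memory each container was granted. This is a small worked example, not a Hadoop API: the function name is mine, and it assumes one vcore per task, which the log supports because the vcore-milliseconds equal the task milliseconds.

```python
# Values copied from the "Job Counters" section of the log above.
counters = {
    "map": {"vcore_ms": 4696, "megabyte_ms": 4808704},
    "reduce": {"vcore_ms": 2476, "megabyte_ms": 2535424},
}


def container_memory_mb(vcore_ms, megabyte_ms, vcores=1):
    """Memory per container in MB, assuming each task held `vcores` vcores."""
    task_ms = vcore_ms / vcores
    return megabyte_ms / task_ms


if __name__ == "__main__":
    for phase, values in counters.items():
        print(phase, container_memory_mb(**values))
```

Both phases work out to 1024 MB per container, which lines up with the yarn.scheduler.minimum-allocation-mb value of 1024 set in yarn-site.xml.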

The result:

$ ./bin/hadoop fs -cat /output/part-00000

5 5 15

At first I could not work out why the output had those three columns. Running the command by hand made it obvious.

$ cat data | wc

5 5 10

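It is simply the output format of wc:

lines, words, bytes

The byte count differs from the job's result (10 locally, 15 from the job), most likely because Hadoop streaming hands each record to the reducer as key, tab, value, which adds one tab character per line.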
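To check the three columns, here is a rough Python imitation of wc together with an assumed model of what the streaming reducer receives. The function names are mine, the fifth line of the data file is a guess (the post only shows the first four), and the key-tab-value framing is my understanding of Hadoop streaming rather than something the log states.

```python
def wc(data: bytes):
    """Return (lines, words, bytes) in the order `wc` prints them."""
    return data.count(b"\n"), len(data.split()), len(data)


def streaming_reducer_input(keys):
    """Assumed model: each record reaches the reducer as key + TAB + value + newline.

    With `cat` as the mapper the whole input line becomes the key and the
    value is empty, so every line gains one trailing tab.
    """
    return b"".join(key + b"\t" + b"\n" for key in keys)


four_lines = [b"1", b"2", b"3", b"4"]
five_lines = four_lines + [b"5"]  # hypothetical fifth line

if __name__ == "__main__":
    print(wc(b"".join(k + b"\n" for k in five_lines)))  # plain `cat data | wc`
    print(wc(streaming_reducer_input(five_lines)))      # what the reducer's wc sees
```

Under this model the local file gives 5 5 10, the five-line job gives 5 5 15, and the earlier four-line job gives 4 4 12, matching all three results in the post.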

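Before installing anything else, a quick review of how Hadoop is structured.

Hadoop has three main parts: YARN handles resource and job management, HDFS handles distributed storage, and map-reduce handles distributed computation.

YARN has two responsibilities: resource management and job scheduling.

Resource management relies on one global ResourceManager (RM) working together with a NodeManager on every machine.

The RM arbitrates resources; each NodeManager monitors its node's resources, reports status, and manages Containers.

Job scheduling also goes through the ResourceManager, which accepts and schedules jobs. Each job is managed by an ApplicationMaster (AM) that is itself started in a Container.

When a job needs resources, the AM requests them from the RM and uses the Containers it is granted to launch tasks, then communicates with those Containers. Both the AM and the actual tasks run inside Containers.

This is what distinguishes YARN from a first-generation Hadoop deployment (namenode, jobtracker, tasktracker).

Next, the HDFS architecture: HDFS consists of the NameNode, the SecondaryNameNode, and the DataNodes.

The DataNode is the module that actually manages data on each storage node. The NameNode manages the global namespace and file metadata. The SecondaryNameNode periodically merges the NameNode's edit log into a checkpoint of the namespace image; despite its name, it is not a hot standby for the NameNode.

Finally, map-reduce: it depends on YARN and HDFS, and adds a JobHistoryServer for browsing the history of past jobs.

Hadoop's modules are deployed separately, but all the programs ship in the same tarball, so the configuration files of the different modules sit side by side. The most important ones are:

Defaults: core-default.xml, hdfs-default.xml, yarn-default.xml, mapred-default.xml

Site-specific overrides: core-site.xml, hdfs-site.xml, yarn-site.xml, mapred-site.xml

These files also show that the main parts of Hadoop are configured separately.

Beyond these there are hadoop-env.sh, mapred-env.sh, and yarn-env.sh,

which set JVM options for the daemons and the locations of binaries, configuration, and logs.

The concrete settings were covered in the deployment steps above.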

HBase

Start with the HBase installation.

Download: http://mirrors.cnnic.cn/apache/hbase/1.2.3/hbase-1.2.3-bin.tar.gz

It extracts to /home/work/data/installed/hbase-1.2.3/. Edit the configuration in conf/hbase-site.xml (correction: not etc/hadoop/hdfs-site.xml):

<configuration>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:8390/hbase</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>10.117.146.12</value>

</property>

</configuration>

Note that the HDFS address above must match the fs.defaultFS setting in core-site.xml.

hbase.zookeeper.quorum is the ZooKeeper address. Several hosts can be listed; for this experiment one is enough.

Start HBase by running ./bin/start-hbase.sh

It fails with:

+======================================================================+

| Error: JAVA_HOME is not set |

+----------------------------------------------------------------------+

| Please download the latest Sun JDK from the Sun Java web site |

| > http://www.oracle.com/technetwork/java/javase/downloads |

| |

| HBase requires Java 1.7 or later. |

+======================================================================+

Set JAVA_HOME in conf/hbase-env.sh:

export JAVA_HOME=/home/work/.jumbo/opt/sun-java8/

Then run the start command again (surprisingly, it asks for a password, because start-hbase.sh reaches each host over ssh):

$ ./bin/start-hbase.sh

work@10.117.146.12's password:

I did not know the password, so I changed it. The new password and the procedure for changing it are on the team wiki under "Development environment".

After changing the password I ran the command again:

$ ./bin/start-hbase.sh

work@10.117.146.12's password:

10.117.146.12: starting zookeeper, logging to /home/work/data/installed/hbase-1.2.3/bin/../logs/hbase-work-zookeeper-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

starting master, logging to /home/work/data/installed/hbase-1.2.3/bin/../logs/hbase-work-master-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

starting regionserver, logging to /home/work/data/installed/hbase-1.2.3/bin/../logs/hbase-work-1-regionserver-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

Then start the HBase shell and run a few commands to create a table and insert one row:

$ ./bin/hbase shell

2016-11-03 23:10:15,393 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.3, rbd63744624a26dc3350137b564fe746df7a721a4, Mon Aug 29 15:13:42 PDT 2016

hbase(main):001:0> status

1 active master, 0 backup masters, 1 servers, 0 dead, 2.0000 average load

hbase(main):003:0> create 'table1' , 'field1'

0 row(s) in 2.5030 seconds

=> Hbase::Table - table1

hbase(main):004:0> t1 = get_table('table1')

0 row(s) in 0.0000 seconds

=> Hbase::Table - table1

hbase(main):005:0> t1.put 'row1' , 'field1:qualifier1', 'value1'

0 row(s) in 0.1450 seconds

hbase(main):006:0> t1.scan

ROW COLUMN+CELL

row1 column=field1:qualifier1, timestamp=1478185957925, value=value1

1 row(s) in 0.0270 seconds
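The scan output hints at HBase's data model: a row key maps to columns named family:qualifier, and each cell carries a timestamp. The toy class below is only a mental model in plain Python, not the HBase client API; ToyTable and its methods are invented for illustration.

```python
import time
from collections import defaultdict


class ToyTable:
    """In-memory imitation of an HBase table: row -> column -> versions."""

    def __init__(self, *families):
        self.families = set(families)
        # row key -> "family:qualifier" -> list of (timestamp, value), newest first
        self.rows = defaultdict(lambda: defaultdict(list))

    def put(self, row, column, value, timestamp=None):
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        ts = int(time.time() * 1000) if timestamp is None else timestamp
        cells = self.rows[row][column]
        cells.append((ts, value))
        cells.sort(reverse=True)  # keep the newest version first

    def scan(self):
        """Yield (row, column, timestamp, value) for the newest version of each cell."""
        for row in sorted(self.rows):
            for column in sorted(self.rows[row]):
                ts, value = self.rows[row][column][0]
                yield row, column, ts, value


if __name__ == "__main__":
    t1 = ToyTable("field1")
    t1.put("row1", "field1:qualifier1", "value1", timestamp=1478185957925)
    for cell in t1.scan():
        print(cell)
```

The column family has to be declared when the table is created, which mirrors create 'table1', 'field1' in the shell session, while qualifiers such as qualifier1 appear on the first put.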

HDFS now also contains the directory where HBase stores its data:

$ ./bin/hadoop fs -ls /hbase

Found 7 items

drwxr-xr-x - work supergroup 0 2016-11-03 23:06 /hbase/.tmp

drwxr-xr-x - work supergroup 0 2016-11-03 23:07 /hbase/MasterProcWALs

drwxr-xr-x - work supergroup 0 2016-11-03 23:06 /hbase/WALs

drwxr-xr-x - work supergroup 0 2016-11-03 23:06 /hbase/data

-rw-r--r-- 3 work supergroup 42 2016-11-03 23:06 /hbase/hbase.id

-rw-r--r-- 3 work supergroup 7 2016-11-03 23:06 /hbase/hbase.version

drwxr-xr-x - work supergroup 0 2016-11-03 23:11 /hbase/oldWALs

This shows that HBase uses HDFS as its storage layer, so it inherits the benefits of distributed storage such as replication and horizontal scalability.

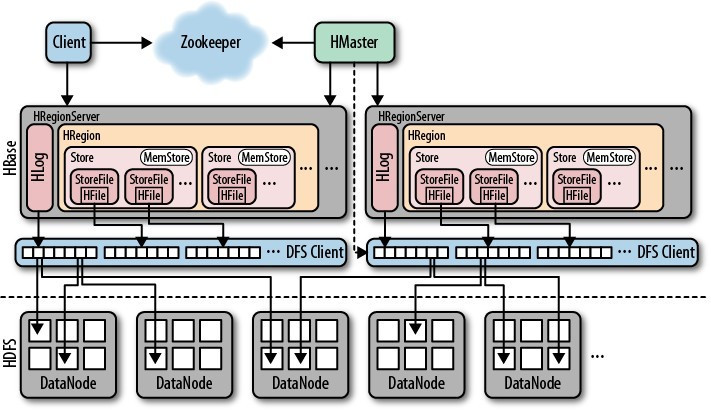

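The HBase architecture is as follows:

HMaster manages the HRegionServers to balance load, manages and assigns HRegions (data shards), and also manages namespace and table metadata as well as access control.

HRegionServer manages its local HRegions, serves the data, and talks to HDFS.

ZooKeeper coordinates the cluster (for example HMaster failover) and stores cluster state.

Clients transfer data by talking to the HRegionServers directly.

For more on HBase, see: http://www.cnblogs.com/charlesblc/p/6233860.html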

Hive

Next, download and install Hive.

Download: http://apache.fayea.com/hive/hive-2.1.0/apache-hive-2.1.0-bin.tar.gz

It extracts to /home/work/data/installed/apache-hive-2.1.0-bin/

Go into conf and prepare the configuration files:

cp hive-env.sh.template hive-env.sh

Set HADOOP_HOME in hive-env.sh:

HADOOP_HOME=/home/work/data/installed/hadoop-2.7.3

cp hive-default.xml.template hive-site.xml

cp hive-log4j2.properties.template hive-log4j2.properties

cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties

I first tried to initialize the metastore schema directly:

$ ./bin/schematool -dbType mysql -initSchema

which: no hbase in (/home/work/.jumbo/bin:/usr/kerberos/bin:/usr/local/bin:/bin:/usr/bin:/usr/X11R6/bin:/opt/bin:/home/opt/bin:/home/work/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/work/data/installed/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/work/data/installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.mysql.sql

Error: Syntax error: Encountered "<EOF>" at line 1, column 64. (state=42X01,code=30000)

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

Use --verbose for detailed stacktrace.

*** schemaTool failed ***

The "which: no hbase" line shows that HBase needs to be on the PATH. (The schema failure itself most likely comes from -dbType mysql: the log shows the MySQL script being run against the default embedded Derby connection.) Set the paths as follows.

Add these lines to ~/.bashrc:

HADOOP_HOME=/home/work/data/installed/hadoop-2.7.3

HBASE_HOME=/home/work/data/installed/hbase-1.2.3

HIVE_HOME=/home/work/data/installed/apache-hive-2.1.0-bin

export HADOOP_HOME

export HBASE_HOME

export HIVE_HOME

PATH=$PATH:$HBASE_HOME/bin:$HIVE_HOME/bin:$HADOOP_HOME/bin

export PATH

Then run source ~/.bashrc

Initialize the metastore database. By default it is kept in a local Derby database; it can also be configured to use MySQL, which presumably has to be installed first. Do not run the hive command before this step, or the initialization will fail. Run the following first:

$ ./bin/schematool -dbType derby -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/work/data/installed/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/work/data/installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.derby.sql

Initialization script completed

schemaTool completed

Then start Hive with the client command, which fails:

$ ./bin/hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/work/data/installed/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/work/data/installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/home/work/data/installed/apache-hive-2.1.0-bin/conf/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.fs.Path.initialize(Path.java:205)

at org.apache.hadoop.fs.Path.<init>(Path.java:171)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:631)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:550)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at java.net.URI.checkPath(URI.java:1823)

at java.net.URI.<init>(URI.java:745)

at org.apache.hadoop.fs.Path.initialize(Path.java:202)

... 12 more

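So hive-site.xml needs editing after all: the ${system:...} placeholders are not being resolved. Replace them directly with vi substitute commands: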

:%s/${system:java.io.tmpdir}/\/home\/work\/data\/installed\/apache-hive-2.1.0-bin\/tmp/gc

:%s/${system:user.name}/work/gc

In effect, every such directory becomes:

/home/work/data/installed/apache-hive-2.1.0-bin/tmp/work

After that, running it again works:
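The same substitution can be done outside vi. This is a minimal sketch under my own assumptions: resolve_hive_placeholders is a made-up helper that operates on the text of hive-site.xml and does a plain string replacement of the two placeholders.

```python
def resolve_hive_placeholders(xml_text, tmpdir, user):
    """Replace the two unresolved placeholders with concrete values."""
    return (
        xml_text
        .replace("${system:java.io.tmpdir}", tmpdir)
        .replace("${system:user.name}", user)
    )


if __name__ == "__main__":
    sample = "<value>${system:java.io.tmpdir}/${system:user.name}</value>"
    print(
        resolve_hive_placeholders(
            sample,
            tmpdir="/home/work/data/installed/apache-hive-2.1.0-bin/tmp",
            user="work",
        )
    )
```

Applied to the whole file, this yields the same tmp/work paths as the two vi commands, without the interactive confirmation that the c flag asks for.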

$ ./bin/hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/work/data/installed/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/work/data/installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/home/work/data/installed/apache-hive-2.1.0-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

Create a database:

hive> show databases;

OK

default

Time taken: 1.079 seconds, Fetched: 1 row(s)

hive> create database mydatabase;

OK

Time taken: 0.413 seconds

hive> show databases;

OK

default

mydatabase

Time taken: 0.014 seconds, Fetched: 2 row(s)

hive>

So far this is only the local command-line client. To serve remote clients, the server has to be started; before doing that, change its port.

"hive-site.xml"

<name>hive.server2.thrift.port</name>

<value>10000</value>

Change it to:

<name>hive.server2.thrift.port</name>

<value>8338</value>

Then start the server:

mkdir log ; nohup ./bin/hiveserver2 &>log/hive.log &

A JDBC client can now connect to this service to reach Hive; the port is 8338.

Spark

Spark version and download URL:

http://apache.fayea.com/spark/spark-2.0.1/spark-2.0.1-bin-hadoop2.7.tgz

It extracts to /home/work/data/installed/spark-2.0.1-bin-hadoop2.7

First, try a job on a single machine (this fails):

$ ./bin/spark-submit examples/src/main/python/pi.py 10

/home/work/data/installed/spark-2.0.1-bin-hadoop2.7/bin/spark-class: line 77: syntax error near unexpected token `"$ARG"'

/home/work/data/installed/spark-2.0.1-bin-hadoop2.7/bin/spark-class: line 77: ` CMD+=("$ARG")'

Setting $JAVA_HOME did not help.

I downloaded version 2.0.0 instead, from this link:

http://101.44.1.8/files/41620000059EE71A/d3kbcqa49mib13.cloudfront.net/spark-2.0.0-bin-hadoop2.7.tgz

It still failed. The problem turned out to be in the script itself:

while IFS= read -d '' -r ARG; do

CMD+=("$ARG")

done < <(build_command "$@")

COUNT=${#CMD[@]}

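Note that ${#CMD[@]} is the length of the array.

This is most likely a bash version issue: older bash releases do not support appending to an array with +=. I changed the loop as follows, quoting the expansion so that arguments containing spaces stay intact:

while IFS= read -d '' -r ARG; do

#CMD+=("$ARG")

CMD=("${CMD[@]}" "$ARG")

done < <(build_command "$@")

Then run: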

$ ./bin/spark-submit examples/src/main/python/pi.py 10

hihihi

File "/home/work/data/installed/spark-2.0.0-bin-hadoop2.7/examples/src/main/python/pi.py", line 35

partitions = int(sys.argv[1]) if len(sys.argv) > 1 else 2

^

SyntaxError: invalid syntax

This looks like a Python version problem: presumably the system Python is too old for the conditional expression on that line.

I installed a newer Python with jumbo and ran the command again:

$ pwd

/home/work/data/installed/spark-2.0.1-bin-hadoop2.7

$ ./bin/spark-submit examples/src/main/python/pi.py 10

It prints a lot of log output; the important lines are:

16/11/04 02:08:26 INFO DAGScheduler: Job 0 finished: reduce at /home/work/data/installed/spark-2.0.1-bin-hadoop2.7/examples/src/main/python/pi.py:43, took 0.724629 s

Pi is roughly 3.135560

16/11/04 02:08:26 INFO SparkUI: Stopped Spark web UI at http://10.117.146.12:4040

So the computation succeeded.
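For intuition about what the example computes: as far as I can tell, pi.py estimates π by Monte Carlo sampling, throwing random points at a square and counting how many land inside the inscribed circle. The sketch below reproduces that idea in plain Python without Spark; estimate_pi and its parameters are my own names, not taken from pi.py.

```python
import random


def estimate_pi(samples, seed=None):
    """Estimate pi from the fraction of random points inside the unit circle."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    # Area of circle / area of square = pi / 4.
    return 4.0 * inside / samples


if __name__ == "__main__":
    print("Pi is roughly %f" % estimate_pi(200000, seed=0))
```

Spark's contribution is to split the sampling loop across partitions and add up the per-partition counts with a reduce, which is the "reduce at pi.py:43" step in the log. The estimate varies from run to run, which is why the local and cluster runs in this post print slightly different values.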

Change the default web UI port in sbin/start-master.sh from

if [ "$SPARK_MASTER_WEBUI_PORT" = "" ]; then

SPARK_MASTER_WEBUI_PORT=8080

fi

to

if [ "$SPARK_MASTER_WEBUI_PORT" = "" ]; then

SPARK_MASTER_WEBUI_PORT=8340

fi

Start the master:

$ sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /home/work/data/installed/spark-2.0.1-bin-hadoop2.7/logs/spark-work-org.apache.spark.deploy.master.Master-1-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

The master UI is now reachable at http://10.117.146.12:8340/

Next, start a slave.

First change its UI port from 8081 to 8341:

if [ "$SPARK_WORKER_WEBUI_PORT" = "" ]; then

SPARK_WORKER_WEBUI_PORT=8341

fi

$ ./sbin/start-slave.sh spark://MYAY:7077

starting org.apache.spark.deploy.worker.Worker, logging to /home/work/data/installed/spark-2.0.1-bin-hadoop2.7/logs/spark-work-org.apache.spark.deploy.worker.Worker-1-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

After refreshing the master UI a new worker should have appeared, but none did. The log file above shows an error:

WARN Worker: Failed to connect to master MYAY:7077

I checked the master's configuration again and restarted the slave with the correct address:

$ ./sbin/stop-slave.sh

$ ./sbin/start-slave.sh spark://[hostname]:7077

starting org.apache.spark.deploy.worker.Worker, logging to /home/work/data/installed/spark-2.0.1-bin-hadoop2.7/logs/spark-work-org.apache.spark.deploy.worker.Worker-1-gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com.out

The log no longer shows an error.

http://10.117.146.12:8340/ now lists one worker,

and the slave UI at http://10.117.146.12:8341/ loads as well. (Note added 11.27: master and slave are evidently the same machine.)

The job that ran locally can now be submitted to the Spark cluster:

./bin/spark-submit --master spark://10.117.146.12:7077 examples/src/main/python/pi.py 10

The log output includes:

16/11/04 02:30:47 INFO DAGScheduler: Job 0 finished: reduce at /home/work/data/installed/spark-2.0.1-bin-hadoop2.7/examples/src/main/python/pi.py:43, took 2.081995 s

Pi is roughly 3.140340

16/11/04 02:30:47 INFO SparkUI: Stopped Spark web UI at http://10.117.146.12:4040

A Spark program can also run on a YARN cluster, that is, on the YARN we started when deploying Hadoop.

HADOOP_CONF_DIR has to be set beforehand:

HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop/

export HADOOP_CONF_DIR

Then submit the job to the YARN cluster:

./bin/spark-submit --master yarn --deploy-mode cluster examples/src/main/python/pi.py 10

It prints a lot of log output:

16/11/04 02:33:52 INFO client.RMProxy: Connecting to ResourceManager at /10.117.146.12:8032

16/11/04 02:33:52 INFO yarn.Client: Requesting a new application from cluster with 1 NodeManagers

...

16/11/04 02:34:08 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.117.146.12

ApplicationMaster RPC port: 0

queue: default

start time: 1478198035784

final status: SUCCEEDED

tracking URL: http://gzns-ecom-baiduhui-201605-m42n05.gzns.baidu.com:8320/proxy/application_1478162780323_0003/

user: work

16/11/04 02:34:08 INFO yarn.Client: Deleting staging directory hdfs://localhost:8390/user/work/.sparkStaging/application_1478162780323_0003

16/11/04 02:34:08 INFO util.ShutdownHookManager: Shutdown hook called

16/11/04 02:34:08 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-8521df06-7a45-4fe5-81ce-6a2a26852eb1

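The job also shows up in the Hadoop management UI at http://10.117.146.12:8320/cluster

I have not yet worked out how to retrieve the job's output when it runs on YARN; that needs more investigation. (With log aggregation enabled, yarn logs -applicationId application_1478162780323_0003 should print the driver's stdout.)

To sum up:

HDFS is the storage layer underneath the whole Hadoop ecosystem. It implements the distributed storage logic, and everything that needs storage is built on top of it.

YARN is the part responsible for cluster resource management, mainly compute resources, so it underpins the various compute modules.

The map-reduce component implements the scheduling logic of map-reduce jobs. It reads its input from and writes its output to HDFS, so it sits on top of HDFS; it also relies on YARN to allocate resources, so it sits on top of YARN too.

HBase stores its data in HDFS and is managed by its own independent services, so it sits on HDFS only.

Hive stores its data in HDFS and is managed by its own independent services, so it sits on HDFS only.

Spark stores its data in HDFS and can either rely on YARN for compute resources or run under its own standalone services, so it sits on HDFS and, optionally, on YARN. Structurally it is at about the same layer as map-reduce.

In short, each system handles the part it is good at while leaning on the others, and together they make up the Hadoop ecosystem.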
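The layering in this summary can be written down as a small dependency map. This is just an illustration of the summary as stated above, not a description of any real tool; the dictionary contents and the helper names are mine.

```python
# Which components each system builds on, following the summary above.
depends_on = {
    "hdfs": [],
    "yarn": [],
    "mapreduce": ["hdfs", "yarn"],
    "hbase": ["hdfs"],
    "hive": ["hdfs"],
    "spark": ["hdfs", "yarn"],  # YARN is optional for Spark (standalone mode exists)
}


def layer(component):
    """0 for base services, otherwise one more than the deepest dependency."""
    deps = depends_on[component]
    return 0 if not deps else 1 + max(layer(d) for d in deps)


def start_order():
    """Components sorted so that every dependency comes before its dependents."""
    return sorted(depends_on, key=lambda c: (layer(c), c))


if __name__ == "__main__":
    for name in start_order():
        print(layer(name), name, "<-", ", ".join(depends_on[name]) or "(none)")
```

The resulting order, base services first, matches the order in which the daemons were started in this post, and reversing it gives the stop order used when the addresses were changed.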

