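In the previous post, "Analyzing the whole Spring Cloud call flow through a single inter-service call (Part 2)", you may have noticed that load balancing never came up: how does Spring Cloud decide which of several nodes should handle a request?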

The logic actually lives in a method already mentioned in that post, AbstractLoadBalancerAwareClient.executeWithLoadBalancer:

```java
public T executeWithLoadBalancer(final S request, final IClientConfig requestConfig) throws ClientException {
    LoadBalancerCommand<T> command = buildLoadBalancerCommand(request, requestConfig);

    try {
        return command.submit(
            new ServerOperation<T>() {
                @Override
                public Observable<T> call(Server server) {
                    URI finalUri = reconstructURIWithServer(server, request.getUri());
                    S requestForServer = (S) request.replaceUri(finalUri);
                    try {
                        return Observable.just(AbstractLoadBalancerAwareClient.this.execute(requestForServer, requestConfig));
                    } catch (Exception e) {
                        return Observable.error(e);
                    }
                }
            })
            .toBlocking()
            .single();
    } catch (Exception e) {
        Throwable t = e.getCause();
        if (t instanceof ClientException) {
            throw (ClientException) t;
        } else {
            throw new ClientException(e);
        }
    }
}
```
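Inside the callback, `reconstructURIWithServer` turns the logical request URI (which names the service, not a machine) into one that points at the server the load balancer picked. The snippet below is a minimal sketch of that idea using only `java.net.URI`; the class and method names are hypothetical and it is not the Ribbon source, which may handle raw/encoded components differently.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical sketch of the URI rewrite done before each attempt: keep scheme, path,
// query and fragment of the request URI, but replace the logical service name with
// the host and port of the server the load balancer picked. Not the Ribbon source.
public class ReconstructUriSketch {

    static URI withServer(URI original, String host, int port) {
        try {
            return new URI(
                    original.getScheme(),
                    original.getUserInfo(),
                    host,                    // chosen server's host replaces the service name
                    port,                    // chosen server's port
                    original.getPath(),
                    original.getQuery(),
                    original.getFragment());
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("cannot rebuild " + original, e);
        }
    }

    public static void main(String[] args) {
        URI logical = URI.create("http://user-service/users/42?verbose=true");
        System.out.println(withServer(logical, "10.0.0.7", 8081));
        // prints http://10.0.0.7:8081/users/42?verbose=true
    }
}
```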

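This method first builds a LoadBalancerCommand, which carries the load-balancing machinery, such as loadBalancerContext and retryHandler, and then calls its submit method. submit starts by creating an ExecutionInfoContext, which records context information for the whole execution; listenerInvoker is the component that runs the execution listeners along the way.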

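Next it reads two important values: maxRetrysSame, the number of retries on the same server (0 by default), and maxRetrysNext, the maximum number of retries on the next server. Then comes the key line: if server == null, it calls selectServer.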

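selectServer calls LoadBalancerContext.getServerFromLoadBalancer, which ultimately delegates to an implementation of the ILoadBalancer interface (ZoneAwareLoadBalancer by default). The first check in ZoneAwareLoadBalancer.chooseServer finds that there is really only one zone (unless you have configured zones), so it calls super.chooseServer(key), i.e. BaseLoadBalancer.chooseServer.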

```java
public Observable<T> submit(final ServerOperation<T> operation) {
    final ExecutionInfoContext context = new ExecutionInfoContext();

    if (listenerInvoker != null) {
        try {
            listenerInvoker.onExecutionStart();
        } catch (AbortExecutionException e) {
            return Observable.error(e);
        }
    }

    final int maxRetrysSame = retryHandler.getMaxRetriesOnSameServer();
    final int maxRetrysNext = retryHandler.getMaxRetriesOnNextServer();

    // Use the load balancer
    Observable<T> o =
        (server == null ? selectServer() : Observable.just(server))
        .concatMap(new Func1<Server, Observable<T>>() {
            @Override
            // Called for each server being selected
            public Observable<T> call(Server server) {
                context.setServer(server);
                final ServerStats stats = loadBalancerContext.getServerStats(server);

                // Called for each attempt and retry
                Observable<T> o = Observable
                    .just(server)
                    .concatMap(new Func1<Server, Observable<T>>() {
                        @Override
                        public Observable<T> call(final Server server) {
                            context.incAttemptCount();
                            loadBalancerContext.noteOpenConnection(stats);

                            if (listenerInvoker != null) {
                                try {
                                    listenerInvoker.onStartWithServer(context.toExecutionInfo());
                                } catch (AbortExecutionException e) {
                                    return Observable.error(e);
                                }
                            }

                            final Stopwatch tracer = loadBalancerContext.getExecuteTracer().start();

                            return operation.call(server).doOnEach(new Observer<T>() {
                                private T entity;

                                @Override
                                public void onCompleted() {
                                    recordStats(tracer, stats, entity, null);
                                    // TODO: What to do if onNext or onError are never called?
                                }

                                @Override
                                public void onError(Throwable e) {
                                    recordStats(tracer, stats, null, e);
                                    logger.debug("Got error {} when executed on server {}", e, server);
                                    if (listenerInvoker != null) {
                                        listenerInvoker.onExceptionWithServer(e, context.toExecutionInfo());
                                    }
                                }

                                @Override
                                public void onNext(T entity) {
                                    this.entity = entity;
                                    if (listenerInvoker != null) {
                                        listenerInvoker.onExecutionSuccess(entity, context.toExecutionInfo());
                                    }
                                }

                                private void recordStats(Stopwatch tracer, ServerStats stats, Object entity, Throwable exception) {
                                    tracer.stop();
                                    loadBalancerContext.noteRequestCompletion(stats, entity, exception, tracer.getDuration(TimeUnit.MILLISECONDS), retryHandler);
                                }
                            });
                        }
                    });

                if (maxRetrysSame > 0)
                    o = o.retry(retryPolicy(maxRetrysSame, true));
                return o;
            }
        });

    if (maxRetrysNext > 0 && server == null)
        o = o.retry(retryPolicy(maxRetrysNext, false));

    return o.onErrorResumeNext(new Func1<Throwable, Observable<T>>() {
        @Override
        public Observable<T> call(Throwable e) {
            if (context.getAttemptCount() > 0) {
                if (maxRetrysNext > 0 && context.getServerAttemptCount() == (maxRetrysNext + 1)) {
                    e = new ClientException(ClientException.ErrorType.NUMBEROF_RETRIES_NEXTSERVER_EXCEEDED,
                        "Number of retries on next server exceeded max " + maxRetrysNext
                        + " retries, while making a call for: " + context.getServer(), e);
                } else if (maxRetrysSame > 0 && context.getAttemptCount() == (maxRetrysSame + 1)) {
                    e = new ClientException(ClientException.ErrorType.NUMBEROF_RETRIES_EXEEDED,
                        "Number of retries exceeded max " + maxRetrysSame
                        + " retries, while making a call for: " + context.getServer(), e);
                }
            }
            if (listenerInvoker != null) {
                listenerInvoker.onExecutionFailed(e, context.toFinalExecutionInfo());
            }
            return Observable.error(e);
        }
    });
}
```
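The two `retry(...)` calls nest: the inner one re-runs the call on the same server up to `maxRetrysSame` times, and the outer one (only when no fixed server was given) re-subscribes to `selectServer()`, so a server is selected again up to `maxRetrysNext` times. The blocking sketch below models only that counting. It assumes every failure is retriable (the real `retryPolicy` consults `retryHandler`) and uses a hypothetical round-robin pick in place of `selectServer()`; it is not the Ribbon implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Simplified, blocking sketch of the two nested retries in LoadBalancerCommand.submit.
// Assumptions: no RxJava, every failure counts as retriable, and a hypothetical
// round-robin pick stands in for selectServer(). Not the Ribbon implementation.
public class RetrySketch {

    /** Returns the servers tried, in order; stops at the first successful call. */
    static List<String> tried(List<String> servers, int maxRetrysSame, int maxRetrysNext,
                              Predicate<String> call) {
        List<String> attempts = new ArrayList<>();
        // outer loop ~ o.retry(retryPolicy(maxRetrysNext, false)): select a server again
        for (int next = 0; next <= maxRetrysNext; next++) {
            String server = servers.get(next % servers.size());
            // inner loop ~ o.retry(retryPolicy(maxRetrysSame, true)): same server again
            for (int same = 0; same <= maxRetrysSame; same++) {
                attempts.add(server);
                if (call.test(server)) {
                    return attempts;
                }
            }
        }
        return attempts; // all (maxRetrysSame + 1) * (maxRetrysNext + 1) attempts failed
    }

    public static void main(String[] args) {
        List<String> servers = List.of("A", "B", "C");
        System.out.println(tried(servers, 0, 1, s -> false));         // [A, B]
        System.out.println(tried(servers, 1, 2, s -> false));         // [A, A, B, B, C, C]
        System.out.println(tried(servers, 1, 2, s -> s.equals("B"))); // [A, A, B]
    }
}
```

With everything failing, the total number of attempts is (maxRetrysSame + 1) × (maxRetrysNext + 1), which is why raising both values multiplies the load a failing downstream service receives.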

```java
@Override
public Server chooseServer(Object key) {
    if (!ENABLED.get() || getLoadBalancerStats().getAvailableZones().size() <= 1) {
        logger.debug("Zone aware logic disabled or there is only one zone");
        return super.chooseServer(key);
    }
    Server server = null;
    try {
        LoadBalancerStats lbStats = getLoadBalancerStats();
        Map<String, ZoneSnapshot> zoneSnapshot = ZoneAvoidanceRule.createSnapshot(lbStats);
        logger.debug("Zone snapshots: {}", zoneSnapshot);
        if (triggeringLoad == null) {
            triggeringLoad = DynamicPropertyFactory.getInstance().getDoubleProperty(
                "ZoneAwareNIWSDiscoveryLoadBalancer." + this.getName() + ".triggeringLoadPerServerThreshold", 0.2d);
        }

        if (triggeringBlackoutPercentage == null) {
            triggeringBlackoutPercentage = DynamicPropertyFactory.getInstance().getDoubleProperty(
                "ZoneAwareNIWSDiscoveryLoadBalancer." + this.getName() + ".avoidZoneWithBlackoutPercetage", 0.99999d);
        }
        Set<String> availableZones = ZoneAvoidanceRule.getAvailableZones(zoneSnapshot, triggeringLoad.get(), triggeringBlackoutPercentage.get());
        logger.debug("Available zones: {}", availableZones);
        if (availableZones != null && availableZones.size() < zoneSnapshot.keySet().size()) {
            String zone = ZoneAvoidanceRule.randomChooseZone(zoneSnapshot, availableZones);
            logger.debug("Zone chosen: {}", zone);
            if (zone != null) {
                BaseLoadBalancer zoneLoadBalancer = getLoadBalancer(zone);
                server = zoneLoadBalancer.chooseServer(key);
            }
        }
    } catch (Exception e) {
        logger.error("Error choosing server using zone aware logic for load balancer={}", name, e);
    }
    if (server != null) {
        return server;
    } else {
        logger.debug("Zone avoidance logic is not invoked.");
        return super.chooseServer(key);
    }
}
```

```java
public Server chooseServer(Object key) {
    if (counter == null) {
        counter = createCounter();
    }
    counter.increment();
    if (rule == null) {
        return null;
    } else {
        try {
            return rule.choose(key);
        } catch (Exception e) {
            logger.warn("LoadBalancer [{}]:  Error choosing server for key {}", name, key, e);
            return null;
        }
    }
}
```
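Put together, the chain is ZoneAwareLoadBalancer, then BaseLoadBalancer, then whatever IRule is configured; the load balancer itself never picks a node. The sketch below models that delegation with hypothetical stand-in types (`Rule`, `BaseLb`, `ZoneAwareLb`), not the Ribbon classes, and only the single-zone path is modelled.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical, heavily simplified model of the call chain ZoneAwareLoadBalancer ->
// BaseLoadBalancer -> IRule. The names mirror Ribbon's, but these are stand-in types.
public class DelegationSketch {

    /** Stands in for IRule: the pluggable strategy that actually picks a server. */
    interface Rule {
        String choose(Object key);
    }

    /** Stands in for BaseLoadBalancer.chooseServer. */
    static class BaseLb {
        final AtomicLong counter = new AtomicLong();
        final Rule rule;

        BaseLb(Rule rule) {
            this.rule = rule;
        }

        String chooseServer(Object key) {
            counter.incrementAndGet();          // like counter.increment()
            if (rule == null) {
                return null;                    // no rule configured: no server
            }
            try {
                return rule.choose(key);        // the decision is delegated to the rule
            } catch (RuntimeException e) {
                return null;                    // the real method logs a warning and returns null
            }
        }
    }

    /** Stands in for ZoneAwareLoadBalancer; only the single-zone path is modelled. */
    static class ZoneAwareLb extends BaseLb {
        final int zoneCount;

        ZoneAwareLb(Rule rule, int zoneCount) {
            super(rule);
            this.zoneCount = zoneCount;
        }

        @Override
        String chooseServer(Object key) {
            if (zoneCount <= 1) {
                return super.chooseServer(key); // the usual case without zone configuration
            }
            throw new UnsupportedOperationException("zone avoidance is not modelled in this sketch");
        }
    }

    public static void main(String[] args) {
        List<String> servers = List.of("10.0.0.7:8081", "10.0.0.8:8081");
        BaseLb lb = new ZoneAwareLb(key -> servers.get(1), 1); // hypothetical rule
        System.out.println(lb.chooseServer("default"));        // prints 10.0.0.8:8081
        BaseLb broken = new ZoneAwareLb(key -> { throw new IllegalStateException("boom"); }, 1);
        System.out.println(broken.chooseServer("default"));    // prints null
    }
}
```

Because the strategy is an interface, swapping the rule (as the `@Bean` further down does with NacosRule) changes how nodes are picked without touching the load balancer.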

BaseLoadBalancer.chooseServer relies on the IRule interface, which has several implementations. In my code I registered NacosRule from Alibaba's Nacos integration, so the call ends up in NacosRule.choose:

```java
@Bean
public NacosRule nacosRule() {
    return new NacosRule();
}
```

```java
@Override
public Server choose(Object key) {
    try {
        String clusterName = this.nacosDiscoveryProperties.getClusterName();
        DynamicServerListLoadBalancer loadBalancer = (DynamicServerListLoadBalancer) getLoadBalancer();
        String name = loadBalancer.getName();

        NamingService namingService = this.nacosDiscoveryProperties
            .namingServiceInstance();
        List<Instance> instances = namingService.selectInstances(name, true);
        if (CollectionUtils.isEmpty(instances)) {
            LOGGER.warn("no instance in service {}", name);
            return null;
        }

        List<Instance> instancesToChoose = instances;
        if (StringUtils.isNotBlank(clusterName)) {
            List<Instance> sameClusterInstances = instances.stream()
                .filter(instance -> Objects.equals(clusterName, instance.getClusterName()))
                .collect(Collectors.toList());
            if (!CollectionUtils.isEmpty(sameClusterInstances)) {
                instancesToChoose = sameClusterInstances;
            } else {
                LOGGER.warn(
                    "A cross-cluster call occurs,name = {}, clusterName = {}, instance = {}",
                    name, clusterName, instances);
            }
        }

        Instance instance = ExtendBalancer.getHostByRandomWeight2(instancesToChoose);

        return new NacosServer(instance);
    } catch (Exception e) {
        LOGGER.warn("NacosRule error", e);
        return null;
    }
}
```
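Before the weighted pick, choose narrows the healthy instances to the caller's own cluster when a cluster name is configured, and falls back to all instances (with the "cross-cluster call" warning) when that cluster has none. The sketch below reproduces just that filtering step with a hypothetical `Instance` class (an address plus a cluster name) instead of the Nacos type.

```java
import java.util.List;
import java.util.Objects;
import java.util.stream.Collectors;

// Sketch of the cluster-preference step in NacosRule.choose, using a hypothetical
// Instance type (just an address and a cluster name) instead of the Nacos class.
public class ClusterFilterSketch {

    static final class Instance {
        final String address;
        final String clusterName;

        Instance(String address, String clusterName) {
            this.address = address;
            this.clusterName = clusterName;
        }

        @Override
        public String toString() {
            return address + "@" + clusterName;
        }
    }

    static List<Instance> candidates(List<Instance> healthy, String localCluster) {
        if (localCluster == null || localCluster.trim().isEmpty()) {
            return healthy;                        // no cluster configured: use everything
        }
        List<Instance> sameCluster = healthy.stream()
                .filter(i -> Objects.equals(localCluster, i.clusterName))
                .collect(Collectors.toList());
        if (!sameCluster.isEmpty()) {
            return sameCluster;                    // prefer the caller's own cluster
        }
        // NacosRule logs "A cross-cluster call occurs" here and falls back to all instances
        return healthy;
    }

    public static void main(String[] args) {
        List<Instance> all = List.of(
                new Instance("10.0.0.7:8081", "HZ"),
                new Instance("10.0.1.9:8081", "SH"));
        System.out.println(candidates(all, "HZ")); // [10.0.0.7:8081@HZ]
        System.out.println(candidates(all, "BJ")); // [10.0.0.7:8081@HZ, 10.0.1.9:8081@SH]
    }
}
```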

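The method first reads the Nacos configuration, such as the registry address and namespace, and then calls NamingService.selectInstances (the second argument, true, asks for healthy instances only). This eventually calls HostReactor.getServiceInfo, which returns the ServiceInfo held in serviceInfoMap. So where do the values in this map come from?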

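HostReactor has an UpdateTask that implements Runnable, and its run method refreshes serviceInfoMap. Each service registers one such task with a ScheduledExecutorService as a delayed task (1 second by default), and after each run the task schedules itself again. This keeps the node information close to real time, but with up to about a second of lag. As a result, a request can still be routed to a node that has already gone offline in the registry.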
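The pattern described here (reads served from a local map, plus one self-rescheduling refresher per service) can be sketched with the JDK alone. `ServiceCache`, `Refresher` and `fetchFromRegistry` are hypothetical names, and the `Function` stands in for the actual registry query; this is not the Nacos HostReactor code.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical sketch of the cache-plus-refresher pattern described for HostReactor.
// ServiceCache, Refresher and fetchFromRegistry are illustrative names, not Nacos API.
public class ServiceCache {

    final Map<String, List<String>> cache = new ConcurrentHashMap<>();
    final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread t = new Thread(r, "service-cache-refresher");
        t.setDaemon(true);                      // do not keep the JVM alive
        return t;
    });
    final Function<String, List<String>> fetchFromRegistry; // stands in for a registry query
    final long delayMillis;

    ServiceCache(Function<String, List<String>> fetchFromRegistry, long delayMillis) {
        this.fetchFromRegistry = fetchFromRegistry;
        this.delayMillis = delayMillis;
    }

    /** Read path: answered from the local map only, like getServiceInfo reading serviceInfoMap. */
    List<String> instances(String service) {
        return cache.getOrDefault(service, List.of());
    }

    /** Registers one delayed refresher task for the service. */
    void watch(String service) {
        executor.schedule(new Refresher(service), delayMillis, TimeUnit.MILLISECONDS);
    }

    class Refresher implements Runnable {
        private final String service;

        Refresher(String service) {
            this.service = service;
        }

        @Override
        public void run() {
            try {
                cache.put(service, fetchFromRegistry.apply(service));
            } finally {
                // re-queue this task, so readers may see data up to about one delay old
                if (!executor.isShutdown()) {
                    executor.schedule(this, delayMillis, TimeUnit.MILLISECONDS);
                }
            }
        }
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger version = new AtomicInteger();
        ServiceCache c = new ServiceCache(s -> List.of(s + "-v" + version.incrementAndGet()), 100);
        c.watch("user-service");
        System.out.println(c.instances("user-service")); // empty: first refresh is 100 ms away
        Thread.sleep(350);
        System.out.println(c.instances("user-service")); // a later version; exact number depends on timing
        c.executor.shutdownNow();
    }
}
```

The staleness window follows directly from this design: anything that changes in the registry between two runs is invisible to readers until the next refresh.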

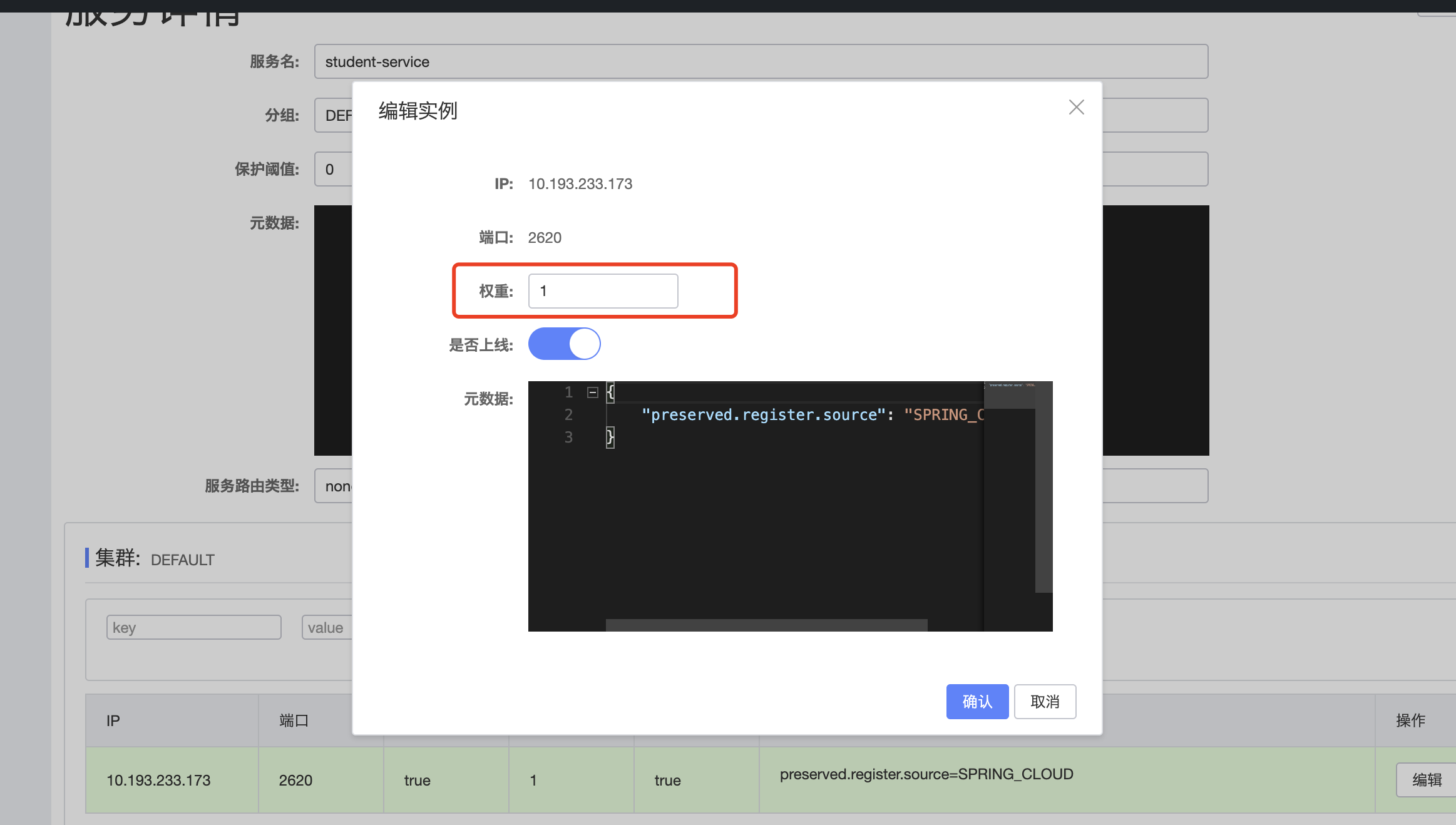

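Back in NacosRule.choose, at the `ExtendBalancer.getHostByRandomWeight2(instancesToChoose)` call, all candidate nodes have been fetched, and Nacos picks one at random according to weight via com.alibaba.nacos.client.naming.core.Balancer.getHostByRandomWeight. The weights can be configured in the Nacos console.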
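Weighted random selection means each instance is chosen with probability weight / total weight. The sketch below shows one common way to do that (cumulative weights plus one uniform draw); it illustrates the technique and is not the Nacos Balancer source. The class name and the example weights are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Random;

// Sketch of weighted random selection via cumulative weights. Illustrates the idea
// behind a weight-based random pick; it is not the Nacos Balancer implementation.
public class WeightedRandom {

    static <T> T pick(List<T> items, double[] weights, Random random) {
        if (items.isEmpty() || items.size() != weights.length) {
            throw new IllegalArgumentException("items and weights must be non-empty and aligned");
        }
        double[] cumulative = new double[weights.length];
        double total = 0;
        for (int i = 0; i < weights.length; i++) {
            if (weights[i] < 0) {
                throw new IllegalArgumentException("negative weight at index " + i);
            }
            total += weights[i];
            cumulative[i] = total;              // upper bound of item i's interval
        }
        if (total <= 0) {
            throw new IllegalArgumentException("total weight must be positive");
        }
        double r = random.nextDouble() * total; // uniform in [0, total)
        for (int i = 0; i < cumulative.length; i++) {
            if (r < cumulative[i]) {            // zero-weight items own an empty interval
                return items.get(i);
            }
        }
        return items.get(items.size() - 1);     // guard against floating-point rounding
    }

    public static void main(String[] args) {
        List<String> hosts = List.of("10.0.0.7:8081", "10.0.0.8:8081");
        double[] weights = {1.0, 3.0};          // hypothetical weights, as set in the console
        Random random = new Random(42);
        int[] hits = new int[2];
        for (int i = 0; i < 100_000; i++) {
            hits[hosts.indexOf(pick(hosts, weights, random))]++;
        }
        System.out.println(Arrays.toString(hits)); // roughly a 1:3 split
    }
}
```

The linear scan is enough for a handful of instances; with many instances, a binary search over `cumulative` would find the interval in logarithmic time.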

浙公网安备 33010602011771号
