Goal: scrape all Python job postings for Shenzhen on Liepin (liepin.com) and export them to an .xls file.

URL: https://www.shixiseng.com/interns?k=Python&p=1

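Workflow: inspect the page's tags and structure ==> write a function that collects the detail-page links ==> visit each detail page and extract the job details ==> write the pagination logic (work out from the page URLs which parameter controls paging) ==> loop over all pages ==> work around the site's anti-scraping measures so the crawler runs reliably.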

Paging through the results gives these URLs:

https://www.shixiseng.com/interns?k=Python&p=1

https://www.shixiseng.com/interns?k=Python&p=2

https://www.shixiseng.com/interns?k=Python&p=3

So the pagination URL template is:

https://www.shixiseng.com/interns?k=Python&p={}
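A minimal sketch of how this template expands into concrete page URLs with `str.format`. The helper name `page_urls` and the page range are illustrative assumptions, not part of the original script.

```python
# Pagination template from the URL pattern above; only the p parameter changes.
PAGE_TEMPLATE = 'https://www.shixiseng.com/interns?k=Python&p={}'


def page_urls(first, last):
    """Return the listing-page URLs for pages first..last (inclusive)."""
    return [PAGE_TEMPLATE.format(p) for p in range(first, last + 1)]


if __name__ == '__main__':
    for url in page_urls(1, 3):
        print(url)
```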

Full code (Python 3, using `requests` and `xlwt`):

```python
import re
import time

import requests
import xlwt

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'
}
endlist = []  # collected rows: [url, job, money, address, require]


def getlinks(url):
    """Fetch one search-results page and scrape every job detail link on it."""
    wb_data = requests.get(url, headers=headers, timeout=10)
    links = re.findall('div class="job-info".*?href="(.*?)"', wb_data.text, re.S)
    for link in links:
        getinfos(link)


def getinfos(url):
    """Extract title, salary, location and description from one detail page."""
    try:
        wb_data = requests.get(url, headers=headers, timeout=10)
    except requests.exceptions.MissingSchema:
        # Relative href such as "/job/xxx.shtml": prepend the site root and retry.
        # The new URL has a scheme, so this branch cannot recurse a second time.
        getinfos('https://www.liepin.com' + url)
        return
    except requests.exceptions.RequestException as e:
        print('skipped', url, e)  # network or other request error: skip this posting
        return
    jobs = re.findall('<h1.*?>(.*?)</h1>', wb_data.text, re.S)
    moneys = re.findall('class="job-item-title">(.*?)<em>', wb_data.text, re.S)
    addresses = re.findall('class="basic-infor".*?<a.*?>(.*?)</a>', wb_data.text, re.S)
    requires = re.findall('class="job-item main-message job-description"'
                          '.*?class="content content-word".*?>.*?(.*?)</div>',
                          wb_data.text, re.S)
    # zip stops at the shortest list, so a page missing any field yields no row
    for job, money, address, require in zip(jobs, moneys, addresses, requires):
        endlist.append([url, job, money, address, require])


if __name__ == '__main__':
    urls = ['https://www.liepin.com/zhaopin/?pubTime=&ckid=839511b5f66749af&fromSearchBtn=2&compkind=&isAnalysis=&init=-1&searchType=1&dqs=050090&industryType=&jobKind=&sortFlag=15&degradeFlag=0&industries=&salary=&compscale=&key=Python&clean_condition=&headckid=7dac5ea2ab5ad2a8&d_pageSize=40&siTag=p_XzVCa5J0EfySMbVjghcw~-nQsjvAMdjst7vnBI-6VZQ&d_headId=75b630a3df78d830d1b43fd9c3a04226&d_ckId=dd7c3c89b11aab1eca541c47ba725967&d_sfrom=search_unknown&d_curPage=0&curPage={}'.format(i)
            for i in range(1, 5)]
    for url in urls:
        getlinks(url)
        time.sleep(2)  # pause between result pages

    # Write the collected rows to an .xls file: header in row 0, data from row 1.
    book = xlwt.Workbook(encoding='utf-8')
    sheet = book.add_sheet('newjobmessage')
    header = ['url', 'job', 'money', 'address', 'require']
    for col, title in enumerate(header):
        sheet.write(0, col, title)
    for row_idx, row in enumerate(endlist, start=1):
        for col, data in enumerate(row):
            sheet.write(row_idx, col, data)
    book.save('jobmessage.xls')
```

Problems encountered in practice:

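1. Errors from incomplete hrefs. Some hrefs are complete URLs while others are missing the domain, and requesting an incomplete one raises an error. Fix: wrap the request in try/except and, when it fails, prepend `https://www.liepin.com` and retry (at this step a failed request can only mean the href was incomplete).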

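2. Storing the data. After a few attempts, I settled on appending each job's fields to `endlist` as one row, then iterating over it to write the rows into the spreadsheet.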

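3. Extracting details from the detail page. When a value is awkward to pull out with `re`, another parsing library can be used instead, though extraction becomes slower.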
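For problem 1, instead of relying on the exception, the hrefs could be completed up front with the standard library's `urllib.parse.urljoin`, which resolves a relative path against the site root and returns an already-absolute URL unchanged. This is a swapped-in technique rather than what the script does; `complete_href` and the sample hrefs are hypothetical.

```python
from urllib.parse import urljoin

SITE_ROOT = 'https://www.liepin.com'  # same prefix the except branch prepends


def complete_href(href):
    """Turn a relative href into an absolute URL; absolute hrefs pass through."""
    return urljoin(SITE_ROOT, href)


if __name__ == '__main__':
    print(complete_href('/job/123.shtml'))
    print(complete_href('https://www.liepin.com/job/456.shtml'))
```

With this, `getlinks` could call `getinfos(complete_href(link))` and the retry-on-error branch would no longer be needed for this case.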
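For problem 3, the post does not say which library it switched to. As one illustration using only the standard library, here is a small `html.parser.HTMLParser` subclass that collects the text of every `<h1>`; unlike the regex `<h1.*?>(.*?)</h1>`, it drops nested tags such as `<span>`. The class and function names are hypothetical, and real Liepin markup may need different tags. Third-party parsers such as BeautifulSoup or lxml are the more common choice.

```python
from html.parser import HTMLParser


class H1TextParser(HTMLParser):
    """Collect the text inside every <h1> element, ignoring nested tags."""

    def __init__(self):
        super().__init__()
        self._in_h1 = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == 'h1':
            self._in_h1 = True
            self.titles.append('')  # start a new title

    def handle_endtag(self, tag):
        if tag == 'h1':
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.titles[-1] += data  # text from nested tags is concatenated


def h1_titles(html):
    """Return the stripped text of each <h1> in the given HTML string."""
    parser = H1TextParser()
    parser.feed(html)
    parser.close()
    return [t.strip() for t in parser.titles]


if __name__ == '__main__':
    print(h1_titles('<div><h1 class="t">Python <span>Engineer</span></h1></div>'))
```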

Optimizations:

Use multiprocessing to speed up the crawl.
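The post suggests multiprocessing. Because the work is mostly waiting on the network, a thread pool from `concurrent.futures` is a common lighter-weight alternative (swapped in here; `ProcessPoolExecutor` offers the same `map` interface if separate processes are preferred). This sketch assumes `fetch` is a function that downloads and parses one URL and returns its result, rather than appending to the global `endlist`; `fetch_all` and `fake_fetch` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(fetch, urls, workers=4):
    """Call fetch(url) for every URL using a pool of worker threads.

    pool.map returns results in the same order as `urls`, regardless of
    which download finishes first.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, urls))


if __name__ == '__main__':
    # Stand-in for a real download-and-parse function.
    def fake_fetch(url):
        return [url, len(url)]

    for row in fetch_all(fake_fetch, ['https://example.com/a', 'https://example.com/bb']):
        print(row)
```

Returning rows from each worker and collecting them at the end keeps the workers independent of shared state.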

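Use the `time` module to add pauses between requests, making the crawler more stable and its behavior closer to a real user's.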
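A sketch of using `time` for steadier, less bot-like requests: a randomized pause before each retry, with a bounded number of attempts. `fetch_with_retry`, its parameters and the default delays are assumptions for illustration; `fetch` stands for any download function, such as a wrapper around `requests.get`.

```python
import random
import time


def fetch_with_retry(fetch, url, retries=3, base_delay=2.0, jitter=1.0):
    """Return fetch(url), making at most `retries` attempts in total.

    Between attempts it sleeps base_delay plus a random 0..jitter seconds,
    so requests are not sent at a fixed, machine-like rhythm. The last
    exception is re-raised if every attempt fails.
    """
    if retries < 1:
        raise ValueError('retries must be at least 1')
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception:  # with requests, catching requests.RequestException is narrower
            if attempt == retries - 1:
                raise
            time.sleep(base_delay + random.uniform(0, jitter))
```

In the script, `getlinks` could use `fetch_with_retry(lambda u: requests.get(u, headers=headers, timeout=10), url)` in place of its direct `requests.get` call.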

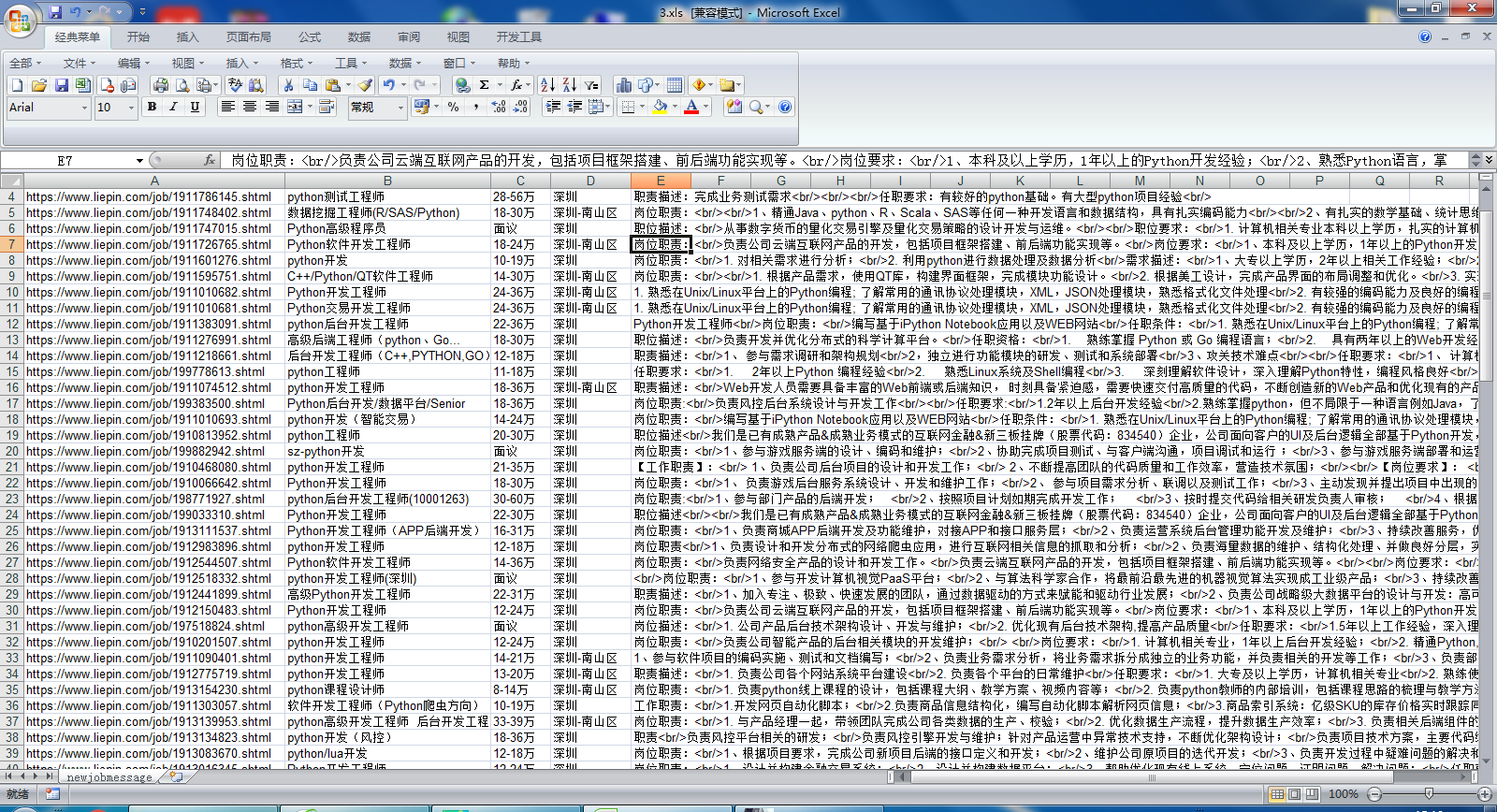

最终效果图:

There is always some reason that makes us start getting stronger.