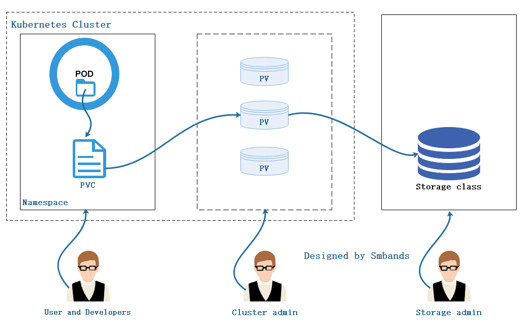

架构:

一. 搭建storageclass

1、master和node节点安装nfs服务

yum -y install nfs-utils rpcbind

2、启动nfs并设为开机自启:

systemctl start nfs && systemctl enable nfs

systemctl start rpcbind && systemctl enable rpcbind

3、master节点创建共享挂载目录(客户端不需要创建共享目录和编辑配置文件,只安装服务就行)

mkdir -pv /data/volumes/{v1,v2,v3}

4、编辑master节点/etc/exports文件,将目录共享到192.168.200.0/24这个网段中:(网段根据自己的情况写)

[root@master2 ~]# cat /etc/exports

/data/volumes/v1 192.168.200.0/24(rw,no_root_squash,no_all_squash)

/data/volumes/v2 192.168.200.0/24(rw,no_root_squash,no_all_squash)

/data/volumes/v3 192.168.200.0/24(rw,no_root_squash,no_all_squash)

5、发布

[root@master2 ~]# exportfs -arv

exporting 192.168.200.0/24:/data/volumes/v3

exporting 192.168.200.0/24:/data/volumes/v2

exporting 192.168.200.0/24:/data/volumes/v1

6、查看

[root@master2 ~]# showmount -e

Export list for master2:

/data/volumes/v3 192.168.200.0/24

/data/volumes/v2 192.168.200.0/24

/data/volumes/v1 192.168.200.0/24

7、在k8s的master主节点下载 NFS 插件

for file in class.yaml deployment.yaml rbac.yaml test-claim.yaml ; do wget https://raw.githubusercontent.com/kubernetes-incubator/external-storage/master/nfs-client/deploy/$file ; done

8、修改deployment.yaml文件

vim deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-client-provisioner

labels:

app: nfs-client-provisioner

# replace with namespace where provisioner is deployed

namespace: default

spec:

replicas: 1

strategy:

type: Recreate

selector:

matchLabels:

app: nfs-client-provisioner

template:

metadata:

labels:

app: nfs-client-provisioner

spec:

serviceAccountName: nfs-client-provisioner

containers:

- name: nfs-client-provisioner

image: quay.io/external_storage/nfs-client-provisioner:latest ##默认是latest版本

volumeMounts:

- name: nfs-client-root

mountPath: /persistentvolumes

env:

- name: PROVISIONER_NAME

value: fuseim.pri/ifs ##这里的供应者名称必须和class.yaml中的provisioner的名称一致,否则部署不成功

- name: NFS_SERVER

value: 192.168.200.3 ##这里写NFS服务器的IP地址或者能解析到的主机名

- name: NFS_PATH

value: /data/volumes/v1 ##这里写NFS服务器中的共享挂载目录(强调:这里的路径必须是目录中最后一层的文件夹,否则部署的应用将无权限创建目录导致Pending)

volumes:

- name: nfs-client-root

nfs:

server: 192.168.200.3 ##NFS服务器的IP或可解析到的主机名

path: /data/volumes/v1 ##NFS服务器中的共享挂载目录(强调:这里的路径必须是目录中最后一层的文件夹,否则部署的应用将无权限创建目录导致Pending)

9、部署yaml文件

kubectl apply -f .

10、查看服务

kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-6d4469b5b5-bh6t9 1/1 Running 0 73m

11、列出你的集群中的StorageClass

kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage fuseim.pri/ifs Delete Immediate false 77m

12、标记一个StorageClass为默认的 (

kubectl patch storageclass managed-nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

13、验证你选用为默认的StorageClass

kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage (default) fuseim.pri/ifs Delete Immediate false 77m

14、测试文件

[root@master1 ~]# cat statefulset-nfs.yaml

apiVersion: v1

kind: Service

metadata:

name: nginx

labels:

app: nginx

spec:

ports:

- port: 80

name: web

clusterIP: None

selector:

app: nginx

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: nfs-web

spec:

serviceName: "nginx"

replicas: 3

selector:

matchLabels:

app: nfs-web # has to match .spec.template.metadata.labels

template:

metadata:

labels:

app: nfs-web

spec:

terminationGracePeriodSeconds: 10

containers:

- name: nginx

image: nginx:1.7.9

ports:

- containerPort: 80

name: web

volumeMounts:

- name: www

mountPath: /usr/share/nginx/html

volumeClaimTemplates:

- metadata:

name: www

annotations:

volume.beta.kubernetes.io/storage-class: managed-nfs-storage

spec:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 1Gi

15、安装

kubectl apply -f statefulset-nfs.yaml

16、查看pod

[root@master1 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-6d4469b5b5-m6jgp 1/1 Running 1 47h

nfs-web-0 1/1 Running 0 44m

nfs-web-1 1/1 Running 0 44m

nfs-web-2 1/1 Running 0 43m

17、查看pvc

[root@master1 ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

test-claim Bound pvc-5dc58dfa-bd9d-4ad3-98c4-28649c13113c 1Mi RWX managed-nfs-storage 47h

www-nfs-web-0 Bound pvc-7cdcdc4c-e9d2-4848-b434-9caf7e72db5a 1Gi RWO managed-nfs-storage 45m

www-nfs-web-1 Bound pvc-23e3cdb2-a365-43ff-8936-d0e3df30ffac 1Gi RWO managed-nfs-storage 44m

www-nfs-web-2 Bound pvc-2c34b87d-4f09-4063-aea9-9ae1e7567194 1Gi RWO managed-nfs-storage 44m

18、查看pv

[root@master1 ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-23e3cdb2-a365-43ff-8936-d0e3df30ffac 1Gi RWO Delete Bound default/www-nfs-web-1 managed-nfs-storage 45m

pvc-2c34b87d-4f09-4063-aea9-9ae1e7567194 1Gi RWO Delete Bound default/www-nfs-web-2 managed-nfs-storage 45m

pvc-5dc58dfa-bd9d-4ad3-98c4-28649c13113c 1Mi RWX Delete Bound default/test-claim managed-nfs-storage 47h

pvc-7bf72c3c-be16-43ab-b43a-a84659b9c688 20Gi RWO Delete Bound kubesphere-monitoring-system/prometheus-k8s-db-prometheus-k8s-1 managed-nfs-storage 45h

pvc-7cdcdc4c-e9d2-4848-b434-9caf7e72db5a 1Gi RWO Delete Bound default/www-nfs-web-0 managed-nfs-storage 46m

pvc-dfac2b35-6c22-487e-baee-f381c44a5254 20Gi RWO Delete Bound kubesphere-monitoring-system/prometheus-k8s-db-prometheus-k8s-0 managed-nfs-storage 45h

19、查看 nfs server 目录中信息

[root@master1 ~]# ll /data/volumes/v1

total 0

drwxrwxrwx 2 root root 6 Feb 11 15:58 default-test-claim-pvc-5dc58dfa-bd9d-4ad3-98c4-28649c13113c

drwxrwxrwx 2 root root 6 Feb 11 10:35 default-test-claim-pvc-b68c2fde-14eb-464a-8907-f778a654e8b8

drwxrwxrwx 2 root root 6 Feb 13 14:30 default-www-nfs-web-0-pvc-7cdcdc4c-e9d2-4848-b434-9caf7e72db5a

drwxrwxrwx 2 root root 6 Feb 13 14:31 default-www-nfs-web-1-pvc-23e3cdb2-a365-43ff-8936-d0e3df30ffac

drwxrwxrwx 2 root root 6 Feb 13 14:31 default-www-nfs-web-2-pvc-2c34b87d-4f09-4063-aea9-9ae1e7567194

drwxrwxrwx 3 root root 27 Feb 13 14:44 kubesphere-monitoring-system-prometheus-k8s-db-prometheus-k8s-0-pvc-dfac2b35-6c22-487e-baee-f381c44a5254

drwxrwxrwx 3 root root 27 Feb 13 14:44 kubesphere-monitoring-system-prometheus-k8s-db-prometheus-k8s-1-pvc-7bf72c3c-be16-43ab-b43a-a84659b9c688

标签:

k8s

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· 阿里巴巴 QwQ-32B真的超越了 DeepSeek R-1吗?

· 如何调用 DeepSeek 的自然语言处理 API 接口并集成到在线客服系统

· 【译】Visual Studio 中新的强大生产力特性

· 2025年我用 Compose 写了一个 Todo App