Rook Overview

Introduction to Ceph

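Ceph is a highly scalable distributed storage solution that provides object, file, and block storage. Each storage node holds the disk or file system in which Ceph stores its objects, and runs a Ceph OSD (Object Storage Daemon) process. A Ceph cluster also runs Ceph MON (monitor) daemons, which maintain the cluster map and, by forming a quorum, keep the cluster highly available.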
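Ceph monitors agree on the cluster map through a majority quorum, which is why clusters usually run an odd number of them (this guide later sets `mon: count: 3` in cluster.yaml). The sketch below shows only the majority arithmetic; it is not Ceph code:

```python
def quorum_size(mon_count: int) -> int:
    """Smallest majority of monitors that must agree to form a quorum."""
    if mon_count < 1:
        raise ValueError("a cluster needs at least one monitor")
    return mon_count // 2 + 1


def tolerated_failures(mon_count: int) -> int:
    """Number of monitors that can fail while a majority is still reachable."""
    return mon_count - quorum_size(mon_count)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} mon(s): quorum {quorum_size(n)}, survives {tolerated_failures(n)} failure(s)")
```

Four monitors tolerate no more failures than three, so an even count adds cost without adding fault tolerance.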

Introduction to Rook

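Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework, and support that let a variety of storage solutions integrate natively with cloud-native environments, and it currently focuses on file, block, and object storage services. Rook turns these into self-managing, self-scaling, and self-healing distributed storage services.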

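Rook automates deployment, bootstrapping, configuration, provisioning, scaling up and down, upgrades, migration, disaster recovery, monitoring, and resource management. To do all this, Rook relies on the underlying container orchestration platform, such as Kubernetes.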
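Operators such as Rook's follow a reconcile pattern: compare the desired state declared in custom resources with what is actually running, then act on the difference. Repeating that comparison is what "self-healing" means in practice. The following is a deliberately simplified, hypothetical sketch of the idea; `ClusterState` and the action strings are invented for illustration and are not Rook's API:

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ClusterState:
    # Hypothetical simplified view: daemon type -> number of running instances.
    daemons: Dict[str, int] = field(default_factory=dict)


def reconcile(desired: ClusterState, observed: ClusterState) -> List[str]:
    """Return the actions that would move the observed state toward the desired state."""
    actions = []
    for name, want in sorted(desired.daemons.items()):
        have = observed.daemons.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} x {name}")
        elif have > want:
            actions.append(f"stop {have - want} x {name}")
    # Anything running that is not declared at all gets removed.
    for name in sorted(set(observed.daemons) - set(desired.daemons)):
        actions.append(f"stop {observed.daemons[name]} x {name}")
    return actions


if __name__ == "__main__":
    desired = ClusterState({"mon": 3, "mgr": 1, "osd": 3})
    observed = ClusterState({"mon": 2, "mgr": 1, "osd": 3})  # one mon pod was lost
    print(reconcile(desired, observed))  # ['start 1 x mon']
```

A real operator runs this comparison repeatedly, triggered by watch events on the cluster, rather than once.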

Rook currently supports deploying Ceph, NFS, Minio Object Store, EdgeFS, Cassandra, and CockroachDB.

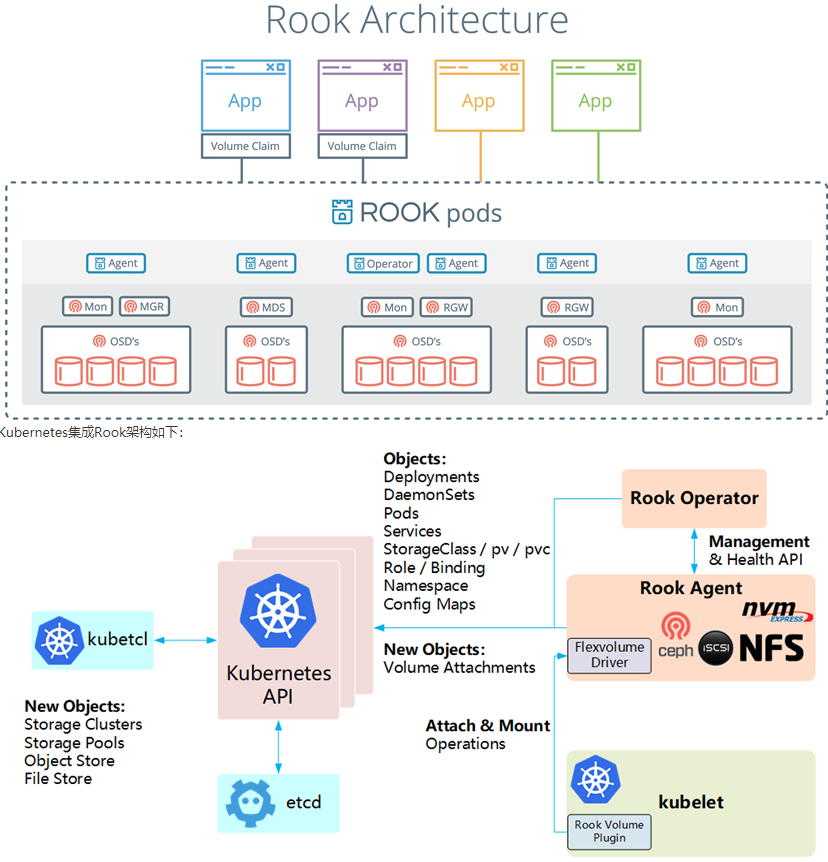

How Rook works:

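Rook provides a volume plugin that extends the Kubernetes storage system, so that, through the kubelet, pods can mount block devices and file systems managed by Rook.

The Rook Operator starts and monitors the entire underlying storage system, such as the Ceph pods and Ceph OSDs. It also manages the CRDs, object stores, and file systems.

The Rook Agent runs as a pod on every Kubernetes node. Each agent pod configures a FlexVolume driver that integrates with the Kubernetes volume control framework, and the agent carries out node-level operations such as attaching storage devices, mounting, formatting, and removing storage.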
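A FlexVolume driver is an executable that the kubelet calls with an operation name (`init`, `mount`, `unmount`, and so on) as its first argument; the driver answers with a JSON object on stdout whose `status` is `Success`, `Failure`, or `Not supported`. The toy driver below only illustrates that calling convention. It performs no real mounting, and the `image` option key is a hypothetical example:

```python
import json
import sys
from typing import List


def handle(argv: List[str]) -> dict:
    """Answer one FlexVolume call; argv[0] is the operation name."""
    if not argv:
        return {"status": "Failure", "message": "no operation given"}
    op, args = argv[0], argv[1:]
    if op == "init":
        # No separate attach/detach phase: kubelet will call mount/unmount directly.
        return {"status": "Success", "capabilities": {"attach": False}}
    if op == "mount":
        # Non-attaching drivers receive: mount <mount dir> <json options>
        if len(args) < 2:
            return {"status": "Failure", "message": "usage: mount <dir> <json options>"}
        target, options = args[0], json.loads(args[1])
        # A real driver would map, format, and mount the device here.
        return {"status": "Success", "message": f"would mount {options.get('image', '?')} at {target}"}
    if op == "unmount":
        if not args:
            return {"status": "Failure", "message": "usage: unmount <dir>"}
        return {"status": "Success", "message": f"would unmount {args[0]}"}
    # Operations this toy driver does not implement (attach, detach, ...).
    return {"status": "Not supported"}


if __name__ == "__main__":
    print(json.dumps(handle(sys.argv[1:])))
```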

Rook Architecture

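1. Add one disk to each of the three worker nodes, then run the following commands on each node so the kernel detects the new disk without a reboot:

```
echo "- - -" >/sys/class/scsi_host/host0/scan
echo "- - -" >/sys/class/scsi_host/host1/scan
echo "- - -" >/sys/class/scsi_host/host2/scan
```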
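The `echo` commands above assume each node has exactly the SCSI hosts `host0` to `host2`; the number varies by machine and controller. Writing `- - -` (wildcards for channel, target, and LUN) to a host's `scan` file asks the kernel to probe it for new devices. A small sketch that rescans whichever hosts exist; it must run as root on each node, and the `sysfs_root` parameter exists only so the function can be tried against a scratch directory:

```python
from pathlib import Path
from typing import List


def rescan_scsi_hosts(sysfs_root: str = "/sys/class/scsi_host") -> List[str]:
    """Write '- - -' to every host*/scan file under sysfs_root and return the host names."""
    scanned = []
    for scan_file in sorted(Path(sysfs_root).glob("host*/scan")):
        scan_file.write_text("- - -")  # wildcard channel, target and LUN
        scanned.append(scan_file.parent.name)
    return scanned


if __name__ == "__main__":
    try:
        print("rescanned:", ", ".join(rescan_scsi_hosts()) or "none")
    except OSError as exc:  # e.g. not root, or /sys mounted read-only
        print(f"rescan failed: {exc}")
```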

2. Clone Rook

```
git clone https://github.com/rook/rook.git
```

3. Switch to the release branch you need

```
cd rook
git branch -a
git checkout -b release-1.1 remotes/origin/release-1.1
```

4. To use the worker nodes for storage, label them (run on master1):

```
kubectl label nodes {node1,node2,node3} ceph-osd=enabled
kubectl label nodes {node1,node2,node3} ceph-mon=enabled
kubectl label nodes node1 ceph-mgr=enabled
```

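5. Change into the Ceph example directory of the repository (`cluster/examples/kubernetes/ceph` on release-1.1) and install common.yaml and operator.yaml: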

```
[root@master1 ceph]# kubectl apply -f common.yaml
namespace/rook-ceph created
customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created
customresourcedefinition.apiextensions.k8s.io/volumes.rook.io created
customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created
customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created
clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt-rules created
role.rbac.authorization.k8s.io/rook-ceph-system created
clusterrole.rbac.authorization.k8s.io/rook-ceph-global created
clusterrole.rbac.authorization.k8s.io/rook-ceph-global-rules created
clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster-rules created
clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created
serviceaccount/rook-ceph-system created
rolebinding.rbac.authorization.k8s.io/rook-ceph-system created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created
serviceaccount/rook-ceph-osd created
serviceaccount/rook-ceph-mgr created
serviceaccount/rook-ceph-cmd-reporter created
role.rbac.authorization.k8s.io/rook-ceph-osd created
clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created
clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created
clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system-rules created
role.rbac.authorization.k8s.io/rook-ceph-mgr created
role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created
rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created
rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created
podsecuritypolicy.policy/rook-privileged created
clusterrole.rbac.authorization.k8s.io/psp:rook created
clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system-psp created
rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created
rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created
rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created
rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created
serviceaccount/rook-csi-cephfs-plugin-sa created
serviceaccount/rook-csi-cephfs-provisioner-sa created
role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created
rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created
clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created
clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin-rules created
clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created
clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner-rules created
clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-plugin-sa-psp created
clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-provisioner-sa-psp created
clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created
clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created
serviceaccount/rook-csi-rbd-plugin-sa created
serviceaccount/rook-csi-rbd-provisioner-sa created
role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created
rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created
clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created
clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin-rules created
clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created
clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner-rules created
clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-plugin-sa-psp created
clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-provisioner-sa-psp created
clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created
clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created
[root@master1 ceph]# kubectl apply -f operator.yaml
deployment.apps/rook-ceph-operator created
```

6. Check the pod status in the rook-ceph namespace

```
[root@master1 ceph]# kubectl get po -n rook-ceph
NAME READY STATUS RESTARTS AGE
rook-ceph-operator-77c5668c9b-k7ml6 1/1 Running 0 12s
rook-discover-dmd2m 1/1 Running 0 8s
rook-discover-n48st 1/1 Running 0 8s
rook-discover-sgf7n 1/1 Running 0 8s
rook-discover-vb2mw 1/1 Running 0 8s
rook-discover-wzhkd 1/1 Running 0 8s
rook-discover-zsnp8 1/1 Running 0 8s
```

7. Configure cluster.yaml

```yaml
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v14.2.4-20190917
    allowUnsupported: false
  dataDirHostPath: /var/lib/rook
  skipUpgradeChecks: false
  mon:
    count: 3
    allowMultiplePerNode: false
  dashboard:
    enabled: true
    ssl: true
  monitoring:
    enabled: false
    rulesNamespace: rook-ceph
  network:
    hostNetwork: false
  rbdMirroring:
    workers: 0
  placement:
    # all:
    #   nodeAffinity:
    #     requiredDuringSchedulingIgnoredDuringExecution:
    #       nodeSelectorTerms:
    #       - matchExpressions:
    #         - key: role
    #           operator: In
    #           values:
    #           - storage-node
    #   podAffinity:
    #   podAntiAffinity:
    #   tolerations:
    #   - key: storage-node
    #     operator: Exists
    mon:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-mon
              operator: In
              values:
              - enabled
      tolerations:
      - key: ceph-mon
        operator: Exists
    osd:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-osd
              operator: In
              values:
              - enabled
      tolerations:
      - key: ceph-osd
        operator: Exists
    mgr:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-mgr
              operator: In
              values:
              - enabled
      tolerations:
      - key: ceph-mgr
        operator: Exists
  annotations:
  resources:
  removeOSDsIfOutAndSafeToRemove: false
  storage:
    useAllNodes: false     # do not use every node
    useAllDevices: false   # do not use every device
    deviceFilter: sdb
    config:
      metadataDevice:
      databaseSizeMB: "1024"
      journalSizeMB: "1024"
    nodes:
    - name: "node1"        # storage node host name
      config:
        storeType: bluestore   # BlueStore writes directly to the raw disk
      devices:                 # use disk sdb
      - name: "sdb"
    - name: "node2"
      config:
        storeType: bluestore
      devices:
      - name: "sdb"
    - name: "node3"
      config:
        storeType: bluestore
      devices:
      - name: "sdb"
  disruptionManagement:
    managePodBudgets: false
    osdMaintenanceTimeout: 30
    manageMachineDisruptionBudgets: false
    machineDisruptionBudgetNamespace: openshift-machine-api
```
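The `placement` section decides where each daemon may run. Within `nodeSelectorTerms`, the terms are ORed and the `matchExpressions` inside one term are ANDed; `In` requires the label's value to appear in `values`, while `Exists` only requires the key. A simplified sketch of that evaluation (only the two operators used above are implemented), applied to the node labels set earlier with `kubectl label`, shows why only node1 can host the mgr:

```python
from typing import Dict, List

Labels = Dict[str, str]


def expression_matches(labels: Labels, expr: dict) -> bool:
    """Evaluate one matchExpressions entry; only In and Exists are handled in this sketch."""
    key, op = expr["key"], expr["operator"]
    if op == "In":
        return key in labels and labels[key] in expr.get("values", [])
    if op == "Exists":
        return key in labels
    raise ValueError(f"operator not covered by this sketch: {op}")


def node_selected(labels: Labels, node_selector_terms: List[dict]) -> bool:
    """Terms are ORed; expressions inside a single term are ANDed."""
    return any(
        all(expression_matches(labels, e) for e in term["matchExpressions"])
        for term in node_selector_terms
    )


def affinity_terms(label_key: str) -> List[dict]:
    """Build nodeSelectorTerms shaped like the ones in cluster.yaml for one label key."""
    return [{"matchExpressions": [{"key": label_key, "operator": "In", "values": ["enabled"]}]}]


if __name__ == "__main__":
    nodes = {
        "master1": {},
        "node1": {"ceph-osd": "enabled", "ceph-mon": "enabled", "ceph-mgr": "enabled"},
        "node2": {"ceph-osd": "enabled", "ceph-mon": "enabled"},
        "node3": {"ceph-osd": "enabled", "ceph-mon": "enabled"},
    }
    for daemon in ("mon", "osd", "mgr"):
        terms = affinity_terms(f"ceph-{daemon}")
        print(daemon, [name for name, labels in nodes.items() if node_selected(labels, terms)])
```

With `mon: count: 3` and `allowMultiplePerNode: false`, the three labelled nodes are exactly enough for one monitor each.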

8. Apply cluster.yaml

```
[root@master1 ceph]# kubectl apply -f cluster.yaml
cephcluster.ceph.rook.io/rook-ceph created
```

9. Check the status

```
[root@master1 ceph]# kubectl get po -n rook-ceph
NAME READY STATUS RESTARTS AGE
csi-cephfsplugin-9qw89 3/3 Running 0 2m53s
csi-cephfsplugin-j9c5w 3/3 Running 0 2m53s
csi-cephfsplugin-kln7b 3/3 Running 0 2m53s
csi-cephfsplugin-lpzz5 3/3 Running 0 2m53s
csi-cephfsplugin-n6xqw 3/3 Running 0 2m53s
csi-cephfsplugin-provisioner-5f5fb76db9-cbgtq 4/4 Running 0 2m52s
csi-cephfsplugin-provisioner-5f5fb76db9-jb5s5 4/4 Running 0 2m52s
csi-cephfsplugin-tmpqd 3/3 Running 0 2m53s
csi-rbdplugin-2cdt6 3/3 Running 0 2m54s
csi-rbdplugin-48l7q 3/3 Running 0 2m54s
csi-rbdplugin-c7zmx 3/3 Running 0 2m54s
csi-rbdplugin-cjtt6 3/3 Running 0 2m54s
csi-rbdplugin-cqjgw 3/3 Running 0 2m54s
csi-rbdplugin-ljhzn 3/3 Running 0 2m54s
csi-rbdplugin-provisioner-8c5468854-292p8 5/5 Running 0 2m54s
csi-rbdplugin-provisioner-8c5468854-qqczh 5/5 Running 0 2m54s
rook-ceph-mgr-a-854dd44d4-g848k 1/1 Running 0 13s
rook-ceph-mon-a-7c77f495cb-bqsnm 1/1 Running 0 73s
rook-ceph-mon-b-78f7974649-8k854 1/1 Running 0 63s
rook-ceph-mon-c-f8b59c975-27qhj 1/1 Running 0 38s
rook-ceph-operator-77c5668c9b-k7ml6 1/1 Running 0 5m27s
rook-discover-dmd2m 1/1 Running 0 5m23s
rook-discover-n48st 1/1 Running 0 5m23s
rook-discover-sgf7n 1/1 Running 0 5m23s
rook-discover-vb2mw 1/1 Running 0 5m23s
rook-discover-wzhkd 1/1 Running 0 5m23s
rook-discover-zsnp8 1/1 Running 0 5m23s
```
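With this many pods, checking every READY column by eye is error-prone. A small helper that flags pods which are not `Running` or whose ready count is incomplete (the CSI plugin pods, for example, should show `3/3`). It parses the plain-text output shown above; for scripts, `kubectl get po -o json` is the sturdier input:

```python
from typing import List


def unready_pods(kubectl_output: str) -> List[str]:
    """Names of pods in `kubectl get po` text that are not Running with all containers ready."""
    bad = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        if not fields or fields[0] == "NAME":  # skip blank lines and the header
            continue
        name, ready, status = fields[:3]
        ready_now, total = (int(x) for x in ready.split("/"))
        if status != "Running" or ready_now != total:
            bad.append(name)
    return bad
```

Pods that finish on purpose and end up `Completed` (such as Rook's one-off OSD-prepare jobs) are also reported, so filter those out if they are expected.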

10. Install the toolbox

The toolbox is a container bundling Rook's tools. Its commands can be used to debug and test Rook, and ad-hoc Ceph tests are usually run inside it.

```
[root@master1 ceph]# kubectl apply -f toolbox.yaml
[root@master1 ceph]# kubectl -n rook-ceph get pod -l "app=rook-ceph-tools"
NAME READY STATUS RESTARTS AGE
rook-ceph-tools-6bd79cf569-2lthp 1/1 Running 0 76s
```

11. Test Rook

Open a shell in the toolbox pod. Put `--` before the command to run in the container; the form without it is deprecated in newer kubectl versions.

```
[root@master1 ceph]# kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- sh
sh-4.2# ceph status
  cluster:
    id:     ee4579e8-6f9b-48f0-8175-182a15009bf8
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 2m)
    mgr: a(active, since 77s)
    osd: 3 osds: 3 up (since 3s), 3 in (since 3s)

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 24 GiB / 27 GiB avail
    pgs:

sh-4.2# ceph osd status
+----+-------+-------+-------+--------+---------+--------+---------+-----------+
| id | host  |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
+----+-------+-------+-------+--------+---------+--------+---------+-----------+
| 0  | node1 | 1025M | 8190M |    0   |     0   |    0   |     0   | exists,up |
| 1  | node2 | 1025M | 8190M |    0   |     0   |    0   |     0   | exists,up |
| 2  | node3 | 1025M | 8190M |    0   |     0   |    0   |     0   | exists,up |
+----+-------+-------+-------+--------+---------+--------+---------+-----------+
sh-4.2# rados df
POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

total_objects    0
total_used       3.0 GiB
total_avail      24 GiB
total_space      27 GiB
sh-4.2# ceph auth ls
installed auth entries:

osd.0
    key: AQBDv3lhAPS/AhAAGzigeHwB9BFsWQuiYXTXPQ==
    caps: [mgr] allow profile osd
    caps: [mon] allow profile osd
    caps: [osd] allow *
osd.1
    key: AQBEv3lh/WaMDBAANZJAS8lR/FVFzA7MUSzi5w==
    caps: [mgr] allow profile osd
    caps: [mon] allow profile osd
    caps: [osd] allow *
osd.2
    key: AQBDv3lhm2L0LRAAiU1O47F/neFUgl4pPutN+w==
    caps: [mgr] allow profile osd
    caps: [mon] allow profile osd
    caps: [osd] allow *
client.admin
    key: AQB6vnlha/CuIBAAsfNW7cEoWWpkOMwCXpQM5g==
    caps: [mds] allow *
    caps: [mgr] allow *
    caps: [mon] allow *
    caps: [osd] allow *
client.bootstrap-mds
    key: AQDFvnlhD8UqLBAANV2pKTeNekZz6yoxKc9uVw==
    caps: [mon] allow profile bootstrap-mds
client.bootstrap-mgr
    key: AQDFvnlho9QqLBAAtwrmj6pQDIOhMsNFaP+0cw==
    caps: [mon] allow profile bootstrap-mgr
client.bootstrap-osd
    key: AQDFvnlhbeMqLBAA60D0vtUjKk5EqKCzlFc+mA==
    caps: [mon] allow profile bootstrap-osd
client.bootstrap-rbd
    key: AQDFvnlh0/EqLBAAf3EUyHtPuGm8N3m7UirYUQ==
    caps: [mon] allow profile bootstrap-rbd
client.bootstrap-rbd-mirror
    key: AQDFvnlhfwArLBAA78d4dVfD0crjJspUzJ+UNw==
    caps: [mon] allow profile bootstrap-rbd-mirror
client.bootstrap-rgw
    key: AQDFvnlh3Q8rLBAAMn2rh9avCJS/r1T0x804GQ==
    caps: [mon] allow profile bootstrap-rgw
client.csi-cephfs-node
    key: AQD5vnlhx2FOLRAA+231/pqp4TK0iQtFT/6aXw==
    caps: [mds] allow rw
    caps: [mgr] allow rw
    caps: [mon] allow r
    caps: [osd] allow rw tag cephfs *=*
client.csi-cephfs-provisioner
    key: AQD5vnlhKiTiDhAA+1lhupYiXT7JeMdSkcPS1g==
    caps: [mgr] allow rw
    caps: [mon] allow r
    caps: [osd] allow rw tag cephfs metadata=*
client.csi-rbd-node
    key: AQD4vnlhgEexLRAASgO09KpvF9/OKb7K1yxS4g==
    caps: [mon] profile rbd
    caps: [osd] profile rbd
client.csi-rbd-provisioner
    key: AQD4vnlhfwy5FBAALyObdIuo4wyhiRZDrtNasw==
    caps: [mgr] allow rw
    caps: [mon] profile rbd
    caps: [osd] profile rbd
mgr.a
    key: AQD7vnlhvto/IBAAVgyCHEt57APBeLuqDrelYA==
    caps: [mds] allow *
    caps: [mon] allow *
    caps: [osd] allow *
```

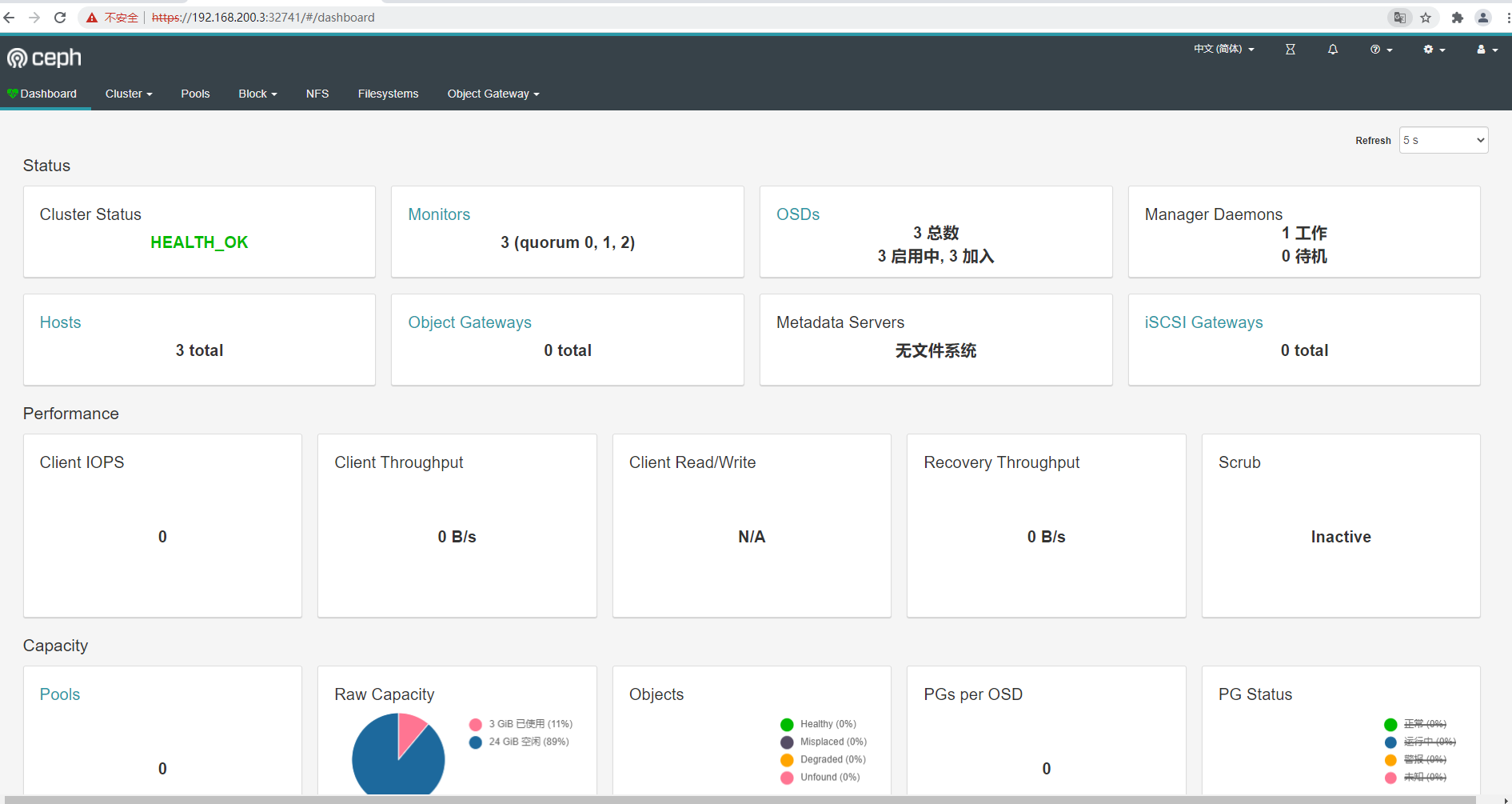
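A healthy result from the toolbox session above means `HEALTH_OK` and every OSD both `up` and `in`. A sketch that extracts those values from the plain-text `ceph status` layout shown; that layout can change between Ceph releases, so for automation `ceph status --format json` is the sturdier source:

```python
import re
from typing import Dict, Union


def parse_ceph_status(text: str) -> Dict[str, Union[str, int]]:
    """Pull the health flag and OSD counts out of plain-text `ceph status` output."""
    health = re.search(r"health:\s*(\S+)", text)
    osd = re.search(r"osd:\s*(\d+)\s+osds:\s*(\d+)\s+up.*?(\d+)\s+in\b", text)
    if health is None or osd is None:
        raise ValueError("unrecognised `ceph status` output")
    total, up, in_ = (int(g) for g in osd.groups())
    return {"health": health.group(1), "osds": total, "up": up, "in": in_}


def cluster_ok(status: dict) -> bool:
    """Healthy only if Ceph reports HEALTH_OK and every OSD is both up and in."""
    return status["health"] == "HEALTH_OK" and status["osds"] == status["up"] == status["in"]
```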

12. Deploy the dashboard. The service's exposure type has to be changed from ClusterIP to NodePort; the file below already contains that change.

```
[root@master1 ceph]# cat dashboard-external-https.yaml
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external-https
  namespace: rook-ceph
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
spec:
  ports:
  - name: dashboard
    port: 8443
    protocol: TCP
    targetPort: 8443
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
  sessionAffinity: None
  type: NodePort   # how the service is exposed
[root@master1 ceph]# kubectl apply -f dashboard-external-https.yaml
service/rook-ceph-mgr-dashboard-external-https created
```

13. Check the dashboard's exposed port

```
[root@master1 ceph]# kubectl get svc -n rook-ceph
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
csi-cephfsplugin-metrics ClusterIP 10.100.92.5 <none> 8080/TCP,8081/TCP 14m
csi-rbdplugin-metrics ClusterIP 10.107.1.61 <none> 8080/TCP,8081/TCP 14m
rook-ceph-mgr ClusterIP 10.103.103.155 <none> 9283/TCP 10m
rook-ceph-mgr-dashboard ClusterIP 10.96.81.222 <none> 8443/TCP 10m
rook-ceph-mgr-dashboard-external-https NodePort 10.100.215.186 <none> 8443:32741/TCP 105s   # exposed port
rook-ceph-mon-a ClusterIP 10.109.2.220 <none> 6789/TCP,3300/TCP 12m
rook-ceph-mon-b ClusterIP 10.104.126.29 <none> 6789/TCP,3300/TCP 12m
rook-ceph-mon-c ClusterIP 10.100.86.71 <none> 6789/TCP,3300/TCP 11m
```
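In the PORT(S) column, `8443:32741/TCP` means service port 8443 is reachable on every node at NodePort 32741. A sketch that extracts that mapping from the column text:

```python
from typing import Optional


def node_port(ports_column: str, service_port: int) -> Optional[int]:
    """Return the NodePort mapped to service_port in a PORT(S) value such as '8443:32741/TCP'."""
    for entry in ports_column.split(","):
        mapping = entry.split("/")[0]  # drop the '/TCP' protocol suffix
        if ":" not in mapping:
            continue  # ClusterIP-only entry such as '8080/TCP' has no NodePort
        port, nport = mapping.split(":")
        if int(port) == service_port:
            return int(nport)
    return None
```

In shell scripts, reading the field directly avoids text parsing: `kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-https -o jsonpath='{.spec.ports[0].nodePort}'`.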

14. Retrieve the login password

```
[root@master1 ceph]# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath='{.data.password}' | base64 --decode
nqSw8XnSWU
```

Access the dashboard over HTTPS (not HTTP):

https://<node-ip>:32741 (username: admin, password: nqSw8XnSWU)

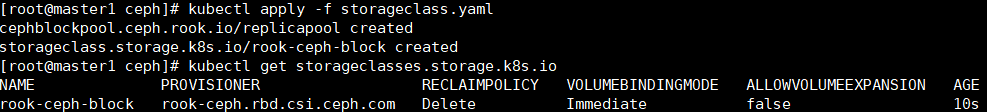
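The `base64 --decode` used to read the dashboard password is needed because Kubernetes stores the values under a Secret's `data` field base64-encoded. That is an encoding, not encryption: anyone who can read the Secret can recover the password. The Python equivalent of the decode step (the encoded value in the demo is a placeholder):

```python
import base64


def decode_secret_value(encoded: str) -> str:
    """Decode one value from a Secret's .data map (base64 -> UTF-8 text)."""
    return base64.b64decode(encoded, validate=True).decode("utf-8")


if __name__ == "__main__":
    encoded = base64.b64encode(b"example-password").decode("ascii")  # placeholder value
    print(encoded, "->", decode_secret_value(encoded))
```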

Ceph Block Storage

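1. Create the StorageClass

Before block storage can be provisioned, a StorageClass and a storage pool must be created. Kubernetes needs both resources to interact with Rook and allocate persistent volumes (PVs).

Details: the configuration below creates a storage pool named replicapool and a StorageClass named rook-ceph-block.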

```yaml
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph
  pool: replicapool
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
```
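With `replicated: size: 3`, every object is stored three times, and `failureDomain: host` puts each copy on a different host. Two rough consequences follow: usable capacity is about raw capacity divided by the replica count, and the pool needs at least as many hosts with OSDs as it has replicas. The estimate below ignores BlueStore overhead and Ceph's nearfull/full thresholds:

```python
from typing import List


def usable_capacity_gib(osd_sizes_gib: List[float], replica_size: int) -> float:
    """Rough usable space of a replicated pool: raw capacity / number of copies."""
    if replica_size < 1:
        raise ValueError("replica_size must be >= 1")
    return sum(osd_sizes_gib) / replica_size


def replicas_placeable(hosts_with_osds: int, replica_size: int) -> bool:
    """With failureDomain: host, each replica needs a host of its own."""
    return hosts_with_osds >= replica_size


if __name__ == "__main__":
    # Three OSDs of roughly 9 GiB, one per node (27 GiB raw in the `ceph status` output above).
    print(usable_capacity_gib([9, 9, 9], 3))  # 9.0
    print(replicas_placeable(3, 3))           # True
```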

2. Apply storageclass.yaml

```
kubectl apply -f storageclass.yaml
```

3. Create the PVC

Details: this creates the corresponding PVC. Its storageClassName is rook-ceph-block, the StorageClass backed by the Rook Ceph cluster.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: block-pvc
spec:
  storageClassName: rook-ceph-block
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 200Mi
```

4. Apply pvc.yaml

```
kubectl apply -f pvc.yaml
```
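The PVC above requests `200Mi`. Kubernetes quantities with an `i` suffix are binary (powers of 1024), while plain suffixes such as `M` are decimal, so `200Mi` and `200M` are different sizes. A small converter that handles integer quantities only (fractions and exponent notation, which Kubernetes also accepts, are left out of this sketch):

```python
import re

_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
_DECIMAL = {"": 1, "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def quantity_to_bytes(quantity: str) -> int:
    """Convert an integer Kubernetes quantity such as '200Mi' to bytes."""
    match = re.fullmatch(r"(\d+)([A-Za-z]*)", quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = int(match.group(1)), match.group(2)
    for table in (_BINARY, _DECIMAL):
        if suffix in table:
            return number * table[suffix]
    raise ValueError(f"unknown suffix: {suffix!r}")


if __name__ == "__main__":
    print(quantity_to_bytes("200Mi"))  # 209715200
    print(quantity_to_bytes("200M"))   # 200000000
```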
