Today I finished part of the Beijing municipal citizen-letter analysis project:

The script can crawl the letter URLs and the letter contents, but only statically: it fetches a single page of the list.

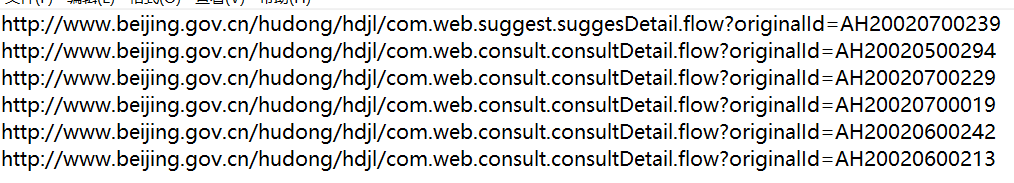

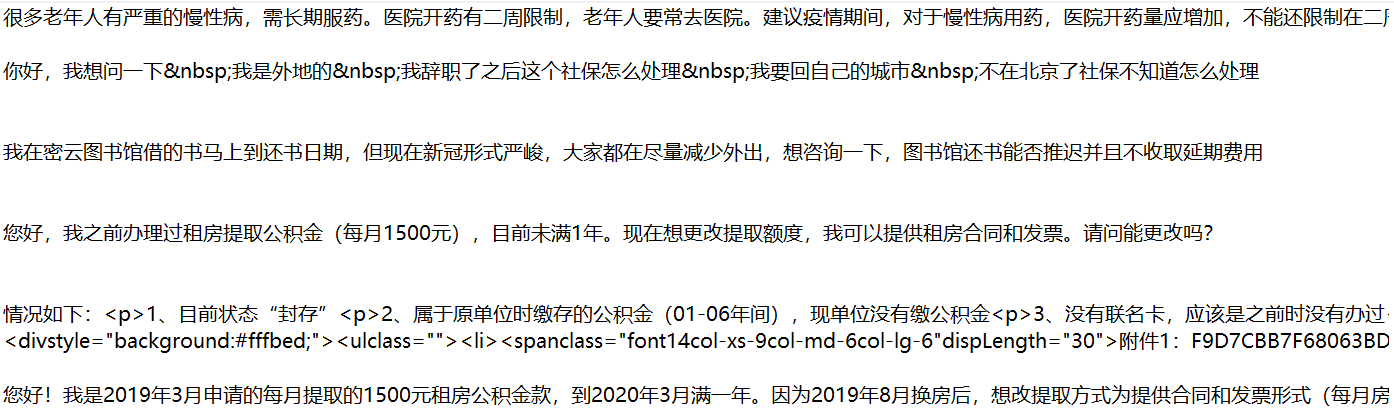

    import random
    import re
    import ssl
    import urllib.request

    # Disable HTTPS certificate verification
    ssl._create_default_https_context = ssl._create_unverified_context


    def moni():
        # Simulate a browser request header by picking a random User-Agent.
        # The entries must be comma-separated; otherwise Python joins the
        # adjacent string literals into one long string.
        agentList = [
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36",
            "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0",
            "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko",
            "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)",
            "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)",
        ]
        return random.choice(agentList)


    def urlCrawler(url):
        # Fetch the list page and write one detail-page URL per letter to A.txt
        req = urllib.request.Request(url)
        req.add_header('User-Agent', moni())
        response = urllib.request.urlopen(req)
        pattern = re.compile(r'<a onclick="letterdetail\(\'(.*?)\',\'(.*?)\'\)', re.S)
        results = pattern.findall(response.read().decode('utf-8'))
        with open("E:/A.txt", "w", encoding="utf-8") as f:
            for a, b in results:
                b = re.sub(r"\s", "", b)
                if a == "建议":    # suggestion
                    c = "http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId=" + b
                elif a == "咨询":  # inquiry
                    c = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=" + b
                else:
                    continue       # skip other letter types instead of reusing a stale URL
                f.write(c + "\n")


    def htmlCrawler():
        # Fetch every detail page listed in A.txt and append the letter text to B.txt
        pattern = re.compile(r'<div class="col-xs-12 col-md-12 column p-2 text-muted mx-2">(.*?)</div>', re.S)
        with open("E:/A.txt", encoding="utf-8") as urls:
            for line in urls:
                line = line.strip()  # drop the trailing newline before requesting
                if not line:
                    continue
                req = urllib.request.Request(line)
                req.add_header('User-Agent', moni())
                response = urllib.request.urlopen(req)
                results = pattern.findall(response.read().decode('utf-8'))
                with open("E:/B.txt", "a", encoding="utf-8") as f:
                    for result in results:
                        f.write(re.sub(r"\s", "", result) + "\n")


    url = "http://www.beijing.gov.cn/hudong/hdjl/com.web.search.replyMailList.flow"
    urlCrawler(url)
    htmlCrawler()
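The URL-extraction step can be checked offline, without hitting the site. The sketch below reuses the regular expression and the two detail-page URLs from the script above; the helper name `build_detail_urls`, the `DETAIL_PATHS` table, and the sample HTML (including the IDs in it) are made up for illustration and are not taken from the real page.

```python
import re

# Same pattern as in urlCrawler: captures (letter type, original id)
LETTER_PATTERN = re.compile(r'<a onclick="letterdetail\(\'(.*?)\',\'(.*?)\'\)', re.S)

BASE = "http://www.beijing.gov.cn/hudong/hdjl/"
# Letter type -> detail-page path (hypothetical lookup table built from the script's two URLs)
DETAIL_PATHS = {
    "建议": "com.web.suggest.suggesDetail.flow",   # suggestion
    "咨询": "com.web.consult.consultDetail.flow",  # inquiry
}


def build_detail_urls(html):
    """Return the detail-page URLs for every recognised letter link in html."""
    urls = []
    for letter_type, original_id in LETTER_PATTERN.findall(html):
        path = DETAIL_PATHS.get(letter_type)
        if path is None:
            continue  # unknown letter type: skip it
        original_id = re.sub(r"\s", "", original_id)  # strip stray whitespace
        urls.append(f"{BASE}{path}?originalId={original_id}")
    return urls


# Made-up sample markup; the IDs are placeholders, not real letter IDs
sample_html = (
    '<a onclick="letterdetail(\'建议\',\'AH0001\')">letter one</a>\n'
    '<a onclick="letterdetail(\'咨询\',\'AH 0002\')">letter two</a>\n'
    '<a onclick="letterdetail(\'投诉\',\'AH0003\')">letter three</a>\n'
)

for url in build_detail_urls(sample_html):
    print(url)
```

Using a lookup table instead of `if`/`elif` means an unrecognised letter type is simply skipped, so a new type on the page cannot produce an undefined or stale URL.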

Result of running the script: