Python小练习:激活函数

作者:凯鲁嘎吉 - 博客园 http://www.cnblogs.com/kailugaji/

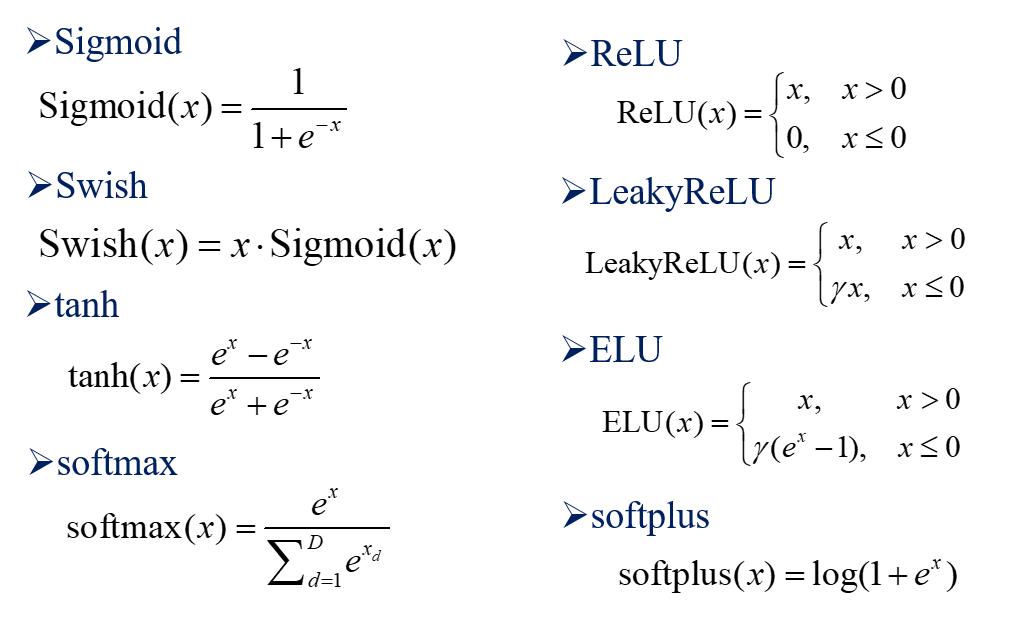

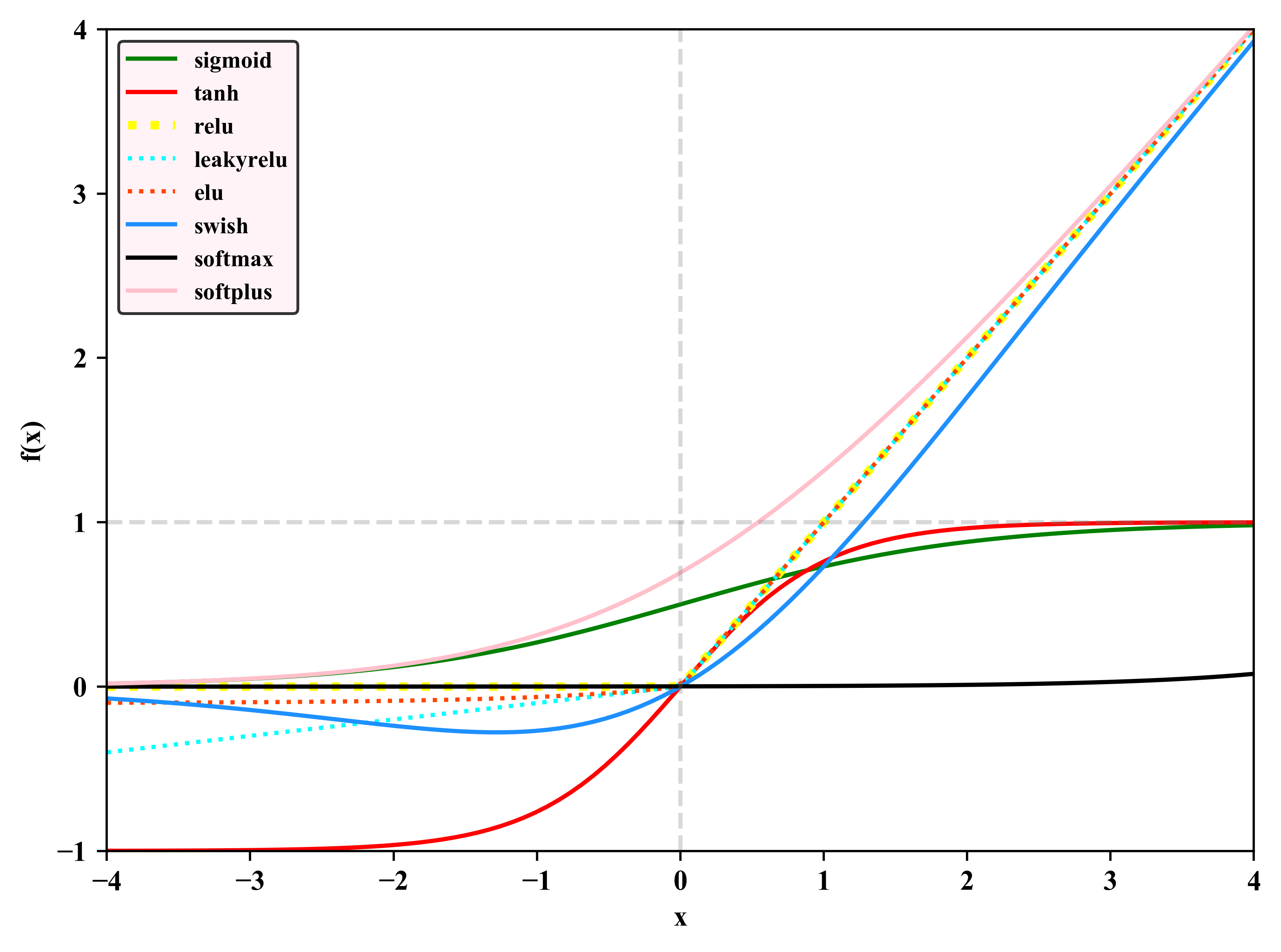

本文介绍几种常见的激活函数,并用Python来实现,包括:Sigmoid、tanh、ReLU、LeakyReLU、ELU、Swish、softmax、softplus。

1. 常见激活函数定义

2. activation_function_test1.py

1 # -*- coding: utf-8 -*- 2 # Author:凯鲁嘎吉 Coral Gajic 3 # https://www.cnblogs.com/kailugaji/ 4 # Python小练习:激活函数 5 # 用Python实现常见的激活函数:'sigmoid', 'tanh', 'relu', 'leakyrelu', 'elu', 'swish', 'softmax','softplus' 6 ''' 7 部分参考: 8 https://zhuanlan.zhihu.com/p/397494815 9 ''' 10 import numpy as np 11 import torch 12 import torch.nn.functional as F 13 import matplotlib.pyplot as plt 14 plt.rc('font',family='Times New Roman') 15 16 # 激活函数 17 def activation_function(index, x, gamma = None, dim = -1): 18 y = torch.empty([]) # 自己手动写的激活函数 19 z = torch.empty([]) # 调用Pytorch内置的激活函数 20 if index == 'sigmoid': 21 y = 1 / (1 + torch.exp(-x)) 22 z = torch.sigmoid(x) 23 elif index == 'tanh': 24 y = (torch.exp(x) - torch.exp(-x)) / (torch.exp(x) + torch.exp(-x)) 25 z = torch.tanh(x) 26 elif index == 'relu': 27 y = np.where(x >= 0, x, 0) 28 y = torch.tensor(y) 29 z = F.relu(x) 30 elif index == 'leakyrelu': 31 y = np.where(x > 0, x, x * gamma) 32 y = torch.tensor(y) 33 z = F.leaky_relu(x, gamma) 34 elif index == 'elu': 35 y = np.where(x > 0, x, gamma * (np.exp(x) - 1)) 36 y = torch.tensor(y) 37 z = F.elu(x,gamma) 38 elif index == 'swish': 39 y = x * (1 / (1 + torch.exp(-x))) 40 z = x * torch.sigmoid(x) 41 elif index == 'softmax': 42 y = torch.exp(x) / torch.exp(x).sum(dim = dim, keepdim = True) 43 z = F.softmax(x, dim = dim) 44 elif index == 'softplus': 45 y = torch.log(1 + torch.exp(x)) 46 z = F.softplus(x) 47 return y, z 48 49 torch.manual_seed(1) 50 x = torch.randn(2, 3) # 原始数据 51 print('原始数据:\n', x) 52 # activation_function()参数设置 53 index = ['sigmoid', 'tanh', 'relu', 'leakyrelu', 'elu', 'swish', 'softmax', 'softplus'] 54 gamma = 0.1 # 超参数 55 num = 4 # 小数点后保留几位 56 for idx in index: 57 y, z = activation_function(idx, x, gamma) 58 print('------------------------------------------') 59 print('激活函数为:', idx) 60 print('自己写的函数:\n', np.around(y, num)) 61 print('调用内置函数:\n', np.around(z, num)) 62 # --------------------画图------------------------ 63 # 手动设置横纵坐标范围 64 plt.xlim([-4, 4]) 65 plt.ylim([-1, 4]) 66 x = np.linspace(-4, 4, 100, endpoint=True) 67 color = ['green', 'red', 'yellow', 'cyan', 'orangered', 'dodgerblue', 'black', 'pink'] 68 ls = ['-', '-', ':', ':', ':', '-', '-', '-'] 69 for i in range(len(index)): 70 _, z = activation_function(index[i], torch.tensor(x), gamma) 71 if color[i] == 'yellow': 72 plt.plot(x, z.numpy(), color=color[i], ls=ls[i], lw = 3, label=index[i]) 73 else: 74 plt.plot(x, z.numpy(), color=color[i], ls=ls[i], label=index[i]) 75 # 添加 y = 1,x = 0 基准线 76 plt.plot([x.min(), x.max()], [1, 1], color = 'gray', ls = '--', alpha = 0.3) 77 plt.plot([0, 0], [-1, 4], color = 'gray', ls = '--', alpha = 0.3) 78 # 添加x轴和y轴标签 79 plt.xlabel('x') 80 plt.ylabel('f(x)') 81 plt.legend(ncol = 1, fontsize='small', facecolor='lavenderblush', edgecolor='black') 82 plt.tight_layout() 83 plt.savefig('Activation Functions.png', bbox_inches='tight', dpi=500) 84 plt.show()

3. 结果

D:\ProgramData\Anaconda3\python.exe "D:/Python code/2023.3 exercise/Activation Functions/activation_function_test1.py" 原始数据: tensor([[ 0.6614, 0.2669, 0.0617], [ 0.6213, -0.4519, -0.1661]]) ------------------------------------------ 激活函数为: sigmoid 自己写的函数: tensor([[0.6596, 0.5663, 0.5154], [0.6505, 0.3889, 0.4586]]) 调用内置函数: tensor([[0.6596, 0.5663, 0.5154], [0.6505, 0.3889, 0.4586]]) ------------------------------------------ 激活函数为: tanh 自己写的函数: tensor([[ 0.5793, 0.2608, 0.0616], [ 0.5520, -0.4235, -0.1646]]) 调用内置函数: tensor([[ 0.5793, 0.2608, 0.0616], [ 0.5520, -0.4235, -0.1646]]) ------------------------------------------ 激活函数为: relu 自己写的函数: tensor([[0.6614, 0.2669, 0.0617], [0.6213, 0.0000, 0.0000]]) 调用内置函数: tensor([[0.6614, 0.2669, 0.0617], [0.6213, 0.0000, 0.0000]]) ------------------------------------------ 激活函数为: leakyrelu 自己写的函数: tensor([[ 0.6614, 0.2669, 0.0617], [ 0.6213, -0.0452, -0.0166]]) 调用内置函数: tensor([[ 0.6614, 0.2669, 0.0617], [ 0.6213, -0.0452, -0.0166]]) ------------------------------------------ 激活函数为: elu 自己写的函数: tensor([[ 0.6614, 0.2669, 0.0617], [ 0.6213, -0.0364, -0.0153]]) 调用内置函数: tensor([[ 0.6614, 0.2669, 0.0617], [ 0.6213, -0.0364, -0.0153]]) ------------------------------------------ 激活函数为: swish 自己写的函数: tensor([[ 0.4362, 0.1512, 0.0318], [ 0.4042, -0.1757, -0.0762]]) 调用内置函数: tensor([[ 0.4362, 0.1512, 0.0318], [ 0.4042, -0.1757, -0.0762]]) ------------------------------------------ 激活函数为: softmax 自己写的函数: tensor([[0.4498, 0.3032, 0.2470], [0.5565, 0.1903, 0.2532]]) 调用内置函数: tensor([[0.4498, 0.3032, 0.2470], [0.5565, 0.1903, 0.2532]]) ------------------------------------------ 激活函数为: softplus 自己写的函数: tensor([[1.0775, 0.8355, 0.7245], [1.0513, 0.4925, 0.6135]]) 调用内置函数: tensor([[1.0775, 0.8355, 0.7245], [1.0513, 0.4925, 0.6135]]) Process finished with exit code 0

可以看到,自己写的激活函数与内置的结果一致。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 震惊!C++程序真的从main开始吗?99%的程序员都答错了

· 【硬核科普】Trae如何「偷看」你的代码?零基础破解AI编程运行原理

· 单元测试从入门到精通

· 上周热点回顾(3.3-3.9)

· winform 绘制太阳,地球,月球 运作规律