Meta-Learning: From MAML to MAML++

Author: 凯鲁嘎吉 - cnblogs http://www.cnblogs.com/kailugaji/

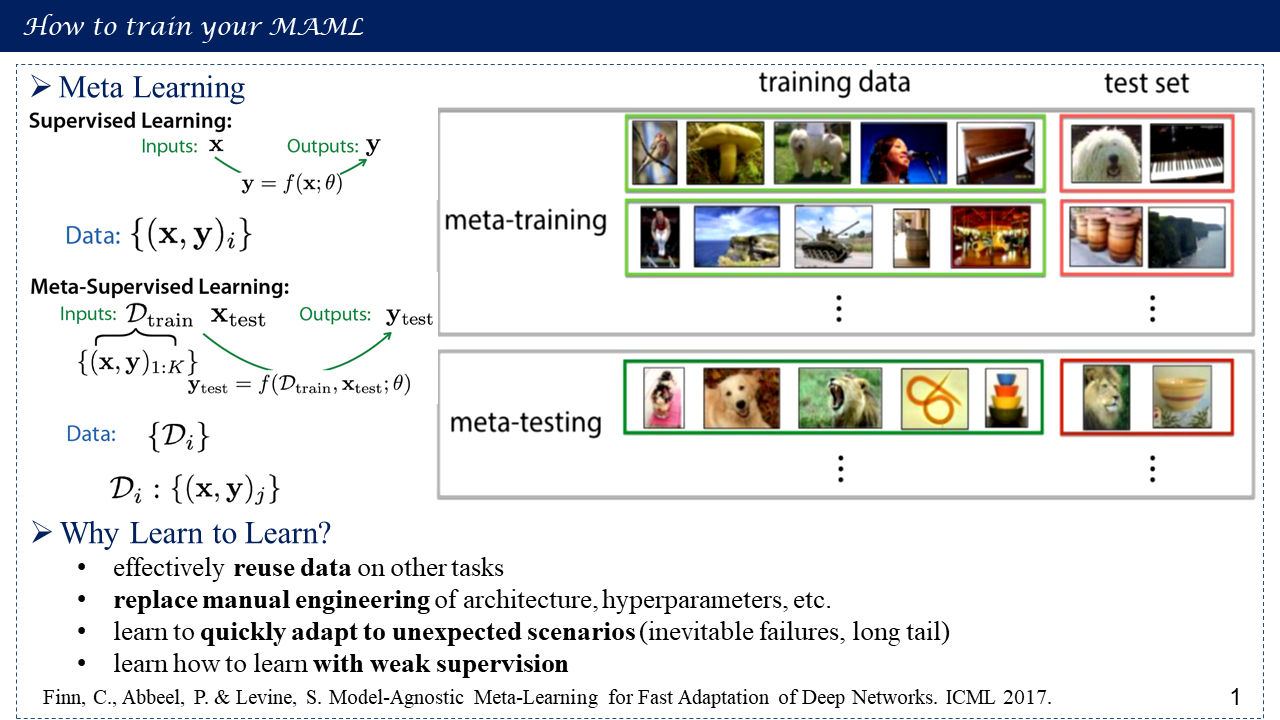

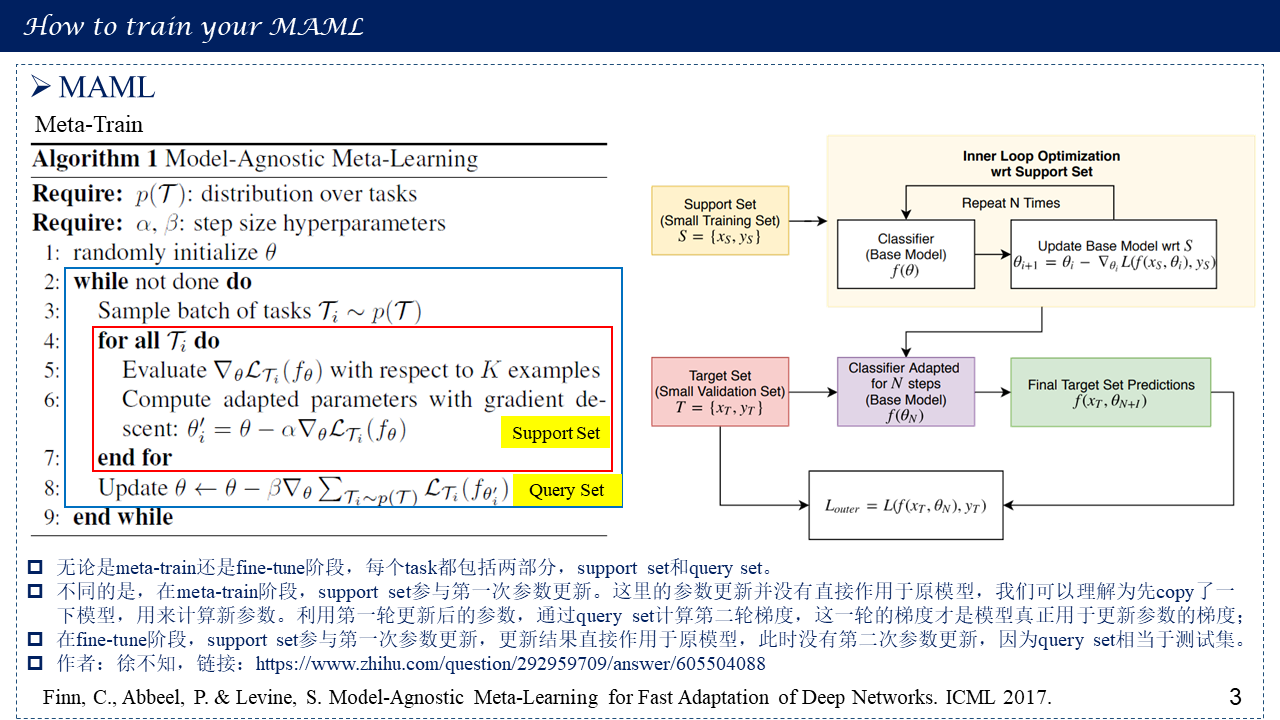

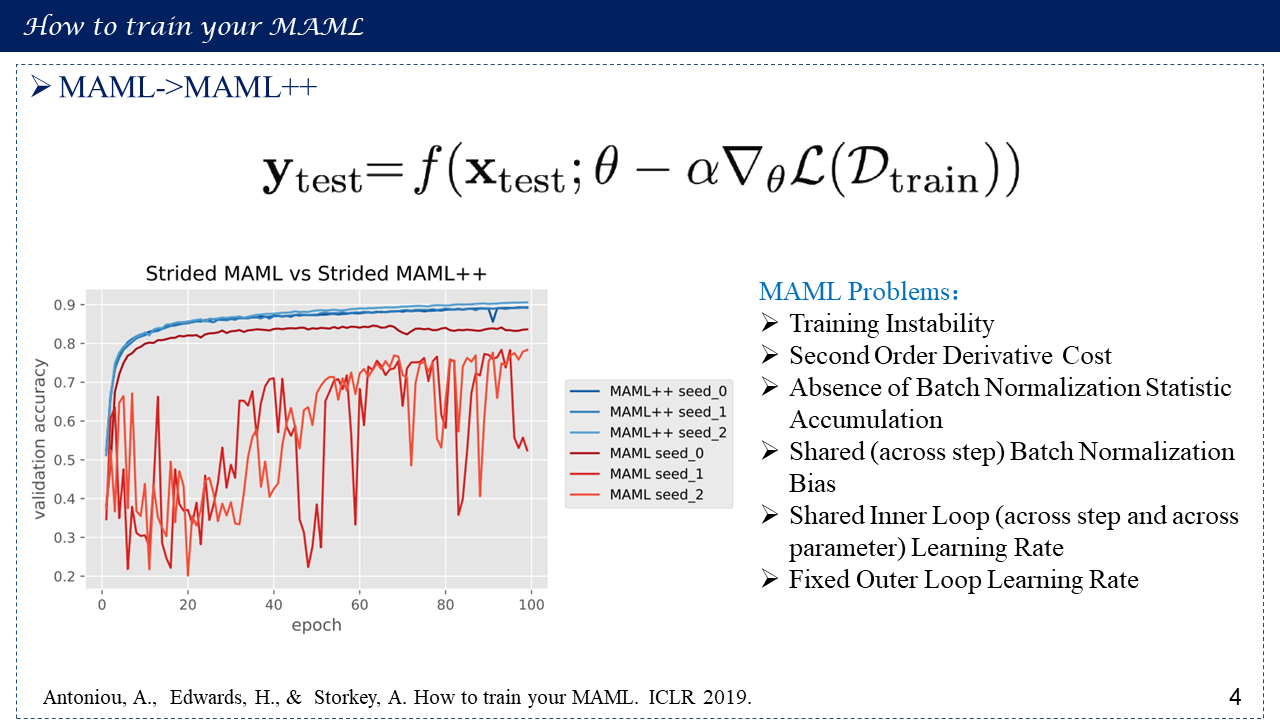

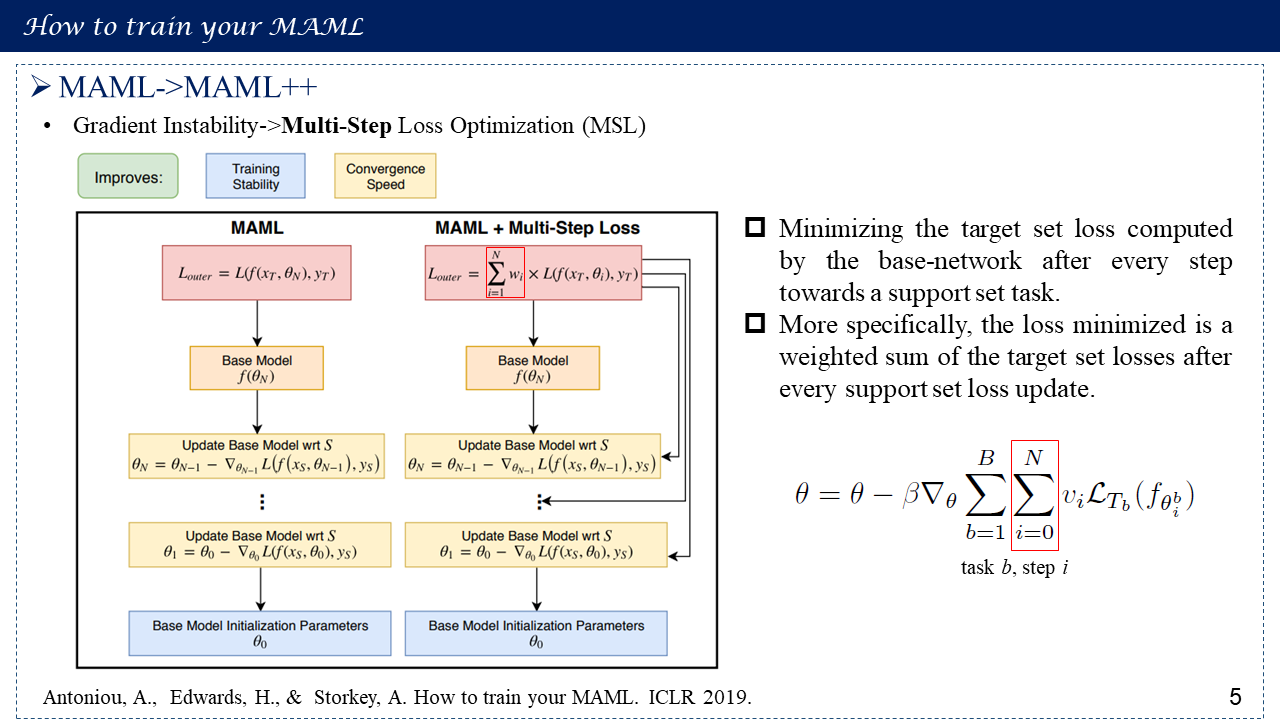

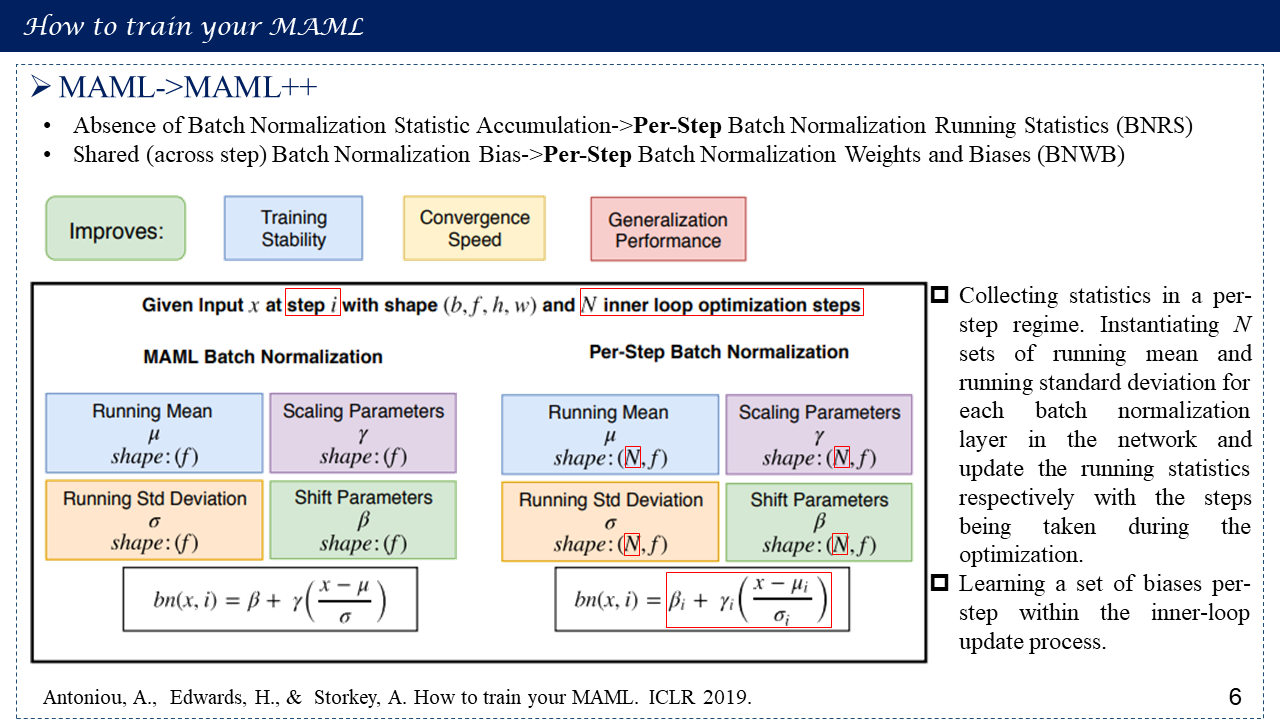

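Few-shot learning has made substantial progress recently, and much of that progress comes from framing few-shot learning as a meta-learning problem. Model-Agnostic Meta-Learning (MAML) is currently one of the best approaches to few-shot learning via meta-learning. MAML is simple, elegant, and powerful, but it has a number of problems:

- it is very sensitive to the neural network architecture;
- it often makes training unstable;
- it needs laborious hyperparameter search to stabilize training and achieve high generalization;
- it is computationally expensive at both training and inference time.

The paper "How to train your MAML" proposes several modifications to MAML. These changes stabilize training and substantially improve MAML's generalization performance and convergence speed, while also reducing its computational overhead. The resulting method is called MAML++.

This post covers the following, in order:

1. What meta-learning is.
2. The definition and training procedure of classic Model-Agnostic Meta-Learning.
3. The shortcomings and challenges MAML faces.
4. The corresponding modifications that produce MAML++.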

1. Meta-Learning (Learning to Learn)

2. Black-Box Adaptation vs Optimization-Based Approaches

3. MAML

MAML Computation Graph
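The MAML update from [1] can be made concrete with a minimal sketch built on toy assumptions:

- Each task is a 1-D linear regression $y = a x$, with a task-specific slope $a \sim U(1, 3)$.
- The model is $f_\theta(x) = \theta x$.
- There is a single inner gradient step.

For each task $\mathcal{T}_i$, MAML first adapts the shared initialization on the task's support set. It then updates the initialization using the query-set loss of the adapted parameters:

$$\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}^{\text{support}}_{\mathcal{T}_i}(f_\theta), \qquad \theta \leftarrow \theta - \beta \nabla_\theta \sum_i \mathcal{L}^{\text{query}}_{\mathcal{T}_i}(f_{\theta_i'})$$

The outer gradient flows back through the inner step, and this is where the second-order term comes from. Because the model is linear, the Hessian is a scalar that can be written out by hand instead of using autodiff. The helper names (`sample_task`, `maml_meta_gradient`, `train_maml`) and all hyperparameters are hypothetical and are not taken from the official code.

```python
import random


def mse(w, xs, ys):
    """Mean squared error of the scalar linear model f(x) = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """Derivative of mse(w, xs, ys) with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def sample_task(rng, k=5):
    """Hypothetical toy task family: y = a * x with slope a ~ U(1, 3).

    Returns a (support, query) pair of K-shot datasets from the same task.
    """
    a = rng.uniform(1.0, 3.0)

    def draw():
        xs = [rng.uniform(-1.0, 1.0) for _ in range(k)]
        return xs, [a * x for x in xs]

    return draw(), draw()


def maml_meta_gradient(w, support, query, alpha):
    """Second-order MAML meta-gradient for one task and one inner step."""
    xs_s, ys_s = support
    xs_q, ys_q = query
    # Inner loop: adapt the shared initialization w on the support set.
    w_adapted = w - alpha * mse_grad(w, xs_s, ys_s)
    # Backpropagate through the inner step: dw'/dw = 1 - alpha * d2L/dw2.
    # For this linear model the Hessian is the constant 2 * mean(x^2).
    hessian = 2 * sum(x * x for x in xs_s) / len(xs_s)
    dadapted_dw = 1 - alpha * hessian
    # Outer loss: query-set loss at the adapted weight, chained back to w.
    return mse_grad(w_adapted, xs_q, ys_q) * dadapted_dw


def train_maml(steps=2000, meta_batch=8, alpha=0.1, beta=0.01, seed=0):
    """Meta-train the shared initialization w with plain SGD (hypothetical settings)."""
    rng = random.Random(seed)
    w = 0.0  # shared initialization (theta)
    for _ in range(steps):
        grads = [maml_meta_gradient(w, *sample_task(rng), alpha)
                 for _ in range(meta_batch)]
        w -= beta * sum(grads) / meta_batch  # outer (meta) update
    return w
```

Calling `train_maml()` should move `w` toward about 2, the mean slope of the task family. For this toy family, that initialization minimizes the expected query loss after one adaptation step. Replacing `dadapted_dw` with 1 drops the second-order term and gives the first-order approximation discussed in [1].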

4. MAML Problems

5. MAML++

MAML with Multi-Step Loss Computation Graph
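The multi-step loss (MSL) in [2] changes what MAML optimizes in the outer loop:

- Plain MAML uses only the query loss after the final inner step.
- MSL instead uses a weighted sum of the query losses after every inner step $j = 1, \dots, N$. Earlier steps therefore also receive a direct gradient signal.
- The per-step weights $v_j$ are annealed over training so that the final step dominates later on.

$$\theta \leftarrow \theta - \beta \nabla_\theta \sum_{i} \sum_{j=1}^{N} v_j \, \mathcal{L}^{\text{query}}_{\mathcal{T}_i}(f_{\theta_j^{(i)}})$$

The sketch below reuses a toy linear model ($y = a x$, $f(x) = w x$) for a single task. The linear annealing in the hypothetical `msl_weights` helper and its `min_weight` floor are assumptions made for illustration. The schedule in the official MAML++ code may differ in detail.

```python
def mse(w, xs, ys):
    """Mean squared error of the scalar linear model f(x) = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """Derivative of mse(w, xs, ys) with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def msl_weights(num_steps, epoch, anneal_epochs, min_weight=0.03):
    """Per-step importance weights v_1..v_N (hypothetical linear schedule).

    At epoch 0 every step gets 1/N. After anneal_epochs each non-final step
    keeps min_weight / N and the final step gets the rest. Always sums to 1.
    """
    t = min(epoch / anneal_epochs, 1.0)
    uniform = 1.0 / num_steps
    floor = min_weight / num_steps
    early = [uniform + t * (floor - uniform)] * (num_steps - 1)
    return early + [1.0 - sum(early)]


def msl_meta_gradient(w, support, query, alpha, weights):
    """Gradient of sum_j v_j * L_query(theta_j) with respect to the initialization w."""
    xs_s, ys_s = support
    xs_q, ys_q = query
    hessian = 2 * sum(x * x for x in xs_s) / len(xs_s)  # constant for f(x) = w * x
    theta, dtheta_dw = w, 1.0
    grad = 0.0
    for v in weights:  # one inner step per weight
        theta -= alpha * mse_grad(theta, xs_s, ys_s)
        dtheta_dw *= 1 - alpha * hessian  # chain rule through this inner step
        # The query loss after every step contributes, not only the last one.
        grad += v * mse_grad(theta, xs_q, ys_q) * dtheta_dw
    return grad
```

Passing `weights=[0.0] * (N - 1) + [1.0]` recovers the plain MAML objective with N inner steps.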

6. References

[1] Finn, C., Abbeel, P., & Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. ICML 2017. Code: https://github.com/cbfinn/maml, https://github.com/dragen1860/MAML-Pytorch

Finn's homepage: https://ai.stanford.edu/~cbfinn/

[2] Antoniou, A., Edwards, H., & Storkey, A. How to train your MAML. ICLR 2019. Code: https://github.com/AntreasAntoniou/HowToTrainYourMAMLPytorch

[3] How to train your MAML: A step by step approach, BayesWatch. https://www.bayeswatch.com/2018/11/30/HTYM/

[4] CS 330: Deep Multi-Task and Meta Learning http://web.stanford.edu/class/cs330/

[5] Meta-Learning: Learning to Learn Fast https://lilianweng.github.io/lil-log/2018/11/30/meta-learning.html