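Crawler: scraping cnblogs user info from RSS pages

Logic

I estimated that cnblogs has roughly 470,000 users, so the crawler generates six-digit, zero-padded IDs such as "005684" and builds a feed URL from each one.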
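A minimal sketch of the ID-to-URL step, assuming IDs are consecutive integers rendered as six digits with leading zeros. `uid_range` and `feed_url` are illustrative names; the URL pattern is the one the crawler's `url()` method uses.

```python
from typing import Iterator


def uid_range(start: int, stop: int) -> Iterator[str]:
    """Yield every uid from start to stop (inclusive) as a zero-padded six-digit string."""
    for n in range(start, stop + 1):
        yield f'{n:06d}'


def feed_url(uid: str) -> str:
    """Build the per-user RSS feed URL for a uid."""
    return 'http://feed.cnblogs.com/blog/u/' + uid + '/rss'


for uid in uid_range(5684, 5686):
    print(feed_url(uid))
```

`range` excludes its upper bound, hence `stop + 1` so that the last ID is included.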

For each URL, the crawler fetches the page, parses the XML, extracts the user fields, and stores them in the database.
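The crawler picks fields out by position (`root[0]`, `root[4][1]`, ...). A namespace-aware lookup keeps working if the element order changes. This sketch assumes the feed is an Atom document in the `http://www.w3.org/2005/Atom` namespace with `title`, `subtitle`, `id`, `updated` and `author/name`, `author/uri` elements, matching the order the positional code relies on; the sample document is made up for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional

# Assumed namespace: the feed is taken to be an Atom document
ATOM = {'atom': 'http://www.w3.org/2005/Atom'}

SAMPLE = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title type="text">Example blog</title>
  <subtitle type="text">An example subtitle</subtitle>
  <id>uuid:00000000-0000-0000-0000-000000000000;id=0</id>
  <updated>2018-03-27T00:00:00Z</updated>
  <author><name>example</name><uri>https://www.cnblogs.com/example/</uri></author>
</feed>"""


def parse_feed(doc: str, uid: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the user fields from one feed, or None if doc is not well-formed XML."""
    try:
        root = ET.fromstring(doc)
    except ET.ParseError:
        return None

    def text(path: str) -> Optional[str]:
        # find() resolves the 'atom:' prefix through the ATOM mapping
        node = root.find(path, ATOM)
        return node.text if node is not None else None

    return {
        'uid': uid,
        'uuid': text('atom:id'),
        'name': text('atom:author/atom:name'),
        'title': text('atom:title'),
        'subtitle': text('atom:subtitle'),
        'updated': text('atom:updated'),
        'uri': text('atom:author/atom:uri'),
    }
```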

Two storage options are supported: a JSON file, or inserting directly into MongoDB.
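Both storage options can sit behind one small buffer that hands each full batch to whichever sink is configured, which is roughly what `deposit()` and `deposit_threshold` do in the crawler. `BatchWriter` is a hypothetical name used only for this sketch.

```python
from typing import Callable, Dict, List

Sink = Callable[[List[Dict]], object]


class BatchWriter:
    """Collect records and pass them to `sink` in batches of `threshold`."""

    def __init__(self, sink: Sink, threshold: int = 10) -> None:
        self.sink = sink
        self.threshold = threshold
        self.buffer: List[Dict] = []

    def add(self, record: Dict) -> None:
        self.buffer.append(record)
        if len(self.buffer) >= self.threshold:
            self.flush()

    def flush(self) -> None:
        # Hand over a copy so the sink keeps its batch after the buffer is cleared
        if self.buffer:
            self.sink(list(self.buffer))
            self.buffer.clear()
```

With pymongo, `BatchWriter(collection.insert_many)` would insert each batch in a single call. Call `flush()` once at the end so a final partial batch is not lost.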

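If the response takes too long, the retry limit is exceeded, or the returned page is empty, the crawler drops that URL, rests for a moment, and moves on to the next one.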
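These discard rules can be written as a bounded retry loop. In this sketch the HTTP call is passed in as a hypothetical adapter `fetch(url) -> (status_code, body)` so the policy can be tested without the network. Timeouts and connection errors are expected to surface as exceptions, as they do with `requests.get(url, timeout=...)`. The 300-character minimum mirrors the crawler's empty-page threshold.

```python
import random
import time
from typing import Callable, Optional, Tuple

Fetch = Callable[[str], Tuple[int, str]]


def fetch_with_retries(url: str, fetch: Fetch, max_retries: int = 10,
                       min_length: int = 300,
                       pause: Callable[[], None] = lambda: time.sleep(random.randint(1, 5))
                       ) -> Optional[str]:
    """Return the page body, or None when the URL should be dropped."""
    for _ in range(max_retries):
        try:
            status, body = fetch(url)
        except Exception:  # e.g. a timeout or connection error raised by fetch
            pause()
            continue
        if status != 200:
            pause()  # back off before the next attempt
            continue
        if len(body) < min_length:
            return None  # empty page: retrying will not help
        return body
    return None  # too many attempts
```

With requests, an adapter could be `def fetch(u): r = requests.get(u, timeout=10); return r.status_code, r.text`. A bounded loop gives every URL its own attempt budget.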

With two computers running at once, the crawl took 2 days and collected public data for about 360,000 users.
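The post does not say how the ID space was divided between the two computers. One simple scheme, assumed here, is to cut the inclusive range into contiguous slices and give each machine its own `seed`/`floor` pair for `uid_gen()`. `split_range` is a hypothetical helper.

```python
from typing import List, Tuple


def split_range(lo: int, hi: int, parts: int) -> List[Tuple[int, int]]:
    """Split the inclusive range [lo, hi] into `parts` contiguous, near-equal inclusive slices."""
    total = hi - lo + 1
    size, extra = divmod(total, parts)
    slices = []
    start = lo
    for i in range(parts):
        # The first `extra` slices take one leftover ID each
        end = start + size - 1 + (1 if i < extra else 0)
        slices.append((start, end))
        start = end + 1
    return slices
```

Each bound can be formatted with `f'{lo:06d}'` before being passed as `seed` or `floor`.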

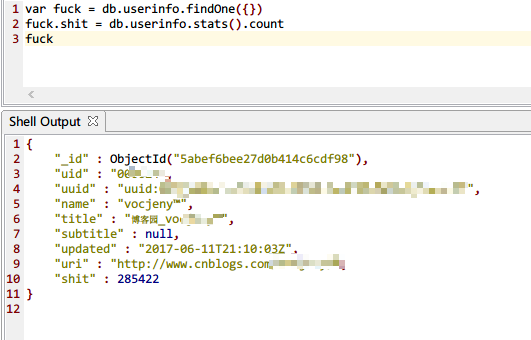

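Results

Data from one of the computers:

Code

Environment: Python 3.7, Windows 10

A database is recommended over JSON.

If you must use JSON, change the JSON storage logic so that every deposit() call closes the file object and reopens it; otherwise buffered writes may not reach the file until the program exits. Calling flush() after each deposit has the same effect without reopening the file.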
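Here is a sketch of the JSON route that follows this advice: each deposit opens the file in append mode inside a `with` block, so the file is closed, and its buffer flushed, before the call returns. It writes one JSON object per line (the JSON Lines convention) rather than one big array. This is an assumption made here so that repeated appends still leave a parseable file. `deposit_jsonl` and `load_jsonl` are hypothetical helpers.

```python
import json
from typing import Dict, List


def deposit_jsonl(path: str, users: List[Dict]) -> int:
    """Append each user as one JSON line; leaving the `with` block closes the file."""
    with open(path, mode='a', encoding='utf-8') as f:
        for user in users:
            # ensure_ascii=False keeps Chinese names readable in the file
            f.write(json.dumps(user, ensure_ascii=False) + '\n')
    return len(users)


def load_jsonl(path: str) -> List[Dict]:
    """Read every non-blank line back as a dict."""
    with open(path, encoding='utf-8') as f:
        return [json.loads(line) for line in f if line.strip()]
```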

```python
import datetime
import json
import random
import time
import xml.etree.ElementTree as ET  # cElementTree is deprecated and was removed in Python 3.9
from typing import Iterator

import requests as rq
from pymongo import MongoClient

# @author: i@unoiou.com
# @date: 2018/3/27
# @description:


class User:
    def __init__(self, uid, uuid, name, title, subtitle, updated, uri):
        self.uid = uid
        self.uuid = uuid
        self.name = name
        self.title = title
        self.subtitle = subtitle
        self.updated = updated
        self.uri = uri

    def __str__(self):
        return 'uid:%s \t name:%s \t uri:%s' % (self.uid, self.name, self.uri)

    def userj(self):
        # Plain dict, ready for json.dumps() or a MongoDB insert
        return {'uid': self.uid,
                'uuid': self.uuid,
                'name': self.name,
                'title': self.title,
                'subtitle': self.subtitle,
                'updated': self.updated,
                'uri': self.uri}


class CnblogSpider:
    def __init__(self):
        self.mongoclient = MongoClient('localhost', 27017)
        self.collection = self.mongoclient.cnblogs.userinfo
        self.json_file = open('./users.json', mode='a+', encoding='utf-8')
        self.log_file = open('./log.txt', mode='a+', encoding='utf-8')
        self.ua = [
            "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
            "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
            "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
            "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
            # ... N more UAs omitted ...
        ]
        self.headers = {
            'User-Agent': random.choice(self.ua),
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            'Accept-Language': 'en-US,en;q=0.5',
            'Connection': 'keep-alive',
            'Accept-Encoding': 'gzip, deflate',
        }
        self.max_retries = 10  # attempts per URL before it is dropped
        self.timeout = 10      # seconds; a slower response counts as a failed attempt
        self.users = []        # buffered user dicts waiting for deposit()
        self.deposit_threshold = 10

    @staticmethod
    def sleep():
        time.sleep(random.randint(1, 5))

    @staticmethod
    def url(uid):
        return 'http://feed.cnblogs.com/blog/u/' + uid + '/rss'

    @staticmethod
    def uid_gen(seed: str = '422616', floor: str = '522179') -> Iterator[str]:
        """
        generate zero-padded six-digit uids from @seed to @floor, inclusive
        :param seed: minimum
        :param floor: maximum
        :return: iterator of uid strings
        """
        for n in range(int(seed), int(floor) + 1):
            yield str(n).zfill(6)

    def parse_to_user(self, doc, uid):
        """
        extract user info from an RSS document
        :param doc: document string
        :param uid: uid
        :return: user dict, or None on error
        """
        try:
            root = ET.fromstring(doc)
            # Children in feed order: title, subtitle, id, updated, author(name, uri)
            title = root[0].text
            subt = root[1].text
            uuid = root[2].text
            updated = root[3].text
            name = root[4][0].text
            uri = root[4][1].text
            userj = User(uid, uuid, name, title, subt, updated, uri).userj()
            print('{id: %s, name: %s, uri: %s, updated: %s}' % (uid, name, uri, updated))
            return userj
        except Exception as e:
            self.log(e)
            return None

    def retry_request(self, url):
        """
        request url up to self.max_retries times
        :param url: url
        :return: response.text, or None if the user is dropped
        """
        for attempt in range(self.max_retries):
            try:
                res = rq.get(url, headers=self.headers, timeout=self.timeout)
            except rq.RequestException as e:  # timeouts, connection errors, ...
                self.log('Error:' + url + '\t' + str(e))
                self.sleep()
                continue
            if res.status_code != 200:
                self.log('Retrying...%d times left.' % (self.max_retries - attempt - 1))
                self.sleep()
                continue
            # Content-Length may be absent (e.g. chunked responses); fall back to the body size
            length = int(res.headers.get('Content-Length', len(res.content)))
            if length < 300:
                self.log('Empty:' + url)
                return None
            return res.text
        self.log('Dropped one user...' + url)
        return None

    def deposit(self, tp=2):
        """
        deposit buffered users
        :param tp: type, 1 for json file, 2 for mongodb (default)
        """
        if not self.users:
            return  # insert_many() rejects an empty list
        if tp == 1:
            # One JSON object per line keeps the file parseable across appends;
            # flush() pushes Python's write buffer to the OS without closing the file
            for user in self.users:
                self.json_file.write(json.dumps(user, ensure_ascii=False) + '\n')
            self.json_file.flush()
        elif tp == 2:
            try:
                result = self.collection.insert_many(self.users)
                self.log('deposit: ' + str(len(result.inserted_ids)))
                print('[INFO] deposited %s userinfo.' % len(result.inserted_ids))
            except Exception as e:
                self.log(e)
        else:
            return
        self.log('Inserted: ' + str(len(self.users)))
        self.users.clear()

    def log(self, msg):
        self.log_file.write(str(msg) + '\t at:' + str(datetime.datetime.now()) + '\n')

    def start(self):
        """Start spider"""
        try:
            for uid in self.uid_gen():
                text = self.retry_request(url=self.url(uid))
                if text is None:
                    continue
                userj = self.parse_to_user(text, uid)
                if userj is None:
                    continue
                self.users.append(userj)
                time.sleep(0.1)
                if len(self.users) >= self.deposit_threshold:
                    self.deposit()
            self.deposit()  # store the last partial batch
            self.log('Done')
        finally:
            # Closing the files also flushes any buffered log/JSON output
            self.json_file.close()
            self.log_file.close()
            self.mongoclient.close()
            self.collection = None


if __name__ == '__main__':
    spider = CnblogSpider()
    spider.start()
    print('Done...')
```

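Summary

- A Python file object buffers writes: the data actually reaches the file only when the buffer fills up, flush() is called, or the file is closed.

- For MongoDB full-text search, a collection can have only one text index, so every field you want to search must be included in that single index.

- Make the Spider class inherit from threading.Thread to crawl with multiple threads.

- An IP proxy can be used simply by passing it to requests.get() through the proxies argument.

- Method parameters can be given explicit type annotations.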
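For the text-index point, here is a sketch built on pymongo's `create_index` and a `$text` query. The field list (`name`, `title`, `subtitle`) and the index name `userinfo_text` are assumptions. The helpers only build the arguments, so they can be exercised without a running MongoDB.

```python
from typing import Any, Dict, Iterable, List, Tuple


def text_index_keys(fields: Iterable[str]) -> List[Tuple[str, str]]:
    """Key spec for ONE compound text index over all fields ('text' is pymongo.TEXT)."""
    return [(field, 'text') for field in fields]


def ensure_text_index(collection: Any, fields: Iterable[str]) -> str:
    # MongoDB allows at most one text index per collection, so every searchable
    # field goes into this single index rather than into separate ones.
    return collection.create_index(text_index_keys(fields), name='userinfo_text')


def text_search_filter(term: str) -> Dict[str, Dict[str, str]]:
    """Filter for Collection.find(); $text searches the collection's text index."""
    return {'$text': {'$search': term}}
```

Usage would be `ensure_text_index(MongoClient().cnblogs.userinfo, ['name', 'title', 'subtitle'])` followed by `collection.find(text_search_filter('python'))`. The server rejects an attempt to create a second text index on the same collection.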
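For the multithreading point, here is a sketch of a `threading.Thread` subclass where each worker crawls one slice of uids. `RangeWorker`, `crawl_parallel` and the `fetch_user(uid)` callback are hypothetical; `fetch_user` stands in for "request + parse" and returns a dict or None. Crawling is network-bound, so threads help even under CPython's GIL.

```python
import threading
from typing import Callable, Dict, Iterable, List, Optional

FetchUser = Callable[[str], Optional[Dict]]


class RangeWorker(threading.Thread):
    """Crawl one slice of uids in its own thread (illustrative, not the original spider)."""

    def __init__(self, uids: Iterable[str], fetch_user: FetchUser,
                 results: List[Dict], lock) -> None:
        super().__init__()
        self.uids = list(uids)
        self.fetch_user = fetch_user
        self.results = results
        self.lock = lock

    def run(self) -> None:
        # Thread.start() calls run() in the new thread
        for uid in self.uids:
            user = self.fetch_user(uid)
            if user is None:
                continue
            with self.lock:  # results is shared by all workers
                self.results.append(user)


def crawl_parallel(slices: Iterable[Iterable[str]], fetch_user: FetchUser) -> List[Dict]:
    results: List[Dict] = []
    lock = threading.Lock()
    workers = [RangeWorker(s, fetch_user, results, lock) for s in slices]
    for w in workers:
        w.start()
    for w in workers:
        w.join()  # wait until every slice is done
    return results
```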
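For the proxy point: `requests.get()` takes a `proxies` mapping from URL scheme to proxy URL. In this sketch the proxy address is a placeholder and `get_via_proxy` is a hypothetical helper. Its `get` parameter defaults to `requests.get` and can be replaced by a stub for testing.

```python
from typing import Any, Callable, Dict, Optional


def make_proxies(proxy_url: str) -> Dict[str, str]:
    """requests expects a scheme -> proxy URL mapping; send both schemes through one proxy."""
    return {'http': proxy_url, 'https': proxy_url}


def get_via_proxy(url: str, proxy_url: str,
                  get: Optional[Callable[..., Any]] = None, timeout: float = 10) -> Any:
    """Issue a GET through proxy_url; get defaults to requests.get."""
    if get is None:
        import requests  # deferred so the helper can be tested with a stub instead
        get = requests.get
    return get(url, proxies=make_proxies(proxy_url), timeout=timeout)
```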
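On type annotations: Python records them on the function but does not enforce them when the function is called. A static checker such as mypy is what would flag a mismatched call. `pad_uid` is a hypothetical helper used only to show this.

```python
def pad_uid(n: int, width: int = 6) -> str:
    """Render a numeric uid as a zero-padded string, e.g. 5684 -> '005684'."""
    return str(n).zfill(width)


# The annotations are stored on the function object...
print(pad_uid.__annotations__)
# ...but not checked at runtime: a str argument is accepted silently.
print(pad_uid('5684'))
```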

Zhejiang Public Security network filing No. 33010602011771
