Background:

I had just integrated the Flume plugin into CDH, and it reported an error on startup.

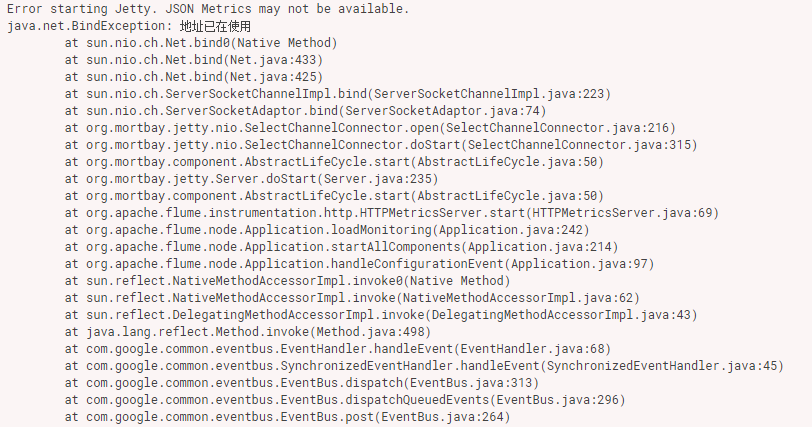

Error:

    Error starting Jetty. JSON Metrics may not be available.
    java.net.BindException: 地址已在使用 (Address already in use)
        at sun.nio.ch.Net.bind0(Native Method)
        at sun.nio.ch.Net.bind(Net.java:433)
        at sun.nio.ch.Net.bind(Net.java:425)
        at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223)
        at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)
        at org.mortbay.jetty.nio.SelectChannelConnector.open(SelectChannelConnector.java:216)
        at org.mortbay.jetty.nio.SelectChannelConnector.doStart(SelectChannelConnector.java:315)
        at org.mortbay.component.AbstractLifeCycle.start(AbstractLifeCycle.java:50)
        at org.mortbay.jetty.Server.doStart(Server.java:235)
        at org.mortbay.component.AbstractLifeCycle.start(AbstractLifeCycle.java:50)
        at org.apache.flume.instrumentation.http.HTTPMetricsServer.start(HTTPMetricsServer.java:69)
        at org.apache.flume.node.Application.loadMonitoring(Application.java:242)
        at org.apache.flume.node.Application.startAllComponents(Application.java:214)
        at org.apache.flume.node.Application.handleConfigurationEvent(Application.java:97)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at com.google.common.eventbus.EventHandler.handleEvent(EventHandler.java:68)
        at com.google.common.eventbus.SynchronizedEventHandler.handleEvent(SynchronizedEventHandler.java:45)
        at com.google.common.eventbus.EventBus.dispatch(EventBus.java:313)
        at com.google.common.eventbus.EventBus.dispatchQueuedEvents(EventBus.java:296)
        at com.google.common.eventbus.EventBus.post(EventBus.java:264)
        at org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:145)
        at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
        at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)
        at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)
        at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)

Cause:

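I never fully understood the exact cause; the error went away more or less by accident while I was working on it. What the stack trace does show is that the exception is thrown when Flume's HTTP JSON metrics server (`HTTPMetricsServer`, which runs on Jetty) tries to bind its port during a configuration reload (`PollingPropertiesFileConfigurationProvider` → `Application.handleConfigurationEvent` → `loadMonitoring`), and that port is already in use.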
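The exception in the trace is a `java.net.BindException`, raised when a listening socket cannot be bound because the address and port are already taken. As a quick diagnostic (my own addition, not part of the original fix), the sketch below checks whether a given TCP port can still be bound on the Flume host. It assumes Python 3 on the agent host and that you pass in the HTTP metrics port your agent is configured with; the function name `port_in_use` is hypothetical.

```python
import errno
import socket
import sys


def port_in_use(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if binding host:port fails with EADDRINUSE.

    This is the same kind of bind call that fails in the stack trace
    (ServerSocketChannelImpl.bind -> Net.bind), just done from Python.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # any other error (e.g. permission denied) is not "in use"
    return False  # bind succeeded; the socket is closed again on exit


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python3 check_port.py <metrics-port>
    port = int(sys.argv[1])
    print(f"port {port} in use: {port_in_use(port)}")
```

If the port turns out to be taken, a tool such as `ss -ltnp` can show which process is listening on it.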

Fix:

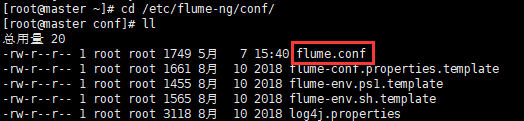

Go to the Flume configuration directory: /etc/flume-ng/conf/

Edit the file: flume.conf

Fill in the settings your own pipeline needs; the configuration I used is given at the end.

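After this, the error disappeared. (The copy of the same configuration file managed by CDH may be out of sync with this one, so check both.)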
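Since the copy of flume.conf managed by CDH may not match /etc/flume-ng/conf/flume.conf, a key-by-key comparison of the two files can help. This is a minimal sketch, assuming both files use simple `key=value` lines with `#` or `!` comment lines (Java properties line continuations, escapes and `:` separators are not handled). The helper names are hypothetical, and where you obtain the CDH-side copy depends on your deployment.

```python
from pathlib import Path


def load_properties(text: str) -> dict:
    """Parse simplified properties text (one key=value per line) into a dict."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue  # blank line or comment
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key=value line; ignored in this sketch
        props[key.strip()] = value.strip()
    return props


def diff_properties(a: dict, b: dict) -> dict:
    """Return {key: (value_in_a, value_in_b)} for every key whose values differ.

    A key missing on one side is reported as None on that side.
    """
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(a.keys() | b.keys())
        if a.get(key) != b.get(key)
    }


def diff_files(path_a: str, path_b: str) -> dict:
    """Compare two properties files on disk (UTF-8 assumed)."""
    return diff_properties(
        load_properties(Path(path_a).read_text(encoding="utf-8")),
        load_properties(Path(path_b).read_text(encoding="utf-8")),
    )
```

For example, `diff_files("/etc/flume-ng/conf/flume.conf", "flume_from_cdh.conf")` returns each differing key with its two values; the second file name is only a placeholder.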

    agent.sources = kafkaSource
    agent.channels = memoryChannel
    agent.sinks = hdfsSink
    #-------- kafkaSource settings -----------------
    agent.sources.kafkaSource.channels = memoryChannel
    # Source type
    agent.sources.kafkaSource.type=org.apache.flume.source.kafka.KafkaSource
    # Note: this is the ZooKeeper address used by Kafka
    agent.sources.kafkaSource.zookeeperConnect=192.168.52.26:2181
    # Kafka topic to consume
    agent.sources.kafkaSource.topic=AlarmHis
    agent.sources.kafkaSource.kafka.consumer.timeout.ms=100
    #------- memoryChannel settings -------------------------
    # Channel type
    agent.channels.memoryChannel.type=memory
    # Maximum number of events stored in the channel
    agent.channels.memoryChannel.capacity=10000
    # Maximum number of events per transaction
    agent.channels.memoryChannel.transactionCapacity=1000
    #--------- hdfsSink settings ------------------
    agent.sinks.hdfsSink.type=hdfs
    agent.sinks.hdfsSink.channel = memoryChannel
    # HDFS path to write to
    agent.sinks.hdfsSink.hdfs.path=hdfs://master:8020/yk/dl/alarm_his
    # File name prefix and suffix
    agent.sinks.hdfsSink.hdfs.filePrefix = AlarmHis
    agent.sinks.hdfsSink.hdfs.fileSuffix=.txt
    ## Roll to a new file every 60*60*24 = 86400 seconds (one day)
    agent.sinks.hdfsSink.hdfs.rollInterval = 86400
    ## Roll by file size in bytes; 0 disables size-based rolling (1073741824 = 1024*1024*1024 would roll at 1 GiB)
    agent.sinks.hdfsSink.hdfs.rollSize = 0
    ## Roll by number of events; 0 disables count-based rolling (5 would roll after every 5 events)
    agent.sinks.hdfsSink.hdfs.rollCount = 0
    ## Number of events written to the file before it is flushed to HDFS
    agent.sinks.hdfsSink.hdfs.batchSize = 5
    ## Use the local time instead of the timestamp in the event header
    agent.sinks.hdfsSink.hdfs.useLocalTimeStamp = true
    agent.sinks.hdfsSink.hdfs.writeFormat=Text
    # Output file type: the default is SequenceFile; DataStream writes plain text
    agent.sinks.hdfsSink.hdfs.fileType=DataStream
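The sources, channels and sinks in this file are wired together purely by name, so a typo in a name is easy to miss when filling in your own settings. The sketch below is an illustrative check of my own, not a Flume tool: it parses simple `key=value` lines and reports components without a `type`, plus sources or sinks that point at undeclared channels. It assumes the agent name `agent` used above and does not implement full Java properties syntax; the function names are hypothetical.

```python
def parse_flume_conf(text: str) -> dict:
    """Minimal parser: one key=value per line, '#' starts a comment line.

    Assumption: no line continuations, escapes or ':' separators.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def check_agent(props: dict, agent: str = "agent") -> list:
    """Return human-readable wiring problems for one agent (empty list = none found)."""
    problems = []
    # Component names declared in <agent>.sources / .channels / .sinks
    names = {
        kind: props.get(f"{agent}.{kind}", "").split()
        for kind in ("sources", "channels", "sinks")
    }
    declared_channels = set(names["channels"])

    # Every declared component needs a .type property
    for kind, components in names.items():
        for name in components:
            if f"{agent}.{kind}.{name}.type" not in props:
                problems.append(f"{kind[:-1]} '{name}' has no type")

    # A source lists its channels (space-separated) under the plural .channels
    for src in names["sources"]:
        channels = props.get(f"{agent}.sources.{src}.channels", "").split()
        if not channels:
            problems.append(f"source '{src}' has no channels")
        for ch in channels:
            if ch not in declared_channels:
                problems.append(f"source '{src}' uses undeclared channel '{ch}'")

    # A sink drains exactly one channel, set under the singular .channel
    for sink in names["sinks"]:
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch not in declared_channels:
            problems.append(f"sink '{sink}' uses undeclared channel {ch!r}")
    return problems
```

With one setting per line, the configuration above declares `kafkaSource`, `memoryChannel` and `hdfsSink`, gives each a `type`, and points both the source and the sink at `memoryChannel`, so `check_agent` should return an empty list for it.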