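Traditional deployment methods

1. Copying files by hand with scp

2. Logging in by hand and running git pull or svn update

3. Uploading by hand with Xftp

4. Developers hand over an archive, which is uploaded with rz and then unpacked

Drawbacks of traditional deployment:

1. Operations staff are involved throughout, which takes up a lot of time

2. Releases are slow

3. Human error is common and management is chaotic

4. Rollbacks are slow and come too late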

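When a new project goes live, planning comes first

Banks generally do not provide test interfaces. E-commerce companies, for example, often test payments by setting a product's price to 1 fen (0.01 yuan) and making it visible only to specific accounts.

Environment planning

1. Development environment: each developer has a local environment of their own. In addition, operations sets up a shared development environment that hosts the common services, such as the development MySQL database, Redis, and Memcached.

2. Test environment: a functional test environment and a performance test environment

3. Pre-production environment: usually one node of the production environment can fill this role

4. Production environment: the environment that serves users directly

Why a pre-production environment is needed:

1. Database differences: the test and production databases are never the same.

2. It uses the production integration interfaces, for example the payment interface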

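Deployment:

1. Where the code lives: svn, git

2. Which version of the code to fetch?

svn and git: pull a given branch directly

svn: specify a revision number

git: specify a tag

3. Resolving differences:

(1) Differences between nodes

(2) Differences between the code repository and what is actually deployed. Are the configuration files kept in the repository?

(3) Configuration files are not necessarily identical, e.g. crontab.xml on the pre-production node

4. How to update. Java on Tomcat needs a restart.

5. Testing.

6. Serial or parallel; deploying in groups

7. How to execute: (1) a shell script; (2) a web interface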
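
Item 6 (serial versus parallel, deploying in groups) can be illustrated in shell. The following is only a sketch and not part of the original script: `deploy_in_groups`, `GROUP_SIZE`, the third node address, and the `deploy_one` placeholder are all assumptions made for the example. Nodes inside a group run in parallel, and groups run one after another.

```shell
#!/bin/bash
# Hypothetical sketch: roll out to nodes group by group.
NODE_LIST="192.168.58.11 192.168.58.12 192.168.58.13"   # example addresses
GROUP_SIZE=2                                            # assumed group size

# Placeholder for the real per-node work (copy, unpack, switch symlink).
deploy_one(){
    echo "deploy $1"
}

deploy_in_groups(){
    local count=0 group=0 node
    for node in $NODE_LIST; do
        if [ $((count % GROUP_SIZE)) -eq 0 ]; then
            group=$((group + 1))
            echo "group ${group}"
        fi
        deploy_one "$node" &          # nodes inside a group run in parallel
        count=$((count + 1))
        if [ $((count % GROUP_SIZE)) -eq 0 ]; then
            wait                      # finish this group before starting the next
        fi
    done
    wait                              # wait for a final, partially filled group
}
```

Setting `GROUP_SIZE=1` gives a fully serial rollout; setting it to the number of nodes gives a fully parallel one.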

About the differing files:

Environment preparation

System version

    [root@linux-node1 ~]# cat /etc/redhat-release
    CentOS Linux release 7.1.1503 (Core)
    [root@linux-node1 ~]# uname -rm
    3.10.0-229.el7.x86_64 x86_64
    [root@linux-node1 ~]#

Hostnames and IP addresses

node1:

    [root@linux-node1 ~]# hostname
    linux-node1.nmap.com
    [root@linux-node1 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.56.11 linux-node1 linux-node1.nmap.com
    192.168.56.12 linux-node2 linux-node2.nmap.com
    [root@linux-node1 ~]#

node2:

    [root@linux-node2 ~]# hostname
    linux-node2.nmap.com
    [root@linux-node2 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.56.11 linux-node1 linux-node1.nmap.com
    192.168.56.12 linux-node2 linux-node2.nmap.com
    [root@linux-node2 ~]#

There are two web servers, node1 and node2. node1 also acts as the deployment and distribution server that manages the web packages on both nodes.

Add an ordinary user www on both nodes as the account that manages the web service.

    [root@linux-node1 scripts]# useradd -u 1001 www
    [root@linux-node1 scripts]# id www
    uid=1001(www) gid=1001(www) groups=1001(www)
    [root@linux-node1 scripts]#

    [root@linux-node2 ~]# useradd -u 1001 www
    [root@linux-node2 ~]# id www
    uid=1001(www) gid=1001(www) groups=1001(www)
    [root@linux-node2 ~]#

Configure key-based authentication so that the www user can log in to the other machines without a password. From now on, www is the account used to manage the other machines.

    [root@linux-node1 .ssh]# su - www
    [www@linux-node1 ~]$ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/www/.ssh/id_rsa):
    Created directory '/home/www/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/www/.ssh/id_rsa.
    Your public key has been saved in /home/www/.ssh/id_rsa.pub.
    The key fingerprint is:
    70:37:ff:d0:17:e0:74:1d:c9:04:28:bb:de:ec:1f:7f www@linux-node1.nmap.com
    The key's randomart image is:
    +--[ RSA 2048]----+
    | .++++|
    | . .o oo.|
    | . . = . . |
    | o o o . .|
    | S . o . .|
    | . o . |
    | . o .. |
    | . o o E|
    | .... ..|
    +-----------------+
    [www@linux-node1 ~]$

View the public key

    [www@linux-node1 ~]$ cd .ssh/
    [www@linux-node1 .ssh]$ ll
    total 8
    -rw------- 1 www www 1679 Apr 5 03:41 id_rsa
    -rw-r--r-- 1 www www 406 Apr 5 03:41 id_rsa.pub
    [www@linux-node1 .ssh]$ cat id_rsa.pub
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDcZh8EEk2/rS6B/tLHnRpZGrGIJYFHg7zRFvuT3N9jvOFhYJdWv+8WSQuT0pvxNM4eR0N5Ma9wVvKPo/lVjCaFK+M0dENJVhi6m9OKMtoo2ujvvuyinNPP4pyoK6ggG5jOlEkHoLcbWCRG/j3pN1rZYV+1twET9xi2IA4UQkgPvKKYWjq7NUR0v5BWsgEQt7VvjcLWTlltTVeGb3FDVKIjDnioIBmLmVwJS64N+GGgAj5YQ+bKHTwYanEMD39JGKxo0RXTZB5sa734yfNjc3hTZXB4RCcGdzgcMJs/Rt5VeZ277zF86xr4Hd5cioAbV6Y1RvELjmpvrqUUz3tcaKId www@linux-node1.nmap.com
    [www@linux-node1 .ssh]$

Add node1's public key on node2 as well

The file must be set to mode 600 before login works

    [www@linux-node2 ~]$ cd .ssh/
    [www@linux-node2 .ssh]$ vim authorized_keys
    [www@linux-node2 .ssh]$ cat authorized_keys
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDcZh8EEk2/rS6B/tLHnRpZGrGIJYFHg7zRFvuT3N9jvOFhYJdWv+8WSQuT0pvxNM4eR0N5Ma9wVvKPo/lVjCaFK+M0dENJVhi6m9OKMtoo2ujvvuyinNPP4pyoK6ggG5jOlEkHoLcbWCRG/j3pN1rZYV+1twET9xi2IA4UQkgPvKKYWjq7NUR0v5BWsgEQt7VvjcLWTlltTVeGb3FDVKIjDnioIBmLmVwJS64N+GGgAj5YQ+bKHTwYanEMD39JGKxo0RXTZB5sa734yfNjc3hTZXB4RCcGdzgcMJs/Rt5VeZ277zF86xr4Hd5cioAbV6Y1RvELjmpvrqUUz3tcaKId www@linux-node1.nmap.com
    [www@linux-node2 .ssh]$ chmod 600 authorized_keys
    [www@linux-node2 .ssh]$
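
The permission requirement comes from sshd: with StrictModes enabled (the default), it ignores keys when `~/.ssh` or `authorized_keys` is writable by other users. The manual steps above could be wrapped in a small helper. This is a sketch only: `install_pubkey` and its arguments are hypothetical names, and it takes the home directory as a parameter so it can be tried against a scratch directory.

```shell
#!/bin/bash
# Hypothetical helper: append a public key to <home>/.ssh/authorized_keys
# with the permissions sshd expects (700 on .ssh, 600 on the file).
install_pubkey(){
    local home_dir=$1 pubkey_file=$2
    local ssh_dir="${home_dir}/.ssh"
    local auth_file="${ssh_dir}/authorized_keys"

    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    touch "$auth_file" && chmod 600 "$auth_file" || return 1
    # Append only if this exact key line is not already present.
    grep -qxF -f "$pubkey_file" "$auth_file" || cat "$pubkey_file" >> "$auth_file"
}
```

Run as the www user on the target node, a call might look like `install_pubkey /home/www /tmp/id_rsa.pub`, where the second path is wherever the public key was copied to.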

Login test: success

    [www@linux-node1 .ssh]$ ssh 192.168.58.12
    Last login: Mon Apr 10 00:31:23 2017 from 192.168.58.11
    [www@linux-node2 ~]$

Let the www user on node1 ssh to node1 itself without a password as well.

Add the public key on node1

    [www@linux-node1 .ssh]$ ll
    total 12
    -rw------- 1 www www 1679 Apr 5 03:41 id_rsa
    -rw-r--r-- 1 www www 406 Apr 5 03:41 id_rsa.pub
    -rw-r--r-- 1 www www 175 Apr 5 03:43 known_hosts
    [www@linux-node1 .ssh]$ vim authorized_keys
    [www@linux-node1 .ssh]$ chmod 600 authorized_keys
    [www@linux-node1 .ssh]$ ssh 192.168.58.11
    The authenticity of host '192.168.58.11 (192.168.58.11)' can't be established.
    ECDSA key fingerprint is 8b:4e:2f:cd:37:89:02:60:3c:99:9f:c6:7a:5a:29:14.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '192.168.58.11' (ECDSA) to the list of known hosts.
    Last login: Wed Apr 5 03:40:47 2017
    [www@linux-node1 ~]$ exit
    logout
    Connection to 192.168.58.11 closed.
    [www@linux-node1 .ssh]$ ssh 192.168.58.11
    Last login: Wed Apr 5 03:46:21 2017 from 192.168.58.11
    [www@linux-node1 ~]$

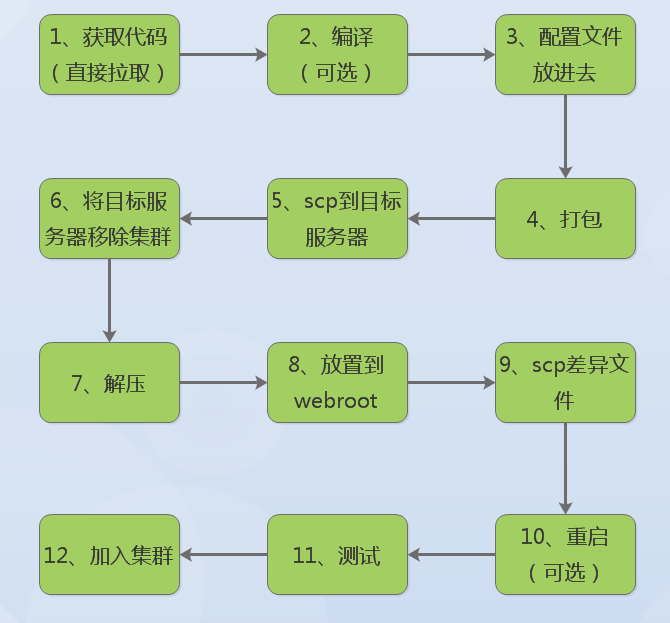

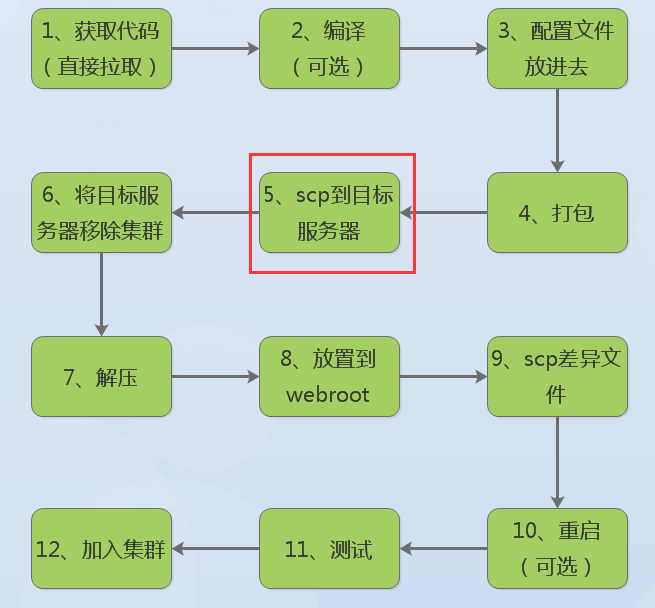

Following the flowcharts above, first write out the overall skeleton

    [root@linux-node1 ~]# mkdir /scripts -p
    [root@linux-node1 ~]# cd /scripts/
    [root@linux-node1 scripts]# vim deploy.sh
    [root@linux-node1 scripts]# chmod +x deploy.sh
    [root@linux-node1 scripts]# ./deploy.sh
    Usage: ./deploy.sh [ deploy | rollback ]
    [root@linux-node1 scripts]# cat deploy.sh
    #!/bin/bash

    #Shell Env
    SHELL_NAME="deploy.sh"
    SHELL_DIR="/home/www"
    SHELL_LOG="${SHELL_DIR}/${SHELL_NAME}.log"

    #Code Env
    CODE_DIR="/deploy/code/deploy"
    CONFIG_DIR="/deploy/config"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"

    usage(){
        echo $"Usage: $0 [ deploy | rollback ]"
    }

    code_get(){
        echo code_get
    }

    code_build(){
        echo code_build
    }

    code_config(){
        echo code_config
    }

    code_tar(){
        echo code_tar
    }

    code_scp(){
        echo code_scp
    }

    cluster_node_remove(){
        echo cluster_node_remove
    }

    code_deploy(){
        echo code_deploy
    }

    config_diff(){
        echo config_diff
    }

    code_test(){
        echo code_test
    }

    cluster_node_in(){
        echo cluster_node_in
    }

    rollback(){
        echo rollback
    }

    main(){
        case $1 in
            deploy)
                code_get;
                code_build;
                code_config;
                code_tar;
                code_scp;
                cluster_node_remove;
                code_deploy;
                config_diff;
                code_test;
                cluster_node_in;
                ;;
            rollback)
                rollback;
                ;;
            *)
                usage;
        esac
    }
    main $1
    [root@linux-node1 scripts]#

    main(){
        DEPLOY_METHOD=$1
        case $DEPLOY_METHOD in
            deploy)
                code_get;
                code_build;
                code_config;
                code_tar;
                code_scp;
                cluster_node_remove;
                code_deploy;
                config_diff;
                code_test;
                cluster_node_in;
                ;;

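Continue improving the script: add logging and a lock

1. A script that keeps no log is simply irresponsible: at which step did it fail?

2. May several people run the script at the same time? (Preferably not.) If concurrent runs are not allowed, use a lock.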
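
The first point can be made concrete with a step runner that logs each step and stops at the first failure, so the log shows where a run broke. This is a sketch under assumptions: `run_steps` and `STEP_LOG` are hypothetical names and do not appear in the original script, which calls its functions one after another inside `main`.

```shell
#!/bin/bash
# Hypothetical sketch: run steps in order, log each one, stop at the first failure.
STEP_LOG="${STEP_LOG:-/tmp/deploy-steps.log}"   # assumed log location

run_steps(){
    local step
    for step in "$@"; do
        echo "$(date '+%Y-%m-%d %H:%M:%S') start ${step}" >> "$STEP_LOG"
        if ! "$step"; then
            # Record the failing step and skip everything after it.
            echo "$(date '+%Y-%m-%d %H:%M:%S') FAILED ${step}" >> "$STEP_LOG"
            return 1
        fi
    done
}
```

A deploy branch could then read `run_steps code_get code_build code_config`, provided each step function returns a non-zero status on failure.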

Lock files are usually kept in the following directory

    [root@linux-node1 ~]# cd /var/run/lock/
    [root@linux-node1 lock]# ls
    iscsi lockdev lvm ppp subsys
    [root@linux-node1 lock]#

We could create a dedicated directory there for the script, but because of permissions that would mean changing its ownership, so we use the /tmp directory instead

Before the main function does any work it should check whether the lock file exists, and it should create the lock file while it runs

Since the lock file is used in two places, it is worth turning into a variable

The new script follows; the main addition is the lock

    [root@linux-node1 scripts]# cat deploy.sh
    #!/bin/bash

    #Shell Env
    SHELL_NAME="deploy.sh"
    SHELL_DIR="/home/www"
    SHELL_LOG="${SHELL_DIR}/${SHELL_NAME}.log"

    #Code Env
    CODE_DIR="/deploy/code/deploy"
    CONFIG_DIR="/deploy/config"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"
    LOCK_FILE="/tmp/deploy.lock"

    usage(){
        echo $"Usage: $0 [ deploy | rollback ]"
    }

    shell_lock(){
        touch ${LOCK_FILE}
    }

    shell_unlock(){
        rm -f ${LOCK_FILE}
    }

    code_get(){
        echo code_get
        sleep 60;
    }

    code_build(){
        echo code_build
    }

    code_config(){
        echo code_config
    }

    code_tar(){
        echo code_tar
    }

    code_scp(){
        echo code_scp
    }

    cluster_node_remove(){
        echo cluster_node_remove
    }

    code_deploy(){
        echo code_deploy
    }

    config_diff(){
        echo config_diff
    }

    code_test(){
        echo code_test
    }

    cluster_node_in(){
        echo cluster_node_in
    }

    rollback(){
        echo rollback
    }

    main(){
        if [ -f ${LOCK_FILE} ];then
            echo "Deploy is running" && exit;
        fi
        DEPLOY_METHOD=$1
        case $DEPLOY_METHOD in
            deploy)
                shell_lock;
                code_get;
                code_build;
                code_config;
                code_tar;
                code_scp;
                cluster_node_remove;
                code_deploy;
                config_diff;
                code_test;
                cluster_node_in;
                shell_unlock;
                ;;
            rollback)
                shell_lock;
                rollback;
                shell_unlock;
                ;;
            *)
                usage;
        esac
    }
    main $1
    [root@linux-node1 scripts]#

Run it once first to check for syntax errors

    [root@linux-node1 scripts]# ./deploy.sh deploy
    code_get
    code_build
    code_config
    code_tar
    code_scp
    cluster_node_remove
    code_deploy
    config_diff
    code_test
    cluster_node_in
    [root@linux-node1 scripts]# ./deploy.sh rollback
    rollback

Add a sleep to test the lock

Add a sleep to one of the functions, then check whether someone else can run the script while it is still executing

    code_get(){
        echo code_get
        sleep 60;
    }

Run the script

    [root@linux-node1 scripts]# ./deploy.sh deploy
    code_get

While it is sleeping, a second terminal is refused for both deploy and rollback:

    [root@linux-node1 scripts]# ./deploy.sh deploy
    Deploy is running
    [root@linux-node1 scripts]# ./deploy.sh deploy
    Deploy is running
    [root@linux-node1 scripts]# ./deploy.sh rollback
    Deploy is running
    [root@linux-node1 scripts]#
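
The check-then-touch lock works for the test above, but it leaves a small window in which two runs can both pass the `-f` test, and an interrupted run leaves the lock file behind. One possible refinement, shown here only as a sketch, relies on `mkdir` being atomic. `LOCK_DIR` and its path are assumptions; the original script uses `LOCK_FILE="/tmp/deploy.lock"`.

```shell
#!/bin/bash
# Hypothetical variant of shell_lock/shell_unlock using an atomic mkdir.
LOCK_DIR="${LOCK_DIR:-/tmp/deploy.lock.d}"   # assumed path, not from the original

shell_lock(){
    # mkdir either creates the directory or fails, so check and create are one step.
    if mkdir "$LOCK_DIR" 2>/dev/null; then
        return 0
    fi
    echo "Deploy is running"
    return 1
}

shell_unlock(){
    rmdir "$LOCK_DIR" 2>/dev/null
}
```

In `main`, this would be used as `shell_lock || exit 1` followed by `trap shell_unlock EXIT`, so the lock is released even when a step aborts the script.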

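Add logging

Logging is really just echoing a line into a log file, but adding an echo to every function would be crude

Can we write a log function that adds a timestamp? It could then be copied into other scripts later

    [www@linux-node1 scripts]$ date "+%Y-%m-%d"
    2017-04-23
    [www@linux-node1 scripts]$ date "+%H-%M-%S"
    22-10-34
    [www@linux-node1 scripts]$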

    #!/bin/bash

    # Date/Time Variables
    CDATE=$(date "+%Y-%m-%d")
    CTIME=$(date "+%H-%M-%S")

    #Shell Env
    SHELL_NAME="deploy.sh"
    SHELL_DIR="/home/www"
    SHELL_LOG="${SHELL_DIR}/${SHELL_NAME}.log"

    #Code Env
    CODE_DIR="/deploy/code/deploy"
    CONFIG_DIR="/deploy/config"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"
    LOCK_FILE="/tmp/deploy.lock"

That will not do for logging, though: every later log entry would carry the same time

It can be changed as follows, so that the command is stored but not executed at assignment

    #!/bin/bash

    # Date/Time Variables
    CDATE='date "+%Y-%m-%d"'
    CTIME='date "+%H-%M-%S"'

    #Shell Env
    SHELL_NAME="deploy.sh"
    SHELL_DIR="/home/www"
    SHELL_LOG="${SHELL_DIR}/${SHELL_NAME}.log"

    #Code Env
    CODE_DIR="/deploy/code/deploy"
    CONFIG_DIR="/deploy/config"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"
    LOCK_FILE="/tmp/deploy.lock"

The timestamp is also used to name the package, so a fixed, unchanging time is still needed for packaging

because the exact package name must be known when unpacking and when running scp.

Two kinds of timestamp are therefore used: LOG_DATE and LOG_TIME hold the command unexecuted, while CDATE and CTIME are executed once at startup

    #!/bin/bash

    # Date/Time Variables
    LOG_DATE='date "+%Y-%m-%d"'
    LOG_TIME='date "+%H-%M-%S"'

    CDATE=$(date "+%Y-%m-%d")
    CTIME=$(date "+%H-%M-%S")

    #Shell Env
    SHELL_NAME="deploy.sh"
    SHELL_DIR="/home/www"
    SHELL_LOG="${SHELL_DIR}/${SHELL_NAME}.log"

    #Code Env
    CODE_DIR="/deploy/code/deploy"
    CONFIG_DIR="/deploy/config"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"

With single quotes the variable holds the command text itself:

    [root@linux-node1 ~]# LOG_DATE='date "+%Y-%m-%d"'
    [root@linux-node1 ~]# LOG_TIME='date "+%H-%M-%S"'
    [root@linux-node1 ~]# echo $LOG_DATE
    date "+%Y-%m-%d"
    [root@linux-node1 ~]# echo $LOG_TIME
    date "+%H-%M-%S"
    [root@linux-node1 ~]#

eval runs the stored command when the value is needed:

    [root@linux-node1 ~]# eval $LOG_TIME
    22-21-05
    [root@linux-node1 ~]# eval $LOG_DATE
    2017-04-23
    [root@linux-node1 ~]#

    [root@linux-node1 ~]# eval $LOG_DATE && eval $LOG_TIME
    2017-04-23
    22-22-48
    [root@linux-node1 ~]#

A single combined date-and-time variable can also be defined (not used here, but it works)

    [root@linux-node1 ~]# D_T='date "+%Y-%m-%d-%H-%M-%S"'
    [root@linux-node1 ~]# echo $D_T
    date "+%Y-%m-%d-%H-%M-%S"
    [root@linux-node1 ~]# eval $D_T
    2017-04-26-19-33-01
    [root@linux-node1 ~]#

    usage(){
        echo $"Usage: $0 [ deploy | rollback ]"
    }

    writelog(){
        LOGINFO=$1
        echo "${CDATE} ${CTIME}: ${SHELL_NAME} : ${LOGINFO}" >> ${SHELL_LOG}
    }

    shell_lock(){
        touch ${LOCK_FILE}
    }

    shell_unlock(){
        rm -f ${LOCK_FILE}
    }
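
As written, `writelog` stamps every entry with `CDATE` and `CTIME`, which are captured once when the script starts. If a per-entry time is wanted, which is what the `LOG_DATE`/`LOG_TIME` discussion is aiming at, one option is to call `date` inside the function. The variant below is a sketch, not necessarily what the author ended up with; the `/tmp` default for `SHELL_LOG` is an assumption made so the example runs anywhere.

```shell
#!/bin/bash
# Sketch: evaluate the timestamp at call time instead of at script start.
SHELL_NAME="deploy.sh"
SHELL_LOG="${SHELL_LOG:-/tmp/${SHELL_NAME}.log}"   # assumed location for this sketch

writelog(){
    local loginfo=$1
    # date runs on every call, so each entry gets its own time.
    echo "$(date '+%Y-%m-%d') $(date '+%H-%M-%S'): ${SHELL_NAME} : ${loginfo}" >> "${SHELL_LOG}"
}
```

Calling `date` directly also avoids `eval`, at the cost of no longer keeping the format string in a variable.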

    shell_lock(){
        touch ${LOCK_FILE}
    }

    shell_unlock(){
        rm -f ${LOCK_FILE}
    }

    code_get(){
        writelog code_get;
    }

    code_build(){
        echo code_build
    }

    shell_unlock(){
        rm -f ${LOCK_FILE}
    }

    code_get(){
        writelog "code_get";
    }

    code_build(){
        echo code_build
    }

    [root@linux-node1 scripts]# mkdir /deploy/config -p
    [root@linux-node1 scripts]# mkdir /deploy/tmp -p
    [root@linux-node1 scripts]# mkdir /deploy/tar -p
    [root@linux-node1 scripts]# mkdir /deploy/code -p
    [root@linux-node1 scripts]# cd /deploy/
    [root@linux-node1 deploy]# ll
    total 0
    drwxr-xr-x 2 root root 6 Apr 23 22:37 code
    drwxr-xr-x 2 root root 6 Apr 23 22:37 config
    drwxr-xr-x 2 root root 6 Apr 23 22:37 tar
    drwxr-xr-x 2 root root 6 Apr 23 22:37 tmp
    [root@linux-node1 deploy]#

    [root@linux-node1 deploy]# cd code/
    [root@linux-node1 code]# mkdir web-demo -p
    [root@linux-node1 code]# cd ..
    [root@linux-node1 deploy]# tree
    .
    ├── code
    │   └── web-demo
    ├── config
    ├── tar
    └── tmp

    5 directories, 0 files
    [root@linux-node1 deploy]#

Modify the script

    #Shell Env
    SHELL_NAME="deploy.sh"
    SHELL_DIR="/home/www"
    SHELL_LOG="${SHELL_DIR}/${SHELL_NAME}.log"

    #Code Env
    CODE_DIR="/deploy/code/web-demo"
    CONFIG_DIR="/deploy/config"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"
    LOCK_FILE="/tmp/deploy.lock"

    usage(){
        echo $"Usage: $0 [ deploy | rollback ]"
    }

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && git pull
    }

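Do not put configuration files in this directory; it is used only for updating with git pull. Otherwise it becomes hard to tell whether a configuration file came from the repository or was downloaded separately (best practice)

In the plan, git pull is run in this directory only, and the code is then copied to the temporary directory

    TMP_DIR="/deploy/tmp"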

Continue refining the function that fetches the code

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && git pull
        cp -r ${CODE_DIR} ${TMP_DIR}/
    }

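While writing the configuration function, the layout turned out to be unsuitable: things should be separated per project and standardized. web-demo, for example, can be treated as the name of a project package

    #Code Env
    CODE_DIR="/deploy/code/web-demo"
    CONFIG_DIR="/deploy/config/web-demo"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"
    LOCK_FILE="/tmp/deploy.lock"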

Create the directory

    [root@linux-node1 scripts]# cd /deploy/
    [root@linux-node1 deploy]# cd config/
    [root@linux-node1 config]# mkdir web-demo
    [root@linux-node1 config]# cd ..
    [root@linux-node1 deploy]# tree
    .
    ├── code
    │   └── web-demo
    ├── config
    │   └── web-demo
    ├── tar
    └── tmp

    6 directories, 0 files
    [root@linux-node1 deploy]#

    [root@linux-node1 deploy]# cd config/
    [root@linux-node1 config]# cd web-demo/
    [root@linux-node1 web-demo]# vim config.ini
    [root@linux-node1 web-demo]# cat config.ini
    hehe
    [root@linux-node1 web-demo]#

    #Code Env
    PRO_NAME="web-demo"
    CODE_DIR="/deploy/code/web-demo"
    CONFIG_DIR="/deploy/config/web-demo"
    TMP_DIR="/deploy/tmp"
    TAR_DIR="/deploy/tar"
    LOCK_FILE="/tmp/deploy.lock"

Adjust the script and refine the code_config function

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && git pull
        cp -r ${CODE_DIR} ${TMP_DIR}/
    }

    code_build(){
        echo code_build
    }

    code_config(){
        echo code_config
        /bin/cp -r $CONFIG_DIR/* $TMP_DIR/$PRO_NAME
    }

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && git pull
        cp -r ${CODE_DIR} ${TMP_DIR}/
    }

    code_build(){
        echo code_build
    }

    code_config(){
        echo code_config
        /bin/cp -r ${CONFIG_DIR}/* ${TMP_DIR}/"${PRO_NAME}"
    }

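Note that /bin/cp is called by its full path. This avoids any interactive `cp -i` alias, so a configuration file that already exists in the target is overwritten directly

Suppose developers packaged a configuration file into the code and it points at the test database. If you deploy that to production, production connects to the test database. When something goes wrong, who takes the blame?

Operations is the last line of defense. If development and testing did not catch it, the blame lands on you

    code_config(){
        echo code_config
        /bin/cp -r ${CONFIG_DIR}/* ${TMP_DIR}/"${PRO_NAME}"
        PKG_NAME="${PRO_NAME}"_"${API_VER}"-"${CDATE}-${CTIME}"
    }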

Continue refining

    code_config(){
        echo code_config
        /bin/cp -r ${CONFIG_DIR}/* ${TMP_DIR}/"${PRO_NAME}"
        PKG_NAME="${PRO_NAME}"_"${API_VER}"-"${CDATE}-${CTIME}"
        cd ${TMP_DIR} && mv ${PRO_NAME} ${PKG_NAME}
    }

Add a version number; define an arbitrary one for now

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && git pull
        cp -r ${CODE_DIR} ${TMP_DIR}/
        API_VER="123"
    }

There is no real repository behind the code directory yet, so git pull is replaced with an echo for testing:

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && echo "git pull"
        cp -r ${CODE_DIR} ${TMP_DIR}/
        API_VER="123"
    }

    [root@linux-node1 scripts]# chown -R www:www /deploy/
    [root@linux-node1 scripts]#

    [root@linux-node1 scripts]# cd /deploy/code/web-demo/
    [root@linux-node1 web-demo]# echo hehe>>index.html
    [root@linux-node1 web-demo]# cat index.html
    hehe
    [root@linux-node1 web-demo]#

The files and directory structure now look like this

    [root@linux-node1 deploy]# tree
    .
    ├── code
    │   └── web-demo
    │       └── index.html
    ├── config
    │   └── web-demo
    │       └── config.ini
    ├── tar
    └── tmp

    6 directories, 2 files
    [root@linux-node1 deploy]#

    [root@linux-node1 deploy]# cd /scripts/
    [root@linux-node1 scripts]# chown -R www:www /scripts/deploy.sh
    [root@linux-node1 scripts]# ll
    total 12
    -rw-r--r-- 1 root root 234 Apr 3 23:51 cobbler_list.py
    -rw-r--r-- 1 root root 1533 Apr 4 00:01 cobbler_system_api.py
    -rwxr-xr-x 1 www www 1929 Apr 23 23:04 deploy.sh
    [root@linux-node1 scripts]# su - www
    Last login: Sun Apr 23 22:06:44 CST 2017 on pts/0
    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_config
    code_tar
    code_scp
    cluster_node_remove
    code_deploy
    config_diff
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$

    [www@linux-node1 scripts]$ tree /deploy/
    /deploy/
    ├── code
    │   └── web-demo
    │       └── index.html
    ├── config
    │   └── web-demo
    │       └── config.ini
    ├── tar
    └── tmp
        ├── web-demo_123-2017-04-23-23-12-15
        │   ├── config.ini
        │   └── index.html
        └── web-demo_123-2017-04-23-23-13-20
            ├── config.ini
            └── index.html

    8 directories, 6 files
    [www@linux-node1 scripts]$

    code_config(){
        echo code_config
        /bin/cp -r ${CONFIG_DIR}/* ${TMP_DIR}/"${PRO_NAME}"
        PKG_NAME="${PRO_NAME}"_"${API_VER}"_"${CDATE}-${CTIME}"
        cd ${TMP_DIR} && mv ${PRO_NAME} ${PKG_NAME}
    }

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_config
    code_tar
    code_scp
    cluster_node_remove
    code_deploy
    config_diff
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$ tree /deploy/
    /deploy/
    ├── code
    │   └── web-demo
    │       └── index.html
    ├── config
    │   └── web-demo
    │       └── config.ini
    ├── tar
    └── tmp
        ├── web-demo_123-2017-04-23-23-12-15
        │   ├── config.ini
        │   └── index.html
        ├── web-demo_123-2017-04-23-23-13-20
        │   ├── config.ini
        │   └── index.html
        └── web-demo_123_2017-04-23-23-17-20
            ├── config.ini
            └── index.html

    9 directories, 8 files
    [www@linux-node1 scripts]$

    code_config(){
        writelog "code_config"
        /bin/cp -r ${CONFIG_DIR}/* ${TMP_DIR}/"${PRO_NAME}"
        PKG_NAME="${PRO_NAME}"_"${API_VER}"_"${CDATE}-${CTIME}"
        cd ${TMP_DIR} && mv ${PRO_NAME} ${PKG_NAME}
    }

    code_tar(){
        writelog "code_tar"
    }

    code_tar(){
        writelog "code_tar"
        cd ${TMP_DIR} && tar cfz ${PKG_NAME}.tar.gz ${PKG_NAME}
        writelog "${PKG_NAME}.tar.gz"
    }
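
The packaging step could also check its own result before the package name is logged. The helper below is a sketch under assumptions: `make_package` is a hypothetical name, and it takes the directory and package name as arguments instead of reading `TMP_DIR` and `PKG_NAME`, so it can be tried in a scratch directory.

```shell
#!/bin/bash
# Hypothetical sketch: build <pkg>.tar.gz from <tmp_dir>/<pkg> and verify it.
make_package(){
    local tmp_dir=$1 pkg_name=$2
    local archive="${tmp_dir}/${pkg_name}.tar.gz"

    [ -d "${tmp_dir}/${pkg_name}" ] || { echo "missing ${pkg_name}" >&2; return 1; }
    # -C changes into tmp_dir so the archive holds relative paths only.
    tar -czf "$archive" -C "$tmp_dir" "$pkg_name" || return 1
    # Listing the archive is a cheap check that it is readable.
    tar -tzf "$archive" > /dev/null || return 1
    echo "$archive"
}
```

Inside `code_tar` this might be called as `make_package "${TMP_DIR}" "${PKG_NAME}"`, with the deploy aborted if it returns non-zero.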

Test the script again

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_scp
    cluster_node_remove
    code_deploy
    config_diff
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$ tree /deploy/
    /deploy/
    ├── code
    │   └── web-demo
    │       └── index.html
    ├── config
    │   └── web-demo
    │       └── config.ini
    ├── tar
    └── tmp
        ├── web-demo_123-2017-04-23-23-12-15
        │   ├── config.ini
        │   └── index.html
        ├── web-demo_123-2017-04-23-23-13-20
        │   ├── config.ini
        │   └── index.html
        ├── web-demo_123_2017-04-23-23-17-20
        │   ├── config.ini
        │   └── index.html
        ├── web-demo_123_2017-04-23-23-22-09
        │   ├── config.ini
        │   └── index.html
        └── web-demo_123_2017-04-23-23-22-09.tar.gz

    10 directories, 11 files
    [www@linux-node1 scripts]$

The first four steps are done. Now for step five: copying to the target servers

Iterate over the nodes

    [www@linux-node1 scripts]$ node_list="192.168.58.11 192.168.58.12"
    [www@linux-node1 scripts]$ for node in $node_list;do echo $node;done
    192.168.58.11
    192.168.58.12
    [www@linux-node1 scripts]$

Add the node list to the script

    #!/bin/bash

    #Node List
    NODE_LIST="192.168.58.11 192.168.58.12"

    # Date/Time Variables
    LOG_DATE='date "+%Y-%m-%d"'
    LOG_TIME='date "+%H-%M-%S"'

    CDATE=$(date "+%Y-%m-%d")
    CTIME=$(date "+%H-%M-%S")

Distribute to the target nodes

    code_scp(){
        echo code_scp
        for node in $NODE_LIST;do
            scp ${TMP_DIR}/${PKG_NAME}.tar.gz $node:/opt/webroot/
        done
    }

The target directory must exist on both nodes and belong to www:

    [root@linux-node1 scripts]# mkdir /opt/webroot -p
    [root@linux-node1 scripts]# chown -R www:www /opt/webroot
    [root@linux-node1 scripts]#

    [root@linux-node2 ~]# mkdir /opt/webroot -p
    [root@linux-node2 ~]# chown -R www:www /opt/webroot
    [root@linux-node2 ~]#

Complete the copy function

    code_scp(){
        echo code_scp
        for node in $NODE_LIST;do
            scp ${TMP_DIR}/${PKG_NAME}.tar.gz $node:/opt/webroot/
        done
    }
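
The loop above carries on even when scp fails on a node. A variant that records which nodes failed might look like the sketch below. `code_scp_checked` and `COPY_CMD` are assumptions introduced for the example; `COPY_CMD` exists only so the loop can be dry-run without touching a real server.

```shell
#!/bin/bash
# Hypothetical sketch: copy a package to every node and report failures.
NODE_LIST="${NODE_LIST:-192.168.58.11 192.168.58.12}"
COPY_CMD="${COPY_CMD:-scp}"        # assumption: set COPY_CMD="echo scp" for a dry run

code_scp_checked(){
    local package=$1 dest_dir=$2
    local node failed=""
    for node in $NODE_LIST; do
        # Collect the failing nodes instead of silently moving on.
        if ! $COPY_CMD "$package" "${node}:${dest_dir}/"; then
            failed="${failed} ${node}"
        fi
    done
    if [ -n "$failed" ]; then
        echo "copy failed on:${failed}" >&2
        return 1
    fi
}
```

With `COPY_CMD="echo scp"`, the function only prints the scp commands it would run, which is a convenient way to review a rollout before executing it.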

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_scp
    web-demo_123_2017-04-23-23-33-50.tar.gz 100% 204 0.2KB/s 00:00
    web-demo_123_2017-04-23-23-33-50.tar.gz 100% 204 0.2KB/s 00:00
    cluster_node_remove
    code_deploy
    config_diff
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$

    [www@linux-node1 scripts]$ tree /opt/webroot/
    /opt/webroot/
    └── web-demo_123_2017-04-23-23-33-50.tar.gz

    0 directories, 1 file
    [www@linux-node1 scripts]$

    [root@linux-node2 ~]# tree /opt/webroot/
    /opt/webroot/
    └── web-demo_123_2017-04-23-23-33-50.tar.gz

    0 directories, 1 file
    [root@linux-node2 ~]#

Now for step six; a log entry stands in for it for the time being

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

Next, code_config is changed from

    code_config(){
        writelog "code_config"
        /bin/cp -r ${CONFIG_DIR}/* ${TMP_DIR}/"${PRO_NAME}"
        PKG_NAME="${PRO_NAME}"_"${API_VER}"_"${CDATE}-${CTIME}"
        cd ${TMP_DIR} && mv ${PRO_NAME} ${PKG_NAME}
    }

to a version that copies only the base directory:

    code_config(){
        writelog "code_config"
        /bin/cp -r ${CONFIG_DIR}/base/* ${TMP_DIR}/"${PRO_NAME}"
        PKG_NAME="${PRO_NAME}"_"${API_VER}"_"${CDATE}-${CTIME}"
        cd ${TMP_DIR} && mv ${PRO_NAME} ${PKG_NAME}
    }

Create the configuration directories: base holds the configuration shared by all nodes, other holds the per-node differences

    [www@linux-node1 scripts]$ cd /deploy/config/web-demo/
    [www@linux-node1 web-demo]$ mkdir base
    [www@linux-node1 web-demo]$ mkdir other
    [www@linux-node1 web-demo]$ ll
    total 4
    drwxrwxr-x 2 www www 6 Apr 23 23:38 base
    -rw-r--r-- 1 www www 5 Apr 23 22:46 config.ini
    drwxrwxr-x 2 www www 6 Apr 23 23:38 other
    [www@linux-node1 web-demo]$

Move the configuration files into the right directories

    [www@linux-node1 web-demo]$ mv config.ini base/
    [www@linux-node1 web-demo]$ cd other/
    [www@linux-node1 other]$ echo 192.168.58.12-config >>192.168.58.12.crontab.xml
    [www@linux-node1 other]$ ll
    total 4
    -rw-rw-r-- 1 www www 21 Apr 23 23:39 192.168.58.12.crontab.xml
    [www@linux-node1 other]$
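
The file name `192.168.58.12.crontab.xml` suggests a general convention: a file in other/ that starts with a node's IP belongs to that node, and the rest of the name is the real file name. Under that assumption, which the original does not state explicitly, the override files for a node can be listed as in the sketch below. `node_overrides` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical sketch: list "<source> <target-name>" pairs for one node's overrides.
# Assumes files in other/ are named "<node-ip>.<real-file-name>".
node_overrides(){
    local other_dir=$1 node=$2
    local file
    for file in "${other_dir}/${node}."*; do
        [ -e "$file" ] || continue            # no match: the glob stays literal
        echo "${file} ${file##*/${node}.}"    # strip directory and IP prefix
    done
}
```

A deploy step could read these pairs and scp each source file to the node under its target name, instead of hard-coding one file for one IP.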

    code_deploy(){
        echo code_deploy
        cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz
    }

    config_diff(){
        echo config_diff
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/opt/webroot/${PKG_NAME}
    }

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_scp
    web-demo_123_2017-04-23-23-43-48.tar.gz 100% 204 0.2KB/s 00:00
    web-demo_123_2017-04-23-23-43-48.tar.gz 100% 204 0.2KB/s 00:00
    code_deploy
    config_diff
    192.168.58.12.crontab.xml 100% 21 0.0KB/s 00:00
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$

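The version above still has a flaw: after the package is copied to a target server it must be unpacked there, which means running the command remotely over ssh. With the script above the unpack on node2 fails, because tar only runs locally on node1

Rework the script once more, merging the deploy function and the differing-configuration step into one

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

    code_deploy(){
        echo code_deploy
        for node in $NODE_LIST;do
            ssh $node "cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz"
        done
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/opt/webroot/${PKG_NAME}/crontab.xml
    }

    code_test(){
        echo code_test
    }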

Create /webroot

    [root@linux-node1 ~]# mkdir /webroot
    [root@linux-node1 ~]# chown -R www:www /webroot
    [root@linux-node1 ~]#

    [root@linux-node2 ~]# mkdir /webroot
    [root@linux-node2 ~]# chown -R www:www /webroot
    [root@linux-node2 ~]#

First attempt: add a symlink from /webroot/web-demo to the unpacked release.

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

    code_deploy(){
        echo code_deploy
        for node in $NODE_LIST;do
            ssh $node "cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz"
        done
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/opt/webroot/${PKG_NAME}/crontab.xml
        ln -s /opt/webroot/${PKG_NAME} /webroot/web-demo
    }

    code_test(){
        echo code_test
    }

Remove any existing link before creating the new one:

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

    code_deploy(){
        echo code_deploy
        for node in $NODE_LIST;do
            ssh $node "cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz"
        done
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/opt/webroot/${PKG_NAME}/crontab.xml
        rm -f /webroot/web-demo && ln -s /opt/webroot/${PKG_NAME} /webroot/web-demo
    }

    code_test(){
        echo code_test
    }

Move the link step inside the loop (it still runs locally at this point):

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

    code_deploy(){
        echo code_deploy
        for node in $NODE_LIST;do
            ssh $node "cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz"
            rm -f /webroot/web-demo && ln -s /opt/webroot/${PKG_NAME} /webroot/web-demo
        done
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/opt/webroot/${PKG_NAME}/crontab.xml
    }

    code_test(){
        echo code_test
    }

Copy the differing file through the link path instead of the release path:

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

    code_deploy(){
        echo code_deploy
        for node in $NODE_LIST;do
            ssh $node "cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz"
            rm -f /webroot/web-demo && ln -s /opt/webroot/${PKG_NAME} /webroot/web-demo
        done
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/webroot/web-demo/crontab.xml
    }

    code_test(){
        echo code_test
    }

    [www@linux-node1 scripts]$ cd /webroot/
    [www@linux-node1 webroot]$ mkdir web-demo -p
    [www@linux-node1 webroot]$

    [root@linux-node2 webroot]# mkdir web-demo -p
    [root@linux-node2 webroot]#

Finally, run the link step over ssh so that it happens on every node:

    cluster_node_remove(){
        writelog "cluster_node_remove"
    }

    code_deploy(){
        echo code_deploy
        for node in $NODE_LIST;do
            ssh $node "cd /opt/webroot/ && tar xfz ${PKG_NAME}.tar.gz"
            ssh $node "rm -rf /webroot/web-demo && ln -s /opt/webroot/${PKG_NAME} /webroot/web-demo"
        done
        scp ${CONFIG_DIR}/other/192.168.58.12.crontab.xml 192.168.58.12:/webroot/web-demo/crontab.xml
    }

    code_test(){
        echo code_test
    }
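
Removing the link with `rm -rf` and then recreating it leaves a brief moment in which /webroot/web-demo does not exist, and `rm -rf` would also delete a real directory at that path. One alternative is `ln -sfn`, which replaces an existing symlink in a single command. The sketch below shows the idea locally; `switch_release` is a hypothetical helper, and in the script the same command would have to be wrapped in `ssh $node "..."`.

```shell
#!/bin/bash
# Hypothetical sketch: point <link> at <release_dir>, replacing an existing symlink.
switch_release(){
    local release_dir=$1 link=$2
    [ -d "$release_dir" ] || { echo "no such release: ${release_dir}" >&2; return 1; }
    # Refuse to touch a real directory; only a symlink (or nothing) may be replaced.
    if [ -e "$link" ] && [ ! -L "$link" ]; then
        echo "${link} exists and is not a symlink" >&2
        return 1
    fi
    # -n treats an existing symlink to a directory as a file, -f replaces it.
    ln -sfn "$release_dir" "$link"
}
```

The guard against real directories is a deliberate choice: it makes the function fail loudly instead of deleting content that was not created by the deploy script.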

Test the script

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_scp
    web-demo_123_2017-04-24-00-01-24.tar.gz 100% 204 0.2KB/s 00:00
    web-demo_123_2017-04-24-00-01-24.tar.gz 100% 204 0.2KB/s 00:00
    code_deploy
    192.168.58.12.crontab.xml 100% 21 0.0KB/s 00:00
    ./deploy.sh: line 113: config_diff: command not found
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$

The error appears because config_diff was merged into code_deploy but is still called from main. Remove that call and run again.

Test:

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_scp
    web-demo_123_2017-04-24-00-02-44.tar.gz 100% 205 0.2KB/s 00:00
    web-demo_123_2017-04-24-00-02-44.tar.gz 100% 205 0.2KB/s 00:00
    code_deploy
    192.168.58.12.crontab.xml 100% 21 0.0KB/s 00:00
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$

Check:

    [www@linux-node1 scripts]$ ll /webroot/
    total 0
    lrwxrwxrwx 1 www www 45 Apr 24 00:02 web-demo -> /opt/webroot/web-demo_123_2017-04-24-00-02-44
    [www@linux-node1 scripts]$

    [root@linux-node2 webroot]# ll /webroot/
    total 0
    lrwxrwxrwx 1 www www 45 Apr 24 00:02 web-demo -> /opt/webroot/web-demo_123_2017-04-24-00-02-44
    [root@linux-node2 webroot]#

    code_get(){
        writelog "code_get";
        cd $CODE_DIR && echo "git pull"
        cp -r ${CODE_DIR} ${TMP_DIR}/
        API_VER="456"
    }

Continue testing

    [www@linux-node1 scripts]$ ./deploy.sh deploy
    git pull
    code_build
    code_scp
    web-demo_456_2017-04-24-00-04-05.tar.gz 100% 204 0.2KB/s 00:00
    web-demo_456_2017-04-24-00-04-05.tar.gz 100% 204 0.2KB/s 00:00
    code_deploy
    192.168.58.12.crontab.xml 100% 21 0.0KB/s 00:00
    code_test
    cluster_node_in
    [www@linux-node1 scripts]$ ll /webroot/
    total 0
    lrwxrwxrwx 1 www www 45 Apr 24 00:04 web-demo -> /opt/webroot/web-demo_456_2017-04-24-00-04-05
    [www@linux-node1 scripts]$

Check
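
Because every release stays under /opt/webroot with its version and timestamp in the directory name, a rollback can in principle be reduced to pointing the symlink back at an older directory. The `rollback` function in the script is still a placeholder at this stage, so the following is only a sketch of how the previous release could be located. `list_releases` and `previous_release` are hypothetical helpers, and the code assumes the `<project>_<version>_<timestamp>` naming shown above.

```shell
#!/bin/bash
# Hypothetical sketch: locate the release deployed just before the current one.

# List release directories of a project, oldest first, ordered by timestamp suffix.
list_releases(){
    local release_root=$1 project=$2 dir
    for dir in "${release_root}/${project}"_*; do
        [ -d "$dir" ] || continue          # skip the .tar.gz files
        # Print "<timestamp> <dir>" so sort can order by the timestamp.
        echo "${dir##*_} ${dir}"
    done | sort | cut -d' ' -f2-
}

# Print the release that precedes <current>; return non-zero if there is none.
previous_release(){
    local release_root=$1 project=$2 current=$3
    list_releases "$release_root" "$project" \
        | grep -B1 -xF "$current" | head -n 1 | grep -vxF "$current"
}
```

On a node, the current release is the symlink target (`readlink /webroot/web-demo`); the directory printed by `previous_release` is the one to link back to.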