Spring Boot Series (4): Producer and Consumer Demo Code with spring-kafka

1. Add the spring-kafka Maven dependency

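<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
    <version>2.5.4.RELEASE</version>
</dependency>

If the project inherits from spring-boot-starter-parent (as the pom.xml in Option 2 below does), the <version> element can be omitted so that Spring Boot selects a spring-kafka version compatible with itself.

Option 1: Create a Spring Boot web project and let the wizard add the dependency (this article uses this option)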

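- In IntelliJ IDEA, choose File > New > Project, select Spring Initializr, and click Next twice.

- Under Web, select the Spring Web dependency and add it.

- Under Messaging, select the Spring for Apache Kafka dependency and add it.

- Create the project.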

Option 2: Create a plain Maven project and add the dependencies manually

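- In IntelliJ IDEA, choose File > New > Project and select Maven.

- Add the dependencies shown below to pom.xml.

- After creating a startup (main) class and setting up the web environment, the remaining steps are the same as in Option 1.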

<parent>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-parent</artifactId>
    <version>2.5.5</version>
    <relativePath/> <!-- lookup parent from repository -->
</parent>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>
</dependencies>

2. Producer demo code

Step 1: In application.properties, enable only the producer settings and comment out the consumer settings:

server.port=8080
#kafka (broker address; adjust to your environment)
spring.kafka.bootstrap-servers=localhost:9092
#kafka producer
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.acks=all
spring.kafka.producer.retries=0
#kafka consumer
#spring.kafka.consumer.group-id=group_id
#spring.kafka.consumer.enable-auto-commit=true
#spring.kafka.consumer.auto-commit-interval=5000
#spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
#spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

Step 2: Spring Boot loads these settings automatically and initializes the producer (a KafkaTemplate bean). It can be used as follows:

Calling KafkaTemplate directly

package com.example.demo.controller;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

/**
 * Kafka producer sample code
 *
 * @author cavan
 */
@RestController
@RequestMapping("/kafka")
public class KafkaController {

    private static final Logger LOGGER = LoggerFactory.getLogger(KafkaController.class);

    private static final String TOPIC = "topic-test";

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @PostMapping(value = "/publish")
    public String sendMessageTokafkaTopic(@RequestParam("message") String message) {
        LOGGER.info("------ Producing message ------ {}", message);
        // send() is asynchronous: it hands the record to the producer and returns immediately
        kafkaTemplate.send(TOPIC, message);
        return "ok";
    }
}

Calling through a wrapper service

Wrapper code:

package com.example.demo.kafka;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.stereotype.Service;

/**
 * Kafka producer
 *
 * @author cavan
 */
@Service
public class Producer {

    private static final Logger LOGGER = LoggerFactory.getLogger(Producer.class);

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    // Wraps KafkaTemplate so that callers only need to pass a topic and a message
    public void sendMessage(String topic, String message) {
        LOGGER.info("Producing message is: {}", message);
        kafkaTemplate.send(topic, message);
    }
}

Calling code:

import com.example.demo.kafka.Producer;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.bind.annotation.RestController;

/**

* kafka send message

*

* @author cavan

*/

@RestController

@RequestMapping("/kafka")

public class KafkaController {

private static final Logger LOGGER = LoggerFactory.getLogger(KafkaController.class);

private static final String TOPIC = "topic-test";

@Autowired

private Producer producer;

@PostMapping(value = "/producer")

public String sendMessageTokafka(@RequestParam("message") String message) {

LOGGER.info(String.format("------ Producing message ------ %s", message));

producer.sendMessage(TOPIC, message);

return "ok";

}

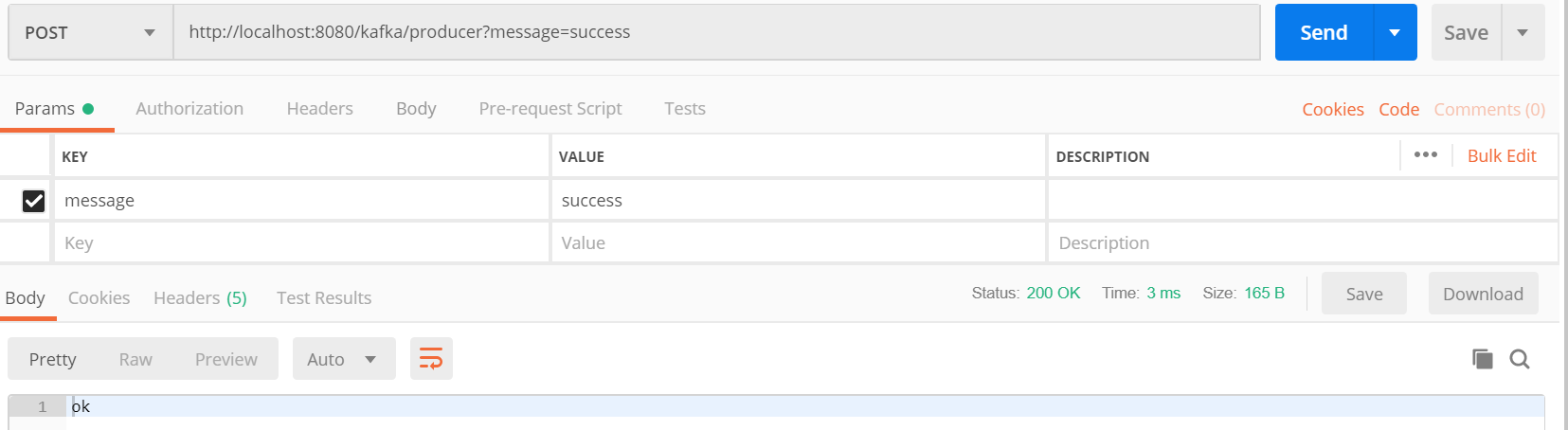

}postman调用接口测试

Producer log:

2021-10-08 19:26:50.205 INFO 20836 --- [nio-8080-exec-2] c.e.demo.controller.KafkaController : ------ Producing message ------ success
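Without Postman, an equivalent test request can be sent from plain Java. The sketch below is a minimal example that assumes Java 11+ (java.net.http.HttpClient), the application running locally on port 8080, and the /kafka/producer endpoint of the wrapper-based KafkaController; the class name ProducerEndpointClient is hypothetical. It passes message as a query parameter, which @RequestParam accepts just as it accepts form data.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper that sends a request equivalent to the Postman test.
public class ProducerEndpointClient {

    // Assumption: the demo application runs locally with server.port=8080
    static final String BASE_URL = "http://localhost:8080";

    // Builds POST /kafka/producer?message=<url-encoded message> with an empty body
    static HttpRequest buildRequest(String message) {
        String query = "message=" + URLEncoder.encode(message, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(BASE_URL + "/kafka/producer?" + query))
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest("success"), HttpResponse.BodyHandlers.ofString());
        // The controller answers "ok" once the message has been handed to KafkaTemplate,
        // which does not by itself guarantee that the broker has stored it
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

URL-encoding the message keeps spaces and non-ASCII text intact; for example, "hello kafka" is sent as message=hello+kafka.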

3. Consumer demo code

Step 1: In application.properties, add the consumer settings:

server.port=8080
#kafka (broker address; adjust to your environment)
spring.kafka.bootstrap-servers=localhost:9092
#kafka producer
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.acks=all
spring.kafka.producer.retries=0
#kafka consumer
spring.kafka.consumer.group-id=group_id
spring.kafka.consumer.enable-auto-commit=true
spring.kafka.consumer.auto-commit-interval=5000
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

Step 2: Spring Boot loads these settings automatically and initializes the consumer. It can be used as follows:

package com.example.demo.kafka;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Service;

/**
 * Kafka consumer demo
 *
 * @author cavan
 */
@Service
public class Consumer {

    private static final Logger LOGGER = LoggerFactory.getLogger(Consumer.class);

    // idIsGroup defaults to true, so id = "test" is also used as the consumer group id,
    // overriding spring.kafka.consumer.group-id from application.properties
    @KafkaListener(id = "test", topics = "topic-test")
    public void listen(ConsumerRecord<?, ?> record) {
        // "customer" in the log pattern is the record key (null here: the producer sends no key)
        LOGGER.info(
                "topic ={},partition={},offset={},customer={},value={}",
                record.topic(),
                record.partition(),
                record.offset(),
                record.key(),
                record.value());
    }
}

Log showing successful consumption:

2021-10-08 19:55:23.004 INFO 11340 --- [nio-8080-exec-2] o.a.kafka.common.utils.AppInfoParser : Kafka version: 2.7.1

2021-10-08 19:55:23.004 INFO 11340 --- [nio-8080-exec-2] o.a.kafka.common.utils.AppInfoParser : Kafka commitId: 61dbce85d0d41457

2021-10-08 19:55:23.004 INFO 11340 --- [nio-8080-exec-2] o.a.kafka.common.utils.AppInfoParser : Kafka startTimeMs: 1633694123004

2021-10-08 19:55:23.117 INFO 11340 --- [ad | producer-1] org.apache.kafka.clients.Metadata : [Producer clientId=producer-1] Cluster ID: Lx7DFjG5SYuOLlJKtz2NCQ

2021-10-08 19:55:23.236 INFO 11340 --- [ test-0-C-1] com.example.demo.kafka.Consumer : topic =topic-test,partition=0,offset=31,customer=null,value=success
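Kafka stores only byte arrays, so the producer's serializer and the consumer's deserializer have to match; that is why value=success arrives unchanged in the log above. The JDK-only sketch below mimics what StringSerializer and StringDeserializer do by default (UTF-8 encoding, null passed through as null). StringCodecSketch and its methods are hypothetical stand-ins for illustration, not Kafka's API.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical stand-in mirroring the default behavior of Kafka's
// StringSerializer / StringDeserializer: UTF-8 encoding, null stays null.
public class StringCodecSketch {

    // Producer side: String -> bytes written to the topic
    static byte[] serialize(String data) {
        return data == null ? null : data.getBytes(StandardCharsets.UTF_8);
    }

    // Consumer side: bytes read from the topic -> String
    static String deserialize(byte[] data) {
        return data == null ? null : new String(data, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] onTheWire = serialize("success");
        System.out.println(Arrays.toString(onTheWire));  // [115, 117, 99, 99, 101, 115, 115]
        System.out.println(deserialize(onTheWire));       // success
        // A null key (customer=null in the log) round-trips as null
        System.out.println(deserialize(serialize(null))); // null
    }
}
```

If the two sides disagreed, for example a JSON serializer on the producer and StringDeserializer on the consumer, the listener would still receive a value, but it would be the raw JSON text rather than the original object.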

Zhejiang Public Security Network Filing No. 33010602011771
