Problem description:

spark.SparkContext: Created broadcast 0 from textFile at WordCount.scala:37

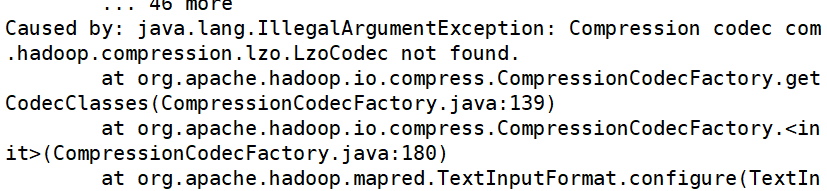

Exception in thread "main" java.lang.RuntimeException: Error in configuring object

.........

// many more lines follow

Caused by: java.lang.ClassNotFoundException: Class com.hadoop.compression.lzo.LzoCodec not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2499)

Cause:

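By default, Spark on Yarn picks up its compression codec settings from the Hadoop cluster configuration files, and that configuration references com.hadoop.compression.lzo.LzoCodec. Spark cannot find a jar that provides LZO support in its own spark-yarn/jars directory, so loading the class fails with the ClassNotFoundException shown above.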
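One quick way to confirm this diagnosis is to check whether the Spark jars directory contains a hadoop-lzo jar at all. The helper below is only a minimal sketch: the function name `has_lzo_jar` is hypothetical, and it matches on the file name pattern `hadoop-lzo-*.jar`, assuming the jar keeps its conventional name.

```shell
# Hypothetical helper: succeed if the given directory holds a hadoop-lzo jar.
# Assumes the jar keeps the conventional name hadoop-lzo-<version>.jar.
has_lzo_jar() {
  local dir="$1"
  local jar
  for jar in "$dir"/hadoop-lzo-*.jar; do
    # If the glob matched nothing, $jar is the literal pattern and -f fails.
    if [ -f "$jar" ]; then
      echo "found: $jar"
      return 0
    fi
  done
  echo "no hadoop-lzo jar in $dir" >&2
  return 1
}
```

With the layout used in this post, it would be called as `has_lzo_jar /opt/module/spark-yarn/jars`. A non-zero exit status is consistent with the cause described above.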

Solution:

Solution 1: copy the lzo jar into the /opt/module/spark-yarn/jars directory

cp /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar /opt/module/spark-yarn/jars
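The copy step can be wrapped so that a wrong source path or a missing target directory is reported instead of failing quietly later at job submission. This is a sketch under assumptions: `install_lzo_jar` is a hypothetical name, and the paths are passed in as arguments rather than hard-coded.

```shell
# Hypothetical wrapper around the cp step: check both paths, then copy the jar.
install_lzo_jar() {
  local src="$1"
  local dest_dir="$2"
  if [ ! -f "$src" ]; then
    echo "source jar not found: $src" >&2
    return 1
  fi
  if [ ! -d "$dest_dir" ]; then
    echo "destination directory not found: $dest_dir" >&2
    return 1
  fi
  cp "$src" "$dest_dir"/ || return 1
  echo "installed: $dest_dir/$(basename "$src")"
}
```

For the paths in this post, the call would be `install_lzo_jar /opt/module/hadoop-3.1.3/share/hadoop/common/hadoop-lzo-0.4.20.jar /opt/module/spark-yarn/jars`.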

Solution 2: pass the jar explicitly as a spark-submit parameter

bin/spark-submit --master yarn --name wbwb --jars depend/hadoop-lzo-0.4.20.jar --class com.atguigu.sparksql.sparksql_hive_sql_myUDAF WordCount-jar-with-dependencies.jar
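If the path given to `--jars` is wrong, the same ClassNotFoundException can reappear, so it may help to check the file before submitting. The wrapper below is a sketch, not part of Spark: `submit_with_lzo` is a hypothetical name, and `SPARK_SUBMIT` is an assumed environment variable for overriding the launcher path (it defaults to `bin/spark-submit`).

```shell
# Hypothetical wrapper: refuse to submit if the jar given to --jars is missing.
# SPARK_SUBMIT is an assumed override for the launcher; default is bin/spark-submit.
submit_with_lzo() {
  local lzo_jar="$1"
  local main_class="$2"
  local app_jar="$3"
  if [ ! -f "$lzo_jar" ]; then
    echo "jar for --jars not found: $lzo_jar" >&2
    return 1
  fi
  # Same options as the command above, one per line for readability.
  "${SPARK_SUBMIT:-bin/spark-submit}" \
    --master yarn \
    --name wbwb \
    --jars "$lzo_jar" \
    --class "$main_class" \
    "$app_jar"
}
```

The equivalent of the command in this post would be `submit_with_lzo depend/hadoop-lzo-0.4.20.jar com.atguigu.sparksql.sparksql_hive_sql_myUDAF WordCount-jar-with-dependencies.jar`, run from the Spark installation directory.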
