ZooKeeper’s atomic broadcast protocol:Theory and practice

ZooKeeper的原子广播协议:理论和实践

Andr´e Medeiros

March 20, 2012

Abstract

摘要

Apache ZooKeeper is a distributed coordination service for cloud computing, providing essential synchronization and group services for other distributed applications. At its core lies an atomic broadcast protocol,which elects a leader,synchronizes the nodes, and performs broadcasts of updates from the leader. We study the design of this protocol, highlight promised properties, and analyze its official implementation by Apache. In particular, the default leader election protocol is studied in detail.

Apache ZooKeeper是用于云计算的分布式协调服务,为其他分布式应用程序提供必要的同步和组服务,其核心是一个原子广播协议,该协议选举领导者,同步节点,并执行来自领导者的更新广播,我们研究了该协议的设计,突出了其承诺的属性,并分析了Apache的官方实现,特别是详细研究了默认的领导者选举协议。

1 Introduction

介绍

ZooKeeper [8, 10, 11, 12, 19] is a fault-tolerant distributed coordination service for cloud computing applications currently maintained by Yahoo! and the Apache Software Foundation. It provides fundamental services for other cloud computing applications by encapsulating distributed coordination algorithms and maintaining a simple database.

ZooKeeper 【8,10,11,12,19】 是一个用于云计算应用程序的容错分布式协调服务,目前由雅虎和 Apache 软件基金会维护。它通过封装分布式协调算法并维护一个简单的数据库,为其他云计算应用程序提供了基本服务。

The service is intended to be highly-available and highly-reliable, so several client processes rely on it for bootstrapping, storing configuration data, status of running processes, group membership, implementing synchronization primitives, and managing failure recovery. It achieves availability and reliability through replication, and is designed to have good performance in read-dominant workloads [12].

该服务旨在具有高可用性和高可靠性,因此几个客户端进程依赖于它进行启动、存储配置数据、运行进程的状态、组成员、实现同步原语和管理故障恢复。它通过复制实现可用性和可靠性,并旨在在以读取为主的工作负载中具有良好性能【12】。

Total replication of the ZooKeeper database is performed on an ensemble, i.e., a number of host servers, three or five being usual configurations, of which one is the leader of a quorum (i.e.,majority). The service is considered up as long as a quorum of the ensemble is available. A critical component of ZooKeeper is Zab, the ZooKeeper Atomic Broadcast algorithm, which is the protocol that manages atomic updates to the replicas. It is responsible for agreeing on a leader in the ensemble, synchronizing the replicas, managing update transactions to be broadcast, as well as recovering from a crashed state to a valid state. We study Zab in detail in this report.

ZooKeeper数据库的整体复制是在一个由许多主机服务器组成的集合上执行的,这些服务器通常配置为3或5个,其中一个是这些服务器(即多数)的领导者。只要集群的节点大多数可用,该服务就被认为处于运行状态。ZooKeeper的一个关键组件是Zab,即ZooKeeper原子广播算法,它是用于管理副本原子更新的协议。它负责在集群中协商领导者,同步副本,管理要广播的更新事务,以及从崩溃状态恢复到有效状态。我们在本报告中详细研究了Zab。

The outline of this report is as follows. The background knowledge for atomic broadcast protocols is given in the next section. In Section 3 we present Zab’s design,while in Section 4 we comment on its implementation, ending with the conclusion in Section 5. The main references for this report are [12, 19].

本报告的概要如下。 下一节介绍了原子广播协议的背景知识。 在第3节中,我们介绍了Zab的设计,而在第4节中,我们对其实现进行了评论,最后在第5节中得出结论。 本报告的主要参考文献是【12,19】。

2 Background

背景

A broadcast algorithm transmits a message from one process – the primary process – to all other processes in a network or in a broadcast domain,including the primary. Atomic broadcast protocols are distributed algorithms guaranteed either to correctly broadcast or to abort without side effects. It is a primitive widely used in distributed computing for group communication. Atomic broadcast can also be defined as a reliable broadcast that satisfies total order [3], i.e., that satisfies the following properties [4]:

广播算法将消息从主进程(primary process)发送到网络或广播域(broadcast domain)中所有其他进程,包括主进程。原子广播协议是分布式算法,保证正确广播或无副作用地终止,是分布式计算中广泛使用的基本算法。原子广播也可以定义为满足全序的可靠广播【3】,即满足以下属性【4】:

-

Validity: If a correct process broadcasts a message, then all correct processes will eventually deliver it.

有效性:如果一个正确的进程广播了一条消息,那么所有正确的进程最终都会传递它。 -

Uniform Agreement: If a process delivers a message, then all correct processes eventually deliver that message.

统一协议:如果一个进程传递了一条消息,那么所有正确的过程最终都会传递这条消息。 -

Uniform Integrity: For any message m, every process delivers m at most once,and only if m was previously broadcast by the sender of m.

统一完整性:对于任何消息m,每个进程最多发送一次m,并且只有在m的发送者之前广播了m的情况下。 -

Uniform Total Order: If processes p and q both deliver messages m and m′,then p delivers m before m′ if and only if q delivers m before m′.

统一总序:如果进程p和q都发送消息m和m′,则p在m′之前发送m,当且仅当q在m′之前发送m。

Paxos [14, 15] is a traditional protocol for solving distributed consensus. It was not originally intended for atomic broadcasting, but it has been shown in D´efago et al. [4] how consensus protocols can be used for atomic broadcasting. There are many other atomic broadcast protocols, and Paxos was considered for being used in ZooKeeper, however it does not satisfy some critical properties that the service requires. The properties are described in Section 2.3. Zab aims at satisfying ZooKeeper requirements,while maintaining some similarity to Paxos. Refer to [15] for more details on Paxos.

Paxos 【14,15】 是一种用于解决分布式共识的传统协议。它最初并不是为了原子广播而设计的,但D'Efago 等人【4】已经证明,共识协议可以用于原子广播。还有许多其他原子广播协议,ZooKeeper考虑使用Paxos,但它没有满足服务所需的某些关键属性。这些属性在第 2.3 节中进行了描述。Zab旨在满足ZooKeeper的要求,同时保持与Paxos的某些相似性。有关Paxos的详细信息,请参阅【15】。

2.1 Paxos and design decisions for Zab

2.1 Paxos和Zab的设计决策

Two important requirements [12] for Zab are handling multiple outstanding client operations and efficient recovery from crashes. An outstanding transaction is one that has been proposed but not yet delivered. For high-performance, it is important that ZooKeeper can handle multiple outstanding state changes requested by the client and that a prefix of operations submitted concurrently are committed according to FIFO order. Moreover, it is useful that the system can recover efficiently after the leader has crashed.

Zab的两个重要要求【12】是处理多个未完成的客户端操作和从崩溃中高效恢复。未完成事务是已被提出但尚未传递的事务。对于高性能,ZooKeeper可以处理客户端请求的多个未完成状态更改是重要的,并且对一系列同时提交的操作能根据FIFO顺序提交。此外,它对于系统能在领导者崩溃后高效恢复也有效。

The original Paxos protocol does not enable multiple outstanding transactions.Paxos does not require FIFO channels for communication, so it tolerates message loss and reordering. If two outstanding transactions have an order dependency, then Paxos cannot have multiple outstanding transactions because FIFO order is not guaranteed. This problem could be solved by batching multiple transactions into a single proposal and allowing at most one proposal at a time, but this has performance drawbacks.

原始的 Paxos 协议不支持多个未决事务。Paxos 协议不需要通信的 FIFO 通道,因此它可以容忍消息丢失和重排序。如果两个未完成事务具有顺序依赖性,则Paxos 协议无法支持多个未完成事务,因为 FIFO 顺序无法保证。这个问题可以通过将多个事务打包成一个提案,并允许最多一个提案来解决,但这存在性能问题。

The manipulation of the sequence of transactions to use during recovery from primary crashes is claimed to not be efficient enough in Paxos [12]. Zab improves this aspect by employing a transaction identification scheme to totally order the transactions. Under the scheme,in order to update the application state of a new primary process, it is sufficient to inspect the highest transaction identifier from each process,and to copy transactions only from the process that accepted the transaction with the highest identifier. In Paxos, the same idea cannot be applied on sequence numbers,so a new primary has to execute Phase 1 of Paxos for all previous sequence numbers for which the primary has not “learned a value” (in Zab terminology, “committed a transaction”).

Paxos在从主要崩溃中恢复时,对事务顺序的操作被认为不够高效。Zab通过使用事务标识方案来完全排序事务来改善了这一方面。在该方案中,为了更新新主进程的应用状态,只需检查每个进程的最高事务标识符,并仅从接受具有最高标记事务的事务进程复制事务。在Paxos中,不能将相同的想法应用于序列号,因此新主必须为老主没有“学习到值”(在 Zab 术语中,“提交事务”)的所有先前序列号执行Paxos的第1阶段。

Additional performance requirements [19] for ZooKeeper are: (i) low latency, (ii) good throughput under bursty conditions, handling situations when write workloads increase rapidly during, e.g., massive system reconfiguration, and (iii) smooth failure handling, so that the service can stay up when some non-leader server crashes.

ZooKeeper的其他性能要求包括:(i)低延迟,(ii)在突发条件下良好的吞吐量,处理在例如大规模系统重新配置期间写工作量迅速增加的情况,以及(iii)平稳的故障处理,因此当某些非领导者服务器崩溃时,服务可以保持运行。

2.2 Crash-recovery system model

崩溃恢复系统模型

ZooKeeper assumes the crash-recovery model as system model [12]. The system is a set of processes Π = {p1, p2, . . . , pN}, also referred to as peers in this report, that communicate by message passing, are each equipped with a stable storage device, and may crash and recover indefinitely many times. A quorum of Π is a subset Q ⊆ Π such that |Q| > N/2. Any two quorums have a non-empty intersection. Processes have two states: up and down. A process is down from a crash time point to the beginning of its recovery, and up from the beginning of a recovery until the next crash happens. There is a bidirectional channel for every pair of processes in Π,which is expected to satisfy the following properties: (i) integrity, asserting that process pj receives a message m from pi only if pi has sent m; and (ii)prefix, stating that if process pj receives a message m and there is a message m′ that precedes m in the sequence of messages pi sent to pj,then pj receives m′ before m.To achieve these properties, ZooKeeper uses TCP – therefore FIFO – channels for communication.

ZooKeeper采用崩溃恢复模型作为系统模型【12】。该系统是一组进程 Π ={p1,p2,...,pN},也称为该报告中的peers,它们通过消息传递进行通信,每个进程都配备一个稳定的存储设备,并且可以无限多次崩溃和恢复。Π 的大多数是Π 的子集Q,即Q ⊆ Π,使得|Q| > N/2。任何两个大多数都有非空交集。进程有两种状态:up和down。一个进程从崩溃时间点到恢复开始时处于down状态,从恢复开始到下一次崩溃发生时处于up状态。对于 Π 中每对进程,都有一个双向通道,期望满足以下属性:(i)完整性,即进程 pj 仅当 pi 发送了消息 m,才接收消息 m;(ii)前置性,即如果进程 pj 接收了消息 m,并且 pi 发送给 pj 的消息序列中存在在 m 之前的消息 m′,则 pj 在 m 之前接收 m′。为了实现这些属性,ZooKeeper 使用 TCP(因此是 FIFO)通道进行通信。

2.3 Expected properties

预期属性

To guarantee that processes are consistent, there are a couple of safety properties to be satisfied by Zab. Additionally, for allowing multiple outstanding operations, we require primary order properties. To state these properties we first need some definitions.

为了确保过程是一致的,需要满足 Zab 的几个安全属性。此外,为了允许多个未完成的操作,我们需要主要顺序属性。 为了说明这些属性,我们首先需要一些定义。

In ZooKeeper’s crash-recovery model, if the primary process crashes, a new primary process needs to be elected. Since broadcast messages are totally ordered, we require at most one primary active at a time. So over time we get an unbounded sequence of primary processes ρ1ρ2 . . . ρe . . ., where ρe ∈ Π, and e is an integer called epoch, representing a period of time when ρe was the single primary in the ensemble. Process ρe precedes ρe′, denoted ρe ≺ ρe′, if e < e′.

在 ZooKeeper 的崩溃恢复模型中,如果主进程崩溃,则需要选举一个新的主进程。 由于广播消息是完全有序的,因此我们要求一次最多只有一个主进程处于活动状态。 因此,随着时间的推移,我们得到了一个无界的主进程序列 ρ1ρ2...ρe...,其中 ρe ∈ Π,e 是一个称为 epoch 的整数,表示 ρe 是集合中唯一的主进程的时间段。如果 e < e′,进程 ρe 在 epoch 之前,表示 ρe ≺ρe′。

Transactions are state changes that the primary propagates (“broadcasts”) to the ensemble, and are represented by a pair ⟨v, z⟩, where v is the new state and z is an identifier called zxid. Transactions are first proposed to a process by the primary, then delivered (“committed”) at a process upon a specific call to a delivery method.

事务是主进程向集合传播的状态变化(“广播”),由一个二元组 ⟨v, z⟩ 表示,其中 v 是新状态,z 是一个称为 zxid 的标识符。 事务首先由主进程向一个进程提出,然后在一个进程的的特定调用的交付方法上交付(“提交”)。

The following properties are necessary for consistency [12].

以下属性对于一致性是必要的 【12】。

-

Integrity: If some process delivers ⟨v, z⟩, then some process has broadcast ⟨v, z⟩.

完整性:如果某个进程传递了 ⟨v, z⟩,那么某个进程已经广播了 ⟨v, z⟩。 -

Total order: If some process delivers ⟨v, z⟩ before ⟨v′, z′⟩, then any process that delivers ⟨v′, z′⟩ must also deliver ⟨v, z⟩ before ⟨v′, z′⟩.

全局顺序性:如果某个进程在 ⟨v′, z′ ⟩ 之前传递了 ⟨v, z⟩,那么任何在 ⟨v′, z ′⟩ 之前传递⟨v′, z′〉 的进程也必须在 ⟨v′, z′″之前交付 ⟨v, z⟩。 -

Agreement: If some process pi delivers ⟨v, z⟩ and some process pj delivers ⟨v′, z′⟩,then either pi delivers ⟨v′, z′⟩ or pj delivers ⟨v, z⟩.

一致性:如果某些进程 pi 传递了 ⟨v, z⟩,而某些进程 pj 传递了 ⟨v′, z′〉,那么要么pi 传递了 ⟨v′,z′〉,要么 pj 传递了 ⟨ v, z⟩。

Primary order properties [12] are given below.

一级顺序属性【12】如下所示。

-

Local primary order: If a primary broadcasts ⟨v, z⟩ before it broadcasts ⟨v′,z′⟩,then a process that delivers ⟨v′, z′⟩ must have delivered ⟨v, z⟩ before ⟨v′,z′⟩.

局部主序:如果一个主广播了 ⟨v,z⟩,然后广播了 <v',z'>,那么传递 <v',z'>的进程必须在传递 ⟨v',z‘⟩ 之前传递了 ⟨v,z⟩。 -

Global primary order: Suppose a primary ρi broadcasts ⟨v, z⟩, and a primary ρj ≻ ρi broadcasts ⟨v′, z′⟩. If a process delivers both ⟨v, z⟩ and ⟨v′, z′⟩,then itmust deliver ⟨v, z⟩ before ⟨v′, z′⟩.

全局主序:假设主序ρi广播了⟨v, z⟩,主序ρj≻ρi广播了⟨v′, z′⟩。如果一个进程传递了⟨v, z⟩和⟨v′, z′⟩,那么它必须在传递⟨v′, z′⟩之前传递⟨v, z⟩。 -

Primary integrity: If a primary ρe broadcasts ⟨v, z⟩ and some process delivers⟨v′, z′⟩ which was broadcast by ρe′ ≺ ρe, then ρe must have delivered ⟨v′, z′⟩ before broadcasting ⟨v, z⟩.

主序完整性:如果主进程 ρe 广播了 ⟨v, z⟩,并且某个进程传递了 ⟨v′, z′ ⟩,该⟨v′, z′〉 由 ρe′ ≺ ρe 广播,则 ρe 必须在广播 ⟨v, z⟩ 之前传递了 ⟨v′,z′⟩。

Local primary order corresponds to FIFO order. Primary integrity guarantees that a primary has delivered transactions from previous epochs.

本地主顺序对应于FIFO顺序。主完整性确保主已传递来自先前时期的事务。

3 Atomic broadcast protocol

原子广播协议

In Zab, there are three possible (non-persistent) states a peer can assume: following, leading, or election. Whether a peer is a follower or a leader, it executes three Zab phases: (1) discovery, (2) synchronization, and (3)broadcast, in this order. Previous to Phase 1, a peer is in state election,when it executes a leader election algorithm to look for a peer to vote for becoming the leader. At the beginning of Phase 1, the peer inspects its vote and decides whether it should become a follower or a leader. For this reason, leader election is sometimes called Phase 0.

在 Zab 中,一个同伴可以处于三种可能的(非持续的)状态:跟随、领导或选举。无论一节点是跟随者还是领导者,它都会执行三个 Zab 阶段:(1)发现,(2)同步,(3)广播,顺序如下。在阶段 1 之前,一个节点处于选举状态,当它执行领导者选举算法以寻找一个同伴投票成为领导者时。在阶段 1 的开始,同伴检查其投票并决定它是否应该成为跟随者或领导者。因此,领导者选举有时被称为阶段 0。

The leader peer coordinates the phases together with the followers, and there should be at most one leader peer in Phase 3 at a time, which is also the primary process to broadcast messages. In other words, the primary is always the leader. Phases 1 and 2 are important for bringing the ensemble to a mutually consistent state, specially when recovering from crashes. They constitute the recovery part of the protocol and are critical to guarantee order of transactions while allowing multiple outstanding transactions. If crashes do not occur, peers stay indefinitely in Phase 3 participating in broadcasts,similar to the two phase commit protocol [9]. During Phases 1,2, and 3, peers can decide to go back to leader election if any failure or timeout occurs.

领导者peer与跟随者一起协调这些阶段,在阶段 3 中最多有一个领导者节点,这也是广播消息的主要进程。换句话说,主要进程始终是领导者。阶段 1 和2 对于将整个集合带入相互一致的状态非常重要,特别是在从崩溃中恢复时。它们构成了协议的恢复部分,对于在允许多个未完成事务的同时确保事务顺序至关重要。 如果不发生崩溃,则节点将无限期地停留在第3阶段,参与广播,类似于两阶段提交协议【9】。 在第1、2和3阶段,如果发生任何失败或超时,同伴可以决定返回领导者选举。

ZooKeeper clients are applications that use ZooKeeper services by connecting to at least one server in the ensemble. The client submits operations to the connected server, and if this operation implies some state change, then the Zab layer will perform a broadcast. If the operation was submitted to a follower, it is forwarded to the leader peer. If a leader receives the operation request, then it executes and propagates the state change to its followers. Read requests from the client are directly served by any ZooKeeper server. The client can choose to guarantee that the replica is up-to-date by issuing a sync request to the connected ZooKeeper server.

ZooKeeper客户端是应用程序,它们通过连接到集群中的至少一个服务器来使用ZooKeeper服务。 客户端将操作提交给连接的服务器,如果该操作涉及某些状态更改,则Zab层将执行广播。 如果将操作提交给跟随者,则将其转发给领导者。 如果领导者收到操作请求,则它将执行并将其状态更改传播给其跟随者。 客户端的读请求由任何ZooKeeper服务器直接提供服务。客户端可以通过向连接的 ZooKeeper 服务器发出同步请求来确保副本是最新的。

In Zab, transaction identifiers (zxid) are crucial for implementing total order properties. The zxid z of a transaction ⟨v, z⟩ is a pair ⟨e, c⟩, where e is the epoch number of the primary ρe that generated the transaction ⟨v, z⟩, and c is an integer acting as a counter. The notation z.epoch means e, and z.counter = c. The counter c is incremented every time a new transaction is introduced by the primary. When a new epoch starts – a new leader becomes active – c is set to zero and e is incremented from what was known to be the previous epoch. Since both e and c are increasing, transactions can be ordered by their zxid. For two zxids ⟨e, c⟩and ⟨e′, c′⟩, we write ⟨e, c⟩ ≺z ⟨e′, c′⟩ if e < e′ or if e = e′ and c < c′.

在 Zab 中,事务标识符(zxid)对于实现全序属性至关重要。 事务 ⟨v, z⟩ 的 zxid z 是一个元组 ⟨e, c⟩,其中 e 是生成事务 ⟨v, z⟩的主节点 ρe 的epoch数,c 是一个充当计数器的整数。 符号 z.epoch 意味着 e,z.counter = c。 计数器 c 每次由主节点引入一个新的事务时都会增加。 当一个新的epoch开始时——一个新的领导者变得活跃——c 被设置为零,e 从已知的前一个epoch开始递增。 由于 e 和 c 都在增加,可以通过它们的 zxid 对事务进行排序。 对于两个 zxid ⟨e, c⟩ 和 ⟨e′, c′〉,如果 e < e′ 或如果 e = e′ 和 c < c′,我们写成 ⟨e, c⟩ ≺ \(~z~⟨e′, c′⟩\)

There are four variables that constitute the persistent state of a peer, which are used during the recovery part of the protocol:

在协议的恢复部分中,有四个变量构成了peer的持久状态:

− history: a log of transaction proposals accepted;

− 历史:已接受的事务提议的日志;

− acceptedEpoch: the epoch number of the last NEWEPOCH message accepted;

− 已接受的Epoch:最新已接受的NEWEPOCH消息的Epoch编号;

− currentEpoch:the epoch number of the last NEWLEADER message accepted;

− 当前Epoch:最后接受的NEWLEADER消息的Epoch编号;

− lastZxid:zxid of the last proposal in the history;

−lastZxid:历史中的最后一个提议的zxid;

We assume some mechanism to determine whether a transaction proposal in the history has been committed in the peer’s ZooKeeper database. The variable names above follow the terminology of [18], while in [12] they are different: history of a peer f is hf, acceptedEpoch is f.p, currentEpoch is f.a, and lastZxid is f.zxid.

我们假设存在某种机制来确定历史中的某个事务提议是否已在peer的ZooKeeper数据库中提交。上面的变量名遵循【18】的术语,而在【12】中它们是不同的:peer的f的历史是hf,接受的Epoch是f.p,当前Epoch是f.a,lastZxid是f.zxid。

3.1 Phases of the protocol

协议的阶段

The four phases of the Zab protocol are described next.

Zab协议的四个阶段如下。

Phase 0: Leader election Peers are initialized in this phase, having state election. No specific leader election protocol needs to be employed, as long as the protocol terminates, with high probability, choosing a peer that is up and that a quorum of peers voted for. After termination of the leader election algorithm, a peer stores its vote to local volatile memory. If peer p voted for peer p′, then p′ is called the prospective leader for p. Only at the beginning of Phase 3 does a prospective leader become an established leader, when it will also be the primary process. If the peer has voted for itself, it shifts to state leading, otherwise it changes to state following.

阶段 0:领导者选举 阶段 0:领导者选举 在这一阶段,节点进行初始化,进行状态选举。 只要协议以高概率终止,选择一个处于活动状态的节点,并得到半数节点的投票,就不需要使用特定的领导者选举协议。 在领导者选举算法终止后,节点将其投票存储到本地易失性存储器中。 如果节点 p 投票给节点 p′,则节点 p′称为节点 p的潜在领导者。只有在阶段3的开始,潜在领导者才成为已建立的领导者,同时它也将成为主要进程。 如果节点投票给自己,则它切换到领导状态,否则它切换到跟随状态。

Phase 1: Discovery In this phase, followers communicate with their prospective leader, so that the leader gathers information about the most recent transactions that its followers accepted. The purpose of this phase is to discover the most updated sequence of accepted transactions among a quorum, and to establish a new epoch so that previous leaders cannot commit new proposals. The complete description of this phase is described in Algorithm 1.

阶段 1:发现在这一阶段,跟随者与其潜在领导者进行通信,以便领导者收集其跟随者接受的最新交易的信息。本阶段的目的是发现一组确认交易的最新序列,并建立一个新的epoch,以便之前的领导者不能提交新的提议。本阶段的完整描述见算法 1。

Algorithm 1: Zab Phase 1: Discovery.

At the beginning of this phase, a follower peer will start a leader-follower connection with the prospective leader. Since the vote variable of a follower corresponds to only one peer, the follower can connect to only one leader at a time. If a peer p is not in state leading and another process considers p to be a prospective leader, any leader-follower connection will be denied by p. Either the denial of a leader-follower connection or some other failure can bring a follower back to Phase 0.

在这个阶段开始时,一个跟随者节点将开始与潜在领导者建立领导者-跟随者连接。由于跟随者的投票变量仅对应于一个同伴,因此跟随者一次只能连接到一个领导者。如果一个节点 p不在领导状态,而另一个进程认为p是潜在领导者,则p将拒绝任何领导者-跟随者连接。领导者-跟随者连接的拒绝或某些其他失败可能会将跟随者带回到阶段0。

Phase 2: Synchronization The Synchronization phase concludes the recovery part of the protocol,synchronizing the replicas in the ensemble using the leader’s updated history from the previous phase. The leader communicates with the followers,proposing transactions from its history. Followers acknowledge the proposals if their own history is behind the leader’s history. When the leader sees acknowledgements from a quorum, it issues a commit message to them. At that point, the leader is said to be established, and not anymore prospective. Algorithm 2 gives the complete description of this phase.

阶段2:同步 同步阶段完成了协议的恢复部分,使用前一阶段领导者更新的历史记录来同步群集中的副本。 领导者与跟随者通信,从其历史记录中提出事务。 如果跟随者的历史记录落后于领导者的历史记录,则跟随者确认提议。 当领导者看到来自法定人数的确认时,它向他们发出提交消息。 在这一点上,领导者被认为已经建立,不再是潜在领导者。 算法2给出了这个阶段的完整描述。

Phase 3: Broadcast If no crashes occur, peers stay in this phase indefinitely,performing broadcast of transactions as soon as a ZooKeeper client issues a write request. At the beginning, a quorum of peers is expected to be consistent, and there can be no two leaders in Phase 3. The leader allows also new followers to join the epoch, since only a quorum of followers is enough for starting Phase 3. To catch up with other peers,incoming followers receive transactions broadcast during that epoch, and are included in the leader’s set of known followers.

第三阶段:广播 如果没有发生崩溃,节点们将无限期地停留在这个阶段,一旦ZooKeeper 客户端发出写请求,peer们就会立即执行事务的广播。 在开始时,预计节点们的多数派将保持一致,并且第三阶段中不能有两个领导者。 领导者还允许新跟随者加入当前的epoch,因为只有跟随者的多数派才足以启动第三阶段。 为了赶上其他节点,新加入的跟随者将在那个epoch接收广播的事务,并包括在领导者已知的跟随者集合中。

Since Phase 3 is the only phase when new state changes are handled, the Zab layer needs to notify the ZooKeeper application that it’s prepared for receiving new state changes. For this purpose, the leader calls ready(e) at the beginning of Phase 3, which enables the application to broadcast transactions.

由于第三阶段是处理新状态更改的唯一阶段,Zab 层需要通知ZooKeeper 应用程序,它已准备好接收新的状态更改。 为此,领导者在第三阶段的开始调用 ready(e),这使应用程序能够广播事务。

Algorithm 3 describes the phase.

算法 3 描述了该阶段。

Algorithms 1, 2, and 3 are apparently asynchronous and do not take into account possible peer crashes. To detect failures, Zab employs periodic heartbeat messages between followers and their leaders. If a leader does not receive heartbeats from a quorum of followers within a given timeout, it abandons its leadership and shifts to state election and Phase 0. A follower also goes to Leader Election Phase if it does not receive heartbeats from its leader within a timeout.

算法1、2和3显然是异步的,没有考虑到可能的节点崩溃。为了检测故障,Zab使用周期性心跳消息 追随者和他们的领导者之间。 如果一个领导者在给定的超时内没有从一定数量的追随者那里收到心跳,它将放弃其领导地位并转移到状态选举和第 0 阶段。 如果一个追随者在超时内没有从其领导者那里收到心跳,它也将进入领导者选举阶段。

3.2 Analytical results

分析结果

We briefly mention some formal properties that Zab satisfies, and their corresponding proofs were given in Junqueira et al. [11, 12]. The invariants are simple to show by inspecting the three algorithms, while claims are carefully demonstrated using the invariants.

我们简要介绍了Zab满足的一些形式属性,以及Junqueira等人 【11, 12】给出的相应证明。通过检查这三个算法,不变量是容易展示的,而断言则通过使用不变量进行了仔细的证明。

Invariant 1 [12] In Broadcast Phase, a follower F accepts a proposal ⟨e, ⟨v, z⟩⟩only if F.currentEpoch = e.

不变量 1 【12】 在广播阶段,一个跟随者 F 只有在 F.currentEpoch =e时才接受提议 ⟨e, ⟨v,z⟩⟩。

Invariant 2 [12] During the Broadcast Phase of epoch e, if a follower F has F.currentEpoch = e, then F accepts proposals and delivers transactions according to zxid order.

不变量2【12】在节点e的广播阶段,如果跟随者F的 currentEpoch = e,则F根据zxid顺序接受提议并传递事务。

Invariant 3 [12] During Phase 1, a follower F will not accept proposals from the leader of any epoch e′ < F.acceptedEpoch.

不变量3【12】在阶段1中,跟随者F不会接受来自任何epoch e′<F.acceptedEpoch的领导者的提议。

Invariant 4 [12] In Phase 1, an ACKEPOCH(F.currentEpoch, F.history,F.lastZxid) message does not alter, reorder, or lose transactions in F.history.In Phase 2, a NEWLEADER(e′, L.history) message does not alter, reorder, or lose transactions inL.history.

不变量 4 【12】 在第 1 阶段,ACKEPOCH(F.currentEpoch,F.history,F.lastZxid)消息不会更改、重排或丢失 F.history 中的事务。 在第 2 阶段,NEWLEADER(e′,L.history)消息不会更改、重排或将丢失 L.history 中的事务。

Invariant 5 [12] The sequence of transactions a follower F delivered while in Phase 3 of epoch F.currentEpoch is contained in the sequence of transactions broadcast by primary ρF.e, where F.e denotes the last epoch e such that F learned that e has been committed.

不变量 5 【12】 跟随者 F 在当前 epoch F.currentEpochs 的第 3 阶段交付的事务序列包含在主节点 ρF.e 广播的事务序列中,其中 F.e 表示最后一个 epoch e,使得 F 知道 e 已提交。

Claim 1 [11] For every epoch number e, there is at most one process that calls ready(e) in Broadcast Phase.

断言 1 【11】 对于每个 epoch 编号 e,广播阶段最多只有一个进程调用 ready(e)。

Claim 2 [12] Zab satisfies the properties from Section 2.3: broadcast integrity, agreement, total order, local primary order, global primary order,and primary integrity.

断言2 【12】 Zab满足第2.3节中的属性:广播完整性、一致性、全局顺序、本地主顺序、全局主顺序和主完整性。

Claim 3 [12] Liveness property: Suppose that a quorum Q of followers is up,the followers in Q have L as their prospective leader, L is up, and messages between a follower in Q and L are received in a timely fashion. If L proposes a transaction⟨e, ⟨v, z⟩⟩, then ⟨e, ⟨v, z⟩⟩ is eventually committed.

断言 3 【12】 存活性:假设一个追随者集合 Q 的追随者已经出现,Q 中的追随者将 L 作为他们的潜在领导者,L 已经出现,并且 Q 中的追随者和 L 之间的消息可以及时收到。如果 L 提议事务 ⟨e, ⟨v,z⟩⟩,则 ⟨e, ⟨v ,z⟩⟩ 最终会被提交。

4 Implementation

实现

Apache ZooKeeper is written in Java, and the version we have used for studying the implementation was 3.3.3 [8]. Version 3.3.4 is the latest stable version (to this date),but this has very little differences in the Zab layer. Recent unstable versions have significant changes, though.

Apache ZooKeeper是用Java编写的,我们用于学习实现的版本是3.3.3 【8】。3.3.4是最新的稳定版本(到目前为止),虽然最近的不稳定版本有重大变化,但这在Zab层中几乎没有差异。

Most of the source code is dedicated to ZooKeeper’s storage functions and client communication. Classes responsible for Zab are deep inside the implementation. As mentioned in Section 2.2, TCP connections are used to implement the bidirectional channels between peers in the ensemble. The FIFO order that TCP communication satisfies is crucial for the correctness of the broadcast protocol.

大多数源代码都专门用于ZooKeeper的存储函数和客户端通信。负责Zab的类位于实现的深处。如第2.2节所述,TCP连接用于在群集中节点之间实现双向通道。TCP通信满足的FIFO顺序对于广播协议的正确性至关重要。特别是,Phase 0的默认领导者选举算法与Phase 1的实现紧密耦合。

The Java implementation of Zab roughly follows Algorithms 1, 2, and 3. Several optimizations were added to the source code, which make the actual implementation look significantly different from what we have seen in the previous section. In particular, the default leader election algorithm for Phase 0 is tightly coupled with the implementation of Phase 1.

Zab的Java实现大致遵循算法1、2和3。源代码中添加了几个优化,这使得实际实现看起来与我们在前一节中看到的有很大不同。特别是,阶段0的默认领导者选举算法与阶段1的实现紧密耦合。

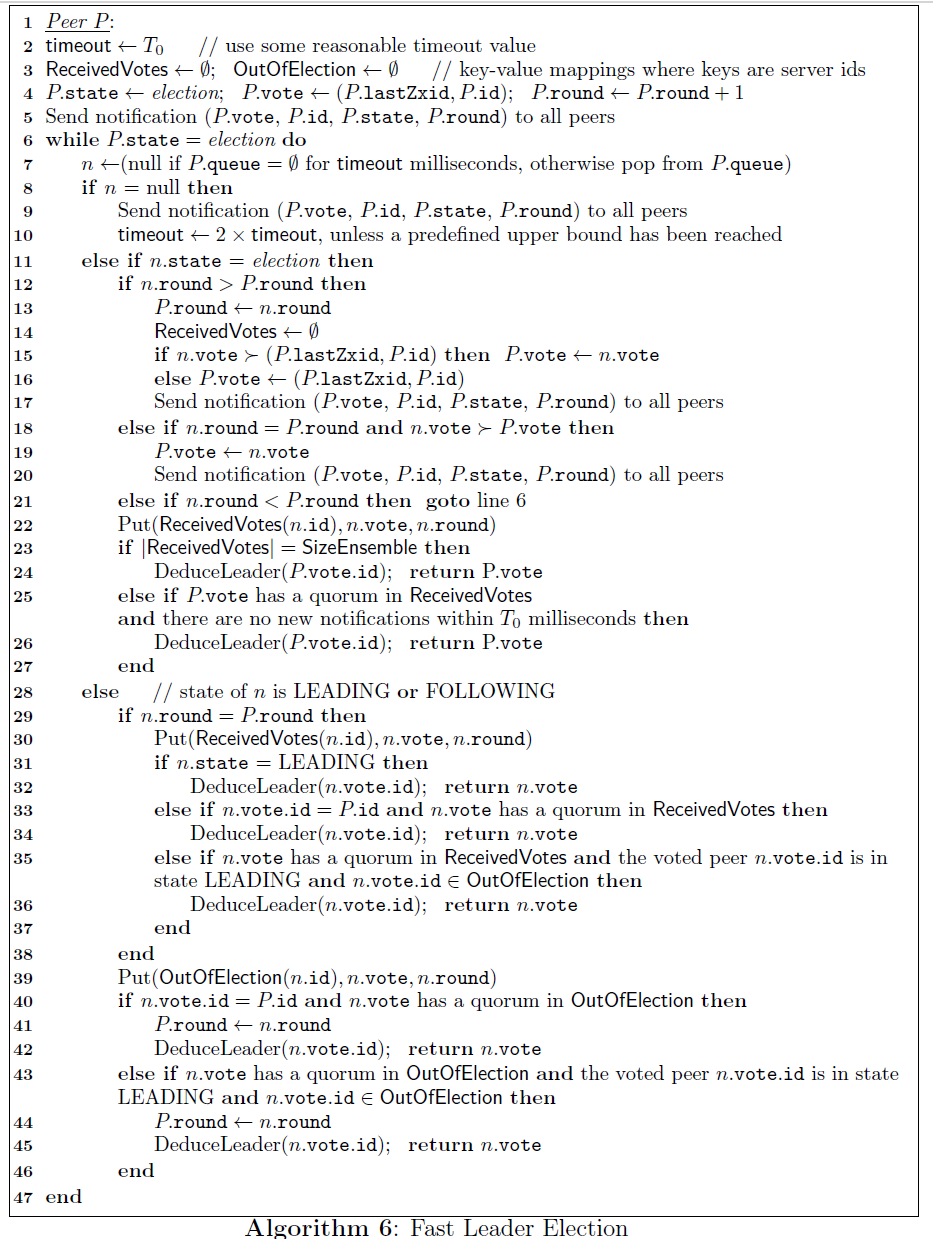

Fast Leader Election (FLE) is the name of the default leader election algorithm in the implementation. This algorithm employs an optimization: It attempts to elect as leader the peer that has the most up-to-date history from a quorum of processes. When such a leader is elected, in Phase 1 it will not need to communicate with followers to discover the latest history. Even though other leader election algorithms are supported by the implementation, in reality Phase 1 was modified to require that Phase 0 elects a leader with the most up-to-date history.

快速领导者选举(FLE)是实现中的默认领导者选举算法的名称。 该算法采用了一种优化方法:它试图从一组进程中选举具有最新历史记录的peer作为领导者。 当选举出这样的领导者时,在Phase 1中,它不需要与追随者通信以发现最新的历史记录。尽管其他领导者选举算法得到了实现的支持,但在现实中,Phase 1被修改为要求Phase 0选举具有最新历史记录的领导者。

In practice, since FLE covers the discovery responsibility of Phase 1, this phase has been neglected in version 3.3.3 (and also 3.3.4) of ZooKeeper. There is no clear distinction between Phases 1 and 2 in the implementation, so we refer to the combination of both as Recovery Phase. This phase comes after Phase 0, and assumes that the leader has the latest history in a quorum.Algorithm 4 is an approximate pseudocode of the Recovery Phase, and Figure 1 compares the implemented phases to Zab’s phases.

在实践中,由于FLE涵盖了Phase 1的发现责任,因此在ZooKeeper的3.3.3(和3.3.4)版本中忽略了该阶段。 在实现中,没有明确区分Phase 1和2,因此我们将两者结合称为恢复阶段。该阶段在阶段0之后,假设领导者具有最新的历史记录。算法4是恢复阶段的近似伪代码,图1将实现的阶段与Zab的阶段进行了比较。

Figure 1: Comparison betweens the phases of Zab protocol and the implemented pro-tocol.

图1:Zab协议的各个阶段与实际协议的比较。

The implemented Recovery Phase resembles more Phase 2 than Phase 1.Followers connect to the leader and send their last zxid, so the leader can decide how to synchronize the followers’ histories. However, the synchronization is done differently than in Phase 2: Followers can abort some outstanding transactions upon receiving the TRUNC message or accept newer proposals from the leader upon receiving the DIFF message. The implementation uses some special variables for performing a case-by-case synchronization: history.lastCommittedZxid is the zxid of the most recently committed transaction in history, and history.oldThreshold is the zxid of some committed transaction considered to be old enough in history.

实现的恢复阶段更像第二阶段,而不是第一阶段。跟随者连接到领导者并发送他们的最后一个 zxid,因此领导者可以决定如何同步跟随者的历史记录。但是,同步与第二阶段不同:跟随者可以在收到 TRUNC 消息时取消一些未完成的事务,或者在收到 DIFF 消息时接受领导者的新提议。 实现使用一些特殊变量来执行逐个同步:history.lastCommittedZxid是历史中最新提交的事务的zxid,而history.oldThreshold是被认为在历史中足够旧的某些已提交事务的zxid。

The purpose of this synchronization is to keep the replicas in a mutually consistent state [19]. In order to do so, committed transactions in any replica must be committed in all other replicas, in the same order.Furthermore, proposed transactions that should not be committed anymore must be abandoned so that no peer commits them. Messages SNAP and DIFF take care of the former case, while TRUNC is responsible for the latter.

这种同步的目的是保持副本处于相互一致的状态【19】。 为了做到这一点,任何副本中的已提交事务都必须以相同顺序在所有其他副本中提交。 此外,不再需要提交的提议事务必须被放弃,这样就不会有 peer 提交它们。 消息SNAP和DIFF处理前者,而TRUNC负责后者。

There are no analogous variables to acceptedEpoch and currentEpoch. Instead,the Algorithm derives the current epoch e from the zxid ⟨e, c⟩ of the latest transaction in its history. If a follower F attempts to connect to its prospective leader L which is not actually leading, L will deny connection and F will execute line 22 in Algorithm 4.

没有类似于acceptedEpoch和currentEpoch的变量。 相反,算法从历史中最新事务的zxid ⟨e,c⟩中推导出当前epoch e。如果一个追随者F试图连接到其实际上没有领导能力的潜在领导者L,L将拒绝连接,F将执行算法4中的第22行。

The implementation expects stronger post-conditions from the leader election phase than Zab’s description [12] does, see Section 4.1 below. FLE is undocumented,so we now focus on studying it in detail, including the postconditions that the Recovery Phase requires.

实现期望领导者选举阶段具有比Zab的描述【12】更强的后置条件,见下面的第4.1节。 FLE没有文档记录,因此我们现在专注于详细研究它,包括恢复阶段所需的后置条件。

4.1 Fast Leader Election

快速领导者选举

The main postcondition that Fast Leader Election attempts to guarantee for the subsequent Recovery Phase is that the leader will have in its history all committed transactions. This is supported by the assumption that the peer with the most recent proposed transaction must have also the most recent committed transaction. For performing the synchronization, Recovery Phase assumes this postcondition holds. If, however, the postcondition does not hold, a follower might have a committed transaction that the leader does not have. In that situation, the replicas would be inconsistent, and Recovery Phase would not be able to bring the ensemble to a consistent state, since the synchronization direction is strictly from leader to followers. To achieve the postcondition,FLE aims at electing a leader with highest lastZxid among a quorum.

Fast Leader Election 试图为后续的恢复阶段保证的主要后置条件是,领导者在其历史中具有所有已提交的事务。这是通过假设具有最近提议事务的peer也具有最近已提交的事务来支撑的。为了执行同步,恢复阶段假设该后置条件成立。但是,如果后置条件不成立,跟随者可能具有领导者不具有的已提交事务。在这种情况下,副本将是不一致的,恢复阶段将无法将整个系统带入一致状态,因为同步方向严格是从领导者到跟随者。为了实现后置条件,FLE旨在选举具有最高lastZxid的领导者。

In FLE, peers in the election state vote for other peers for the purpose of electing a leader with the latest history. Peers exchange notifications about their votes, and they update their own vote when a peer with more recent history is discovered. A local execution of FLE will terminate returning a vote for a single peer and then transition to Recovery Phase. If the vote was for the peer itself, it shifts to state leading (and following itself), otherwise it goes to state following. Any subsequent failures will cause the peer to go back to state election and restart FLE.Different executions of FLE are distinguished by a round number: Every time FLE restarts, the round number is incremented.

在FLE中,处于选举状态的节点为其他节点投票,以选举具有最新历史记录的leader。节点交换关于其投票的通知,当发现具有更新历史记录的节点时,它们更新自己的投票。FLE的本地执行将终止返回单个节点的投票,然后过渡到恢复阶段。 如果投票是对同伴本身的,它将切换到领导状态(并跟随自己),否则它将切换到跟随状态。 任何后面的失败都将导致同伴返回到选举状态并重新启动FLE。 FLE的不同执行由一个整数区分开来:每次FLE重新启动时,该整数都会增加。

Recall the set of peers Π = {p1, p2, . . . , pN}, where {1, 2, . . . , N} are the server identification numbers of the peers. A vote for a peer pi is represented by a pair (zi,i),where zi is the zxid of the latest transaction in pi. For FLE, votes are ordered by the“better” relation ≻ such that (zi, i) ⪰ (zj, j) if zi ≻ zj or if zi =zj and i ≥ j.

回想一下节点集合Π={p1,p2,...,pN},其中{1,2,...,N}是节点的服务器标识号码。 对节点pi的投票由一对(zi,i)表示,其中zi是pi中最新事务的zxid。 对于FLE,投票按“更好”关系排序≻使得(zi,i)⪰(zj,j),如果zi≻zj或如果zi=zj且i≥j。

Since each peer has a unique server id and knows the zxid of its latest transaction,all peers are totally ordered by the relation ≻. That is, if pi and pj are two peers with zxid and server id pairs (zi, i), (zj, j), respectively,then pi ⪰ pj if and only if(zi, i) ⪰ (zj, j).

由于每个节点都有一个唯一的服务器ID,并且知道其最新事务的zxid,因此所有节点都完全按照关系≻排序。也就是说,如果pi和pj是具有zxid和服务器ID对(zi,i)和(zj,j)的两个节点,那么pi⪰pj当且仅当(zi,i)⪰(zj,j)。

In the Recovery Phase, a follower pF is successfully connected to its prospective leader pL once it passes line 24in Algorithm 4. The objective of FLE is to eventually elect a leader pL which was voted by a quorum Q of followers, such that every follower pF ∈ Q eventually gets successfully connected to pL and had satisfied pF ⪯ pL when FLE was terminated.

在恢复阶段,一旦追随者pF通过了算法4中的第24行,它就会成功地连接到其潜在领导者pL。FLE的目标是最终选举出一个由大多数追随者集合Q的成员投票的领导者pL,使得每个追随者pF∈Q最终成功连接到pL,并且在FLE终止时满足pF⪯pL。

Nothing is written to disk during the execution of FLE, so it does not have a disk-persistent state. This also means that the FLE round number is not persistent.However, it uses some variables known to be persistent, such as lastZxid. The non-persistent variables important to FLE are: vote, identification number id, state ∈{election, leading, following},current round ∈ Z+, and queue of received notifications. A notification is a tuple (vote, id, state, round) to be sent to other peers with information about the sender peer.

在FLE执行期间,不会将任何内容写入磁盘,因此它没有磁盘持久状态。这也意味着FLE的执行次数不是持久的。然而,它使用一些已知是持久的变量,例如lastZxid。对 FLE 重要的非持久变量是:vote、identification number id、state ∈{election、leading、following}、current round ∈ Z+、以及收到的通知队列。通知是一个元组(vote、id、state、round),用于向其他节点发送有关发送者节点的信息。

Algorithm 6 gives a thorough description of Fast Leader Election, which roughly works as follows. Each peer knows the IP addresses of all other peers, and knows the total number of peers,SizeEnsemble. A peer starts by voting for itself, sends notifications of its vote to all other peers, and then waits for notifications to arrive. Upon receiving a notification, it will be dealt by the current peer according to the state of the peer that sent the notification. If the state was election, the current peer updates its view of the other peers’ votes, and updates its own vote in case the received vote is better. Notifications from previous rounds are ignored. If the state of the sender peer was not election, the current peer updates its view of follower-leader relationships of peers outside leader election phase. In either case, when the current peer detects a quorum of peers with a common vote, it returns its final vote and decides to be a leader or a follower.

算法 6 对快速领导者选举进行了全面的描述,其大致工作原理如下。 每个节点都知道所有其他节点的 IP 地址,并知道总同伴数 SizeEnsemble。 一个节点首先为自己投票,将其投票通知发送给所有其他节点,然后等待通知到达。 在收到通知后,当前节点将根据发送通知的节点的状态进行处理。 如果状态为 election,则当前节点将更新其对其他节点投票的view,并在收到优先级更高的投票的情况下更新其自己的投票。忽略来自上一轮的通知。如果发送者的状态不是选举,则当前节点更新其对领导者选举阶段之外节点的跟随者-领导者关系的view。在任何情况下,当当前节点检测到具有共同投票的同伴的人数过半时,它返回其最终投票并决定成为领导者或跟随者。

Some subroutines necessary for Fast Leader Election are:

FLE一些必要的选举子流程是:

− DeduceLeader(id): sets the state of the current peer to LEADING in case id is equal to its own server id, otherwise sets the peer’s state to FOLLOWING.

DeduceLeader(id):如果id等于其自己的服务器id,则将当前节点的状态设置为LEADING,否则将节点的状态设置为FOLLOWING。

− Put(Table(id), vote, round): in the key-value mapping Table, sets the value of the entry with key id to (vote, round, version), where version is a positive integer i indicating that vote is the i-th vote of server id during its current election round. Supposing (v, r, i) was the previous value of the entry id (initially(v, r, i) = (⊥, ⊥, ⊥)), version := 1 if r ≠ round, and version := i+1 otherwise.

Put(Table(id),vote,round):在键值映射表中,将键为id的条目的值设置为(vote,round,version),其中version是一个正整数i,表示vote是服务器id在当前的一轮选举中的第i票。假设(v,r,i)是条目id的先前值 (initially(v, r, i) = (⊥, ⊥, ⊥)),如果r≠round,则version=1,否则version := i+1。

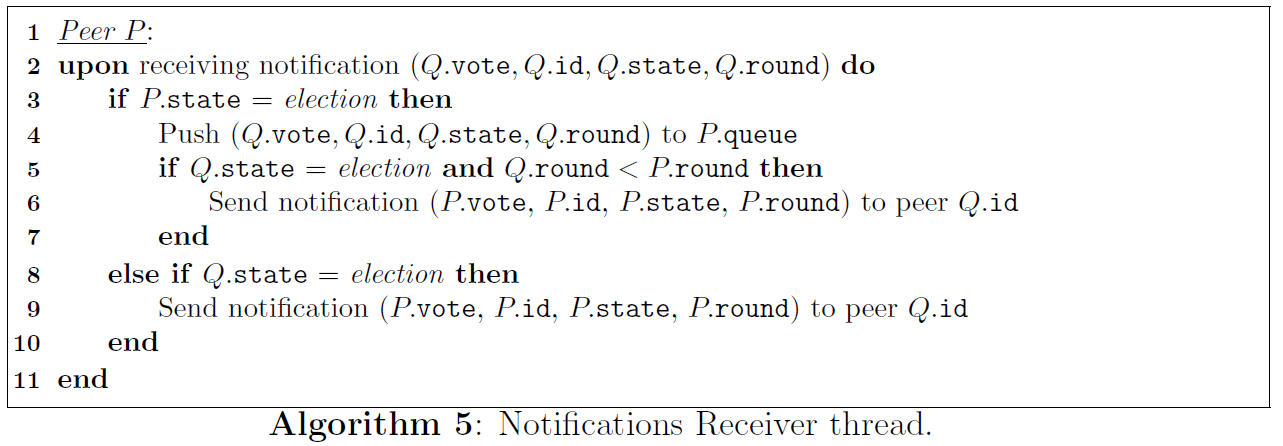

− Notifications Receiver, a thread which is run in parallel to the protocol,and described by the pseudocode of Algorithm 5. It receives notifications from a peer Q, forwards them to FLE through a queue, and sends back to Q a notification about the current peer’s vote.

通知接收器,一个与协议并行运行的线程,由算法5的伪代码描述,它从节点Q接收通知并向节点Q发送当前关于节点投票的通知,通知接收器通过队列将这些通知发送给FLE。

Algorithm 5: Notifications Receiver thread.

算法5:通知接收器线程。

4.2 Problems with the implemented protocol

实现协议的问题

Some problems have emerged from the implemented protocol due to differences from the designed protocol. We will briefly consider two bugs in this section.

由于与设计协议的差异,实现协议出现了一些问题。 在本节中,我们将简要考虑两个错误。

As mentioned, the implementation up to version 3.3.3 has not included epoch variables acceptedEpoch and currentEpoch. This omission has generated problems [5](issue ZOOKEEPER-335 in Apache’s issue tracking system) in a production version and was noticed by many ZooKeeper clients. The origin of this problem is at the beginning of Recovery Phase (Algorithm 4 line 2), when the leader increments its epoch(contained in lastZxid) even before acquiring a quorum of successfully connected followers (such leader is called false leader). Since a follower goes back to FLE if its epoch is larger than the leader’s epoch (line 25), when a false leader drops leadership and becomes a follower of a leader from a previous epoch, it finds a smaller epoch (line25) and goes back to FLE. This behavior can loop, switching from Recovery Phase to FLE.

正如前面提到的,实现版本 3.3.3 之前没有包括 epoch 变量acceptedEpoch 和 currentEpoch。 这个疏忽在生产版本中产生了问题【5】(Apache 的问题跟踪系统中的 ZOOKEEPER-325 问题),并被许多 ZooKeeper 的客户注意到了。 这个问题的根源在于恢复阶段的开始(算法 4 的第 2 行),当领导者甚至在获得成功连接的跟随者的多数票之前(这样的领导者被称为假领导者)就增加其 epoch(包含在 lastZxid 中)时。 由于跟随者的 epoch 大于领导者的 epoch(第 25 行),当假领导者放弃领导地位并成为来自以前 epoch 的领导者的跟随者时,它会发现较小的 epoch(第 25 行)并回到 FLE。这种行为可以循环,从恢复阶段切换到FLE。

Consequently, using lastZxid to store the epoch number, there is no distinction between a tried epoch and a joined epoch in the implementation. Those are the respective purposes for acceptedEpoch and currentEpoch, hence the omission of them render such problems. These variables have been properly inserted in recent (unstable)ZooKeeper versions to fix the problems mentioned above.

因此,使用lastZxid来存储时代号,在实现中尝试的epoch和当前加入的epoch之间没有区别。 这些是acceptedEpoch和currentEpoch的相对目的,因此省略它们会导致这些问题。 这些变量在最近的(不稳定的)ZooKeeper版本中已经正确纳入,以修复上述问题。

Another problem of the implementation is related to abandoning follower proposals in the Recovery Phase, through TRUNC messages. Algorithm 4 assumes that the condition z ≻ L.history.lastCommittedZxid (on line 11) is necessary and sufficient for determining follower proposals ⟨v, z⟩ to be abandoned. However, there might be proposals that need to be abandoned but do not satisfy that condition. The bug was reported in the issue ZOOKEEPER-1154 [6], and we mention a scenario where it happens.

另一个实现问题与在恢复阶段通过TRUNC消息丢弃跟随者提议有关。 算法4假定条件z≻L.history.lastCommittedZxid(第11行)对于确定要放弃的跟随者提议⟨v, z⟩是必要和充分的。 然而,可能存在需要放弃但不满足该条件的提议。 错误在 ZOOKEEPER-1054 【6】 问题中进行了报告,我们提到了这种情况。

Suppose Π = {p1, p2, p3}, all peers are in Broadcast Phase and synchronized to the latest (committed) transaction with zxid ⟨e =1, c = 3⟩, and p1 is the leader. A new proposal with zxid ⟨1, 4⟩ is issued by the leader p1 (Algorithm 3 line 3), but this gets accepted only by p1 since the whole ensemble Π crashes after p1 accepts⟨1, 4⟩ and before {p2, p3}receive the proposal. Then, {p2, p3} restart and proceed to FLE, while p1 remains down. From FLE, p2 becomes the leader supported by the quorum {p2, p3}.At the beginning of Recovery Phase, p2 sets the epoch to 2(p2.lastZxid = ⟨2, 0⟩), completes Recovery Phase, then in Broadcast Phase a new proposal with zxid ⟨2, 1⟩ gets accepted by the quorum, then committed. At that point, the leader p2 has p2.history.lastCommittedZxid =⟨2, 1⟩ and (for example) p2.history.oldThreshold = ⟨1, 1⟩. Soon after that, p1 is up again, it performs FLE to discover that p2 is the leader with a quorum in Broadcast Phase, then in Recovery Phase p1 sends to the leader its FOLLOWERINFO(p1.lastZxid = ⟨1, 4⟩). The leader p2 sends a DIFF to follower p1 since ⟨1, 4⟩ ≺ p2.history.lastCommittedZxid and p2.history.oldThreshold ≺ ⟨1, 4⟩.

假设 Π= {p1,p2,p3},所有peer都处于广播阶段,并已同步到具有zxid ⟨e = 2,c =3⟩的最新(提交)事务,并且p1是领导者。 一个具有zxid ⟨1,4⟩的新提议由领导者p1发出(算法3行3),但只有p1接受 ⟨1,4⟩,因为整个集合 Π 在p1接受 ⟨1,4⟩后崩溃,而在{p2,p3}接收提议之前。 然后,{p2,p3}重新启动并继续FLE,而p1仍然处于关闭状态。 从FLE开始,p2成为由大多数节点 {p2,p3}支持的领导者。在恢复阶段的开始,p2将epoch置为2(p2.lastZxid= ⟨2,0⟩),完成恢复阶段,然后在广播阶段,一个新的具有zxid ⟨2,1⟩的提议被大多数节点接受,然后提交。 在这一点上,领导者p2具有p2.history.lastCommittedZxid = ⟨2,1⟩,并且(例如)p2.history.oldThreshold = ⟨1,1⟩。不久之后,p1再次上线,它执行FLE以发现p2是Broadcast Phase中具有quorum的领导者,然后在恢复阶段中,p1向领导者发送FOLLOWERINFO(p1.lastZxid=⟨1,4⟩)。领导者p2向跟随者p1发送DIFF,因为⟨1,4⟩≺p2.history.lastCommittedZxid和p2.history.oldThreshold≺⟨1,4⟩。

In that scenario, the leader should have sent either TRUNC to abort the follower’s uncommitted proposal ⟨1, 4⟩, or SNAP to make the follower’s database state exactly reflect the leader’s database. The latter option is what ZooKeeper developers have decided to adopt to fix the bug in the protocol.

在这种情况下,领导者应该发送TRUNC以放弃跟随者的未提交提议⟨1,4⟩,或者SNAP以使跟随者的数据库状态完全反映领导者的数据库。后者是ZooKeeper开发人员决定采用的选项,以修复协议中的错误。

5 Conclusion

结论

The ZooKeeper service has been used in many cloud computing infrastructures of organizations such as Yahoo! [7], Facebook [1], 101tec [7], RackSpace [7], AdroitLogic [7],deepdyve [7], and others. Since it assumes key responsibilities in cloud software stacks,it is critical that ZooKeeper performs reliably. In particular, given the dependency of ZooKeeper on Zab, the implementation of this protocol should always satisfy the properties mentioned in Section 2.3.

许多组织的云计算基础设施,如雅虎【7】、Facebook【1】、101tec【7】、RackSpace【7】、AdroitLogic【7】、deepdyve【7】等,都使用了ZooKeeper服务。由于它在云软件堆栈中承担着关键责任,因此ZooKeeper的性能至关重要。特别是,由于ZooKeeper依赖于Zab,因此该协议的实现应始终满足第2.3节中提到的属性。

Its increasing use has demonstrated how it has been able to fulfill its correctness objectives. However, another important demand for ZooKeeper is performance, such as low latency and high throughput. Reed and Junqueira [19] affirm that good performance has been key for its wide adoption [12]. Indeed,performance requirements have significantly changed the atomic broadcast protocol, for instance, through Fast Leader Election and Recovery Phase.

它的日益普及表明,它已经能够实现其正确性目标。 然而,ZooKeeper的另一个重要需求是性能,例如低延迟和高吞吐量。 Reed和Junqueira【19】证实,良好的性能是其广泛采用的关键【12】。 的确,性能要求极大地改变了原子广播协议,例如通过快速领导者选举和恢复阶段。

The adoption of optimizations in the protocol has not been painless, as seen with issues [5] and [6]. Some optimizations have been reverted in order to maintain correctness, such as the absence of variables acceptedEpoch and currentEpoch. Even though bugs are being actively fixed, experience shows us how distributed coordination problems must be dealt with caution. In fact, the sole purpose of ZooKeeper is to properly handle those problems [8]:

协议中采用优化的过程并非一帆风顺,如问题【5】和【6】所示。为了保持正确性,一些优化已被收纳,例如缺少变量acceptedEpoch和currentEpoch。尽管正在积极修复错误,但经验表明,我们必须谨慎处理分布式协调问题。事实上,ZooKeeper的唯一目的是正确处理这些问题【8】:

ZooKeeper is a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. All of these kinds of services are used in some form or another by distributed applications. Each time they are implemented there is a lot of work that goes into fixing the bugs and race conditions that are inevitable. Because of the difficulty of implementing these kinds of services,applications initially usually skimp on them, which make them brittle in the presence of change and difficult to manage. Even when done correctly,different implementations of these services lead to management complexity when the applications are deployed.

ZooKeeper是一个集中式服务,用于维护配置信息、命名、提供分布式同步以及提供组服务。所有这些服务都以某种形式被分布式应用程序使用。每次实现这些服务时,都需要投入大量精力来修复不可避免的错误和竞态条件。由于实现这些服务具有困难性,应用程序最初通常会省略它们,这使它们在出现变化时变得脆弱,并且难以管理。即使正确实现,这些服务的不同实现也会导致应用程序部署时的管理复杂性。

ZooKeeper’s development, however, has also experienced these quoted problems. Some of these problems came from the difference between the implemented protocol and the published protocol from Junqueira et al.[12].Apparently, this difference has existed since the beginning of the development, which suggests early optimization attempts. Knuth [13] has long ago mentioned the dangers of optimization:

然而,ZooKeeper的发展也经历了这些问题。 其中一些问题来自Junqueira等人【12】发布的协议与实际实现的协议之间的差异。 显然,这种差异自开发之初就存在,这表明早期进行了优化尝试。 Knuth【13】早就提到了优化的危险:

We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil. Yet we should not pass up our opportunities in that critical 3%. A good programmer will not be lulled into complacency by such reasoning, he will be wise to look carefully at the critical code; but only after that code has been identified.

我们应该忘记小的效率,大约97%的时间:过早的优化是万恶之源。 然而,我们不应该错过那关键的3%的机会。 一个好的程序员不会被这样的推理所迷惑而自满,他会明智地仔细检查关键代码;但只有在确认了代码之后。

It is not a trivial task to achieve correctness in solutions for distributed coordination problems, and high performance requirements should not allow the sacrifice of correctness properties.

在分布式协调问题的解决方案中实现正确性并非易事,高性能要求不应牺牲正确性。

References

参考文献

[1] Dhruba Borthakur, Jonathan Gray, Joydeep Sen Sarma, Kannan Muthukkarup-pan, Nicolas Spiegelberg, Hairong Kuang, Karthik Ranganathan, Dmytro Molkov,Aravind Menon, Samuel Rash, Rodrigo Schmidt, and Amitanand S. Aiyer. ApacheHadoop goes realtime at Facebook. In Timos K. Sellis, Ren´ee J. Miller, Anasta-sios Kementsietsidis,and Yannis Velegrakis, editors, SIGMOD Conference, pages1071–1080.ACM, 2011. ISBN 978-1-4503-0661-4.

[2] Michael Burrows. The Chubby lock service for loosely-coupled distributed systems.In OSDI, pages 335–350. USENIX Association, 2006.

[3] Tushar Deepak Chandra and Sam Toueg. Unreliable failure detectors for reliabledistributed systems. J. ACM, 43(2):225–267, 1996.

[4] Xavier Defago, Andre Schiper, and Peter Urban. Total order broadcast and mul-

ticast algorithms: Taxonomy and survey. ACM Comput. Surv., 36(4):372-421,2004.

[5] The Apache Software Foundation. Apache Jira issue ZOOKEEPER-335,March2009. URL https://issues.apache.org/jira/browse/ZOOKEEPER-335.

[6] The Apache Software Foundation. Apache Jira issue ZOOKEEPER-1154,August2011. URL https://issues.apache.org/jira/browse/ZOOKEEPER-1154.

[7] The Apache Software Foundation. Wiki page: Applications and organiza-tions using ZooKeeper, January 2012. URL http://wiki.apache.org/hadoop/ZooKeeper/PoweredBy.

[8] The Apache Software Foundation. Apache Zookeeper home page,January 2012.URL http://zookeeper.apache.org/.

[9] Jim Gray. Notes on data base operating systems. In Operating Systems,volume 60of Lecture Notes in Computer Science, pages 393–481. Springer Berlin / Heidelberg,1978.

[10] Patrick Hunt, Mahadev Konar, Flavio P. Junqueira, and Benjamin Reed.ZooKeeper: Wait-free coordination for internet-scale systems. In Proceedings ofthe 2010 USENIX conference on USENIX annual technical conference, USENIX-ATC’10, pages 11–11. USENIX Association,2010.

[11] Flavio P. Junqueira, Benjamin C. Reed, and Marco Serafini. Dissecting Zab.Yahoo! Research, Sunnyvale, CA, USA, Tech. Rep. YL-2010-007, 122010.

[12] Flavio P. Junqueira, Benjamin C. Reed, and Marco Serafini. Zab: High-performance broadcast for primary-backup systems. In DSN, pages 245–256. IEEE,2011. ISBN 978-1-4244-9233-6.

[13] Donald E. Knuth. Structured programming with go to statements. ACM Comput.Surv., 6(4):261–301, 1974.

[14] Leslie Lamport. The Part-Time Parliament. ACM Trans. Comput. Syst.,16(2):133–169, 1998.

[15] Leslie Lamport. Paxos made simple. ACM SIGACT News, 32(4):18–25,2001.

[16] Parisa Jalili Marandi, Marco Primi, Nicolas Schiper, and Fernando Pedone. RingPaxos: A high-throughput atomic broadcast protocol. In DSN,pages 527–536.IEEE, 2010. ISBN 978-1-4244-7501-8.

[17] Cristian Mart´ın and Mikel Larrea. A simple and communication-efficient Omegaalgorithm in the crash-recovery model. Inf. Process. Lett.,110(3):83–87, 2010.

[18] Benjamin Reed. Apache’s Wiki page of a Zab documentation, January 2012. URLhttps://cwiki.apache.org/confluence/display/ZOOKEEPER/Zab1.0.

[19] Benjamin Reed and Flavio P. Junqueira. A simple totally ordered broadcast pro-tocol. Proceedings of the 2nd Workshop on Large-Scale Distributed Systems andMiddleware LADIS 08, page 1, 2008.