Other articles in the big data security series:

https://www.cnblogs.com/bainianminguo/p/12548076.html ----------- Installing Kerberos

https://www.cnblogs.com/bainianminguo/p/12548334.html ----------- Kerberos authentication for Hadoop

https://www.cnblogs.com/bainianminguo/p/12548175.html ----------- Kerberos authentication for ZooKeeper

https://www.cnblogs.com/bainianminguo/p/12584732.html ----------- Kerberos authentication for Hive

https://www.cnblogs.com/bainianminguo/p/12584880.html ----------- Search Guard authentication for Elasticsearch

https://www.cnblogs.com/bainianminguo/p/12639821.html ----------- Kerberos authentication for Flink

https://www.cnblogs.com/bainianminguo/p/12639887.html ----------- Kerberos authentication for Spark

This post in the big data security series covers configuring Kerberos for Flink.

I. Installing Flink

1. Extract the installation package

```bash
tar -zxvf flink-1.8.0-bin-scala_2.11.tgz -C /usr/local/
```

2. Rename the installation directory

```
[root@cluster2-host1 local]# mv flink-1.8.0/ flink
```

3. Edit the environment variable file (/etc/profile)

```bash
export FLINK_HOME=/usr/local/flink
export PATH=${PATH}:${FLINK_HOME}/bin
```

```
[root@cluster2-host1 data]# source /etc/profile
[root@cluster2-host1 data]# echo $FLINK_HOME
/usr/local/flink
```
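If the profile is edited by a script on several nodes, it helps to make the change idempotent so that re-running it does not append duplicate exports. The following is a minimal sketch, assuming bash; the function name `add_flink_env` is hypothetical, and the profile path is passed in as a parameter (the article edits /etc/profile as root).

```bash
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): append the FLINK_HOME/PATH exports
# to a profile file only if they are not already there.
add_flink_env() {
  local profile="$1"
  local flink_home="${2:-/usr/local/flink}"
  # Skip if an "export FLINK_HOME=" line is already present in the file
  if ! grep -q '^export FLINK_HOME=' "$profile" 2>/dev/null; then
    {
      echo "export FLINK_HOME=${flink_home}"
      # Single quotes keep ${PATH} and ${FLINK_HOME} unexpanded in the file
      echo 'export PATH=${PATH}:${FLINK_HOME}/bin'
    } >> "$profile"
  fi
}

# Usage (as root): add_flink_env /etc/profile /usr/local/flink && source /etc/profile
```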

4. Edit the Flink configuration files

```
[root@cluster2-host1 conf]# vim flink-conf.yaml
[root@cluster2-host1 conf]# pwd
/usr/local/flink/conf
```

```yaml
jobmanager.rpc.address: cluster2-host1
```

Edit the slaves file:

```
[root@cluster2-host1 conf]# vim slaves
[root@cluster2-host1 conf]# pwd
/usr/local/flink/conf
```

```
cluster2-host2
cluster2-host3
```

Edit the masters file:

```
[root@cluster2-host1 bin]# cat /usr/local/flink/conf/masters
cluster2-host1
```
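The three edits above (jobmanager.rpc.address, slaves, masters) all describe the same topology, so they can be written in one pass. This is a sketch only, assuming bash and the plain one-host-per-line format used in the article; the function name `write_cluster_files` is hypothetical and the conf directory is a parameter (here it would be /usr/local/flink/conf).

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): write masters, slaves and the
# jobmanager.rpc.address entry for a given conf directory.
# Arguments: CONF_DIR MASTER_HOST WORKER_HOST...
write_cluster_files() {
  local conf_dir="$1" master="$2"
  shift 2
  # masters: the JobManager host
  printf '%s\n' "$master" > "${conf_dir}/masters"
  # slaves: one TaskManager host per line (the remaining arguments)
  printf '%s\n' "$@" > "${conf_dir}/slaves"
  # Replace any existing jobmanager.rpc.address line, keep all other settings
  local yaml="${conf_dir}/flink-conf.yaml"
  touch "$yaml"
  grep -v '^jobmanager\.rpc\.address:' "$yaml" > "${yaml}.tmp" || true
  echo "jobmanager.rpc.address: ${master}" >> "${yaml}.tmp"
  mv "${yaml}.tmp" "$yaml"
}

# Usage: write_cluster_files /usr/local/flink/conf cluster2-host1 cluster2-host2 cluster2-host3
```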

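Edit yarn-site.xml to raise the virtual-to-physical memory ratio (the default is 2.1), so that NodeManagers do not kill Flink containers for exceeding their virtual-memory limit:

```xml
<property>
  <name>yarn.nodemanager.vmem-pmem-ratio</name>
  <value>5</value>
</property>
```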

5. Create the flink user (the `-g flink` option requires that a flink group already exists, for example one created with `groupadd flink`)

```
[root@cluster2-host3 hadoop]# useradd flink -g flink
[root@cluster2-host3 hadoop]# passwd flink
Changing password for user flink.
New password:
BAD PASSWORD: The password fails the dictionary check - it is based on a dictionary word
Retype new password:
```

6. Change the owner and group of the Flink installation directory

```
[root@cluster2-host3 hadoop]# chown -R flink:flink /usr/local/flink/
```

7. Start Flink to verify the installation

```
[root@cluster2-host1 bin]# ./start-cluster.sh
Starting cluster.
[INFO] 1 instance(s) of standalonesession are already running on cluster2-host1.
Starting standalonesession daemon on host cluster2-host1.
Starting taskexecutor daemon on host cluster2-host2.
Starting taskexecutor daemon on host cluster2-host3.
```

Check the processes:

```
[root@cluster2-host1 bin]# jps
10400 Secur
30817 StandaloneSessionClusterEntrypoint
12661 ResourceManager
12805 NodeManager
4998 QuorumPeerMain
30935 Jps
2631 NameNode
```

Open the web UI:

```
http://10.87.18.34:8081/#/overview
```

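Stop Flink (for example with `./stop-cluster.sh`). The steps above started Flink in standalone mode; the following steps start it in YARN session mode instead.

Copy the Hadoop dependency jar into Flink's lib directory:

```bash
scp flink-shaded-hadoop2-uber-2.7.5-1.8.0.jar /usr/local/flink/lib/
```

Start a YARN session. Here `-n 2` requests two TaskManager containers, `-s 6` gives each TaskManager six slots, `-jm` and `-tm` set the JobManager and TaskManager memory in MB, `-nm` sets the YARN application name, and `-d` runs the session detached:

```bash
./yarn-session.sh -n 2 -s 6 -jm 1024 -tm 1024 -nm test -d
```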

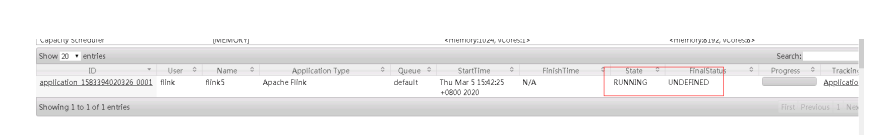

Check the YARN web UI.

II. Configuring Kerberos for Flink

1. Create the Kerberos principals and keytab file for Flink

```
kadmin.local: addprinc flink/cluster2-host1
kadmin.local: addprinc flink/cluster2-host2
kadmin.local: addprinc flink/cluster2-host3
```

```
kadmin.local: ktadd -norandkey -k /etc/security/keytab/flink.keytab flink/cluster2-host1
kadmin.local: ktadd -norandkey -k /etc/security/keytab/flink.keytab flink/cluster2-host2
kadmin.local: ktadd -norandkey -k /etc/security/keytab/flink.keytab flink/cluster2-host3
```
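With more nodes, typing the `addprinc`/`ktadd` pair per host gets repetitive. The sketch below, assuming bash, only generates the commands; the function name is hypothetical. One deliberate change from the article: it uses `addprinc -randkey` instead of an interactive `addprinc`, so no password prompt is needed when the commands are fed to `kadmin.local -q` non-interactively. `ktadd -norandkey` then exports the existing random key unchanged.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print one addprinc/ktadd pair per host
# for the flink/<host> principals. Arguments: KEYTAB HOST...
gen_flink_kadmin_cmds() {
  local keytab="$1"
  shift
  local host
  for host in "$@"; do
    # -randkey avoids the interactive password prompt of plain addprinc
    echo "addprinc -randkey flink/${host}"
    # -norandkey exports the current key instead of generating a new one
    echo "ktadd -norandkey -k ${keytab} flink/${host}"
  done
}

# Usage on the KDC host, as root:
#   gen_flink_kadmin_cmds /etc/security/keytab/flink.keytab \
#       cluster2-host1 cluster2-host2 cluster2-host3 |
#     while read -r cmd; do kadmin.local -q "$cmd"; done
```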

2. Copy the keytab file to the other nodes

```
[root@cluster2-host1 bin]# scp /etc/security/keytab/flink.keytab root@cluster2-host2:/usr/local/flink/flink.keytab
100% 1580 1.5KB/s 00:00
[root@cluster2-host1 bin]# scp /etc/security/keytab/flink.keytab root@cluster2-host3:/usr/local/flink/flink.keytab
```
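Because the keytab is copied by root after the `chown -R flink:flink` step, the copied file is most likely owned by root, while the YARN session later runs as the flink user. A sketch of the copy loop that also hands the file to flink is shown below, assuming bash, passwordless root SSH, and a flink user on every target host. The function names and the `DRY_RUN` switch are hypothetical; with `DRY_RUN` set, the commands are only printed. cluster2-host1 also needs the file at this path (its log in step 4 reads /usr/local/flink/flink.keytab), so it can be included in the host list.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): copy the keytab to each host and make it
# readable only by the flink user. Set DRY_RUN=1 to print instead of run.
DRY_RUN="${DRY_RUN:-}"

run() {
  if [ -n "$DRY_RUN" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Arguments: SOURCE_KEYTAB DEST_PATH HOST...
distribute_keytab() {
  local src="$1" dest="$2"
  shift 2
  local host
  for host in "$@"; do
    run scp "$src" "root@${host}:${dest}"
    # Owner flink, read-only for the owner
    run ssh "root@${host}" "chown flink:flink ${dest} && chmod 400 ${dest}"
  done
}

# Usage:
#   distribute_keytab /etc/security/keytab/flink.keytab /usr/local/flink/flink.keytab \
#       cluster2-host1 cluster2-host2 cluster2-host3
```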

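3. Edit the Flink configuration file (flink-conf.yaml). On each node, set the principal to that node's own principal: the snippet below uses flink/cluster2-host3, while the log in the next step shows cluster2-host1 logging in as flink/cluster2-host1.

```yaml
security.kerberos.login.use-ticket-cache: true
security.kerberos.login.keytab: /usr/local/flink/flink.keytab
security.kerberos.login.principal: flink/cluster2-host3
yarn.log-aggregation-enable: true
```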
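Since only the principal differs between nodes, the Kerberos block can be written per node by a small script. This is a minimal sketch, assuming bash and that each node's host name matches its principal (for example via `hostname -s`, which is an assumption about these hosts); the function name is hypothetical. It manages only the three `security.kerberos.login.*` keys and leaves every other line, including `yarn.log-aggregation-enable`, untouched.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): (re)write the Kerberos login settings in
# flink-conf.yaml for one node. Arguments: YAML_FILE KEYTAB HOST
set_flink_kerberos_conf() {
  local yaml="$1" keytab="$2" host="$3"
  touch "$yaml"
  # Drop any existing security.kerberos.login.* lines, keep everything else
  grep -v '^security\.kerberos\.login\.' "$yaml" > "${yaml}.tmp" || true
  cat >> "${yaml}.tmp" <<EOF
security.kerberos.login.use-ticket-cache: true
security.kerberos.login.keytab: ${keytab}
security.kerberos.login.principal: flink/${host}
EOF
  mv "${yaml}.tmp" "$yaml"
}

# Usage on each node (assumes the short host name equals the principal's host part):
#   set_flink_kerberos_conf /usr/local/flink/conf/flink-conf.yaml \
#       /usr/local/flink/flink.keytab "$(hostname -s)"
```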

4. Start a YARN session as the flink user. If the output contains a line like the following, the configuration is complete:

```
[flink@cluster2-host1 bin]$ ./yarn-session.sh -n 2 -s 6 -jm 1024 -tm 1024 -nm flink5 -d
2020-03-05 02:42:23,706 INFO  org.apache.hadoop.security.UserGroupInformation - Login successful for user flink/cluster2-host1 using keytab file /usr/local/flink/flink.keytab
```

Check the web UI.

Check the processes:

```
[root@cluster2-host1 sbin]# jps
6118 ResourceManager
15975 NameNode
22472 -- process information unavailable
6779 NodeManager
23483 YarnSessionClusterEntrypoint
24717 Master
9790 QuorumPeerMain
25534 Jps
20239 Secur
```

5. The Kerberos configuration for Flink is complete.
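The two checks in this step, the "Login successful" line and the YarnSessionClusterEntrypoint process, can be scripted. The sketch below assumes bash; both function names and the output file name are hypothetical. Note that the entrypoint runs inside a YARN container, so `jps` only lists it on the node YARN placed it on (cluster2-host1 in the output above).

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers) for checking the Kerberos-enabled YARN session.

# Reads yarn-session.sh output on stdin; succeeds if it contains a
# successful keytab login for a flink/* principal.
kerberos_login_ok() {
  grep -q 'UserGroupInformation.*Login successful for user flink/'
}

# Reads jps output on stdin; succeeds if a YARN session entrypoint is listed.
yarn_session_running() {
  grep -q 'YarnSessionClusterEntrypoint'
}

# Usage (as the flink user; yarn-session.out is a hypothetical file name):
#   ./yarn-session.sh -n 2 -s 6 -jm 1024 -tm 1024 -nm flink5 -d 2>&1 | tee yarn-session.out
#   kerberos_login_ok < yarn-session.out && jps | yarn_session_running && echo "session OK"
```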
