This post in the big data security series covers configuring Kerberos authentication for Hive. The other posts in the series are:

https://www.cnblogs.com/bainianminguo/p/12548076.html ----------- Installing Kerberos

https://www.cnblogs.com/bainianminguo/p/12548334.html ----------- Kerberos authentication for Hadoop

https://www.cnblogs.com/bainianminguo/p/12548175.html ----------- Kerberos authentication for ZooKeeper

https://www.cnblogs.com/bainianminguo/p/12584732.html ----------- Kerberos authentication for Hive

https://www.cnblogs.com/bainianminguo/p/12584880.html ----------- Search Guard authentication for ES

https://www.cnblogs.com/bainianminguo/p/12639821.html ----------- Kerberos authentication for Flink

https://www.cnblogs.com/bainianminguo/p/12639887.html ----------- Kerberos authentication for Spark

I. Install MySQL

1. Uninstall MariaDB

    [root@cluster2-host1 yum.repos.d]# rpm -qa|grep mariadb
    mariadb-libs-5.5.44-2.el7.centos.x86_64
    [root@cluster2-host1 yum.repos.d]# rpm -e --nodeps mariadb-libs-5.5.44-2.el7.centos.x86_64
    [root@cluster2-host1 yum.repos.d]# rpm -qa|grep mariadb

2. Create the mysql user

    [root@cluster2-host1 yum.repos.d]# groupadd mysql
    [root@cluster2-host1 yum.repos.d]# useradd mysql -g mysql

3. Download the MySQL installation bundle

    https://cdn.mysql.com//Downloads/MySQL-5.7/mysql-5.7.29-1.el7.x86_64.rpm-bundle.tar

4. Extract the bundle

    [root@cluster2-host1 data]# tar -xvf mysql-5.7.29-1.el7.x86_64.rpm-bundle.tar -C /usr/local/mysql/
    [root@cluster2-host1 mysql]# ll
    total 533048
    -rw-r--r--. 1 7155 31415  27768112 Dec 19 03:12 mysql-community-client-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415    318972 Dec 19 03:12 mysql-community-common-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415   4085448 Dec 19 03:12 mysql-community-devel-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415  47521016 Dec 19 03:12 mysql-community-embedded-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415  23354680 Dec 19 03:12 mysql-community-embedded-compat-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415 131015588 Dec 19 03:12 mysql-community-embedded-devel-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415   2596180 Dec 19 03:12 mysql-community-libs-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415   1353080 Dec 19 03:12 mysql-community-libs-compat-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415 183618644 Dec 19 03:12 mysql-community-server-5.7.29-1.el7.x86_64.rpm
    -rw-r--r--. 1 7155 31415 124193252 Dec 19 03:12 mysql-community-test-5.7.29-1.el7.x86_64.rpm

5. Install MySQL from the RPM packages

    [root@cluster2-host1 mysql]# rpm -ivh mysql-community-common-5.7.29-1.el7.x86_64.rpm
    warning: mysql-community-common-5.7.29-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:mysql-community-common-5.7.29-1.e################################# [100%]
    [root@cluster2-host1 mysql]# rpm -ivh mysql-community-libs-5.7.29-1.el7.x86_64.rpm
    warning: mysql-community-libs-5.7.29-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:mysql-community-libs-5.7.29-1.el7################################# [100%]
    [root@cluster2-host1 mysql]# rpm -ivh mysql-community-client-5.7.29-1.el7.x86_64.rpm
    warning: mysql-community-client-5.7.29-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:mysql-community-client-5.7.29-1.e################################# [100%]
    [root@cluster2-host1 mysql]# rpm -ivh mysql-community-server-5.7.29-1.el7.x86_64.rpm
    warning: mysql-community-server-5.7.29-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY
    error: Failed dependencies:
            net-tools is needed by mysql-community-server-5.7.29-1.el7.x86_64

The server package depends on net-tools, so install that first and then retry the server package:

    [root@cluster2-host1 mysql]# yum install net-tools -y
    [root@cluster2-host1 mysql]# rpm -ivh mysql-community-server-5.7.29-1.el7.x86_64.rpm
    warning: mysql-community-server-5.7.29-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:mysql-community-server-5.7.29-1.e################################# [100%]

6. Start MySQL

    [root@cluster2-host1 mysql]# service mysqld start

7. Look up the temporary MySQL password

    [root@cluster2-host1 mysql]# grep "A temporary password" /var/log/mysqld.log
    2020-03-02T07:59:38.098144Z 1 [Note] A temporary password is generated for root@localhost: ln/Ot4j-j#hQ
    [root@cluster2-host1 mysql]#
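If you want to pick the password out of the log without copying it by hand, something like the following bash sketch may help. The function name `extract_temp_password` is made up for this example, and it assumes the log line has the same shape as the one shown above.

```shell
# Hypothetical helper (not part of the original steps): print the most recent
# temporary root password recorded in a mysqld log file.
extract_temp_password() {
    local log_file="${1:-/var/log/mysqld.log}"
    # Keep only the last matching line, then strip everything up to and
    # including "root@localhost: " so that only the password remains.
    grep 'A temporary password' "$log_file" \
        | tail -n 1 \
        | sed 's/.*root@localhost: //'
}
```

Called with no argument it reads /var/log/mysqld.log. Keep in mind that it prints the password to the terminal, so it is only suitable for a first-time setup like this one.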

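8. Change the temporary MySQL password

In MySQL 5.7 the password length variable is spelled with an underscore (validate_password_length).

    set global validate_password_policy=0;
    set global validate_password_length=1;
    alter user user() identified by "123456";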

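9. Allow remote access to MySQL

Because this edits the grant table directly, run flush privileges afterwards so the change takes effect.

    [root@cluster2-host1 conf]# mysql -u root -p
    mysql> update mysql.user set host = '%' where user = 'root';
    mysql> flush privileges;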

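10. Confirm from another node that MySQL can be reached remotely. Note that the port is given with an uppercase -P; a lowercase -p3306 would be read as the password.

    mysql -h 10.87.18.34 -P 3306 -uroot -p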

II. Install Hive

1. Extract Hive and rename the installation directory

    tar -zxvf apache-hive-1.2.0-bin.tar.gz -C /usr/local/
    cd /usr/local/
    ll
    mv apache-hive-1.2.0-bin/ hive

2. Create the Hive env file from its template

    [root@cluster2-host1 conf]# pwd
    /usr/local/hive/conf
    [root@cluster2-host1 conf]# cp hive-env.sh.template hive-env.sh

3. Edit hive-env.sh

    export HIVE_HOME=/usr/local/hive
    export HADOOP_HOME=/usr/local/hadoop
    # Hive Configuration Directory can be controlled by:
    export HIVE_CONF_DIR=/usr/local/hive/conf
    export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop
    export PATH=${HIVE_HOME}/bin:$PATH:$HOME/bin:

4. Edit the Hive configuration file

vim hive-default.xml

Hive normally reads site-specific overrides from hive-site.xml in the conf directory, so if the settings below do not seem to take effect, put them in that file.

    <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>123456</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://10.87.18.34:3306/hive?</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
    </property>
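Typing these property blocks by hand is where stray text tends to sneak in, so here is a small bash sketch that prints the same four metastore settings. The function names `hive_property` and `metastore_site_xml` are invented for this example, and it assumes the values contain no characters that need XML escaping (such as & or <).

```shell
# Hypothetical helper: print one Hadoop-style <property> element.
hive_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical helper: print a minimal metastore configuration for the
# given MySQL host, user and password (port 3306 and database "hive"
# are taken from the steps above).
metastore_site_xml() {
    local host="$1" user="$2" password="$3"
    echo '<configuration>'
    hive_property javax.jdo.option.ConnectionURL "jdbc:mysql://${host}:3306/hive"
    hive_property javax.jdo.option.ConnectionDriverName com.mysql.jdbc.Driver
    hive_property javax.jdo.option.ConnectionUserName "$user"
    hive_property javax.jdo.option.ConnectionPassword "$password"
    echo '</configuration>'
}
```

For example, `metastore_site_xml 10.87.18.34 root 123456` prints a fragment you can review and then merge into your own configuration file.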

5. Install the MySQL driver for Hive

Copy the driver into the hive/lib directory:

    cd mysql-connector-java-5.1.48
    ll
    cp mysql-connector-java-5.1.48-bin.jar /usr/local/hive/lib/

6. Create the hive database in MySQL

    mysql> create database hive;
    Query OK, 1 row affected (0.00 sec)

    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | hive               |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    5 rows in set (0.00 sec)

7. Copy the MySQL connector driver to the other Hive nodes

    [root@cluster2-host1 lib]# scp mysql-connector-java-5.1.48-bin.jar root@cluster2-host2:/usr/local/hive/lib/
    mysql-connector-java-5.1.48-bin.jar 100% 983KB 983.4KB/s 00:00
    [root@cluster2-host1 lib]# scp mysql-connector-java-5.1.48-bin.jar root@cluster2-host3:/usr/local/hive/lib/
    mysql-connector-java-5.1.48-bin.jar

III. Configure Kerberos for Hive

1. Create the principal and its keytab file

    kadmin.local: addprinc hive/cluster2-host1
    kadmin.local: ktadd -norandkey -k /etc/security/keytab/hive.keytab hive/cluster2-host1
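If you need to repeat this for several hosts, it can be handy to generate the two kadmin commands rather than type them at the prompt. The bash sketch below only prints the commands (a dry run); the function name `keytab_commands` is made up here, and it assumes you run the printed lines on the KDC host, where `kadmin.local -q` accepts a single query.

```shell
# Hypothetical helper: print the kadmin.local commands that create the
# hive/<host> principal and export its key into a keytab, without running them.
keytab_commands() {
    local host="$1" keytab="${2:-/etc/security/keytab/hive.keytab}"
    printf 'kadmin.local -q "addprinc hive/%s"\n' "$host"
    printf 'kadmin.local -q "ktadd -norandkey -k %s hive/%s"\n' "$keytab" "$host"
}
```

Running `keytab_commands cluster2-host1` prints the same two operations as above in non-interactive form. After executing them, `klist -kt /etc/security/keytab/hive.keytab` should list the new principal.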

2. Copy the keytab file into the Hive configuration directory

    scp /etc/security/keytab/hive.keytab /usr/local/hive/conf/

3. Edit the Hive configuration file

    <property>
        <name>hive.server2.enable.doAs</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.server2.authentication</name>
        <value>KERBEROS</value>
    </property>
    <property>
        <name>hive.server2.authentication.kerberos.principal</name>
        <value>hive/cluster2-host1@HADOOP.COM</value>
    </property>
    <property>
        <name>hive.server2.authentication.kerberos.keytab</name>
        <value>/usr/local/hive/conf/hive.keytab</value>
    </property>
    <property>
        <name>hive.server2.authentication.spnego.keytab</name>
        <value>/usr/local/hive/conf/hive.keytab</value>
    </property>
    <property>
        <name>hive.server2.authentication.spnego.principal</name>
        <value>hive/cluster2-host1@HADOOP.COM</value>
    </property>
    <property>
        <name>hive.metastore.sasl.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.metastore.kerberos.keytab.file</name>
        <value>/usr/local/hive/conf/hive.keytab</value>
    </property>
    <property>
        <name>hive.metastore.kerberos.principal</name>
        <value>hive/cluster2-host1@HADOOP.COM</value>
    </property>
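With this many properties it is easy to leave one out. As a rough sanity check, the bash sketch below reports which of the core Kerberos property names are absent from a configuration file. The function name `missing_kerberos_props` and the choice of which properties count as required are assumptions made for this example; it only looks for the `<name>` elements and does not validate the values.

```shell
# Property names taken from the configuration step above (the SPNEGO pair
# and doAs are left out here on the assumption that they are optional).
REQUIRED_KERBEROS_PROPS="
hive.server2.authentication
hive.server2.authentication.kerberos.principal
hive.server2.authentication.kerberos.keytab
hive.metastore.sasl.enabled
hive.metastore.kerberos.keytab.file
hive.metastore.kerberos.principal
"

# Hypothetical helper: print every required property name that does not
# appear in the given file; return non-zero if at least one is missing.
missing_kerberos_props() {
    local site_file="$1" missing=0 prop
    for prop in $REQUIRED_KERBEROS_PROPS; do
        # Match the full <name>...</name> element so that a longer property
        # name cannot satisfy a shorter one.
        if ! grep -qF "<name>${prop}</name>" "$site_file"; then
            echo "$prop"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `missing_kerberos_props /usr/local/hive/conf/hive-site.xml` prints nothing and returns 0 when all six names are present in that file.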

4. Edit Hadoop's core-site.xml configuration file

    <property>
        <name>hadoop.proxyuser.hive.users</name>
        <value>*</value>
    </property>
    <property>
        <name>hadoop.proxyuser.hive.hosts</name>
        <value>*</value>
    </property>

5. Start Hive

    [root@cluster2-host1 hive]# nohup ./bin/hive --service metastore > metastore.log 2>&1 &
    [1] 5637
    [root@cluster2-host1 hive]# nohup ./bin/hiveserver2 > hive.log 2>&1 &
    [2] 7361

6. Connect to Hive with beeline

    [root@cluster2-host1 hive]# ./bin/beeline -u "jdbc:hive2://cluster2-host1:10000/default;principal=hive/cluster2-host1@HADOOP.COM"
    ls: cannot access /usr/local/spark/lib/spark-assembly-*.jar: No such file or directory
    Connecting to jdbc:hive2://cluster2-host1:10000/default;principal=hive/cluster2-host1@HADOOP.COM
    Connected to: Apache Hive (version 1.2.0)
    Driver: Hive JDBC (version 1.2.0)
    Transaction isolation: TRANSACTION_REPEATABLE_READ
    Beeline version 1.2.0 by Apache Hive
    0: jdbc:hive2://cluster2-host1:10000/default>
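The JDBC URL repeats the host name inside the principal, which is easy to get out of sync. The bash sketch below assembles the URL from its parts; the function name `beeline_url` is made up for this example, and the defaults (port 10000, database default, service name hive) simply mirror the values used in this post.

```shell
# Hypothetical helper: build the Kerberos-enabled HiveServer2 JDBC URL
# for a given host and realm.
beeline_url() {
    local host="$1" realm="$2" port="${3:-10000}" db="${4:-default}"
    # The principal in the URL is the HiveServer2 service principal,
    # not the principal of the user who is connecting.
    printf 'jdbc:hive2://%s:%s/%s;principal=hive/%s@%s\n' \
        "$host" "$port" "$db" "$host" "$realm"
}
```

Usage would look like `./bin/beeline -u "$(beeline_url cluster2-host1 HADOOP.COM)"`. Beeline authenticates with the caller's Kerberos ticket cache, so a `kinit` (for instance `kinit -kt /usr/local/hive/conf/hive.keytab hive/cluster2-host1@HADOOP.COM`) is normally needed first if no valid ticket exists.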

7. After logging in, create a Hive database and table

    create database myhive;
    CREATE TABLE student(id int, name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' ' LINES TERMINATED BY '\n' STORED AS TEXTFILE;

List the tables that were created:

    0: jdbc:hive2://cluster2-host1:10000/default> show tables;
    +------------+--+
    | tab_name   |
    +------------+--+
    | student    |
    | test1      |
    | test2      |
    | test3      |
    | test4      |
    | test_user  |
    +------------+--+

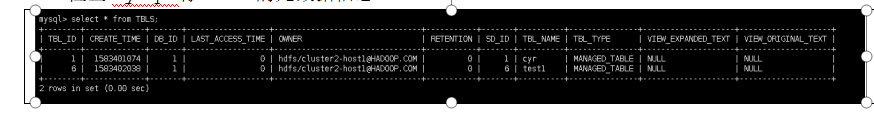

8. Check that MySQL now holds the Hive metadata

9. Prepare the local data

    2014001 小王1
    2014002 小李2
    2014003 小明3
    2014004 阿狗4
    2014005 姚明5
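Hive does not reject malformed rows at load time; fields that do not parse simply come back as NULL when queried. A quick check of the file beforehand can therefore save some confusion. The bash sketch below assumes a two-column layout like `student(id int, name string)` with whitespace-separated fields; the function name `check_rows` is invented for this example, and the expected column count can be overridden with a second argument.

```shell
# Hypothetical helper: print "ok" if every non-empty line of the file has the
# expected number of whitespace-separated fields (default 2) and a numeric
# first field; otherwise print the number of the first offending line.
check_rows() {
    awk -v n="${2:-2}" '
        NF == 0 { next }                                  # skip blank lines
        NF != n || $1 !~ /^[0-9]+$/ { print "bad line " NR; bad = 1; exit }
        END { if (!bad) print "ok" }
    ' "$1"
}
```

For example, `check_rows /data/hive.txt` is expected to print `ok` for a file laid out like the sample rows above.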

10. Load the data

    load data local inpath '/data/hive.txt' into table test1;

11. View the data on HDFS

    [root@cluster2-host1 data]# hdfs dfs -ls /user/hive/warehouse/test1
    Found 1 items
    -rwxr-xr-x   2 hdfs supergroup        112 2020-03-05 04:55 /user/hive/warehouse/test1/hive.txt
