
Introduction

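The apps directory of the TVM repository contains a howto_deploy project. With a few modifications it becomes a C++ program that runs inference on a compiled model.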

Modifying cpp_deploy.cc

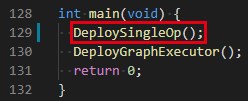

The DeploySingleOp() function is not needed. Delete it together with the related Verify function (and the DeploySingleOp() call in main()).

Modifying DeployGraphExecutor()

Load the model and get the functions needed later

```cpp
LOG(INFO) << "Running graph executor...";
printf("load in the library\n");
// Host CPU; device_id 0 is the conventional id for the CPU
DLDevice dev{kDLCPU, 0};
tvm::runtime::Module mod_factory = tvm::runtime::Module::LoadFromFile("../model_autotune.so");

printf("create the graph executor module\n");
// "default" creates a graph executor for `dev` from the compiled library
tvm::runtime::Module gmod = mod_factory.GetFunction("default")(dev);
printf(" default\n");
tvm::runtime::PackedFunc set_input = gmod.GetFunction("set_input");
printf(" set_input\n");
tvm::runtime::PackedFunc get_output = gmod.GetFunction("get_output");
printf(" get_output\n");
tvm::runtime::PackedFunc run = gmod.GetFunction("run");
printf(" run\n");
```

Define the input and output tensors

```cpp
printf("Use the C++ API\n");
tvm::runtime::NDArray input = tvm::runtime::NDArray::Empty({1, 1, 640}, DLDataType{kDLFloat, 32, 1}, dev);
tvm::runtime::NDArray input_state = tvm::runtime::NDArray::Empty({1, 2, 128, 2}, DLDataType{kDLFloat, 32, 1}, dev);
tvm::runtime::NDArray output = tvm::runtime::NDArray::Empty({1, 1, 640}, DLDataType{kDLFloat, 32, 1}, dev);
tvm::runtime::NDArray output_state = tvm::runtime::NDArray::Empty({1, 2, 128, 2}, DLDataType{kDLFloat, 32, 1}, dev);
```
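Every fread/fwrite in this program moves raw bytes, so it helps to spell out how many bytes each NDArray holds: the number of elements times `bits * lanes / 8` (rounded up) of its DLDataType. A minimal sketch, assuming a hypothetical `DType` struct that mirrors the `code`, `bits` and `lanes` fields of `DLDataType` so that it compiles without the TVM headers:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for DLDataType {code, bits, lanes}.
// In DLPack, code 2 is kDLFloat.
struct DType {
  uint8_t code;
  uint8_t bits;
  uint16_t lanes;
};

// Number of elements in a tensor of the given shape.
int64_t NumElements(const std::vector<int64_t>& shape) {
  int64_t n = 1;
  for (int64_t d : shape) n *= d;
  return n;
}

// Bytes occupied by the tensor data, rounding each element up to whole bytes.
size_t NumBytes(const std::vector<int64_t>& shape, DType t) {
  size_t bytes_per_elem = (static_cast<size_t>(t.bits) * t.lanes + 7) / 8;
  return static_cast<size_t>(NumElements(shape)) * bytes_per_elem;
}
```

For the tensors above, `{1, 1, 640}` float32 is 640 elements or 2560 bytes, and `{1, 2, 128, 2}` is 512 elements or 2048 bytes; the 4 bytes per float is the factor that appears in the file I/O.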

Read the inputs from bin files

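```cpp
// input: 1 x 1 x 640 float32 values, read straight into the NDArray buffer.
// fread(ptr, element size, element count, file) returns the count actually read.
const size_t input_size = 1 * 1 * 640;
FILE* fp = fopen("../input.bin", "rb");
ICHECK(fp != nullptr) << "cannot open ../input.bin";
size_t n_input = fread(input->data, sizeof(float), input_size, fp);
fclose(fp);
ICHECK_EQ(n_input, input_size) << "../input.bin is too short";

// input_state: 1 x 2 x 128 x 2 float32 values
const size_t state_size = 1 * 2 * 128 * 2;
FILE* fp_state = fopen("../input_state.bin", "rb");
ICHECK(fp_state != nullptr) << "cannot open ../input_state.bin";
size_t n_state = fread(input_state->data, sizeof(float), state_size, fp_state);
fclose(fp_state);
ICHECK_EQ(n_state, state_size) << "../input_state.bin is too short";
```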
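fread only reports a file that is too short; a file that is too long (for example one exported with a different shape or dtype) is read without complaint. A minimal sketch of a hypothetical `ReadFloatBin` helper that rejects both cases, assuming the file holds nothing but raw float32 values:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Hypothetical helper: read exactly `count` floats from `path` into `dst`.
// Returns false if the file cannot be opened or does not hold exactly
// `count` floats.
bool ReadFloatBin(const char* path, float* dst, size_t count) {
  FILE* fp = std::fopen(path, "rb");
  if (fp == nullptr) return false;
  size_t n = std::fread(dst, sizeof(float), count, fp);
  // A well-formed file has no bytes left after the last element.
  bool at_end = (std::fgetc(fp) == EOF);
  std::fclose(fp);
  return n == count && at_end;
}
```

In DeployGraphExecutor() it could stand in for each fopen/fread pair, e.g. `ReadFloatBin("../input.bin", static_cast<float*>(input->data), 1 * 1 * 640)`, stopping the program when it returns false.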

Feed the data to the network

```cpp
printf("set the right input\n");
// The names must match the input names of the compiled model
set_input("input_4", input);
set_input("input_5", input_state);
```

Run inference

```cpp
// gettimeofday() needs #include <sys/time.h>
struct timeval t0, t1;
int times = 100000;  // 3394
gettimeofday(&t0, 0);
printf("run the code\n");
for (int i = 0; i < times; i++) {
  run();
}
gettimeofday(&t1, 0);
// Average time per run() call, in milliseconds
printf("%.5fms\n", ((t1.tv_sec - t0.tv_sec) * 1000 + (t1.tv_usec - t0.tv_usec) / 1000.f) / times);
```
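The printed number is the average latency of one run() call: the seconds difference times 1000 plus the microseconds difference divided by 1000, all divided by the iteration count. The microseconds difference may be negative when the seconds field has rolled over, which is fine because the two terms are added. A small sketch that factors the formula into a hypothetical `AverageMs` function and checks it on made-up timestamps:

```cpp
#include <cassert>
#include <sys/time.h>

// Average milliseconds per iteration between two gettimeofday() samples.
double AverageMs(const timeval& t0, const timeval& t1, int times) {
  double total_ms = (t1.tv_sec - t0.tv_sec) * 1000.0 +
                    (t1.tv_usec - t0.tv_usec) / 1000.0;
  return total_ms / times;
}
```

For t0 = 1 s + 900000 µs and t1 = 3 s + 100000 µs, the total is 2000 ms - 800 ms = 1200 ms. gettimeofday follows wall-clock time; std::chrono::steady_clock is a monotonic alternative if the system clock might be adjusted during a long benchmark.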

Get the outputs

```cpp
printf("get the output\n");
get_output(0, output);
printf(" 0\n");
get_output(1, output_state);
printf(" 1\n");
```

Save the outputs to bin files

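```cpp
// Raw float32 values: element size first, then element count
FILE* fp_out = fopen("output.bin", "wb");
ICHECK(fp_out != nullptr) << "cannot create output.bin";
fwrite(output->data, sizeof(float), 1 * 1 * 640, fp_out);
fclose(fp_out);

FILE* fp_out_state = fopen("output_state.bin", "wb");
ICHECK(fp_out_state != nullptr) << "cannot create output_state.bin";
fwrite(output_state->data, sizeof(float), 1 * 2 * 128 * 2, fp_out_state);
fclose(fp_out_state);
```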
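fwrite also returns how many elements it wrote, and fclose reports errors from flushing buffered data, so a short write (full disk, unwritable directory) can be detected. A minimal sketch of a hypothetical `WriteFloatBin` helper that produces the same headerless float32 layout np.fromfile reads:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Hypothetical helper: write `count` floats from `src` to `path` as raw bytes.
// Returns true only if every element was written and the file closed cleanly.
bool WriteFloatBin(const char* path, const float* src, size_t count) {
  FILE* fp = std::fopen(path, "wb");
  if (fp == nullptr) return false;
  size_t n = std::fwrite(src, sizeof(float), count, fp);
  // fclose flushes buffered data, so its result matters too.
  bool closed = (std::fclose(fp) == 0);
  return n == count && closed;
}
```

Usage in the program would look like `WriteFloatBin("output.bin", static_cast<const float*>(output->data), 1 * 1 * 640)`.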

Converting between numpy arrays and bin files

numpy to bin

```python
import os

import numpy as np

input_1 = np.load("./input.npy")
input_2 = np.load("./input_states.npy")

build_dir = "./"
# Raw float32 bytes with no header: the layout fread() in cpp_deploy.cc expects
with open(os.path.join(build_dir, "input.bin"), "wb") as fp:
    fp.write(input_1.astype(np.float32).tobytes())
with open(os.path.join(build_dir, "input_state.bin"), "wb") as fp:
    fp.write(input_2.astype(np.float32).tobytes())
```

bin to numpy

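```python
import numpy as np

# output.bin / output_state.bin are written to build/ by cpp_deploy_norm.
# np.fromfile returns a flat array; reshape to the model's output shapes.
output = np.fromfile("./output.bin", dtype=np.float32).reshape(1, 1, 640)
output_state = np.fromfile("./output_state.bin", dtype=np.float32).reshape(1, 2, 128, 2)
```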
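The .bin files carry no shape information, so recovering a tensor relies on both sides using row-major (C-order) layout, which is what `tobytes()` produces by default and what the NDArray buffers use. Element `[n, c, h, w]` of a `(N, C, H, W)` tensor sits at flat index `((n*C + c)*H + h)*W + w`. A sketch of that mapping in C++ (`FlatIndex` is our own name):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major (C-order) flat offset of a multi-index into a tensor of `shape`.
// This is the element's position in the raw .bin file, counted in floats.
size_t FlatIndex(const std::vector<size_t>& shape, const std::vector<size_t>& idx) {
  assert(shape.size() == idx.size());
  size_t offset = 0;
  for (size_t i = 0; i < shape.size(); ++i) {
    assert(idx[i] < shape[i]);
    offset = offset * shape[i] + idx[i];
  }
  return offset;
}
```

So `output_state[0, 1, 5, 1]` in numpy corresponds to float number 267 of output_state.bin, i.e. `static_cast<float*>(output_state->data)[267]` on the C++ side.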

Building with CMakeLists.txt

Create a CMakeLists.txt in the howto_deploy directory:

```cmake
cmake_minimum_required(VERSION 3.2)
project(how2deploy C CXX)

# Recent TVM releases require C++17
set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)

SET(CMAKE_CXX_FLAGS_DEBUG "$ENV{CXXFLAGS} -O3 -Wall -g2 -ggdb")
SET(CMAKE_CXX_FLAGS_RELEASE "$ENV{CXXFLAGS} -O3 -Wall -fPIC")

# Point this at your TVM checkout
set(TVM_ROOT /path/to/tvm)
set(DMLC_CORE ${TVM_ROOT}/3rdparty/dmlc-core)

include_directories(${TVM_ROOT}/include)
include_directories(${DMLC_CORE}/include)
include_directories(${TVM_ROOT}/3rdparty/dlpack/include)
link_directories(${TVM_ROOT}/build/Release)

# Make dmlc-core use TVM's logging implementation
add_definitions(-DDMLC_USE_LOGGING_LIBRARY=<tvm/runtime/logging.h>)

add_executable(cpp_deploy_norm cpp_deploy.cc)
target_link_libraries(cpp_deploy_norm ${TVM_ROOT}/build/libtvm_runtime.so)
```

The usual four commands:

```shell
mkdir build
cd build
cmake ..
make
```

Run

```shell
cd build
./cpp_deploy_norm
```
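cpp_deploy_norm opens ../model_autotune.so, ../input.bin and ../input_state.bin relative to the current working directory, which is why it has to be started from build/. If it needs to run from elsewhere, one option is to take the data directory as a command-line argument and join paths with std::filesystem (C++17). A minimal sketch; the `DataPath` helper and the argv convention are hypothetical:

```cpp
#include <cassert>
#include <filesystem>
#include <string>

// Hypothetical helper: build "<base_dir>/<file>" with the platform separator.
std::string DataPath(const std::string& base_dir, const std::string& file) {
  return (std::filesystem::path(base_dir) / file).string();
}
```

In main(), something like `std::string dir = argc > 1 ? argv[1] : "..";` could then feed `LoadFromFile(DataPath(dir, "model_autotune.so"))` and `fopen(DataPath(dir, "input.bin").c_str(), "rb")`, keeping `..` as the default so running from build/ still works.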
