Preparing the ELK deployment environment

| OS | IP address | Hostname | Memory | Notes |
| --- | --- | --- | --- | --- |
| CentOS 7.5 x86_64 | 192.168.200.111 | elk-node1 | 2 GB | |
| CentOS 7.5 x86_64 | 192.168.200.112 | elk-node2 | 2 GB | |
| CentOS 7.5 x86_64 | 192.168.200.113 | apache | 1 GB | |

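Several Elasticsearch nodes are created so that multiple replicas of the data can be stored; a production environment may have more nodes. In this example, Elasticsearch and Kibana are deployed together on node1. A distributed deployment is also possible, with Logstash, Elasticsearch, and Kibana each running on a separate server.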

Disable the firewall and SELinux on all hosts, and configure name resolution on the ELK nodes.

systemctl stop firewalld

iptables -F

setenforce 0

vim /etc/hosts

192.168.200.111 elk-node1

192.168.200.112 elk-node2
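When the same entries have to be added on several machines, editing /etc/hosts by hand in vim is easy to get wrong. The following is a minimal sketch of an idempotent alternative; `add_host_entry` is a hypothetical helper name introduced here for illustration, not part of any tool.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file only if NAME is not already present.
# add_host_entry is a hypothetical helper, not a standard command.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -F: treat the name as a fixed string, -w: match it as a whole word,
  # -q: print nothing, only set the exit status
  if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage (as root), with the entries from this walkthrough:
#   add_host_entry /etc/hosts 192.168.200.111 elk-node1
#   add_host_entry /etc/hosts 192.168.200.112 elk-node2
```

Running the helper twice leaves a single line per host name, so it is safe to re-run on a node that was already prepared.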

Elasticsearch needs a Java runtime, so Java must be installed on the Elasticsearch servers.

[root@elk-node2 ~]# which java

/usr/bin/java

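Installing Elasticsearch

Official download page:

https://www.elastic.co/cn/downloads/past-releases#elasticsearch

Elasticsearch can be installed through YUM or from a source package. Here it is installed from a downloaded RPM package; in production, choose whichever installation method suits your environment. Do this on both nodes.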

[root@elk-node1 ~]# rpm -ivh elasticsearch-5.5.0.rpm

warning: elasticsearch-5.5.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Updating / installing...

1:elasticsearch-0:5.5.0-1 ################################# [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

Run the following commands to register Elasticsearch as a system service and enable it at boot. Do this on both nodes.

[root@elk-node1 ~]# systemctl daemon-reload

[root@elk-node1 ~]# systemctl enable elasticsearch.service

Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

Edit the main Elasticsearch configuration file on both nodes. The file is YAML, so every colon must be followed by a space.

[root@elk-node1 ~]# vim /etc/elasticsearch/elasticsearch.yml

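cluster.name: my-elk-cluster # cluster name; must be identical on node1 and node2

node.name: elk-node1 # node name (the host name; use elk-node2 on the second node)

path.data: /data/elk_data # data directory

path.logs: /var/log/elasticsearch # log directory

bootstrap.memory_lock: false # do not lock memory at startup

network.host: 0.0.0.0 # address the service binds to; 0.0.0.0 means all addresses

http.port: 9200 # HTTP listening port

discovery.zen.ping.unicast.hosts: ["elk-node1","elk-node2"] # hosts of the cluster members

http.cors.enabled: true # appended at the end of the file; enables cross-origin requests (CORS)

http.cors.allow-origin: "*" # origins allowed to make cross-origin requests

Note: node1 differs from node2 here. The two http.cors lines only need to be added on the first node.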
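Because the two nodes must agree on cluster.name and the unicast host list, it can help to print only the settings that are actually active, without the commented defaults that fill the stock file. A minimal sketch follows; `active_settings` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the non-comment, non-blank lines of a configuration file.
# active_settings is a hypothetical helper, not part of Elasticsearch.
active_settings() {
  # Drop lines that are empty or whose first non-space character is '#'
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}

# Usage on each node:
#   active_settings /etc/elasticsearch/elasticsearch.yml
```

Running it on both nodes and comparing the output side by side should show the same values except for node.name and, in this walkthrough, the two http.cors lines on node1.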

Create the data directory and set its ownership. Do this on both nodes.

[root@elk-node1 ~]# mkdir -p /data/elk_data

[root@elk-node1 ~]# chown elasticsearch:elasticsearch /data/elk_data/

Start Elasticsearch and check that it is listening. Do this on both nodes.

[root@elk-node1 ~]# systemctl start elasticsearch.service

[root@elk-node1 ~]# netstat -lnpt | grep 9200

tcp6 0 0 :::9200 :::* LISTEN 2296/java

By default, Elasticsearch serves HTTP clients on port 9200, and the nodes communicate with each other over TCP port 9300.

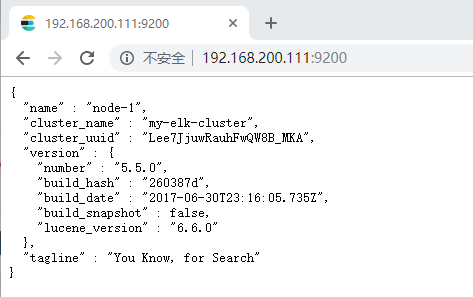

Open the node's address in a browser to see the node information.

[root@elk-node1 ~]# curl http://192.168.200.111:9200

{

"name" : "node-1",

"cluster_name" : "my-elk-cluster",

"cluster_uuid" : "Lee7JjuwRauhFwQW8B_MKA",

"version" : {

"number" : "5.5.0",

"build_hash" : "260387d",

"build_date" : "2017-06-30T23:16:05.735Z",

"build_snapshot" : false,

"lucene_version" : "6.6.0"

},

"tagline" : "You Know, for Search"

}

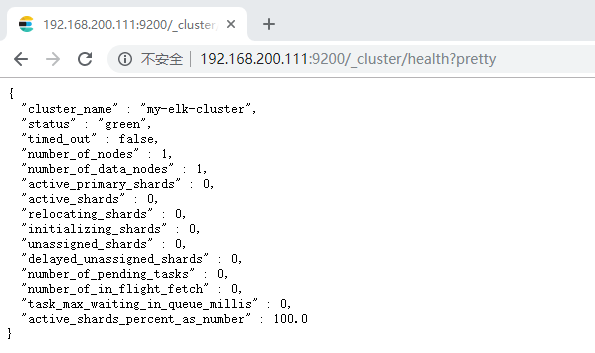

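Open http://192.168.200.111:9200/_cluster/health?pretty in a browser to check the health of the cluster.

Look at the status field: green means the cluster is healthy, yellow means some replica shards are unassigned, and red means some primary shards are unassigned.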

[root@elk-node1 ~]# curl http://192.168.200.111:9200/_cluster/health?pretty

{

"cluster_name" : "my-elk-cluster",

"status" : "green",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}
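The status check described above can also be scripted. The sketch below reads the health response from standard input and maps the status to its meaning; `health_status` and `describe_status` are hypothetical helper names, and the sed expression assumes the response has the shape shown in the output above.

```shell
#!/usr/bin/env bash
# Extract the value of the "status" field from a _cluster/health response
# read on stdin. Hypothetical helper; assumes the JSON shape shown above.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Translate a status value into a short explanation.
describe_status() {
  case "$1" in
    green)  echo "all primary and replica shards are assigned" ;;
    yellow) echo "all primary shards are assigned, some replicas are not" ;;
    red)    echo "some primary shards are not assigned" ;;
    *)      echo "unknown status: $1" ;;
  esac
}

# Usage:
#   status=$(curl -s 'http://192.168.200.111:9200/_cluster/health?pretty' | health_status)
#   echo "$status: $(describe_status "$status")"
```

Parsing JSON with sed is only reasonable for a quick check like this one; a JSON-aware tool would be the more robust choice if one is installed.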

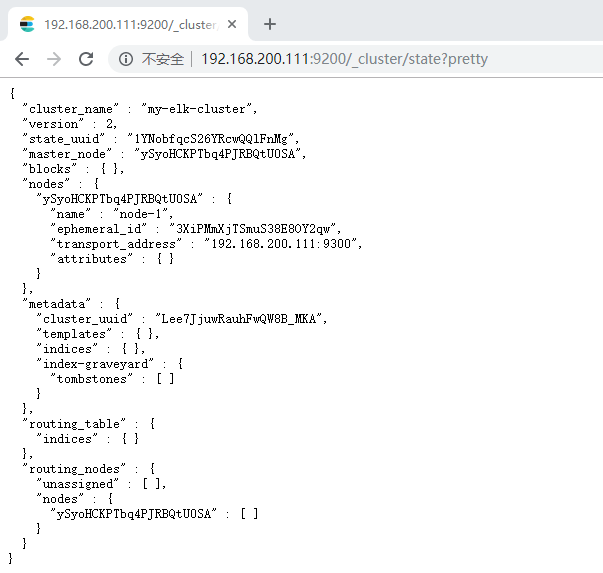

Open http://192.168.200.111:9200/_cluster/state?pretty in a browser to view the cluster state.

[root@elk-node1 ~]# curl http://192.168.200.111:9200/_cluster/state?pretty

{

"cluster_name" : "my-elk-cluster",

"version" : 2,

"state_uuid" : "1YNobfqcS26YRcwQQlFnMg",

"master_node" : "ySyoHCKPTbq4PJRBQtU0SA",

"blocks" : { },

"nodes" : {

"ySyoHCKPTbq4PJRBQtU0SA" : {

"name" : "node-1",

"ephemeral_id" : "3XiPMmXjTSmuS38E8OY2qw",

"transport_address" : "192.168.200.111:9300",

"attributes" : { }

}

},

"metadata" : {

"cluster_uuid" : "Lee7JjuwRauhFwQW8B_MKA",

"templates" : { },

"indices" : { },

"index-graveyard" : {

"tombstones" : [ ]

}

},

"routing_table" : {

"indices" : { }

},

"routing_nodes" : {

"unassigned" : [ ],

"nodes" : {

"ySyoHCKPTbq4PJRBQtU0SA" : [ ]

}

}

}

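Installing the elasticsearch-head plugin

Checking cluster information through commands or raw browser requests every time is impractical, which is where the elasticsearch-head plugin comes in.

Since Elasticsearch 5.0, elasticsearch-head has to be installed as a standalone service. It needs the npm command, which ships with Node.js (a JavaScript runtime built on Chrome's V8 engine).

Perform these steps on both nodes.

Installing Node.js

Official download page: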

https://nodejs.org/en/download/

[root@elk-node1 ~]# tar xf node-v8.2.1-linux-x64.tar.gz -C /usr/local/

[root@elk-node1 ~]# ln -s /usr/local/node-v8.2.1-linux-x64/bin/node /usr/bin/node

[root@elk-node1 ~]# ln -s /usr/local/node-v8.2.1-linux-x64/bin/npm /usr/local/bin/

[root@elk-node1 ~]# node -v

v8.2.1

[root@elk-node1 ~]# npm -v

5.3.0

Install elasticsearch-head as a standalone service and start it in the background

This starts a local web server on port 9100 that serves elasticsearch-head.

[root@elk-node1 ~]# tar xf elasticsearch-head.tar.gz -C /data/elk_data/

[root@elk-node1 ~]# cd /data/elk_data/

[root@elk-node1 elk_data]# chown -R elasticsearch:elasticsearch elasticsearch-head/

[root@elk-node1 elk_data]# cd /data/elk_data/elasticsearch-head/

[root@elk-node1 elasticsearch-head]# npm install

# Errors reported during this step are expected in this setup and can be ignored.

[root@elk-node1 elasticsearch-head]# cd _site/

[root@elk-node1 _site]# pwd

/data/elk_data/elasticsearch-head/_site

[root@elk-node1 _site]# cp app.js{,.bak}

[root@elk-node1 _site]# vim app.js

this.base_uri = this.config.base_uri || this.prefs.get("app-base_uri") || "http://192.168.200.111:9200"; // change to this node's own IP

[root@elk-node1 _site]# npm run start &

[root@elk-node1 _site]# systemctl start elasticsearch.service

[root@elk-node1 _site]# netstat -lnpt | grep 9100

tcp 0 0 0.0.0.0:9100 0.0.0.0:* LISTEN 1480/grunt

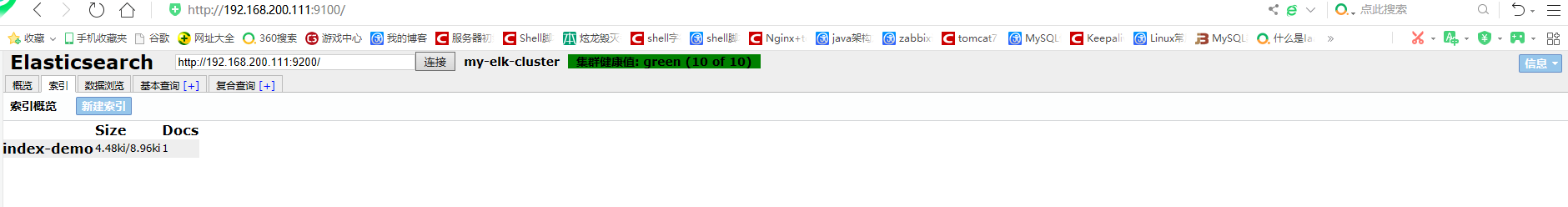

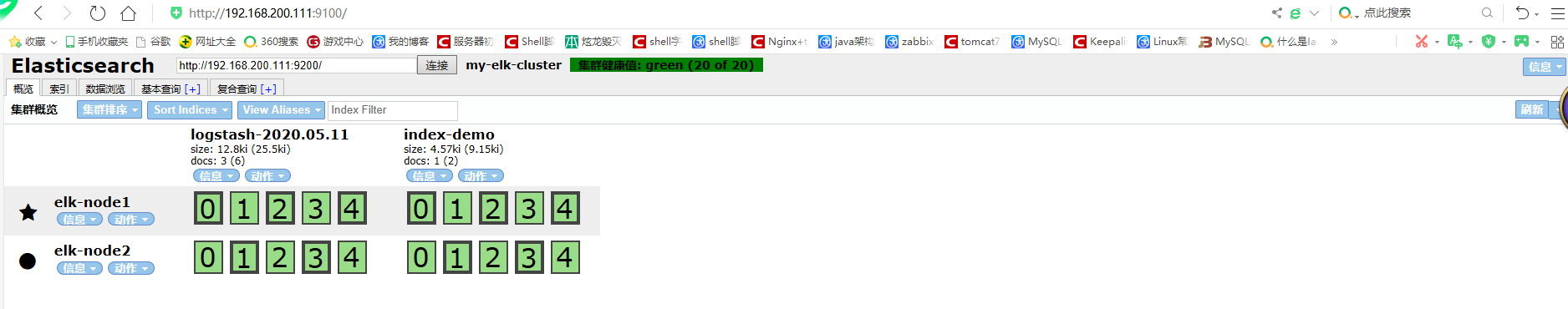

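Open http://192.168.200.111:9100 in a browser and connect to the cluster.

A test document can be inserted from the command line. The index is index-demo and the type is test; the response shows that it was created successfully.

[root@elk-node1 ~]# curl -XPUT 'localhost:9200/index-demo/test/1?pretty' -H 'Content-Type:application/json' -d '{"user":"zhangsan","mesg":"hello world"}'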

{

"_index" : "index-demo",

"_type" : "test",

"_id" : "1",

"_version" : 1,

"result" : "created",

"_shards" : {

"total" : 2,

"successful" : 2,

"failed" : 0

},

"created" : true

}

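Refresh the browser to see the newly created index.

Installing Logstash

Logstash is usually deployed on the servers whose logs need to be monitored. In this example, Logstash is deployed on the Apache server, where it collects the Apache logs and sends them to Elasticsearch. Logstash also needs a Java runtime, so check that Java is present on that machine as well.

Before the actual deployment, first install Logstash on node1 to get familiar with how it is used.

Install it on elk-node1: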

[root@elk-node1 ~]# rpm -ivh logstash-5.5.1.rpm

warning: logstash-5.5.1.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:5.5.1-1 ################################# [100%]

Using provided startup.options file: /etc/logstash/startup.options

Successfully created system startup script for Logstash

[root@elk-node1 ~]# systemctl start logstash.service

[root@elk-node1 ~]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/

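Basic Logstash usage

Logstash collects, processes, and outputs logs through a pipeline. This resembles a Linux shell pipeline xxx | ccc | ddd, where the output of xxx is fed to ccc and the output of ccc is fed to ddd.

A Logstash pipeline has three stages:

input --> filter (optional) --> output

Each stage is carried out by plugins, for example those for Filebeat, Elasticsearch, and Redis.

Each stage can also use several plugins at once. For example, the output stage can send events to Elasticsearch and also print them to the console through stdout.

This plugin-based design makes Logstash easy to extend and customize.

Common Logstash command-line options

-f: specifies the Logstash configuration file; Logstash is configured from that file.

-e: followed by a string that is used as the Logstash configuration (if the string is "", stdin is used as the input and stdout as the output by default).

-t: tests whether the configuration file is valid, then exits.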
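The -f and -t options can be combined to validate a pipeline file before it is put into service. The sketch below wraps that call; `check_config` and the `LOGSTASH_BIN` variable are hypothetical names, and the default binary path assumes the RPM layout used in this walkthrough.

```shell
#!/usr/bin/env bash
# Path of the logstash launcher; the default assumes the RPM install
# location used in this walkthrough. LOGSTASH_BIN is a hypothetical knob.
LOGSTASH_BIN="${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}"

# Validate a pipeline configuration file without starting the pipeline.
# Returns 2 if the file cannot be read, otherwise logstash's exit status.
check_config() {
  local conf="$1"
  if [ ! -r "$conf" ]; then
    echo "cannot read $conf" >&2
    return 2
  fi
  # -f selects the configuration file, -t tests it and exits
  "$LOGSTASH_BIN" -f "$conf" -t
}

# Usage:
#   check_config /etc/logstash/conf.d/system.conf && echo "configuration OK"
```

Checking readability first separates a permissions problem from a syntax problem, which otherwise both surface only as a failed start.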

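Start Logstash with -e, passing the configuration on the command line. Here input is the input stage and stdin (standard input) is a plugin; output is the output stage and stdout (standard output) is its plugin.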

[root@elk-node1 ~]# logstash -e 'input{stdin{}}output{stdout{}}'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

18:07:43.368 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/usr/share/logstash/data/queue"}

18:07:43.391 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/usr/share/logstash/data/dead_letter_queue"}

18:07:43.514 [LogStash::Runner] INFO logstash.agent - No persistent UUID file found. Generating new UUID {:uuid=>"0dfc709c-02e0-4486-b8f9-87d39279940c", :path=>"/usr/share/logstash/data/uuid"}

18:07:43.832 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}

18:07:43.910 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

18:07:44.109 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600} # this line indicates a successful start; the errors above can be ignored

www.baidu.com # typed manually

2020-05-11T10:08:03.327Z elk-node1 www.baidu.com

www.sina.com.cn # typed manually

2020-05-11T10:09:47.975Z elk-node1 www.sina.com.cn

# press Ctrl+C to exit when finished

^C18:09:51.612 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

18:09:51.626 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

Use the rubydebug codec to show detailed output; a codec is an encoder/decoder for event data.

[root@elk-node1 ~]# logstash -e 'input{stdin{}} output{stdout{codec=>rubydebug}}'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

18:16:53.950 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}

18:16:54.017 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

18:16:54.088 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com

{

"@timestamp" => 2020-05-11T10:17:08.349Z,

"@version" => "1",

"host" => "elk-node1",

"message" => "www.baidu.com"

}

www.sina.com.cn

{

"@timestamp" => 2020-05-11T10:17:16.354Z,

"@version" => "1",

"host" => "elk-node1",

"message" => "www.sina.com.cn"

}

^C18:17:19.317 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

18:17:19.328 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

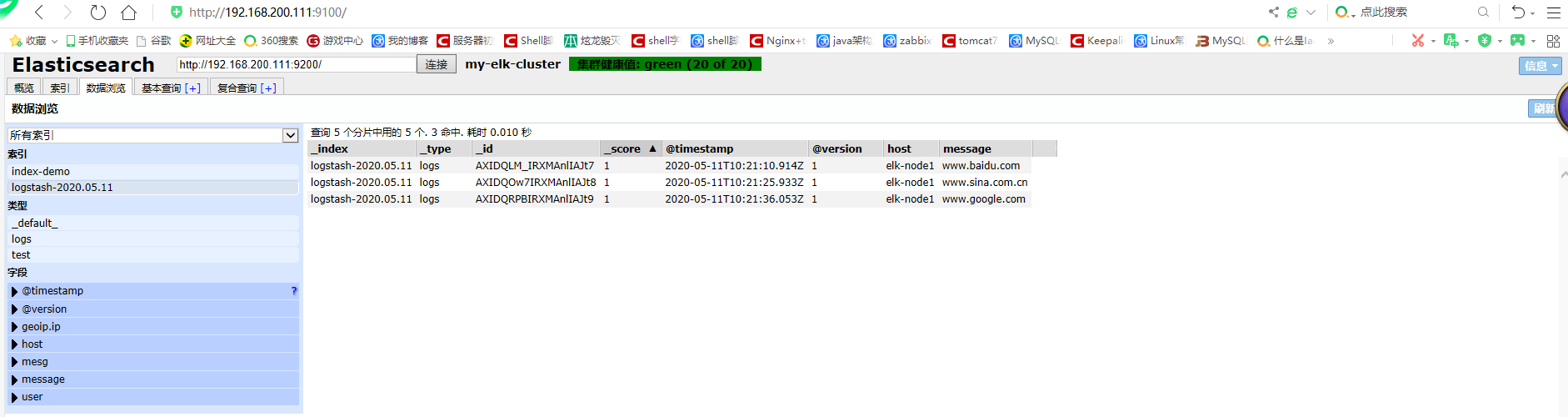

Use Logstash to write the input into Elasticsearch

[root@elk-node1 ~]# logstash -e 'input{stdin{}} output{ elasticsearch{hosts=>["192.168.200.111:9200"]}}'

ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path //usr/share/logstash/config/log4j2.properties. Using default config which logs to console

18:20:41.209 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://192.168.200.111:9200/]}}

18:20:41.214 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://192.168.200.111:9200/, :path=>"/"}

18:20:41.411 [[main]-pipeline-manager] WARN logstash.outputs.elasticsearch - Restored connection to ES instance {:url=>#<Java::JavaNet::URI:0x5169c104>}

18:20:41.422 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Using mapping template from {:path=>nil}

18:20:41.707 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}

18:20:41.742 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Installing elasticsearch template to _template/logstash

18:20:41.962 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>[#<Java::JavaNet::URI:0x2f3a7a0d>]}

18:20:41.965 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>1, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>125}

18:20:42.030 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

18:20:42.183 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

www.baidu.com

www.sina.com.cn

www.google.com

^C18:21:38.742 [SIGINT handler] WARN logstash.runner - SIGINT received. Shutting down the agent.

18:21:38.756 [LogStash::Runner] WARN logstash.agent - stopping pipeline {:id=>"main"}

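View the result

Using Logstash configuration files

A Logstash configuration file consists of three sections: input, output, and a filter section that is added only when needed. The standard layout is therefore: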

input {...}

filter {...}

output {...}

Each section can contain several plugin instances. For example, to read from two log files:

input {

file { path => "/var/log/messages" type =>"syslog"}

file { path => "/var/log/apache/access.log" type =>"apache"}

}

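Below is an example configuration file that collects system logs. Place it in the /etc/logstash/conf.d/ directory and Logstash loads it at startup. Make sure Logstash has permission to read the log files.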

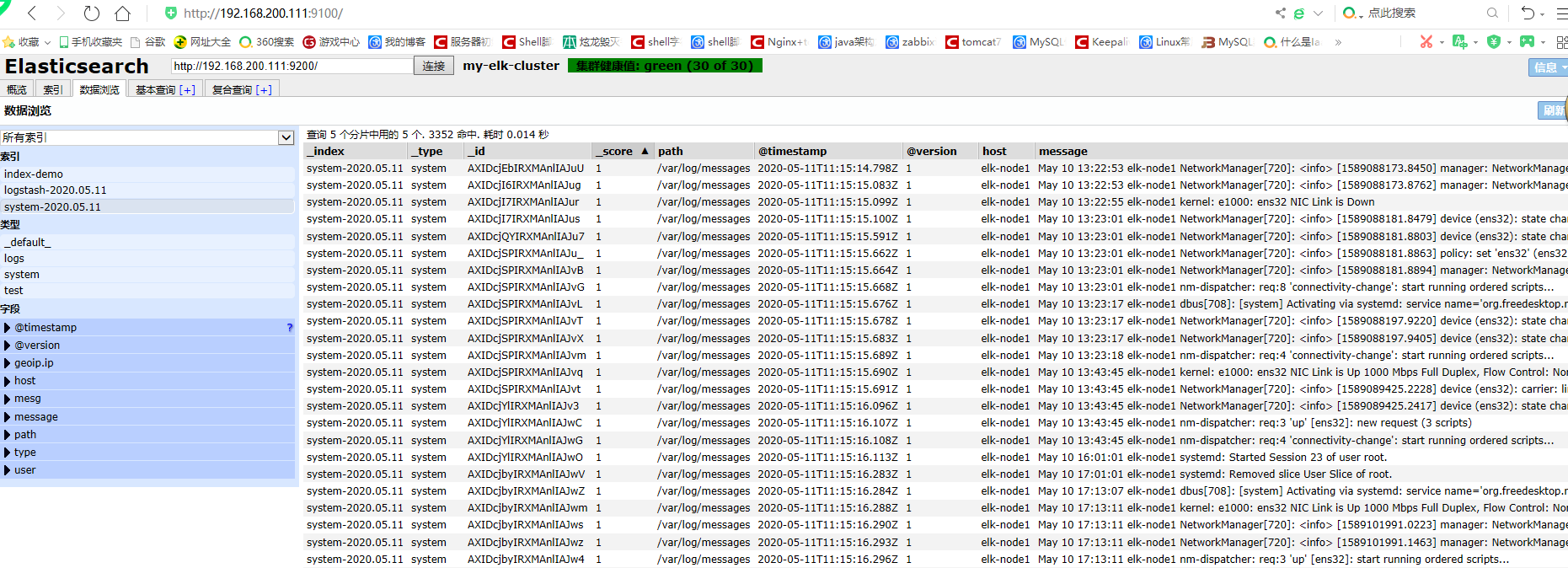

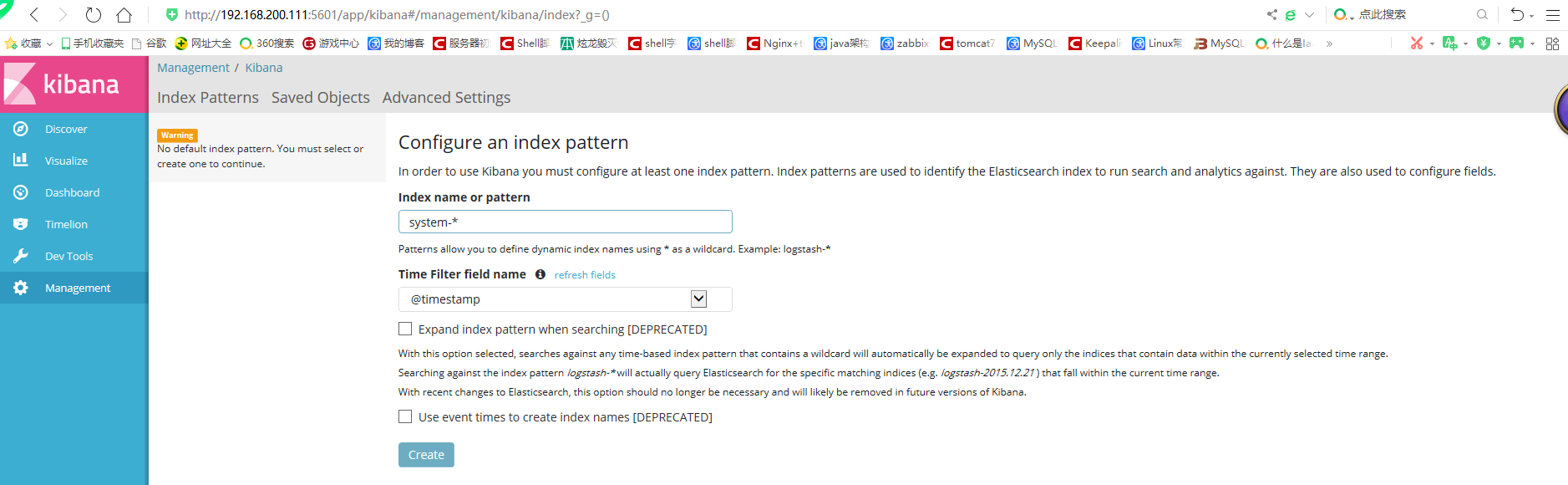

Example: collecting system logs

Place system.conf in the /etc/logstash/conf.d/ directory; Logstash loads it at startup.

[root@elk-node1 ~]# cd /etc/logstash/conf.d/

[root@elk-node1 conf.d]# vim system.conf

input {

file {

path => "/var/log/messages"

type => "system"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["192.168.200.111:9200"]

index => "system-%{+YYYY.MM.dd}"

}

}
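The index setting above uses a date pattern, so Logstash writes to one index per day, named from each event's @timestamp (evaluated in UTC). The sketch below computes the index name for a given day, which is handy when checking that data arrived; `index_for_day` is a hypothetical helper name and it assumes GNU date, as shipped with CentOS.

```shell
#!/usr/bin/env bash
# Print the index name that "<prefix>-%{+YYYY.MM.dd}" produces for a day.
# index_for_day is a hypothetical helper; it relies on GNU date (-d option).
index_for_day() {
  local prefix="$1" day="${2:-now}"
  # -u: evaluate the date in UTC, matching how the pattern is expanded
  date -u -d "$day" +"${prefix}-%Y.%m.%d"
}

# Usage:
#   index_for_day system 2020-05-11
#   curl -s "http://192.168.200.111:9200/$(index_for_day system)/_count?pretty"
```

Because the date is taken in UTC, events logged shortly after local midnight in a timezone ahead of UTC still land in the previous day's index.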

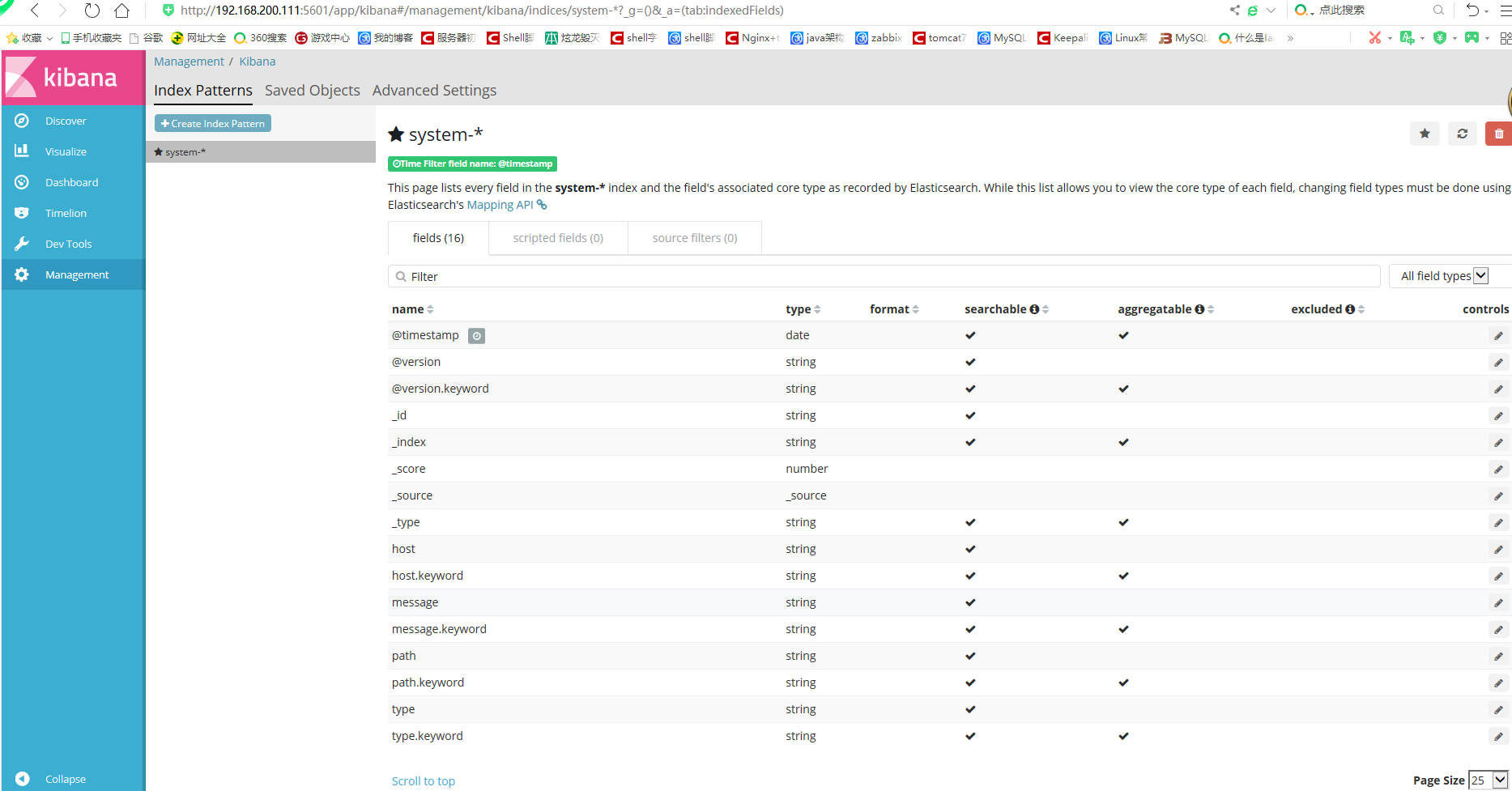

View the result in the browser

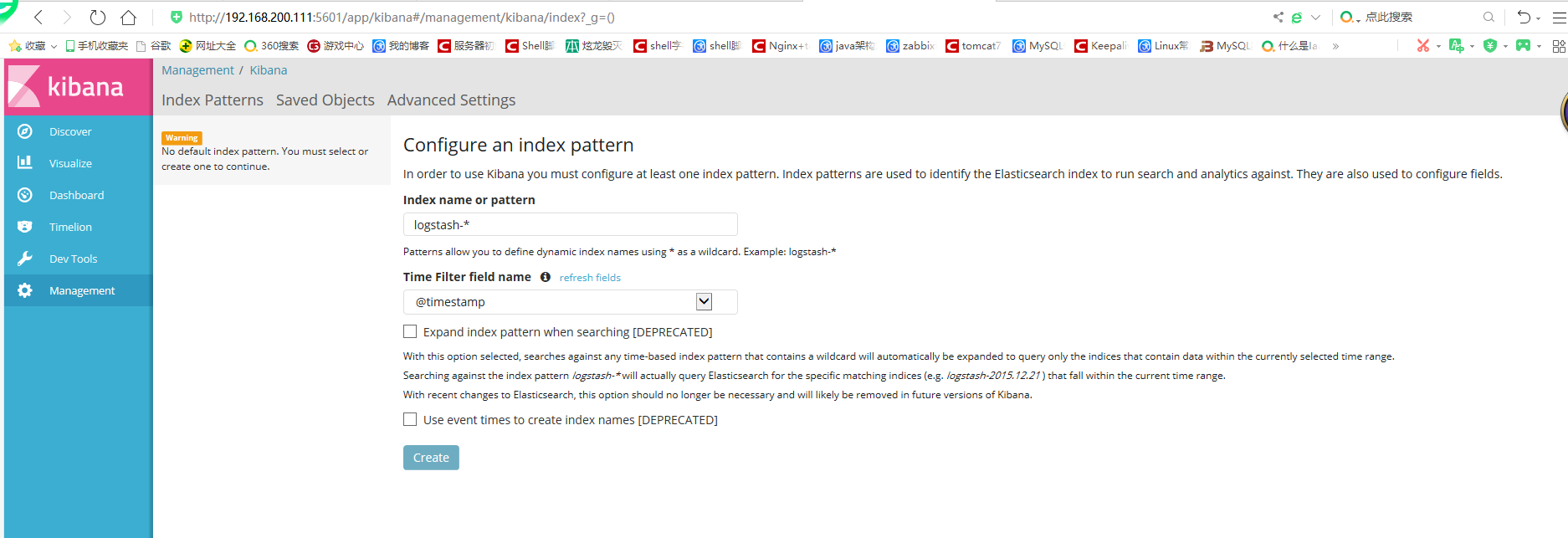

Installing Kibana

Install Kibana on the elk-node1 server and enable it to start at boot.

[root@elk-node1 ~]# rpm -ivh kibana-5.5.1-x86_64.rpm

warning: kibana-5.5.1-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:kibana-5.5.1-1 ################################# [100%]

[root@elk-node1 ~]# systemctl enable kibana.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /etc/systemd/system/kibana.service.

Edit the configuration file and start the service

[root@elk-node1 ~]# vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.200.111:9200"

kibana.index: ".kibana"

[root@elk-node1 ~]# systemctl start kibana.service

[root@elk-node1 ~]# netstat -lnpt | grep 5601

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 12140/node

Test access from a browser:

http://192.168.200.111:5601

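Note the error shown at the top of the page: a default index pattern has to be created.

Click the Discover button to view the chart and the log entries.

Hover the mouse pointer over host under "Available Fields" and click the add button to see the results filtered by "host".

Example: Apache access logs

Environment preparation

CentOS 7.5 virtual machine, IP address: 192.168.200.113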

[root@nginx ~]# hostname apache

[root@nginx ~]# bash

[root@apache ~]# yum -y install httpd

[root@apache ~]# java -version

java version "1.8.0_191"

Java(TM) SE Runtime Environment (build 1.8.0_191-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)

[root@apache ~]# rpm -ivh logstash-5.5.1.rpm

[root@apache ~]# systemctl start httpd.service

[root@apache ~]# systemctl stop firewalld.service

[root@apache ~]# cd /etc/logstash/conf.d/

[root@apache conf.d]# vim apache_log.conf

input {

file {

path => "/var/log/httpd/access_log"

type => "access"

start_position => "beginning"

}

file {

path => "/var/log/httpd/error_log"

type => "error"

start_position => "beginning"

}

}

output {

if [type] == "access" {

elasticsearch {

hosts => ["192.168.200.111:9200"]

index => "apache_access-%{+YYYY.MM.dd}"

}

}

if [type] == "error" {

elasticsearch {

hosts => ["192.168.200.111:9200"]

index => "apache_error-%{+YYYY.MM.dd}"

}

}

}

[root@apache conf.d]# /usr/share/logstash/bin/logstash -f apache_log.conf
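Once the pipeline is running and Apache has served a few requests, the two indices defined in the output section should appear in Elasticsearch. The sketch below filters the listing from the _cat/indices API by name prefix; `indices_with_prefix` is a hypothetical helper name, and it assumes the index name is the third column of the `?v` output, which holds for open indices.

```shell
#!/usr/bin/env bash
# Read "_cat/indices?v" output on stdin and print the names of the
# indices that start with the given prefix.
# indices_with_prefix is a hypothetical helper, not an Elasticsearch tool.
indices_with_prefix() {
  # NR > 1 skips the header row; index($3, p) == 1 means "$3 starts with p"
  awk -v p="$1" 'NR > 1 && index($3, p) == 1 { print $3 }'
}

# Usage:
#   curl -s 'http://192.168.200.111:9200/_cat/indices?v' | indices_with_prefix apache_
```

If nothing is printed, a reasonable first check is whether the logstash process can read the files under /var/log/httpd/.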