Notes on the problems I ran into while setting up Kafka 2.7.1, and how I solved them.

Background

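Our three existing Kafka clusters run fairly old versions (0.9, 1.0 and 1.1), and their disks are close to full, so they can no longer keep up with growing product demand. The plan is therefore to build a new cluster on a newer Kafka version.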

Goals of this build

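This is a test build of a three-node Kafka cluster. The old clusters were set up long ago on old versions, so it is worth working through the pitfalls again. I also want to check whether the access-control features of the new version meet our production requirements.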

Why 2.7.1

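By this time Kafka 2.8 had been out for three months. 2.8 can run without ZooKeeper (the new KRaft mode, offered only as early access in that release). 2.8 is a stable release, but this cluster will carry business traffic, so I am not using it for now. 2.7.1 was the next-newest release, and I prefer newer over older. (Honestly, it was a gut call.)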

ZooKeeper setup

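I chose ZooKeeper 3.5.9, the version that goes with Kafka 2.7.1. I'll skip the installation and configuration details and only cover the problems I hit.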

Problem 1: ZooKeeper failed to start. The log showed:

```
java.lang.UnsupportedClassVersionError: org/springframework/web/SpringServletContainerInitializer : Unsupported major.minor version 52.0 (unable to load class org.springframework.web.SpringServletContainerInitializer)
```

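Cause: the wrong Java version. ZooKeeper needs JDK 1.8, but the machines had JDK 1.7 installed by default. "Unsupported major.minor version 52.0" means the class was compiled for Java 8 (class-file version 52), which a Java 7 JVM cannot load. After installing 1.8, ZooKeeper started normally. For the installation steps, see: https://www.cnblogs.com/fswhq/p/10713429.html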
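The "52.0" in that error is the class-file major version. A JVM refuses any class whose major version is newer than its own: Java 7 is 51 and Java 8 is 52. A quick way to check which Java release a compiled class targets is to read its 8-byte header. Below is a minimal Python sketch of that check. `class_file_java_version` is a hypothetical helper, not part of any tool.

```python
import struct


def class_file_java_version(header: bytes) -> int:
    """Return the Java release targeted by a .class file, given its first 8 bytes.

    Header layout (big-endian): u4 magic 0xCAFEBABE, u2 minor_version, u2 major_version.
    For major >= 49 (Java 5) the Java release is major - 44, so 52 -> Java 8.
    """
    if len(header) < 8:
        raise ValueError("need at least 8 bytes")
    magic, _minor, major = struct.unpack(">IHH", header[:8])
    if magic != 0xCAFEBABE:
        raise ValueError("not a Java class file")
    if major < 49:
        raise ValueError(f"pre-Java-5 class file (major version {major})")
    return major - 44
```

To inspect a class inside a jar, pass it the bytes from `zipfile.ZipFile(jar_path).read(entry_name)`.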

Problem 2: after ZooKeeper started, the cluster state was wrong. The log showed:

```
ERROR [/xxxxx:3888:QuorumCnxManager$Listener@958] - Exception while listening
java.net.BindException: Cannot assign requested address (Bind failed)
    at java.net.PlainSocketImpl.socketBind(Native Method)
    at java.net.AbstractPlainSocketImpl.bind(AbstractPlainSocketImpl.java:387)
    at java.net.ServerSocket.bind(ServerSocket.java:375)
    at java.net.ServerSocket.bind(ServerSocket.java:329)
```

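Fix: add `quorumListenOnAllIPs=true` to zoo.cfg. Physical machines apparently don't need it. The three test hosts are VMs, so some network configuration on them is probably involved, though I don't fully understand it.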

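From the official documentation: quorumListenOnAllIPs: when set to true, the ZooKeeper server will listen for connections from its peers on all available IP addresses, and not only the address configured in the server list of the configuration file. It affects the connections handling the ZAB protocol and the Fast Leader Election protocol. Default value is false.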
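Without that option, each server binds its election port to the address on its own `server.N` line. "Cannot assign requested address" means that address is not on any local interface, which can happen on VMs behind NAT or with floating IPs. Below is a minimal Python sketch to test, on each host, whether the address from its own `server.N` line is locally bindable. `can_bind` is a hypothetical helper. It binds to port 0, so it needs no free ZooKeeper port.

```python
import errno
import socket


def can_bind(ip: str) -> bool:
    """Return True if this host can bind a TCP socket to `ip` (port 0 = any free port).

    Returns False on EADDRNOTAVAIL, the errno behind Java's
    "Cannot assign requested address"; other socket errors are re-raised.
    """
    family = socket.AF_INET6 if ":" in ip else socket.AF_INET
    with socket.socket(family, socket.SOCK_STREAM) as s:
        try:
            s.bind((ip, 0))
        except OSError as e:
            if e.errno == errno.EADDRNOTAVAIL:
                return False
            raise
    return True
```

If `can_bind("xx.xx.xx.xx")` returns False for a node's own configured address, either fix the address in zoo.cfg or use `quorumListenOnAllIPs=true` as above.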

Problem 3: during cluster startup, one node could never join the cluster. The log showed connection failures and this warning:

```
WARN [QuorumConnectionThread-[myid=3]-3:QuorumCnxManager@381] - Cannot open channel to 2 at election address /xxxx:13889
java.net.ConnectException: Connection refused (Connection refused)
    at java.net.PlainSocketImpl.socketConnect(Native Method)
    at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)
    at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)
    at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
    at java.net.Socket.connect(Socket.java:589)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager.initiateConnection(QuorumCnxManager.java:373)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager$QuorumConnectionReqThread.run(QuorumCnxManager.java:436)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
```

The warning says "Cannot open channel to 2 at election address /xxxx:13889", yet this node's myid is 3.

Cause: two nodes had their zoo.cfg entries and myid files swapped. The machine listed as server.2 had its myid set to 3.
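A simple check catches this kind of swap early. On each node, the number in `myid` must equal the N of the `server.N` line that lists that node's own address. Below is a minimal Python sketch of that check. `parse_servers` and `myid_matches` are hypothetical helper names, and the regex only handles hostname/IPv4 entries.

```python
import re

# Matches "server.N=host:..." lines in zoo.cfg (hostname/IPv4 only; simplified)
SERVER_LINE = re.compile(r"^\s*server\.(\d+)\s*=\s*([^:\s]+):")


def parse_servers(zoo_cfg_text: str) -> dict:
    """Map each server id N to the host on its server.N line."""
    servers = {}
    for line in zoo_cfg_text.splitlines():
        m = SERVER_LINE.match(line)
        if m:
            servers[int(m.group(1))] = m.group(2)
    return servers


def myid_matches(zoo_cfg_text: str, myid_text: str, local_host: str) -> bool:
    """True if the server.N line for this node's myid names this node's own host."""
    myid = int(myid_text.strip())
    return parse_servers(zoo_cfg_text).get(myid) == local_host
```

Run it on each node with the contents of zoo.cfg, the myid file from the data directory, and the node's own address exactly as written in zoo.cfg.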

Kafka cluster setup

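Setting up the Kafka cluster itself was straightforward and caused no problems, so I'll skip it. The rest of this post focuses on Kafka access control (mostly hands-on testing, no internals).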

Kafka's built-in access control doesn't meet our needs, so I'm going to try modifying the Kafka source and see whether that works.

1. Download the Kafka 2.7.1 source from the official site.

2. Modify the authentication code.

Path:

/xxxx/kafka-2.7.1-src/clients/src/main/java/org/apache/kafka/common/security/plain/internals/PlainServerCallbackHandler.java

I'll post the actual changes once they run successfully, so I don't end up embarrassing myself.

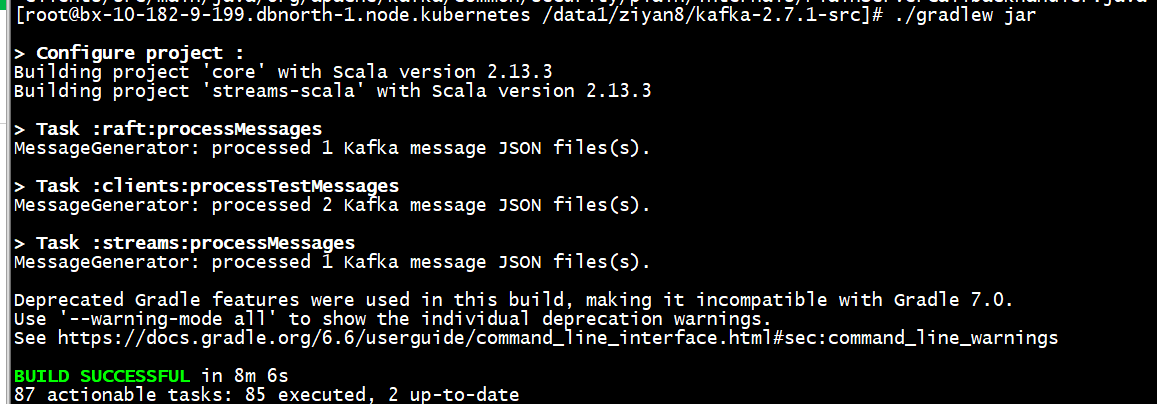

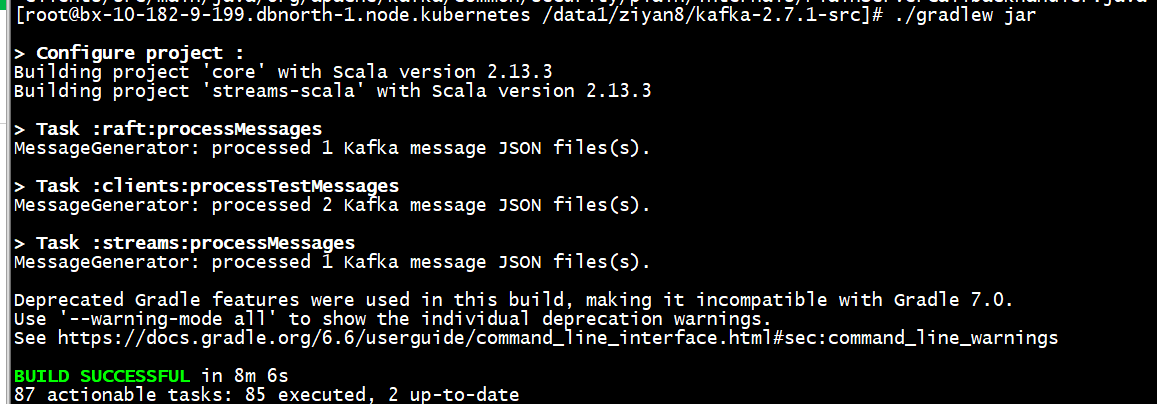

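3. Build. After changing the code, make sure Java 1.8 is installed, then run this in the source root:

```shell
./gradlew clean build -x test
```

Then run `./gradlew jar` to build the jars. After I fixed a few syntax errors, it actually succeeded.

Then run `./gradlew srcJar` to build the source jars.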

Of the generated jars, we only need the kafka-clients jar. The directory xxx/kafka-2.7.1-src/clients/build/libs/ contains two jars, kafka-clients-2.7.1.jar and kafka-clients-2.7.1-sources.jar. The first is the one we need.

Next, copy kafka-clients-2.7.1.jar into kafka/libs, overwriting the original jar. That completes the source modification. Next comes the SASL/PLAIN authentication setup.

SASL/PLAIN authentication

First, configure ZooKeeper

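1. Add the following to zoo.cfg to declare the authentication method. This covers authentication between the brokers and ZooKeeper.

```properties
authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
requireClientAuthScheme=sasl
jaasLoginRenew=3600000
```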

2. Create zk_server_jaas.conf. It specifies the usernames and passwords required to connect to ZooKeeper:

```
Server {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="admin-2019"
    user_kafka="kafka-2019"
    user_producer="prod-2019";
};
```

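3. Copy the following jars from kafka/libs into zk/lib, overwriting any existing copies:

```
kafka-clients-0.10.0.1.jar
lz4-1.3.0.jar
slf4j-api-1.7.21.jar
slf4j-log4j12-1.7.21.jar
snappy-java-1.1.2.6.jar
```

These version numbers come from an older Kafka release, and kafka-clients-0.10.0.1.jar is not part of Kafka 2.7.1. Copy the corresponding jars that actually ship in your own kafka/libs.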

4. Edit zkEnv.sh. The last line is new and points to zk_server_jaas.conf:

```shell
#add the zoocfg dir to classpath
CLASSPATH="$ZOOCFGDIR:$CLASSPATH"

for i in "$ZOOBINDIR"/../zookeeper-server/src/main/resources/lib/*.jar
do
    CLASSPATH="$i:$CLASSPATH"
done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=/data1/apache-zookeeper-3.5.9-bin/conf/zk_server_jaas.conf "
```

5. Restart all ZooKeeper nodes one at a time and watch the logs for errors. If none appear, this part is essentially done.

Next, configure Kafka

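1. Create kafka_server_jaas.conf with the content below.

The KafkaServer section configures user access for the Kafka cluster. `username` and `password` are the credentials the brokers use to talk to each other. Each `user_xxx="yyy"` entry defines a user that may produce or consume, where xxx is the username and yyy the password. With the stock access control, every user must be listed in this file; users cannot be added dynamically.

The Client section holds the username and password the brokers use to connect to ZooKeeper. It only has to match the ZooKeeper configuration above.

```
KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="admin"
    user_admin="admin"
    user_producer="producer"
    user_consumer="consumer";
};

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafka"
    password="kafka-2019";
};
```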
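With the stock setup, users can't be added on the fly because of how the broker's PLAIN handler checks a login. It looks up the JAAS option named `user_<username>` in the KafkaServer section and compares that option with the supplied password; the original Java appears, commented out, later in this post. The JAAS file is loaded when the broker JVM starts. Below is a Python sketch that mimics the lookup. The parser is deliberately simplified (double-quoted values only), and `jaas_options` and `stock_plain_authenticate` are hypothetical names.

```python
import re


def jaas_options(jaas_text: str, section: str) -> dict:
    """Return the key="value" options of one JAAS section, e.g. "KafkaServer".

    Simplified parser: assumes double-quoted values and a section closed by "};".
    """
    m = re.search(r"\b" + re.escape(section) + r"\s*\{(.*?)\};", jaas_text, re.S)
    if m is None:
        raise KeyError(section)
    return dict(re.findall(r'(\w+)\s*=\s*"([^"]*)"', m.group(1)))


def stock_plain_authenticate(options: dict, username: str, password: str) -> bool:
    """Mimic the stock PLAIN check: the expected password is option "user_" + username."""
    if username is None:
        return False
    expected = options.get("user_" + username)
    return expected is not None and expected == password
```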

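2. Configure server.properties as follows. `kafka.security.auth.SimpleAclAuthorizer` still works in 2.7, but it has been deprecated since 2.4 in favour of `kafka.security.authorizer.AclAuthorizer`.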

```properties
listeners=SASL_PLAINTEXT://xx.xx.xx.xx:19508
advertised.listeners=SASL_PLAINTEXT://xx.xx.xx.xx:19508
security.inter.broker.protocol=SASL_PLAINTEXT
sasl.mechanism.inter.broker.protocol=PLAIN
sasl.enabled.mechanisms=PLAIN
allow.everyone.if.no.acl.found=true
authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer
super.users=User:admin
```
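These settings have to agree with each other. The inter-broker protocol must appear among the listeners, and the inter-broker SASL mechanism must be listed in `sasl.enabled.mechanisms`. Below is a small Python sketch of that consistency check. It assumes a plain `key=value` file and that listener names equal protocol names (no `listener.security.protocol.map`). The function names are hypothetical. When a key is missing, the check falls back to the broker defaults (PLAINTEXT, GSSAPI).

```python
def parse_properties(text: str) -> dict:
    """Parse simple key=value lines (skips comments/blank lines; no escapes or ':' separators)."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_sasl_plain(props: dict) -> list:
    """Return human-readable problems with the inter-broker SASL settings ([] if none)."""
    problems = []
    protocol = props.get("security.inter.broker.protocol", "PLAINTEXT")
    for key in ("listeners", "advertised.listeners"):
        # advertised.listeners falls back to listeners when unset
        value = props.get(key) or props.get("listeners", "")
        names = {l.split("://", 1)[0].strip() for l in value.split(",") if l.strip()}
        if protocol not in names:
            problems.append(f"{key} has no {protocol} listener")
    mechanism = props.get("sasl.mechanism.inter.broker.protocol", "GSSAPI")
    enabled = {m.strip() for m in props.get("sasl.enabled.mechanisms", "GSSAPI").split(",")}
    if mechanism not in enabled:
        problems.append(f"{mechanism} is not in sasl.enabled.mechanisms")
    return problems
```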

3. Edit kafka-run-class.sh to add the JAAS file path to the JVM startup options. Because the file is only read at startup, changing the users requires a restart.

Before:

```shell
# Generic jvm settings you want to add
if [ -z "$KAFKA_OPTS" ]; then
  KAFKA_OPTS=""
fi
```

After:

```shell
# Generic jvm settings you want to add
if [ -z "$KAFKA_OPTS" ]; then
  KAFKA_OPTS="-Djava.security.auth.login.config=/data1/kafka_2.13-2.7.1/config/kafka_server_jaas.conf"
fi
```

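ZooKeeper and Kafka are now configured. Next comes testing, from two angles: the Python client and the command line. The command line is more involved, so it comes first.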

1. Create a topic:

```shell
bin/kafka-topics.sh --create --zookeeper xxxx:2181,xxxx:2181,xxxx:2181 --topic test10 --partitions 10 --replication-factor 3
```

2. Configure producer.properties and consumer.properties. This is where the authentication method is specified.

Add to producer.properties:

```properties
security.protocol=SASL_PLAINTEXT
sasl.mechanism=PLAIN
```

Add to consumer.properties:

```properties
security.protocol=SASL_PLAINTEXT
sasl.mechanism=PLAIN
# consumer group id
group.id=test-group
```

3. Create kafka_client_scram_consumer_jaas.conf and kafka_client_scram_producer_jaas.conf. They hold the usernames and passwords the consumer and the producer use. Note that these credentials differ from the ones in kafka_server_jaas.conf.

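Contents of kafka_client_scram_consumer_jaas.conf:

```
KafkaClient {
    org.apache.kafka.common.security.scram.ScramLoginModule required
    username="consumer_test"
    password="consumer_test";
};
```

Despite the "scram" in the file names, the mechanism in use is PLAIN. This file uses ScramLoginModule, while the producer file below uses PlainLoginModule. PlainLoginModule is the consistent choice for `sasl.mechanism=PLAIN`.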

Contents of kafka_client_scram_producer_jaas.conf:

```
KafkaClient {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="producer_test"
    password="producer_test";
};
```

4. Modify kafka-console-consumer.sh and kafka-console-producer.sh so that they load the corresponding JAAS file. I recommend editing copies; here they are named kafka-console-consumer-scram.sh and kafka-console-producer-scram.sh.

kafka-console-consumer-scram.sh

Before:

```shell
exec $(dirname $0)/kafka-run-class.sh kafka.tools.ConsoleConsumer "$@"
```

After:

```shell
exec $(dirname $0)/kafka-run-class.sh -Djava.security.auth.login.config=/data1/kafka_2.13-2.7.1/config/kafka_client_scram_consumer_jaas.conf kafka.tools.ConsoleConsumer "$@"
```

kafka-console-producer-scram.sh

Before:

```shell
exec $(dirname $0)/kafka-run-class.sh kafka.tools.ConsoleProducer "$@"
```

After:

```shell
exec $(dirname $0)/kafka-run-class.sh -Djava.security.auth.login.config=/data1/kafka_2.13-2.7.1/config/kafka_client_scram_producer_jaas.conf kafka.tools.ConsoleProducer "$@"
```

Testing the producer

With username producer_test and password producer_test1:

```
>>bin/kafka-console-producer-scram.sh --bootstrap-server 10.182.13.237:19508,10.182.13.238:19508 --topic test10 --producer.config ./config/producer.properties
[2021-07-13 11:54:17,303] ERROR Error when sending message to topic test10 with key: null, value: 4 bytes with error: (org.apache.kafka.clients.producer.internals.ErrorLoggingCallback)
org.apache.kafka.common.errors.SaslAuthenticationException: Authentication failed: Invalid username or password
```

With username producer_test and password producer_test:

```
>>bin/kafka-console-producer-scram.sh --bootstrap-server 10.182.13.237:19508,10.182.13.238:19508 --topic test10 --producer.config ./config/producer.properties
>test:1
>
```

Testing the consumer

```shell
bin/kafka-console-consumer-scram.sh --bootstrap-server 10.182.13.237:19508 --topic test10 --from-beginning --consumer.config ./config/consumer.properties
```

With username consumer_test and password consumer_test1:

```
>>bin/kafka-console-consumer-scram.sh --bootstrap-server 10.182.13.237:19508 --topic test10 --from-beginning --consumer.config ./config/consumer.properties
[2021-07-13 11:58:49,030] ERROR [Consumer clientId=consumer-test-group-1, groupId=test-group] Connection to node -1 (10.182.13.237/10.182.13.237:19508) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)
[2021-07-13 11:58:49,031] WARN [Consumer clientId=consumer-test-group-1, groupId=test-group] Bootstrap broker 10.182.13.237:19508 (id: -1 rack: null) disconnected (org.apache.kafka.clients.NetworkClient)
[2021-07-13 11:58:49,032] ERROR Error processing message, terminating consumer process: (kafka.tools.ConsoleConsumer$)
org.apache.kafka.common.errors.SaslAuthenticationException: Authentication failed: Invalid username or password
Processed a total of 0 messages
```

With username consumer_test and password consumer_test:

```
>>bin/kafka-console-consumer-scram.sh --bootstrap-server 10.182.13.237:19508 --topic test10 --from-beginning --consumer.config ./config/consumer.properties
[2021-07-13 11:57:19,626] ERROR Error processing message, terminating consumer process: (kafka.tools.ConsoleConsumer$)
org.apache.kafka.common.errors.GroupAuthorizationException: Not authorized to access group: test-group
Processed a total of 0 messages
```

It looks like the group is not authorized.

Grant access to the group:

```shell
bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=10.182.9.145:2181,10.182.13.237:2181,10.182.13.238:2181 --add --allow-principal User:consumer_test --operation Read --group test-group
```

Try again:

```
>>bin/kafka-console-consumer-scram.sh --bootstrap-server 10.182.13.237:19508 --topic test10 --from-beginning --consumer.config ./config/consumer.properties
test:1
test:1
```

Now we get results.

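So far: authentication passes when the username and password are identical and fails otherwise. Read/Write ACLs on the topic are not needed, but Read permission on the group must be granted.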

Next, testing with the Python client. The code is below; it is simple, so I won't walk through it.

```python
import json

from kafka import KafkaProducer

producer = KafkaProducer(bootstrap_servers=["10.182.9.145:19508"],
                         security_protocol="SASL_PLAINTEXT",
                         sasl_mechanism="PLAIN",
                         sasl_plain_username="producer_aaa",
                         sasl_plain_password="producer_aaa")

data = json.dumps({"test": "1"})
for i in range(1, 10):
    # bytes(data) only works on Python 2; encoding explicitly works on Python 2 and 3
    producer.send("test10", value=data.encode("utf-8"))
producer.close()  # close() waits for pending sends to complete
```

```python
#!/usr/bin/env python
from kafka import KafkaConsumer

# To consume messages
consumer = KafkaConsumer('test10',
                         group_id='test-group',
                         bootstrap_servers=['10.182.9.145:19508'],
                         auto_offset_reset="earliest",
                         security_protocol="SASL_PLAINTEXT",
                         sasl_mechanism="PLAIN",
                         sasl_plain_username="consumer_test",
                         sasl_plain_password="consumer_test")
for message in consumer:
    # message value is raw byte string -- decode if necessary!
    # e.g., for unicode: `message.value.decode('utf-8')`
    print("%s:%d:%d: key=%s value=%s" % (message.topic, message.partition,
                                          message.offset, message.key,
                                          message.value))
```

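The remaining problem is that we need proper access control. Right now every producer can write to every topic by default, and consumers can only be restricted through the group, which doesn't meet production requirements. In principle Kafka's authentication and authorization are separate, and I only modified the authentication part of the source.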

Reading the official docs, testing, guessing...

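Found the cause. If a topic gets no ACLs after it is created, every (authenticated) user can access it. Once any ACL is added for the topic, only the users in its ACL list can access it. This follows from `allow.everyone.if.no.acl.found=true` in server.properties.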
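Below is a simplified Python model of the rule just described. It assumes `allow.everyone.if.no.acl.found=true` and `super.users=User:admin` as in the server.properties above. It is an illustration, not Kafka's authorizer code, and `is_authorized` is a hypothetical name.

```python
def is_authorized(acls: dict, resource: str, principal: str, operation: str,
                  super_users: frozenset = frozenset(),
                  allow_everyone_if_no_acl_found: bool = True) -> bool:
    """Simplified model of the authorizer decision described above.

    acls maps a resource (e.g. "Topic:test11") to a set of (principal, operation)
    allow entries. Deny rules, host filters, wildcard/prefixed resources and
    implied operations (e.g. Write implying Describe) are ignored.
    """
    if principal in super_users:
        return True
    entries = acls.get(resource, set())
    if not entries:
        # No ACLs at all on this resource: fall back to allow.everyone.if.no.acl.found
        return allow_everyone_if_no_acl_found
    return (principal, operation) in entries
```

For example, with `acls = {"Topic:test11": {("User:producer_zzz", "Write")}}`, any user can write to test10 (no ACLs), but only producer_zzz (and the super user) can write to test11.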

With the goal reached, here are the changes. I replaced the body of the `authenticate` function so that authentication passes whenever the password equals the username:

```java
// Original implementation:
// protected boolean authenticate(String username, char[] password) throws IOException {
//     if (username == null)
//         return false;
//     else {
//         String expectedPassword = JaasContext.configEntryOption(jaasConfigEntries,
//                 JAAS_USER_PREFIX + username,
//                 PlainLoginModule.class.getName());
//         return expectedPassword != null && Arrays.equals(password, expectedPassword.toCharArray());
//     }
// }

// Modified: accept the login when the password equals the username.
// expectedPassword no longer exists in this version, so it must not be referenced.
protected boolean authenticate(String username, char[] password) throws IOException {
    if (username == null)
        return false;
    else {
        return Arrays.equals(password, username.toCharArray());
    }
}
```

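The next step is to change the logic so that it reads a local file and authenticates based on whether the username and password appear in it. This makes the business users and passwords easier to manage.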

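The change is below. The logic is simple: whether authentication passes is decided by looking up the contents of users.properties, which has one `username:password` per line.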

```java
// Needs these imports in PlainServerCallbackHandler.java (if not already present):
// import java.io.BufferedReader;
// import java.io.FileReader;
// import java.io.IOException;

protected boolean authenticate(String username, char[] password) throws IOException {
    if (username == null)
        return false;
    String pass = String.valueOf(password);
    // Authentication passes only if "username:password" is listed in users.properties
    int flag = readFileContent("/xxxx/kafka_2.13-2.7.1/config/users.properties", username, pass);
    return flag == 1;
}

// Returns 1 if some line of fileName is exactly "username:password", otherwise 0.
// The file is re-read on every call, so edits take effect without a restart.
public static int readFileContent(String fileName, String username, String pass) {
    // try-with-resources closes the reader on every path
    try (BufferedReader reader = new BufferedReader(new FileReader(fileName))) {
        String tempStr;
        while ((tempStr = reader.readLine()) != null) {
            // Split at the first ':' only; lines without ':' are skipped instead of throwing
            String[] userAndPassword = tempStr.split(":", 2);
            if (userAndPassword.length == 2
                    && userAndPassword[0].equals(username)
                    && userAndPassword[1].equals(pass))
                return 1;
        }
    } catch (IOException e) {
        e.printStackTrace();
    }
    return 0;
}
```
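Below is a Python sketch of the same file-based check, to show why users can now be added without a restart: the file is re-read on every authentication. The trade-off is that every login costs a file read. `file_authenticate` is a hypothetical name. Like the Java version, it rejects the login if the file cannot be read.

```python
from pathlib import Path


def file_authenticate(users_file, username, password) -> bool:
    """Re-read users_file on every call; accept only an exact 'username:password' line."""
    if username is None:
        return False
    try:
        lines = Path(users_file).read_text(encoding="utf-8").splitlines()
    except OSError:
        return False  # unreadable or missing file -> reject
    for line in lines:
        user, sep, pw = line.partition(":")  # split at the first ':' only
        if sep and user == username and pw == password:
            return True
    return False
```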

Rebuild, replace kafka-clients-2.7.1.jar with the new jar, and restart the Kafka cluster.

A flood of errors followed, like these:

```
[2021-07-14 16:59:43,312] ERROR [Controller id=145, targetBrokerId=145] Connection to node 145 (xx.xx.xx.xx/xx.xx.xx.xx:19508) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)
[2021-07-14 16:59:43,413] INFO [SocketServer brokerId=145] Failed authentication with /xx.xx.xx.xx (Authentication failed: Invalid username or password) (org.apache.kafka.common.network.Selector)
[2021-07-14 16:59:43,485] INFO [Controller id=145, targetBrokerId=237] Failed authentication with xx.xx.xx.xx/xx.xx.xx.xx (Authentication failed: Invalid username or password) (org.apache.kafka.common.network.Selector)
[2021-07-14 16:59:43,485] ERROR [Controller id=145, targetBrokerId=237] Connection to node 237 (xx.xx.xx.xx/xx.xx.xx.xx:19508) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)
[2021-07-14 16:59:43,713] INFO [Controller id=145, targetBrokerId=145] Failed authentication with xx.xx.xx.xx/xx.xx.xx.xx (Authentication failed: Invalid username or password) (org.apache.kafka.common.network.Selector)
[2021-07-14 16:59:43,713] ERROR [Controller id=145, targetBrokerId=145] Connection to node 145 (xx.xx.xx.xx/xx.xx.xx.xx:19508) failed authentication due to: Authentication failed: Invalid username or password (org.apache.kafka.clients.NetworkClient)
[2021-07-14 16:59:43,815] INFO [SocketServer brokerId=145] Failed authentication with /xx.xx.xx.xx (Authentication failed: Invalid username or password) (org.apache.kafka.common.network.Selector)
```

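The cause: connections between brokers also go through the `authenticate` function (every failure above is a controller or SocketServer connection). users.properties was still empty, so every authentication failed. After I added all the usernames and passwords to the file, the errors went away.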
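Once `authenticate` consults users.properties, that file must also contain the credentials the brokers present to each other. These are the `username`/`password` from the KafkaServer section of kafka_server_jaas.conf, admin:admin in the config above. Below is a minimal Python pre-restart check under that assumption. The JAAS parser is simplified, and `broker_credentials` and `missing_users_entries` are hypothetical names.

```python
import re


def broker_credentials(jaas_text: str) -> str:
    """Return the "username:password" the brokers use, from the KafkaServer JAAS section."""
    m = re.search(r"\bKafkaServer\s*\{(.*?)\};", jaas_text, re.S)
    if m is None:
        raise KeyError("KafkaServer")
    opts = dict(re.findall(r'(\w+)\s*=\s*"([^"]*)"', m.group(1)))
    return f'{opts["username"]}:{opts["password"]}'


def missing_users_entries(jaas_text: str, users_text: str) -> list:
    """List the broker credential lines that users.properties still lacks."""
    present = {line.strip() for line in users_text.splitlines()}
    needed = [broker_credentials(jaas_text)]
    return [entry for entry in needed if entry not in present]
```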

Next, testing with the Python client.

1. Create a test topic, test11:

```shell
bin/kafka-topics.sh --create --zookeeper xxxx:2181,xxxx:2181,xxxx:2181 --topic test11 --partitions 10 --replication-factor 3
```

2. Writing data with the Python producer now fails, using username producer_zzz and password producer_lll:

```
D:\python_pycharm\venv\Scripts\python.exe D:/python_pycharm/kafka_demo/kakfa_produce.py
Traceback (most recent call last):
  File "D:/python_pycharm/kafka_demo/kakfa_produce.py", line 10, in <module>
    sasl_plain_password="producer_lll")
  File "D:\python_pycharm\venv\lib\site-packages\kafka\producer\kafka.py", line 347, in __init__
    **self.config)
  File "D:\python_pycharm\venv\lib\site-packages\kafka\client_async.py", line 216, in __init__
    self._bootstrap(collect_hosts(self.config['bootstrap_servers']))
  File "D:\python_pycharm\venv\lib\site-packages\kafka\client_async.py", line 250, in _bootstrap
    bootstrap.connect()
  File "D:\python_pycharm\venv\lib\site-packages\kafka\conn.py", line 374, in connect
    if self._try_authenticate():
  File "D:\python_pycharm\venv\lib\site-packages\kafka\conn.py", line 451, in _try_authenticate
    raise self._sasl_auth_future.exception  # pylint: disable-msg=raising-bad-type
kafka.errors.AuthenticationFailedError: AuthenticationFailedError: Authentication failed for user producer_zzz
```

As the traceback shows, authentication failed.

3. Add `producer_zzz:producer_lll` to users.properties and try again:

```
D:\python_pycharm\venv\Scripts\python.exe D:/python_pycharm/kafka_demo/kakfa_produce.py

Process finished with exit code 0
```

The write succeeds. The new topic has no ACLs, so any producer can write to it. This also confirms that users can now be added on the fly, without a restart.

4. Going further: grant an ACL.

```shell
bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=xx.xx.xx.xx:2181 --add --allow-principal User:producer_zzz --operation Write --topic test11
```

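Now other users can no longer write to the topic freely, while producer_zzz still can. Other users get the error below. With kafka-python, the denial shows up as a metadata timeout rather than an explicit authorization error.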

```
D:\python_pycharm\venv\Scripts\python.exe D:/python_pycharm/kafka_demo/kakfa_produce.py
Traceback (most recent call last):
  File "D:/python_pycharm/kafka_demo/kakfa_produce.py", line 37, in <module>
    producer.send("test11",value=bytes(data))
  File "D:\python_pycharm\venv\lib\site-packages\kafka\producer\kafka.py", line 504, in send
    self._wait_on_metadata(topic, self.config['max_block_ms'] / 1000.0)
  File "D:\python_pycharm\venv\lib\site-packages\kafka\producer\kafka.py", line 631, in _wait_on_metadata
    "Failed to update metadata after %.1f secs." % max_wait)
kafka.errors.KafkaTimeoutError: KafkaTimeoutError: Failed to update metadata after 60.0 secs.
```

5. Next, test the consumer.

Running it directly fails:

```
D:\python_pycharm\venv\Scripts\python.exe D:/python_pycharm/kafka_demo/kafka_consumer.py
Traceback (most recent call last):
  File "D:/python_pycharm/kafka_demo/kafka_consumer.py", line 16, in <module>
    for message in consumer:
  File "D:\python_pycharm\venv\lib\site-packages\kafka\vendor\six.py", line 561, in next
    return type(self).__next__(self)
  File "D:\python_pycharm\venv\lib\site-packages\kafka\consumer\group.py", line 1075, in __next__
    return next(self._iterator)
  File "D:\python_pycharm\venv\lib\site-packages\kafka\consumer\group.py", line 998, in _message_generator
    self._coordinator.ensure_coordinator_known()
  File "D:\python_pycharm\venv\lib\site-packages\kafka\coordinator\base.py", line 225, in ensure_coordinator_known
    raise future.exception  # pylint: disable-msg=raising-bad-type
kafka.errors.GroupAuthorizationFailedError: [Error 30] GroupAuthorizationFailedError: test-group
```

6. Do it all in one go: add the username and password to users.properties, grant the consumer Read on the topic, and grant Read on the group.

```shell
bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=xx.xx.xx.xx:2181 --add --allow-principal User:consumer_zzz --operation Read --topic test11
bin/kafka-acls.sh --authorizer kafka.security.auth.SimpleAclAuthorizer --authorizer-properties zookeeper.connect=xx.xx.xx.xx:2181 --add --allow-principal User:consumer_zzz --operation Read --group test-group
```

Consumption succeeds!

```
D:\python_pycharm\venv\Scripts\python.exe D:/python_pycharm/kafka_demo/kafka_consumer.py
test11:8:0: key=None value={"test": "1"}
test11:5:0: key=None value={"test": "1"}
test11:3:0: key=None value={"test": "1"}
test11:0:0: key=None value={"test": "1"}
test11:6:0: key=None value={"test": "1"}
test11:6:1: key=None value={"test": "1"}
test11:10:0: key=None value={"test": "1"}
test11:4:0: key=None value={"test": "1"}
test11:4:1: key=None value={"test": "1"}
```

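That essentially solves both the Kafka 2.7.1 setup and dynamic authentication. Whether this can go into production still needs further evaluation, and the source changes need a thorough review, but the basic flow now works end to end. It took about a week in total. Big win!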