Knative Eventing

Create the CRDs

[root@master ~]# kubectl apply -f https://github.com/knative/eventing/releases/download/knative-v1.7.4/eventing-crds.yaml
customresourcedefinition.apiextensions.k8s.io/apiserversources.sources.knative.dev created
customresourcedefinition.apiextensions.k8s.io/brokers.eventing.knative.dev created
customresourcedefinition.apiextensions.k8s.io/channels.messaging.knative.dev created
customresourcedefinition.apiextensions.k8s.io/containersources.sources.knative.dev created
customresourcedefinition.apiextensions.k8s.io/eventtypes.eventing.knative.dev created
customresourcedefinition.apiextensions.k8s.io/parallels.flows.knative.dev created
customresourcedefinition.apiextensions.k8s.io/pingsources.sources.knative.dev created
customresourcedefinition.apiextensions.k8s.io/sequences.flows.knative.dev created
customresourcedefinition.apiextensions.k8s.io/sinkbindings.sources.knative.dev created
customresourcedefinition.apiextensions.k8s.io/subscriptions.messaging.knative.dev created
customresourcedefinition.apiextensions.k8s.io/triggers.eventing.knative.dev created
[root@master ~]# kubectl api-resources --api-group=messaging.knative.dev
NAME            SHORTNAMES   APIVERSION                 NAMESPACED   KIND
channels        ch           messaging.knative.dev/v1   true         Channel
subscriptions   sub          messaging.knative.dev/v1   true         Subscription
[root@master ~]# kubectl api-resources --api-group=eventing.knative.dev
NAME         SHORTNAMES   APIVERSION                     NAMESPACED   KIND
brokers                   eventing.knative.dev/v1        true         Broker
eventtypes                eventing.knative.dev/v1beta1   true         EventType
triggers                  eventing.knative.dev/v1        true         Trigger

Install Eventing

[root@master ~]# kubectl apply -f https://github.com/knative/eventing/releases/download/knative-v1.7.4/eventing-core.yaml
[root@master ~]# kubectl get pods -n knative-eventing
NAME                                   READY   STATUS    RESTARTS   AGE
eventing-controller-865f8c98d4-xb2lk   1/1     Running   0          64s
eventing-webhook-84b9987ccb-p82vk      1/1     Running   0          64s

Install the in-memory channel

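If you only need one-to-many delivery without filtering, installing up to this step (the in-memory channel) is enough.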
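As a rough sketch of that unfiltered one-to-many pattern, assuming the in-memory channel installed by the next command and the event-display Service created later in this walkthrough: an InMemoryChannel fans every incoming event out to each Subscription attached to it. The names `demo-channel` and `demo-subscription` are hypothetical.

```yaml
# Hypothetical names; assumes the in-memory channel components are installed.
apiVersion: messaging.knative.dev/v1
kind: InMemoryChannel
metadata:
  name: demo-channel
---
# Each Subscription gets a copy of every event sent to demo-channel;
# adding more Subscriptions fans the same events out to more subscribers.
apiVersion: messaging.knative.dev/v1
kind: Subscription
metadata:
  name: demo-subscription
spec:
  channel:
    apiVersion: messaging.knative.dev/v1
    kind: InMemoryChannel
    name: demo-channel
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```

Producers then POST CloudEvents to the channel's address, which `kubectl get imc demo-channel` should show in its URL column. No filtering happens at this layer; every subscriber sees every event.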

[root@master ~]# kubectl apply -f https://github.com/knative/eventing/releases/download/knative-v1.7.4/in-memory-channel.yaml
[root@master ~]# kubectl get pods -n knative-eventing
NAME                                   READY   STATUS    RESTARTS   AGE
eventing-controller-865f8c98d4-xb2lk   1/1     Running   0          4m28s
eventing-webhook-84b9987ccb-p82vk      1/1     Running   0          4m28s
imc-controller-67964f548d-qjn9f        1/1     Running   0          114s
imc-dispatcher-8585c4c4bc-8llsd        1/1     Running   0          113s

Install mt-channel-broker

[root@master ~]# kubectl apply -f https://github.com/knative/eventing/releases/download/knative-v1.7.4/mt-channel-broker.yaml
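The multi-tenant channel-based broker adds filtering on top of channels. Events are sent into a Broker, and each Trigger selects events by their CloudEvents attributes. The sketch below assumes a Broker named `default` in the `default` namespace and the event-display Service created below. The Trigger name is hypothetical, and the filter value reuses the `Ce-Type` from the curl test later on.

```yaml
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: default
  annotations:
    # Selects the multi-tenant channel-based broker implementation installed above.
    eventing.knative.dev/broker.class: MTChannelBasedBroker
---
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: sayhi-to-event-display   # hypothetical name
spec:
  broker: default
  filter:
    attributes:
      # Only events whose CloudEvents "type" matches exactly are delivered.
      type: com.magedu.sayhievent
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```

A Trigger without a `filter` receives every event that reaches the Broker. Adding attribute filters is what separates this setup from the plain channel fan-out.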

[root@master ~]# kubectl get pods -n knative-eventing
NAME                                    READY   STATUS    RESTARTS   AGE
eventing-controller-865f8c98d4-xb2lk    1/1     Running   0          7m52s
eventing-webhook-84b9987ccb-p82vk       1/1     Running   0          7m52s
imc-controller-67964f548d-qjn9f         1/1     Running   0          5m18s
imc-dispatcher-8585c4c4bc-8llsd         1/1     Running   0          5m17s
mt-broker-controller-585768d967-hsjc6   1/1     Running   0          88s
mt-broker-filter-55f57b859f-rh5fk       1/1     Running   0          88s
mt-broker-ingress-844d685b8b-8xzsx      1/1     Running   0          88s

Create an example event receiver (event-display)

[root@master ~]# kn service create event-display --image ikubernetes/event_display --port 8080 --scale-min 1
Creating service 'event-display' in namespace 'default':
0.056s The Route is still working to reflect the latest desired specification.
0.070s ...
0.104s Configuration "event-display" is waiting for a Revision to become ready.
9.649s ...
9.726s Ingress has not yet been reconciled.
9.783s Waiting for load balancer to be ready
9.962s Ready to serve.
Service 'event-display' created to latest revision 'event-display-00001' is available at URL:
http://event-display.default.yang.com
[root@master ~]# kubectl get pods
NAME                                            READY   STATUS    RESTARTS   AGE
event-display-00001-deployment-bfbd9945-682c8   3/3     Running   0          17s
[root@master ~]# kubectl get vs
NAME                    GATEWAYS                                                                              HOSTS                                                                                                                                    AGE
event-display-ingress   ["knative-serving/knative-ingress-gateway","knative-serving/knative-local-gateway"]   ["event-display.default","event-display.default.svc","event-display.default.svc.cluster.local","event-display.default.yang.com"]   23s
event-display-mesh      ["mesh"]                                                                              ["event-display.default","event-display.default.svc","event-display.default.svc.cluster.local"]                                        23s
hello.ik8s.io-ingress   ["knative-serving/knative-ingress-gateway"]                                           ["hello.ik8s.io"]                                                                                                                        28h
www.ik8s.io-ingress     ["knative-serving/knative-ingress-gateway"]                                           ["www.ik8s.io"]                                                                                                                          25h

Create a domain mapping (this relies on automatic ClusterDomainClaim creation; how to enable it was covered in an earlier chapter)

[root@master ~]# kn domain create event.yang.com --ref "ksvc:event-display"
Domain mapping 'event.yang.com' created in namespace 'default'.
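The `kn domain create` command above should correspond roughly to the declarative DomainMapping below. This assumes the `serving.knative.dev/v1beta1` DomainMapping API shipped with Knative Serving 1.x. If in doubt, check `kubectl api-resources --api-group=serving.knative.dev` on your cluster.

```yaml
apiVersion: serving.knative.dev/v1beta1
kind: DomainMapping
metadata:
  # The object's name is the custom domain itself.
  name: event.yang.com
  namespace: default
spec:
  ref:
    # Equivalent to --ref "ksvc:event-display".
    apiVersion: serving.knative.dev/v1
    kind: Service
    name: event-display
```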

[root@master ~]# kn domain list
NAME             URL                     READY   KSVC
event.yang.com   http://event.yang.com   True    event-display

Call the service from outside the cluster with curl

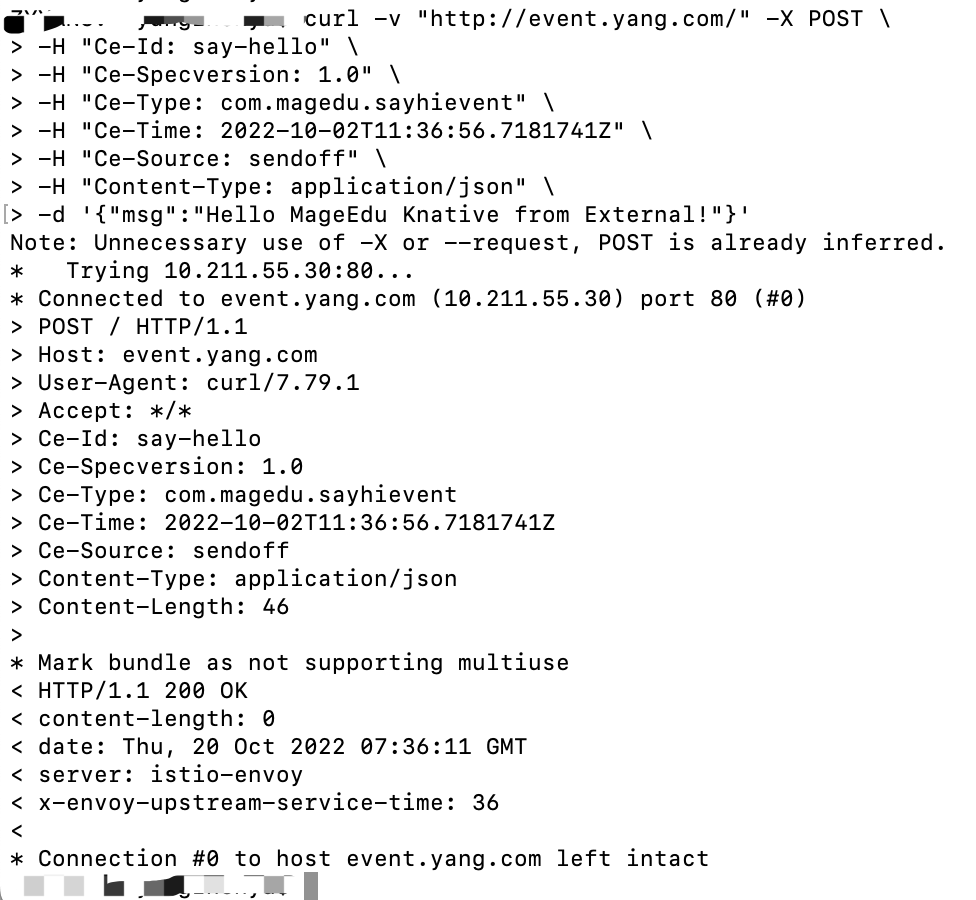

curl -v "http://event.yang.com/" -X POST \
  -H "Ce-Id: say-hello" \
  -H "Ce-Specversion: 1.0" \
  -H "Ce-Type: com.magedu.sayhievent" \
  -H "Ce-Time: 2022-10-02T11:36:56.7181741Z" \
  -H "Ce-Source: sendoff" \
  -H "Content-Type: application/json" \
  -d '{"msg":"Hello MageEdu Knative from External!"}'

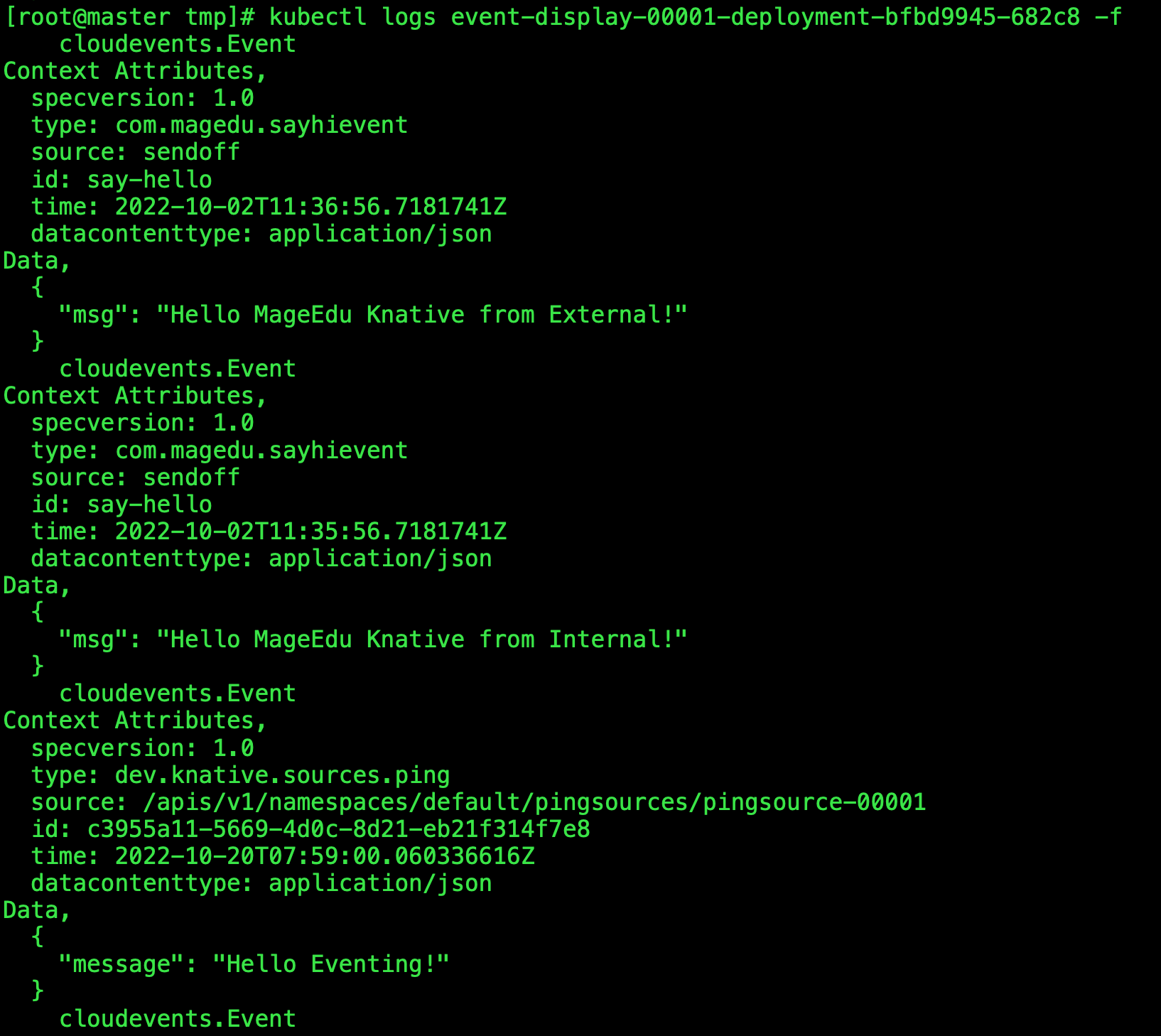

Call the service from inside the cluster (from a client Pod)

root@client-17589 # curl -v "http://event-display.default.svc.cluster.local" -X POST \
> -H "Ce-Id: say-hello" \
> -H "Ce-Specversion: 1.0" \
> -H "Ce-Type: com.magedu.sayhievent" \
> -H "Ce-Time: 2022-10-02T11:35:56.7181741Z" \
> -H "Ce-Source: sendoff" \
> -H "Content-Type: application/json" \
> -d '{"msg":"Hello MageEdu Knative from Internal!"}'
Note: Unnecessary use of -X or --request, POST is already inferred.
* Trying 10.111.234.134:80...
* TCP_NODELAY set
* Connected to event-display.default.svc.cluster.local (10.111.234.134) port 80 (#0)
> POST / HTTP/1.1
> Host: event-display.default.svc.cluster.local
> User-Agent: curl/7.67.0
> Accept: */*
> Ce-Id: say-hello
> Ce-Specversion: 1.0
> Ce-Type: com.magedu.sayhievent
> Ce-Time: 2022-10-02T11:35:56.7181741Z
> Ce-Source: sendoff
> Content-Type: application/json
> Content-Length: 46
>
* upload completely sent off: 46 out of 46 bytes
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-length: 0
< date: Thu, 20 Oct 2022 07:43:45 GMT
< server: envoy
< x-envoy-upstream-service-time: 21
<
* Connection #0 to host event-display.default.svc.cluster.local left intact

Source-to-sink messaging in practice: PingSource

[root@master tmp]# kubectl apply -f 03-pingsource-to-event-display.yaml
pingsource.sources.knative.dev/pingsource-00001 created
[root@master tmp]# cat 03-pingsource-to-event-display.yaml
---
apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
  name: pingsource-00001
spec:
  schedule: "* * * * *"
  contentType: "application/json"
  data: '{"message": "Hello Eventing!"}'
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
[root@master tmp]# kubectl get sources
NAME                                              SINK                                             SCHEDULE    AGE   READY   REASON
pingsource.sources.knative.dev/pingsource-00001   http://event-display.default.svc.cluster.local   * * * * *   56s   True

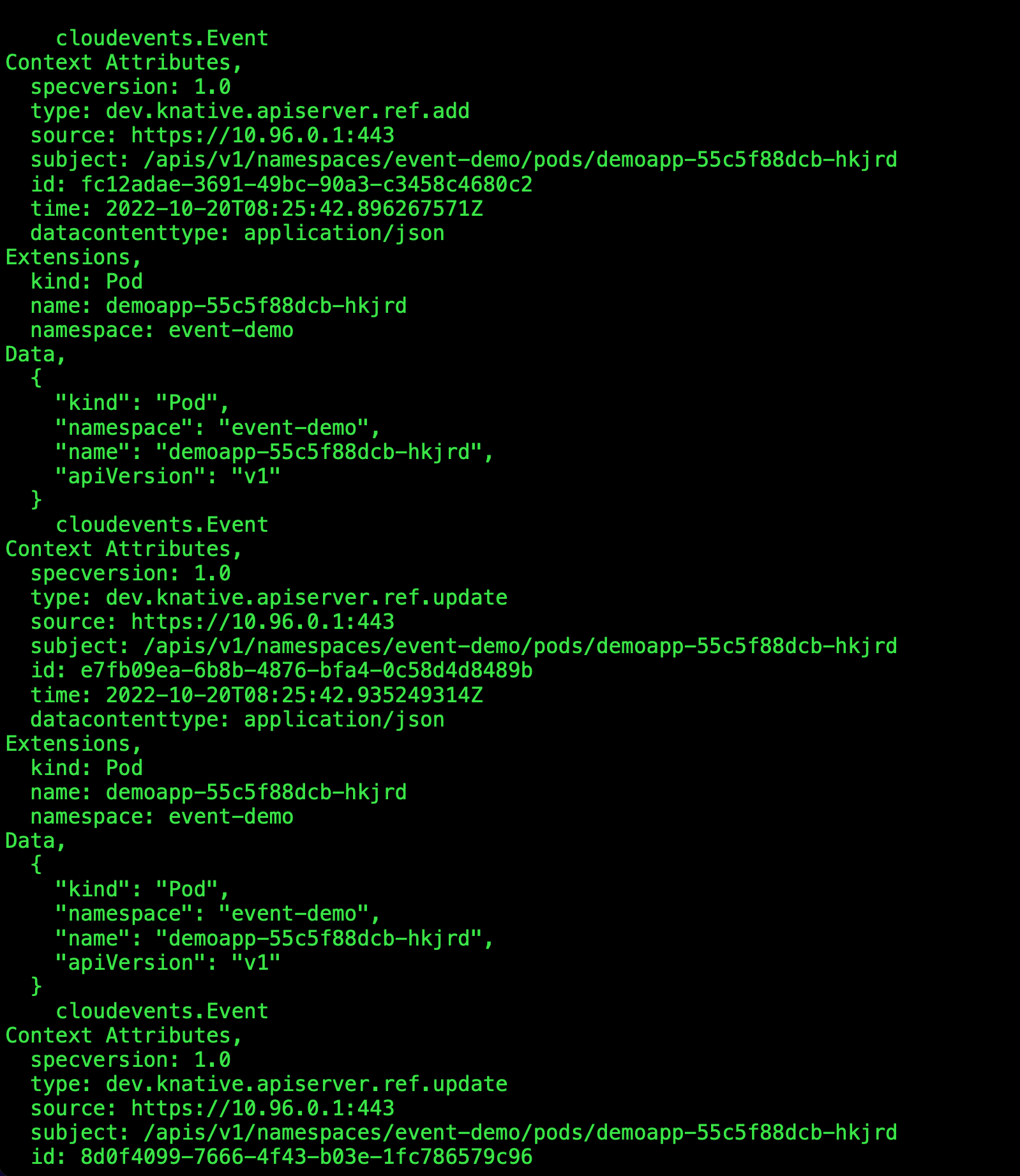

[root@master tmp]# kubectl logs event-display-00001-deployment-bfbd9945-682c8 -f
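The `* * * * *` schedule fires once a minute. That is convenient for a demo but noisy. The sketch below shows a less chatty variant. It assumes the optional PingSource `timezone` field (an IANA zone name) is available in this release. The name, schedule, zone, and payload are illustrative.

```yaml
apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
  name: pingsource-weekday-morning   # hypothetical name
spec:
  # Five-field cron (minute hour day-of-month month day-of-week): 09:00, Monday to Friday.
  schedule: "0 9 * * 1-5"
  # Evaluate the schedule in this IANA time zone rather than the default.
  timezone: "Asia/Shanghai"
  contentType: "application/json"
  data: '{"message": "Good morning, Eventing!"}'
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```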

The ContainerSource type

[root@master 03-containersource-to-knative-service]# kubectl apply -f 03-containersource-to-event-display.yaml
containersource.sources.knative.dev/containersource-heartbeat created
[root@master 03-containersource-to-knative-service]# cat 03-containersource-to-event-display.yaml
apiVersion: sources.knative.dev/v1
kind: ContainerSource
metadata:
  name: containersource-heartbeat
spec:
  template:
    spec:
      containers:
        - image: ikubernetes/containersource-heartbeats:latest
          name: heartbeats
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display

ApiServerSource

[root@master 04-apiserversource-to-knative-service]# kubectl apply -f .
namespace/event-demo created
service.serving.knative.dev/event-display created
serviceaccount/pod-watcher created
role.rbac.authorization.k8s.io/pod-reader created
rolebinding.rbac.authorization.k8s.io/pod-reader created
apiserversource.sources.knative.dev/pods-event created
[root@master 04-apiserversource-to-knative-service]# cat 01-namespace.yaml
kind: Namespace
apiVersion: v1
metadata:
  name: event-demo
---

[root@master 04-apiserversource-to-knative-service]# cat 02-kservice-event-display.yaml
---
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: event-display
  namespace: event-demo
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/min-scale: "1"
    spec:
      containers:
        - image: ikubernetes/event_display
          ports:
            - containerPort: 8080

[root@master 04-apiserversource-to-knative-service]# cat 03-serviceaccount-and-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-watcher
  namespace: event-demo
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: event-demo
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader
  namespace: event-demo
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-reader
subjects:
  - kind: ServiceAccount
    name: pod-watcher
    namespace: event-demo

[root@master 04-apiserversource-to-knative-service]# cat 04-ApiServerSource-to-knative-service.yaml
apiVersion: sources.knative.dev/v1
kind: ApiServerSource
metadata:
  name: pods-event
  namespace: event-demo
spec:
  serviceAccountName: pod-watcher
  mode: Reference
  resources:
    - apiVersion: v1
      kind: Pod
      #selector:
      #  matchLabels:
      #    app: demoapp
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display

[root@master 02-pingsource-to-knative-service]# kubectl get pods -n event-demo
NAME                                                              READY   STATUS    RESTARTS   AGE
apiserversource-pods-event-77b81aa9-01ad-40a3-bdf2-dab8e909kxpf   1/1     Running   0          110s
event-display-00001-deployment-785fb8877d-7zb25                   2/2     Running   0          112s
[root@master 02-pingsource-to-knative-service]# kubectl get ksvc -n event-demo
NAME            URL                                        LATESTCREATED         LATESTREADY           READY   REASON
event-display   http://event-display.event-demo.yang.com   event-display-00001   event-display-00001   True

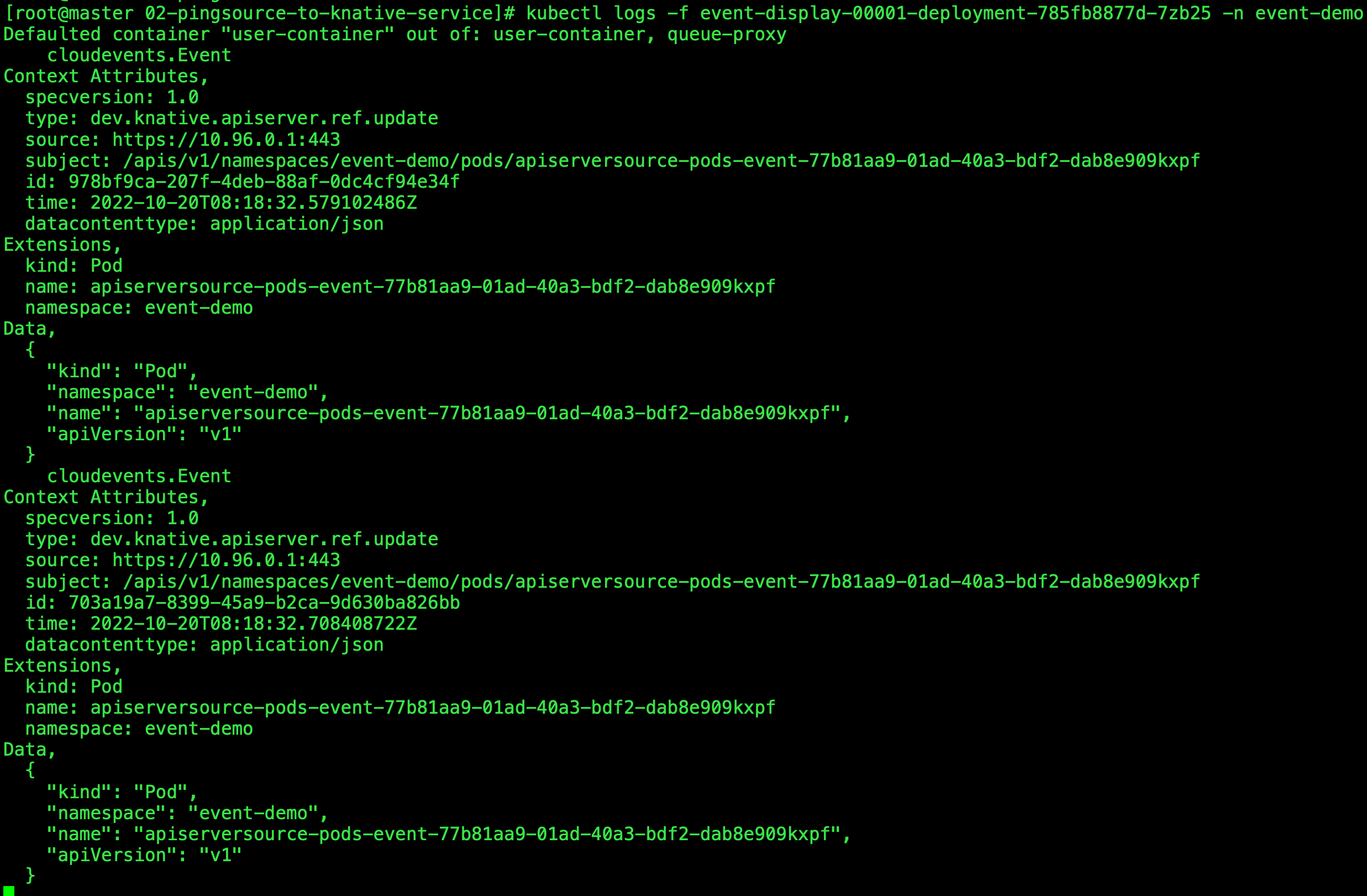

[root@master 02-pingsource-to-knative-service]# kubectl logs -f event-display-00001-deployment-785fb8877d-7zb25 -n event-demo

Creating a new Pod produces a new event in the event-display log, and deleting a Pod produces one as well.

[root@master 04-apiserversource-to-knative-service]# kubectl create deployment demoapp --image ikubernetes/demoapp:v1.0 -n event-demo
deployment.apps/demoapp created
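With the selector commented out, the source reports events for every Pod in `event-demo`, including the event-display Pods themselves. The sketch below narrows it by enabling that commented-out selector, so only Pods labelled `app=demoapp` are reported. `kubectl create deployment demoapp` should label its Pods that way. The source name `demoapp-pods-event` is hypothetical, and it reuses the `pod-watcher` ServiceAccount from above.

```yaml
apiVersion: sources.knative.dev/v1
kind: ApiServerSource
metadata:
  name: demoapp-pods-event   # hypothetical name
  namespace: event-demo
spec:
  serviceAccountName: pod-watcher
  # Reference mode sends only a reference to the changed object;
  # Resource mode would send the full object in the event body.
  mode: Reference
  resources:
    - apiVersion: v1
      kind: Pod
      # Only Pods carrying this label generate events.
      selector:
        matchLabels:
          app: demoapp
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```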