Ceph CRUSH: Advanced Topics

Check the current state

ceph osd df

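WEIGHT: the relative capacity of a device. For example, if 1 TB corresponds to 1.00, a 500 GB OSD should have a weight of 0.5. CRUSH uses the weight to allocate PGs in proportion to disk capacity, so OSDs with more space receive more PGs and OSDs with less space receive fewer.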
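As a rough illustration of how a weight relates to capacity, the sketch below converts a size in GiB into a weight, assuming the binary-unit convention in which 1 TiB maps to 1.00. The helper name `crush_weight_from_gib` is made up for this example and is not a Ceph command. Under that assumption a 500 GiB disk comes out slightly below the rounded 0.5 quoted above.

```shell
#!/bin/sh
# crush_weight_from_gib: hypothetical helper, not part of Ceph.
# Converts a capacity in GiB to a CRUSH weight, assuming 1 TiB (1024 GiB) = 1.00.
crush_weight_from_gib() {
    awk -v gib="$1" 'BEGIN { printf "%.3f\n", gib / 1024 }'
}

crush_weight_from_gib 1024   # a 1 TiB device
crush_weight_from_gib 500    # a 500 GiB device
```

The resulting value is what one would pass to `ceph osd crush reweight osd.N <weight>` if the weight had to be set by hand.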

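REWEIGHT: rebalances PGs that CRUSH has already placed. The value ranges from 0 to 1. Default placement is balanced only in a probabilistic sense, so PGs can be unevenly distributed even when every OSD has the same capacity. Adjusting an OSD's reweight value makes the cluster immediately rebalance the PGs on that OSD so that data is spread more evenly. In short, WEIGHT drives the initial allocation, while REWEIGHT redistributes PGs after they have been assigned.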
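To get a feel for the effect of the two values together, the sketch below uses a deliberately simplified model: each OSD's expected share of PGs is taken to be proportional to weight multiplied by reweight. This ignores replica counts and failure-domain constraints, so treat the numbers as an approximation only. The function name `expected_shares` and the three sample OSDs are assumptions for illustration.

```shell
#!/bin/sh
# expected_shares: hypothetical helper, not part of Ceph.
# Reads "NAME WEIGHT REWEIGHT" lines on stdin and prints each OSD's approximate
# share of PGs, modelled as (weight * reweight) / sum of all (weight * reweight).
expected_shares() {
    awk '
        { name[NR] = $1; eff[NR] = $2 * $3; total += eff[NR] }
        END { for (i = 1; i <= NR; i++) printf "%s %.3f\n", name[i], eff[i] / total }
    '
}

# Three equally sized OSDs; osd.1 has been reweighted down to 0.8.
printf 'osd.0 1.0 1.0\nosd.1 1.0 0.8\nosd.2 1.0 1.0\n' | expected_shares
```

In this model lowering the reweight of one OSD shifts part of its share to the others, which matches the intent of `ceph osd reweight`.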

Modify WEIGHT and verify

ceph osd crush reweight osd.10 1.5

Modify REWEIGHT

ceph osd reweight 1 0.8

CRUSH map management

Export the CRUSH map (as root)

mkdir /data/ceph -p

ceph osd getcrushmap -o /data/ceph/crushmap-v1

apt install ceph-base # provides the crushtool command

crushtool -d /data/ceph/crushmap-v1 > /data/ceph/crushmap-v1.txt

file /data/ceph/crushmap-v1.txt

head /data/ceph/crushmap-v1.txt -n 30

vim /data/ceph/crushmap-v1.txt # make custom changes

crushtool -c /data/ceph/crushmap-v1.txt -o /data/ceph/crushmap-v2 # compile the text into CRUSH binary format

ceph osd setcrushmap -i /data/ceph/crushmap-v2 # import the new CRUSH map

ceph osd crush rule dump # verify that the CRUSH map took effect

CRUSH data classification management

crushtool -d /data/ceph/crushmap-v3 > /data/ceph/crushmap-v3.txt # decompile the map to text; keep a backup copy before adding custom configuration

Assume each server has one SSD OSD: osd.0, osd.5, osd.10, osd.15

#magedu ssd node

host ceph-ssdnode1 {

id -103 # do not change unnecessarily

id -104 class hdd # do not change unnecessarily

# weight 0.098

alg straw2

hash 0 # rjenkins1

item osd.0 weight 0.098

}

host ceph-ssdnode2 {

id -105 # do not change unnecessarily

id -106 class hdd # do not change unnecessarily

# weight 0.098

alg straw2

hash 0 # rjenkins1

item osd.5 weight 0.098

}

host ceph-ssdnode3 {

id -107 # do not change unnecessarily

id -108 class hdd # do not change unnecessarily

# weight 0.098

alg straw2

hash 0 # rjenkins1

item osd.10 weight 0.098

}

host ceph-ssdnode4 {

id -109 # do not change unnecessarily

id -110 class hdd # do not change unnecessarily

# weight 0.098

alg straw2

hash 0 # rjenkins1

item osd.15 weight 0.098

}

#magedu bucket

root ssd {

id -127 # do not change unnecessarily

id -11 class hdd # do not change unnecessarily

# weight 1.952

alg straw

hash 0 # rjenkins1

item ceph-ssdnode1 weight 0.488

item ceph-ssdnode2 weight 0.488

item ceph-ssdnode3 weight 0.488

item ceph-ssdnode4 weight 0.488

}

#magedu rules

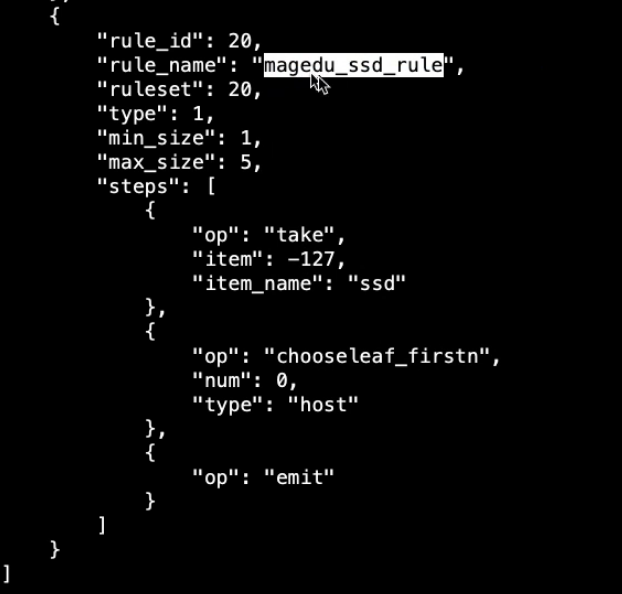

rule magedu_ssd_rule {

id 20

type replicated

min_size 1

max_size 5

step take ssd

step chooseleaf firstn 0 type host

step emit

}

Convert to CRUSH binary format

crushtool -c /data/ceph/crushmap-v3.txt -o /data/ceph/crushmap-v4

Import the new CRUSH map

ceph osd setcrushmap -i /data/ceph/crushmap-v4

Verify that the CRUSH map took effect

ceph osd crush rule dump
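The compile, import, and verify steps above can be wrapped in a small script. The sketch below is one possible arrangement, not a standard tool: `run` and `apply_crushmap` are hypothetical helper names, and the `DRY_RUN` variable only prints the commands instead of executing them. It also adds an optional offline check with `crushtool --test` before the import; the replica count of 3 passed to `--num-rep` is an assumption, since these notes do not state the pool size.

```shell
#!/bin/sh
# run: hypothetical helper. Executes a command, or only prints it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# apply_crushmap: hypothetical helper.
# $1 = edited text map, $2 = compiled output map, $3 = rule id to simulate
apply_crushmap() {
    # Compile the edited text map into binary form.
    run crushtool -c "$1" -o "$2" || return 1
    # Simulate the rule offline before touching the cluster (3 replicas assumed).
    run crushtool -i "$2" --test --rule "$3" --num-rep 3 --show-mappings || return 1
    # Import the compiled map and dump the rules to confirm the change.
    run ceph osd setcrushmap -i "$2" || return 1
    run ceph osd crush rule dump
}

# Print the commands without executing them.
DRY_RUN=1 apply_crushmap /data/ceph/crushmap-v3.txt /data/ceph/crushmap-v4 20
```

Running it once with `DRY_RUN=1` gives a chance to review the exact commands before they change the live cluster.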

Test by creating a storage pool

ceph osd pool create magedu-ssdpool 32 32 magedu_ssd_rule

Verify the PGP status

ceph pg ls-by-pool magedu-ssdpool | awk '{print $1,$2,$15}'
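Reading 32 acting sets by eye is error-prone, so the check can be automated. The sketch below assumes the three-column input produced by the awk command above (PG id, object count, acting set such as `[0,5,10]p0`) and reports any PG that uses an OSD outside the allowed list. `check_acting` is a hypothetical helper name, the sample PG ids are invented for the demonstration, and the column position of the acting set can differ between Ceph releases.

```shell
#!/bin/sh
# check_acting: hypothetical helper, not part of Ceph.
# $1 = space-separated list of allowed OSD ids
# stdin = "PGID OBJECTS ACTING" lines, e.g. "13.0 0 [0,5,10]p0"
# Prints every PG that uses an OSD outside the list; exit status 1 if any was found.
check_acting() {
    awk -v allowed="$1" '
        BEGIN { n = split(allowed, a, " "); for (i = 1; i <= n; i++) ok[a[i]] = 1 }
        $3 ~ /^\[/ {
            set = $3
            sub(/^\[/, "", set)      # drop the leading "["
            sub(/\].*$/, "", set)    # drop "]" and the primary marker, e.g. "]p0"
            m = split(set, ids, ",")
            for (i = 1; i <= m; i++)
                if (!(ids[i] in ok)) { print $1, "uses osd." ids[i]; bad = 1 }
        }
        END { exit (bad ? 1 : 0) }
    '
}

# Invented sample input; on a real cluster, pipe in:
#   ceph pg ls-by-pool magedu-ssdpool | awk '{print $1,$2,$15}'
printf '13.0 0 [0,5,10]p0\n13.1 0 [15,0,5]p15\n' \
    | check_acting "0 5 10 15" && echo "all PGs are on SSD OSDs"
```

Header and footer lines are skipped because their third field does not start with `[`.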