Ceph Command Summary

List pool names only
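`ceph osd pool ls` prints exactly one pool name per line, so a script can test for a pool by matching whole lines. This is a minimal sketch that assumes a POSIX shell with `grep`. `pool_exists` is a hypothetical helper, not a Ceph command.

```shell
# pool_exists NAME
# Reads `ceph osd pool ls` output on stdin and succeeds only if NAME
# appears as a whole line (fixed-string, exact-line match).
# Hypothetical helper, not part of the Ceph CLI.
pool_exists() {
  grep -qxF -- "$1"
}
```

Example: `ceph osd pool ls | pool_exists mypool && echo "mypool exists"`. Exact-line matching keeps `myrbd` from matching `myrbd1`. Fixed-string matching means names containing dots, such as `.rgw.root`, are not treated as regular expressions.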

cephadmin@deploy:~$ ceph osd pool ls

device_health_metrics

mypool

myrbd1

.rgw.root

default.rgw.log

default.rgw.control

default.rgw.meta

cephfs-metadata

cephfs-data

List pools with their IDs
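`ceph osd lspools` prints one `ID NAME` pair per line. That makes it a convenient source for looking up a pool's numeric ID. This is a minimal sketch that assumes the two-column layout shown in the output below. `pool_id` is a hypothetical helper.

```shell
# pool_id NAME
# Reads `ceph osd lspools` output ("ID NAME" per line) on stdin and prints
# the numeric ID of the pool called NAME; exits 1 if no pool matches.
# Hypothetical helper, not part of the Ceph CLI.
pool_id() {
  awk -v name="$1" '
    $2 == name { print $1; found = 1; exit }
    END        { exit !found }
  '
}
```

Example: `ceph osd lspools | pool_id myrbd1`. The pool ID is also the prefix of every PG ID in that pool. For instance, the PG `3.e` listed under `pg_upmap_items` in the `ceph osd dump` output belongs to pool 3 (`myrbd1`).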

cephadmin@deploy:~$ ceph osd lspools

1 device_health_metrics

2 mypool

3 myrbd1

4 .rgw.root

5 default.rgw.log

6 default.rgw.control

7 default.rgw.meta

8 cephfs-metadata

9 cephfs-data

Show PG status
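`ceph pg stat` condenses PG health into one line. It gives the total PG count, followed by a comma-separated list of `<count> <state>` entries that ends at the first `;`. This is a minimal sketch of a health check built on that layout. It assumes the format seen in the output below, which may differ between releases. `pgs_all_clean` is a hypothetical helper.

```shell
# pgs_all_clean
# Reads the one-line `ceph pg stat` summary on stdin, e.g.
#   297 pgs: 297 active+clean; 11 MiB data, ...
# Succeeds only if the state list before the first ";" is a single
# "<N> active+clean" entry whose N equals the total PG count.
# Hypothetical helper; assumes the plain-text format shown below.
pgs_all_clean() {
  awk 'NR == 1 {
    total = $1
    states = $0
    sub(/^[^:]*: */, "", states)   # drop the leading "297 pgs: "
    sub(/;.*/, "", states)         # keep only the state list
    ok = (states == (total " active+clean"))
  }
  END { exit !ok }'
}
```

Example: `ceph pg stat | pgs_all_clean || echo "some PGs are not active+clean"`. Empty input counts as a failure, so a `ceph` command that prints nothing does not pass silently.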

cephadmin@deploy:~$ ceph pg stat

297 pgs: 297 active+clean; 11 MiB data, 290 MiB used, 600 GiB / 600 GiB avail; 170 B/s rd, 2.0 KiB/s wr, 1 op/s

Show the status of one pool or of all pools
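If you omit the pool name (`ceph osd pool stats`), Ceph reports every pool in turn. Each pool appears as a `pool NAME id N` header followed by indented detail lines, and an idle pool reports `nothing is going on`, as `mypool` does below. This is a minimal sketch that lists only the pools showing activity, assuming that layout. `busy_pools` is hypothetical. It keys only on the idle marker, so it does not depend on the exact wording of the activity lines.

```shell
# busy_pools
# Reads `ceph osd pool stats` output on stdin (a "pool NAME id N" header
# per pool followed by indented detail lines) and prints the name of
# every pool whose details do not say "nothing is going on".
# Hypothetical helper; assumes the plain-text layout described above.
busy_pools() {
  awk '
    function flush() { if (name != "" && !idle) print name }
    $1 == "pool" && $3 == "id" { flush(); name = $2; idle = 0; next }
    /nothing is going on/      { idle = 1 }
    END                        { flush() }
  '
}
```

Example: `ceph osd pool stats | busy_pools`.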

cephadmin@deploy:~$ ceph osd pool stats mypool

pool mypool id 2

nothing is going on

Show cluster storage usage
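In the `RAW STORAGE` section of `ceph df`, the last field of the `TOTAL` row is `%RAW USED`. That value is a percentage, so `0.05` means 0.05%. This is a minimal sketch of a capacity alarm on that field, assuming the plain-text layout shown below. `raw_used_above` is a hypothetical helper.

```shell
# raw_used_above THRESHOLD
# Reads `ceph df` output on stdin and succeeds if the %RAW USED value
# (last field of the TOTAL row) is greater than THRESHOLD percent.
# Exits 2 if no TOTAL row is found. Hypothetical helper.
raw_used_above() {
  awk -v limit="$1" '
    $1 == "TOTAL" { pct = $NF; found = 1 }
    END {
      if (!found) exit 2
      exit !(pct + 0 > limit + 0)
    }'
}
```

Example: `ceph df | raw_used_above 85 && echo "raw usage above 85%"`. The 85 mirrors the `nearfull_ratio 0.85` that `ceph osd dump` reports for this cluster. That ratio is enforced per OSD, though, so a cluster-wide total is only a coarse early warning.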

cephadmin@deploy:~$ ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 600 GiB 600 GiB 291 MiB 291 MiB 0.05

TOTAL 600 GiB 600 GiB 291 MiB 291 MiB 0.05

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

device_health_metrics 1 1 0 B 0 0 B 0 190 GiB

mypool 2 32 0 B 0 0 B 0 190 GiB

myrbd1 3 64 10 MiB 17 31 MiB 0 190 GiB

.rgw.root 4 32 1.3 KiB 4 48 KiB 0 190 GiB

default.rgw.log 5 32 3.6 KiB 177 408 KiB 0 190 GiB

default.rgw.control 6 32 0 B 8 0 B 0 190 GiB

default.rgw.meta 7 8 0 B 0 0 B 0 190 GiB

cephfs-metadata 8 32 583 KiB 23 1.8 MiB 0 190 GiB

cephfs-data 9 64 2.7 KiB 2 20 KiB 0 190 GiB

Show detailed cluster storage usage

cephadmin@deploy:~$ ceph df detail

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 600 GiB 600 GiB 292 MiB 292 MiB 0.05

TOTAL 600 GiB 600 GiB 292 MiB 292 MiB 0.05

--- POOLS ---

POOL ID PGS STORED (DATA) (OMAP) OBJECTS USED (DATA) (OMAP) %USED MAX AVAIL QUOTA OBJECTS QUOTA BYTES DIRTY USED COMPR UNDER COMPR

device_health_metrics 1 1 0 B 0 B 0 B 0 0 B 0 B 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

mypool 2 32 0 B 0 B 0 B 0 0 B 0 B 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

myrbd1 3 64 10 MiB 10 MiB 0 B 17 31 MiB 31 MiB 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

.rgw.root 4 32 1.3 KiB 1.3 KiB 0 B 4 48 KiB 48 KiB 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

default.rgw.log 5 32 3.6 KiB 3.6 KiB 0 B 177 408 KiB 408 KiB 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

default.rgw.control 6 32 0 B 0 B 0 B 8 0 B 0 B 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

default.rgw.meta 7 8 0 B 0 B 0 B 0 0 B 0 B 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

cephfs-metadata 8 32 624 KiB 624 KiB 0 B 23 1.9 MiB 1.9 MiB 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

cephfs-data 9 64 1.3 KiB 1.3 KiB 0 B 2 16 KiB 16 KiB 0 B 0 190 GiB N/A N/A N/A 0 B 0 B

Show OSD status
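`ceph osd stat` reports the total number of OSDs and how many of them are `up` (running) and `in` (holding data). A healthy cluster has all three counts equal. This is a minimal sketch of that check, assuming the one-line format shown below. `osds_all_up_in` is a hypothetical helper.

```shell
# osds_all_up_in
# Reads the one-line `ceph osd stat` summary on stdin, e.g.
#   12 osds: 12 up (since 3h), 12 in (since 3h); epoch: e229
# Succeeds only if the "up" and "in" counts both equal the OSD total.
# Hypothetical helper; assumes the plain-text format shown below.
osds_all_up_in() {
  awk 'NR == 1 {
    total = $1
    for (i = 2; i <= NF; i++) {
      f = $i
      sub(/,$/, "", f)                 # tolerate "up," in terser formats
      if (f == "up") n_up = $(i - 1)   # count precedes the keyword
      if (f == "in") n_in = $(i - 1)
    }
    ok = (total != "" && n_up == total && n_in == total)
  }
  END { exit !ok }'
}
```

Example: `ceph osd stat | osds_all_up_in || echo "some OSDs are down or out"`.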

cephadmin@deploy:~$ ceph osd stat

12 osds: 12 up (since 3h), 12 in (since 3h); epoch: e229

Show low-level OSD details (the full OSD map)
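Each `pool` line in the `ceph osd dump` output packs the pool's settings into keyword/value pairs such as `size 3 min_size 2 ... pg_num 32`. This is a minimal sketch that pulls out the replication and PG settings per pool, assuming that layout. `pool_summary` is a hypothetical helper.

```shell
# pool_summary
# Reads `ceph osd dump` output on stdin and, for every "pool ..." line,
# prints: ID NAME SIZE MIN_SIZE PG_NUM (read from the keyword/value pairs).
# Hypothetical helper; assumes the plain-text layout shown below.
pool_summary() {
  awk -v sq="'" '$1 == "pool" {
    name = $3
    gsub(sq, "", name)                  # strip the quotes around the name
    size = min = pgs = "?"
    for (i = 4; i < NF; i++) {
      if ($i == "size")     size = $(i + 1)
      if ($i == "min_size") min  = $(i + 1)
      if ($i == "pg_num")   pgs  = $(i + 1)
    }
    print $2, name, size, min, pgs
  }'
}
```

Example: `ceph osd dump | pool_summary`. For the cluster below, this should list every pool as `size 3`, `min_size 2`, with `pg_num` values from 1 to 64.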

cephadmin@deploy:~$ ceph osd dump

epoch 229

fsid dc883878-2675-4786-b000-ae9b68ab3098

created 2022-09-07T09:59:06.166200+0800

modified 2022-09-07T13:33:50.062279+0800

flags sortbitwise,recovery_deletes,purged_snapdirs,pglog_hardlimit

crush_version 25

full_ratio 0.95

backfillfull_ratio 0.9

nearfull_ratio 0.85

require_min_compat_client luminous

min_compat_client luminous

require_osd_release pacific

stretch_mode_enabled false

pool 1 'device_health_metrics' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 1 pgp_num 1 autoscale_mode on last_change 18 flags hashpspool stripe_width 0 pg_num_max 32 pg_num_min 1 application mgr_devicehealth

pool 2 'mypool' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 69 flags hashpspool stripe_width 0

pool 3 'myrbd1' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 autoscale_mode on last_change 78 flags hashpspool,selfmanaged_snaps stripe_width 0 application rbd

pool 4 '.rgw.root' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 83 flags hashpspool stripe_width 0 application rgw

pool 5 'default.rgw.log' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 85 flags hashpspool stripe_width 0 application rgw

pool 6 'default.rgw.control' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 87 flags hashpspool stripe_width 0 application rgw

pool 7 'default.rgw.meta' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 8 pgp_num 8 autoscale_mode on last_change 218 lfor 0/218/216 flags hashpspool stripe_width 0 pg_autoscale_bias 4 pg_num_min 8 application rgw

pool 8 'cephfs-metadata' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 228 flags hashpspool stripe_width 0 pg_autoscale_bias 4 pg_num_min 16 recovery_priority 5 application cephfs

pool 9 'cephfs-data' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 64 pgp_num 64 autoscale_mode on last_change 228 flags hashpspool stripe_width 0 application cephfs

max_osd 12

osd.0 up in weight 1 up_from 5 up_thru 223 down_at 0 last_clean_interval [0,0) [v2:10.0.0.10:6800/34644,v1:10.0.0.10:6801/34644] [v2:10.0.0.10:6802/34644,v1:10.0.0.10:6803/34644] exists,up 7ae301a0-f311-4a42-ae34-60474187b297

osd.1 up in weight 1 up_from 10 up_thru 223 down_at 0 last_clean_interval [0,0) [v2:10.0.0.10:6808/36585,v1:10.0.0.10:6809/36585] [v2:10.0.0.10:6810/36585,v1:10.0.0.10:6811/36585] exists,up 3f8a2755-1e7b-46da-8849-ff7ec630ab02

osd.2 up in weight 1 up_from 15 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.10:6816/38548,v1:10.0.0.10:6817/38548] [v2:10.0.0.10:6818/38548,v1:10.0.0.10:6819/38548] exists,up e49e457d-26e6-425d-b5ff-7b00cee51642

osd.3 up in weight 1 up_from 21 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.3:6800/14471,v1:10.0.0.3:6801/14471] [v2:10.0.0.3:6802/14471,v1:10.0.0.3:6803/14471] exists,up 02d2ea8d-481e-4ca2-8a70-e9b1a968b4e3

osd.4 up in weight 1 up_from 26 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.3:6808/16401,v1:10.0.0.3:6809/16401] [v2:10.0.0.3:6810/16401,v1:10.0.0.3:6811/16401] exists,up 714fd355-2581-4ce2-a7cf-f66c94ca8985

osd.5 up in weight 1 up_from 31 up_thru 223 down_at 0 last_clean_interval [0,0) [v2:10.0.0.3:6816/18347,v1:10.0.0.3:6817/18347] [v2:10.0.0.3:6818/18347,v1:10.0.0.3:6819/18347] exists,up 71700419-3f3b-435d-a037-3464f0657ace

osd.6 up in weight 1 up_from 36 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.2:6800/14191,v1:10.0.0.2:6801/14191] [v2:10.0.0.2:6802/14191,v1:10.0.0.2:6803/14191] exists,up 431db8ff-f9dd-4f3f-9964-8586f45e9458

osd.7 up in weight 1 up_from 42 up_thru 223 down_at 0 last_clean_interval [0,0) [v2:10.0.0.2:6808/16107,v1:10.0.0.2:6809/16107] [v2:10.0.0.2:6810/16107,v1:10.0.0.2:6811/16107] exists,up ac357d98-48f8-4b22-9a77-1815753b2750

osd.8 up in weight 1 up_from 48 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.2:6816/18017,v1:10.0.0.2:6817/18017] [v2:10.0.0.2:6818/18017,v1:10.0.0.2:6819/18017] exists,up e9e491fe-8648-461c-b4d0-bd3dca9249b6

osd.9 up in weight 1 up_from 53 up_thru 223 down_at 0 last_clean_interval [0,0) [v2:10.0.0.12:6800/14219,v1:10.0.0.12:6801/14219] [v2:10.0.0.12:6802/14219,v1:10.0.0.12:6803/14219] exists,up b0690625-1217-4948-b2bd-ef096ef7d27f

osd.10 up in weight 1 up_from 59 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.12:6808/16134,v1:10.0.0.12:6809/16134] [v2:10.0.0.12:6810/16134,v1:10.0.0.12:6811/16134] exists,up e7ff21fa-2d50-43fd-bb33-dec1911b04f6

osd.11 up in weight 1 up_from 64 up_thru 226 down_at 0 last_clean_interval [0,0) [v2:10.0.0.12:6816/18070,v1:10.0.0.12:6817/18070] [v2:10.0.0.12:6818/18070,v1:10.0.0.12:6819/18070] exists,up e15f2ec9-b97c-4b44-81f4-04db92395d17

pg_upmap_items 3.e [11,2]

pg_upmap_items 3.34 [11,9]

pg_upmap_items 3.36 [10,2]

pg_upmap_items 3.38 [10,9]

pg_upmap_items 3.3c [10,9]

pg_upmap_items 9.b [5,4]

pg_upmap_items 9.c [7,8]

pg_upmap_items 9.e [1,0]

pg_upmap_items 9.10 [10,8]

pg_upmap_items 9.18 [7,8]

pg_upmap_items 9.1d [10,11]

pg_upmap_items 9.27 [7,8]

pg_upmap_items 9.2c [10,11]

pg_upmap_items 9.36 [10,4]

Show which host each OSD runs on

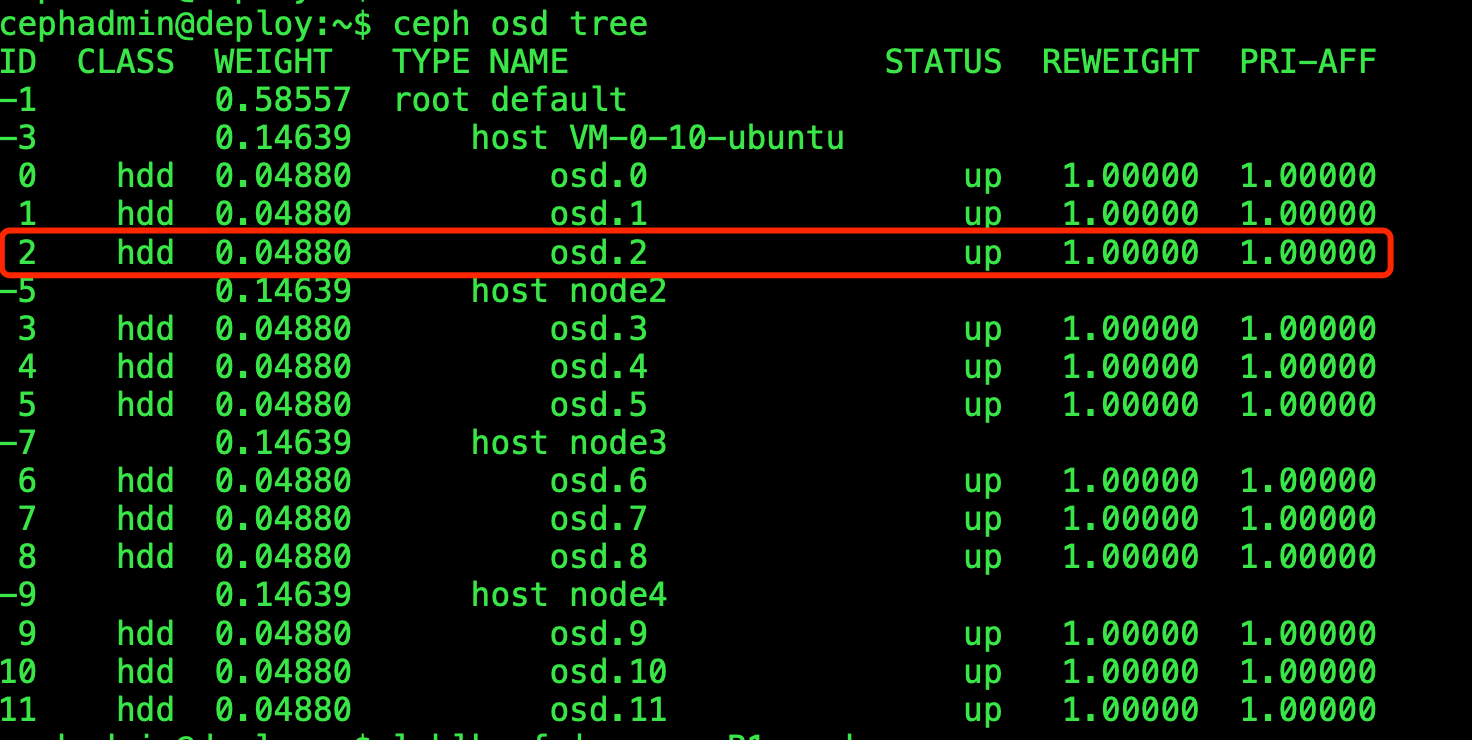
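`ceph osd tree` lists each OSD beneath the `host` bucket that contains it. A flat OSD-to-host list can therefore be built by remembering the most recent `host` row. This is a minimal sketch that assumes the default `root > host > osd` hierarchy shown below. `osd_hosts` is a hypothetical helper.

```shell
# osd_hosts
# Reads `ceph osd tree` output on stdin and prints "OSD HOST" for every
# OSD, using the most recent "host NAME" row above it as its parent.
# Hypothetical helper; assumes the default root > host > osd hierarchy.
osd_hosts() {
  awk '{
    for (i = 1; i <= NF; i++) {
      if ($i == "host" && i < NF) host = $(i + 1)      # e.g. "host node2"
      else if ($i ~ /^osd\.[0-9]+$/) print $i, host    # e.g. "osd.3"
    }
  }'
}
```

Example: `ceph osd tree | osd_hosts`. For a single OSD, `ceph osd find <id>` reports its location directly.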

cephadmin@deploy:~$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.58557 root default

-3 0.14639 host VM-0-10-ubuntu

0 hdd 0.04880 osd.0 up 1.00000 1.00000

1 hdd 0.04880 osd.1 up 1.00000 1.00000

2 hdd 0.04880 osd.2 up 1.00000 1.00000

-5 0.14639 host node2

3 hdd 0.04880 osd.3 up 1.00000 1.00000

4 hdd 0.04880 osd.4 up 1.00000 1.00000

5 hdd 0.04880 osd.5 up 1.00000 1.00000

-7 0.14639 host node3

6 hdd 0.04880 osd.6 up 1.00000 1.00000

7 hdd 0.04880 osd.7 up 1.00000 1.00000

8 hdd 0.04880 osd.8 up 1.00000 1.00000

-9 0.14639 host node4

9 hdd 0.04880 osd.9 up 1.00000 1.00000

10 hdd 0.04880 osd.10 up 1.00000 1.00000

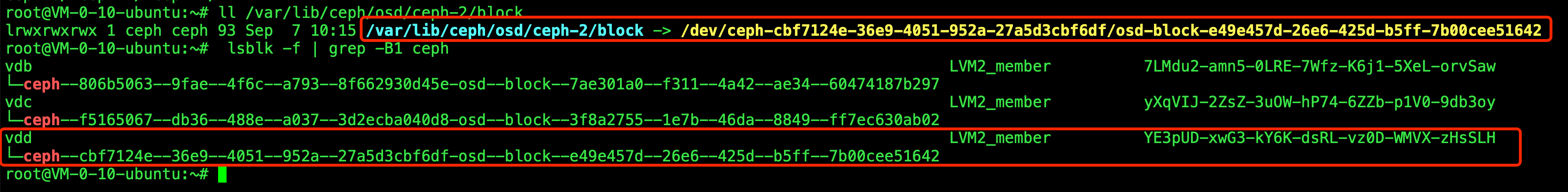

11 hdd 0.04880 osd.11 up 1.00000 1.00000查找OSD对应的磁盘
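From the admin node, `ceph osd metadata <id>` returns JSON describing an OSD. That JSON includes its `hostname` and, in recent releases (assumed here), a `devices` field naming the underlying block device(s). On the OSD host itself, `ceph-volume lvm list` shows the same mapping for LVM-based OSDs. This is a minimal sketch that reads one field without a JSON parser. It assumes pretty-printed output with one `"key": "value"` pair per line. `osd_meta_field` is a hypothetical helper, and `jq` is the sturdier choice where it is installed.

```shell
# osd_meta_field KEY
# Reads `ceph osd metadata <id> -f json-pretty` output on stdin and prints
# the string value of KEY (e.g. "devices" or "hostname").
# Hypothetical helper; assumes one "key": "value" pair per line.
osd_meta_field() {
  sed -n "s/^[[:space:]]*\"$1\":[[:space:]]*\"\([^\"]*\)\".*/\1/p"
}
```

Example: `ceph osd metadata 0 -f json-pretty | osd_meta_field devices`, then run `osd_meta_field hostname` on the same output to see which node to look on.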

Show monitor status
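The `ceph mon stat` line gives the number of monitors (`3 mons`) and ends with the names of the monitors currently in quorum (`quorum 0,1,2 mon1,mon2,mon3`). Comparing the two shows whether any monitor has dropped out. This is a minimal sketch that assumes the one-line format shown below. `mons_all_in_quorum` is a hypothetical helper, and `ceph quorum_status` is the JSON alternative.

```shell
# mons_all_in_quorum
# Reads the one-line `ceph mon stat` summary on stdin and succeeds only
# if the number of monitor names after "quorum" equals the "N mons" count.
# Hypothetical helper; assumes the plain-text format shown below.
mons_all_in_quorum() {
  awk 'NR == 1 {
    total = $2                         # "3" from "e3: 3 mons at ..."
    n = split($NF, names, ",")         # "mon1,mon2,mon3" -> 3 names
    ok = ($3 == "mons" || $3 == "mon") && $(NF - 2) == "quorum" && n == total
  }
  END { exit !ok }'
}
```

Example: `ceph mon stat | mons_all_in_quorum || echo "a monitor is out of quorum"`.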

cephadmin@deploy:~$ ceph mon stat

e3: 3 mons at {mon1=[v2:10.0.0.17:3300/0,v1:10.0.0.17:6789/0],mon2=[v2:10.0.0.6:3300/0,v1:10.0.0.6:6789/0],mon3=[v2:10.0.0.8:3300/0,v1:10.0.0.8:6789/0]}, election epoch 12, leader 0 mon1, quorum 0,1,2 mon1,mon2,mon3

Dump the monitor map

cephadmin@deploy:~$ ceph mon dump

epoch 3

fsid dc883878-2675-4786-b000-ae9b68ab3098

last_changed 2022-09-07T10:21:39.274444+0800

created 2022-09-07T09:59:05.606260+0800

min_mon_release 16 (pacific)

election_strategy: 1

0: [v2:10.0.0.17:3300/0,v1:10.0.0.17:6789/0] mon.mon1

1: [v2:10.0.0.6:3300/0,v1:10.0.0.6:6789/0] mon.mon2

2: [v2:10.0.0.8:3300/0,v1:10.0.0.8:6789/0] mon.mon3

dumped monmap epoch 3
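In the monitor map above, each monitor appears on a `RANK: [ADDRVEC] mon.NAME` line. The address vector carries both the msgr2 (`v2`, port 3300) and legacy (`v1`, port 6789) endpoints. This is a minimal sketch that extracts name/address pairs, for example when filling in `mon_host` for a client. It assumes the plain-text layout shown above. `mon_addrs` is a hypothetical helper.

```shell
# mon_addrs
# Reads `ceph mon dump` output on stdin and prints "NAME ADDRVEC" for each
# monitor line, e.g. "mon1 [v2:10.0.0.17:3300/0,v1:10.0.0.17:6789/0]".
# Hypothetical helper; assumes the plain-text layout of `ceph mon dump`.
mon_addrs() {
  awk '$1 ~ /^[0-9]+:$/ && $3 ~ /^mon\./ {
    name = $3
    sub(/^mon\./, "", name)            # "mon.mon1" -> "mon1"
    print name, $2
  }'
}
```

Example: `ceph mon dump | mon_addrs`. The second column uses the same bracketed address-vector form that `mon_host` in `ceph.conf` should accept, though that is worth checking against your release's documentation.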
