Ceph Cluster Application Basics - Block Storage RBD

RBD Block Storage Basics

Creating an RBD

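Create a storage pool and specify pg_num and pgp_num (both 64 here). PGP (placement group for placement) determines how the data held in the PGs is combined for placement on the OSDs. pgp_num is normally set equal to pg_num.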
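The example uses 64 PGs for myrbd1. The Ceph placement-group documentation has long cited a rule of thumb: roughly 100 PGs per OSD, divided by the replica count and rounded up to a power of two. The result is a cluster-wide total that all pools share, so it is a starting point and not a hard requirement. Newer releases can also size pools automatically with the pg_autoscaler module. The sketch below assumes a hypothetical cluster of 6 OSDs with 3 replicas, because the article does not state the OSD count. The helper name `suggest_pg_num` is also hypothetical.

```bash
#!/usr/bin/env bash
# Rule-of-thumb pg_num: (OSDs x target PGs per OSD) / replica count,
# rounded up to the next power of two. The result is a cluster-wide total
# to be shared among pools, not a hard requirement.
suggest_pg_num() {
  local osds=$1 replicas=$2 per_osd=${3:-100}
  # Ceiling division so a fractional result is not rounded down.
  local target=$(( (osds * per_osd + replicas - 1) / replicas ))
  local pg=1
  while (( pg < target )); do
    pg=$(( pg * 2 ))   # step up to the next power of two
  done
  echo "$pg"
}

# Hypothetical cluster: 6 OSDs, 3 replicas -> 200 -> 256
suggest_pg_num 6 3
```

The optional third argument changes the per-OSD target if a different density is preferred.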

ceph osd pool create myrbd1 64 64

Enable the RBD application on the pool:

ceph osd pool application enable myrbd1 rbd

Initialize the pool with the rbd command:

rbd pool init -p myrbd1

Create an image named myimg1:

rbd create myimg1 --size 5G --pool myrbd1

View information about the image:

cephadmin@deploy:~$ rbd --image myimg1 --pool myrbd1 info

rbd image 'myimg1':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 10f8357f64fe

block_name_prefix: rbd_data.10f8357f64fe

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Wed Sep 7 10:37:21 2022

access_timestamp: Wed Sep 7 10:37:21 2022

modify_timestamp: Wed Sep 7 10:37:21 2022

Create an image with specific features:

cephadmin@deploy:~$ rbd create myimg2 --size 3G --pool myrbd1 --image-format 2 --image-feature layering

cephadmin@deploy:~$ rbd --image myimg2 --pool myrbd1 info

rbd image 'myimg2':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 1113fd72fb3e

block_name_prefix: rbd_data.1113fd72fb3e

format: 2

features: layering

op_features:

flags:

create_timestamp: Wed Sep 7 10:44:07 2022

access_timestamp: Wed Sep 7 10:44:07 2022

modify_timestamp: Wed Sep 7 10:44:07 2022

Current Ceph status:

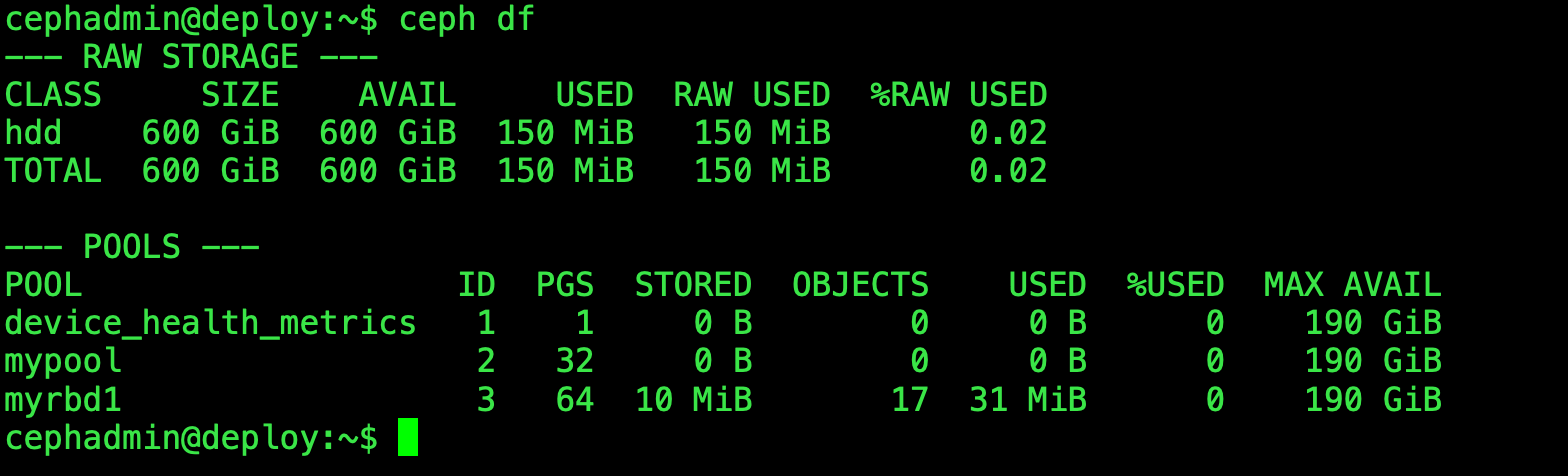

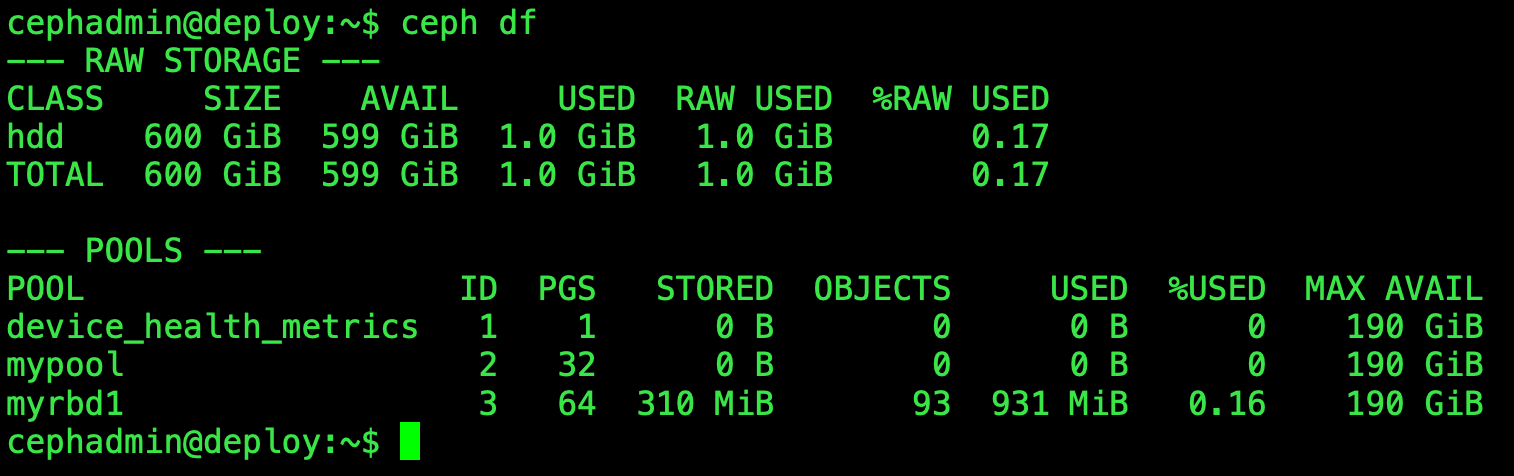
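The `ceph df` output that follows reports 600 GiB of raw capacity but a MAX AVAIL of 190 GiB for every pool. A rough way to reproduce that figure is shown below. It assumes the pools use 3-way replication and the default full ratio of 0.95. Neither value appears in the article, so treat both as assumptions.

```bash
#!/usr/bin/env bash
# Approximate MAX AVAIL for a replicated pool on a nearly empty cluster.
# ASSUMPTIONS (not shown in the article): pool size=3, default full_ratio 0.95.
raw_avail_gib=600   # RAW STORAGE: AVAIL 600 GiB
full_ratio_pct=95   # full_ratio 0.95 expressed as a percentage (integer math)
replicas=3          # assumed pool size (number of copies)

# Usable space = raw space below the full ratio, divided by the number of copies.
max_avail_gib=$(( raw_avail_gib * full_ratio_pct / 100 / replicas ))
echo "MAX AVAIL ~ ${max_avail_gib} GiB"
```

Ceph's own calculation projects from the OSD that would fill up first. On an unevenly filled cluster, the real MAX AVAIL can therefore be lower than this simple estimate.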

cephadmin@deploy:~$ ceph df

--- RAW STORAGE ---
CLASS  SIZE     AVAIL    USED    RAW USED  %RAW USED
hdd    600 GiB  600 GiB  81 MiB  81 MiB    0.01
TOTAL  600 GiB  600 GiB  81 MiB  81 MiB    0.01

--- POOLS ---
POOL                   ID  PGS  STORED  OBJECTS  USED    %USED  MAX AVAIL
device_health_metrics  1   1    0 B     0        0 B     0      190 GiB
mypool                 2   32   0 B     0        0 B     0      190 GiB
myrbd1                 3   64   405 B   7        48 KiB  0      190 GiB

Mount the Ceph storage on a CentOS client. First, install ceph-common:

yum install epel-release

yum install https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-octopus/el7/noarch/ceph-release-1-1.el7.noarch.rpm -y

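yum install ceph-common -y

Next, map the images on the client. myimg2 maps successfully, but myimg1 does not. myimg1 has image features enabled that the client's kernel RBD driver does not support. You can try upgrading the kernel. Alternatively, disable the object-map, fast-diff and deep-flatten features, and the image will then map.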
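Instead of reading the feature list by hand, you can derive the disable command from the `features:` line of `rbd info`. The sketch below assumes the unsupported set is exactly the three features named in the kernel error shown in this article. Other kernel versions support a different set. The helper `disable_cmd` is hypothetical.

```bash
#!/usr/bin/env bash
# Features the client kernel rejected, taken from the map error in this
# article. ASSUMPTION: other kernel versions support a different set.
UNSUPPORTED="object-map fast-diff deep-flatten"

# disable_cmd POOL/IMAGE "FEATURES_LINE"   (hypothetical helper)
# Prints an "rbd feature disable" command covering every unsupported feature
# found in the "features:" line of `rbd info`; prints nothing otherwise.
disable_cmd() {
  local spec=$1 line=$2 f out=""
  line=${line#*features:}            # drop the "features:" label
  for f in ${line//,/ }; do          # commas -> spaces, then split into words
    case " $UNSUPPORTED " in
      *" $f "*) out+=" $f" ;;        # keep only features the kernel rejects
    esac
  done
  if [ -n "$out" ]; then
    echo "rbd feature disable ${spec}${out}"
  fi
}

disable_cmd myrbd1/myimg1 "features: layering, exclusive-lock, object-map, fast-diff, deep-flatten"
disable_cmd myrbd1/myimg2 "features: layering"
```

For myimg1 the helper prints the same `rbd feature disable` command used in the transcript. myimg2 was created with only layering and needs no change.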

[root@VM-0-9-centos ~]# rbd -p myrbd1 map myimg2

/dev/rbd0

[root@VM-0-9-centos ~]# rbd -p myrbd1 map myimg1

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable myrbd1/myimg1 object-map fast-diff deep-flatten".

In some cases useful info is found in syslog - try "dmesg | tail".

rbd: map failed: (6) No such device or address

[root@VM-0-9-centos ~]# rbd feature disable myrbd1/myimg1 object-map fast-diff deep-flatten

[root@VM-0-9-centos ~]# rbd -p myrbd1 map myimg1

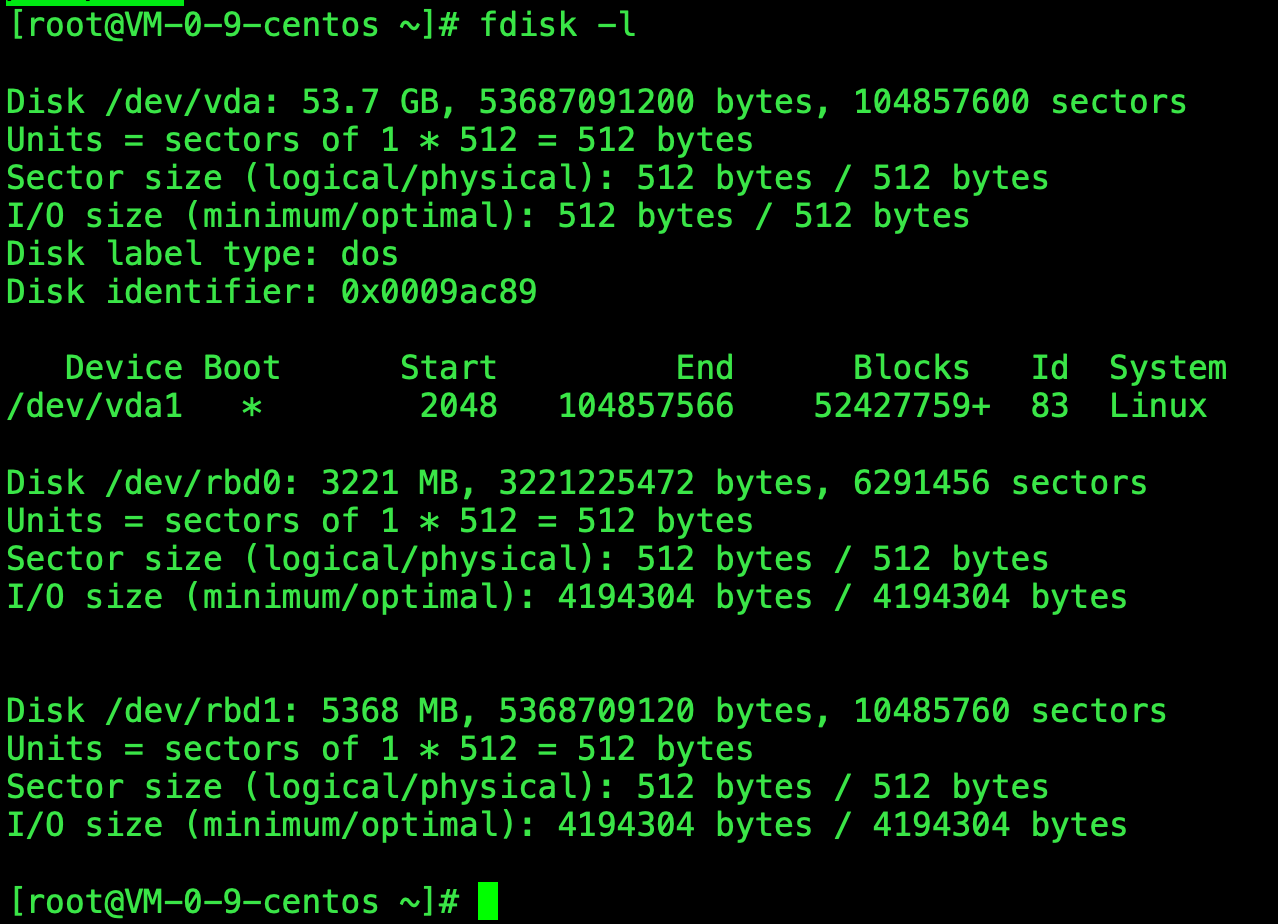

/dev/rbd1

After mapping, the device shows up in fdisk -l:

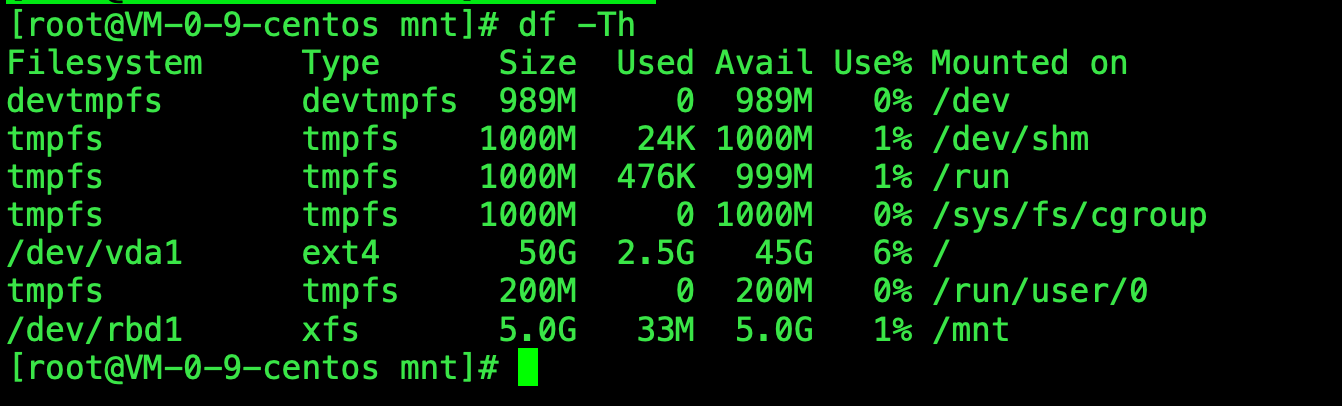

Format /dev/rbd1 directly and mount it, and it is ready to use:

[root@VM-0-9-centos ~]# mkfs.xfs /dev/rbd1

meta-data=/dev/rbd1              isize=512    agcount=8, agsize=163840 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=1310720, imaxpct=25
         =                       sunit=1024   swidth=1024 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=2560, version=2
         =                       sectsz=512   sunit=8 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0

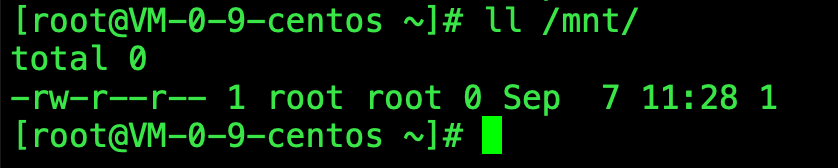
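The numbers in the `rbd info` and `mkfs.xfs` output fit together:

- 5 GiB divided by 4 MiB objects gives the 1280 objects that rbd reports.
- The XFS data section spans exactly the 5 GiB image.
- The XFS stripe unit (sunit=1024 blocks of 4 KiB) equals the 4 MiB object size.

The last match is presumably because the kernel RBD driver advertises the object size as the device's preferred I/O size, which mkfs.xfs then picks up. That is an inference, not something the article shows. The script below only does the arithmetic.

```bash
#!/usr/bin/env bash
# Cross-check figures printed earlier by `rbd info` and `mkfs.xfs` for myimg1.
order=22                         # rbd info: "order 22 (4 MiB objects)"
object_size=$(( 1 << order ))    # 2^22 = 4194304 bytes = 4 MiB
image_size=$(( 5 * 1024**3 ))    # --size 5G -> 5 GiB = 5368709120 bytes

xfs_bytes=$(( 1310720 * 4096 ))  # mkfs.xfs: blocks=1310720, bsize=4096
sunit_bytes=$(( 1024 * 4096 ))   # mkfs.xfs: sunit=1024 blks
ag_bytes=$(( 163840 * 4096 ))    # mkfs.xfs: agsize=163840 blks (agcount=8)

echo "objects:      $(( image_size / object_size ))"    # 1280, as rbd info reports
echo "fs == image:  $(( xfs_bytes == image_size ))"     # 1 means equal
echo "sunit == obj: $(( sunit_bytes == object_size ))"  # 1 means equal
echo "8 AGs x $(( ag_bytes / 1024**2 )) MiB = $(( 8 * ag_bytes / 1024**3 )) GiB"
```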

[root@VM-0-9-centos ~]# mount /dev/rbd1 /mnt

[root@VM-0-9-centos ~]# cd /mnt/

[root@VM-0-9-centos mnt]# touch 1

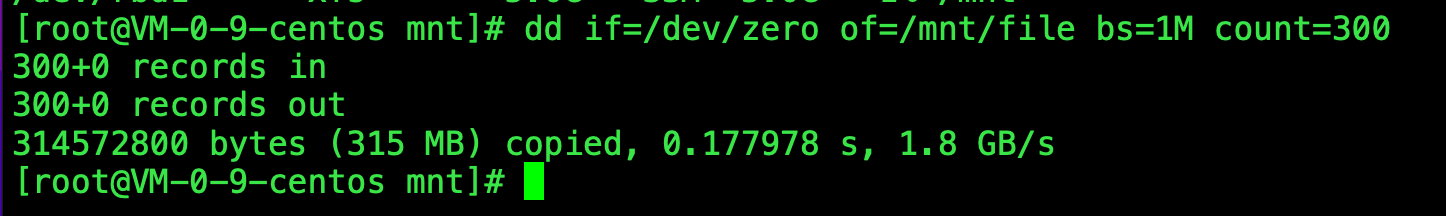

A quick throughput test shows about 1.8 GB/s, which is quite good.

Deleting files

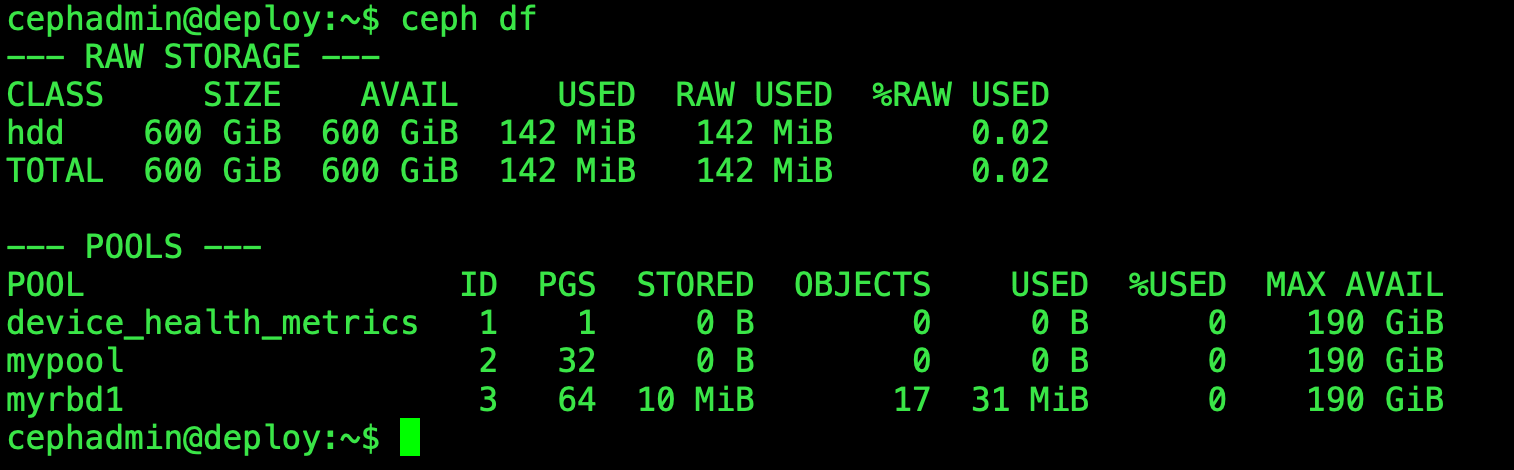

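rm -rf /mnt/file

After simply deleting the file, the space is not released. XFS marks the blocks as free. However, the RBD image's underlying objects still hold the data until the device is told, through a discard (trim) request, that those blocks are no longer in use.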

Releasing the space

[root@VM-0-9-centos ~]# fstrim -v /mnt

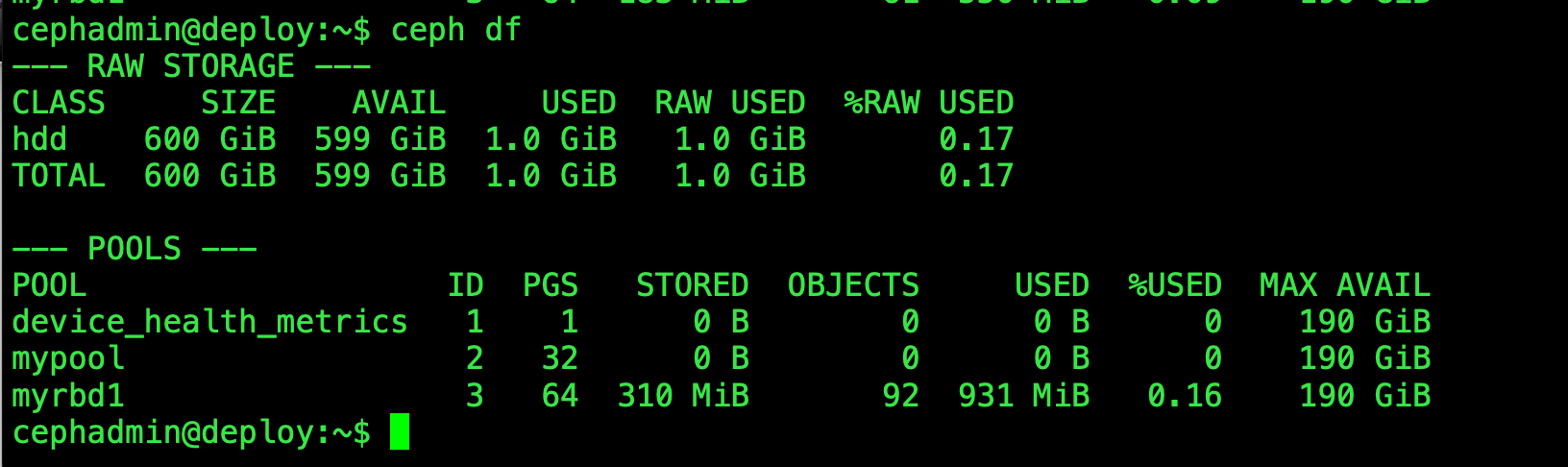

/mnt: 5 GiB (5353766912 bytes) trimmed

Checking again shows that the space has been released.

Configuring mount options

[root@VM-0-9-centos ~]# umount /mnt

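[root@VM-0-9-centos ~]# mount -t xfs -o discard /dev/rbd1 /mnt

The data written earlier is still visible after remounting. With the discard option, XFS sends discard requests to the RBD device as blocks are freed, so space is released without running fstrim manually.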
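To confirm that the remount really picked up the option, look for `discard` in the mount's option list in /proc/mounts. The sketch below uses a hypothetical helper, `has_discard`. It matches the option as a whole comma-separated token and does not handle mount points containing spaces, which /proc/mounts escapes.

```bash
#!/usr/bin/env bash
# has_discard MOUNTPOINT [MOUNTS_FILE]   (hypothetical helper)
# Succeeds if the filesystem currently mounted at MOUNTPOINT has "discard"
# in its option list. MOUNTS_FILE defaults to /proc/mounts, whose fields are:
# device, mount point, fs type, options, dump, pass.
has_discard() {
  awk -v mp="$1" '
    $2 == mp {
      found = 0                       # a later (stacked) mount overrides earlier ones
      n = split($4, opts, ",")
      for (i = 1; i <= n; i++)
        if (opts[i] == "discard") found = 1
    }
    END { exit (found ? 0 : 1) }
  ' "${2:-/proc/mounts}"
}

if has_discard /mnt; then
  echo "/mnt: online discard enabled"
else
  echo "/mnt: no discard option; free space needs fstrim"
fi
```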

Write another file:

[root@VM-0-9-centos ~]# dd if=/dev/zero of=/mnt/file bs=1M count=300

Delete the file. This time the space is released automatically:

[root@VM-0-9-centos ~]# rm -rf /mnt/file