Installing and Deploying Ceph (Pacific)

| | Monitor | Mgr | OSD | Deploy |
|---|---|---|---|---|
| Count | 3 | 2 | 4 | 1 |
| CPU | 8 cores | 8 cores | 4 cores | 4 cores |
| Memory | 16 GB | 16 GB | 16 GB | 8 GB |
| Disk | 200 GB | 200 GB | 3 × 500 GB | 200 GB |
| OS | Ubuntu 18.04 | Ubuntu 18.04 | Ubuntu 18.04 | Ubuntu 18.04 |

Configure the apt sources (required on every server):

sudo -i

lsb_release -a

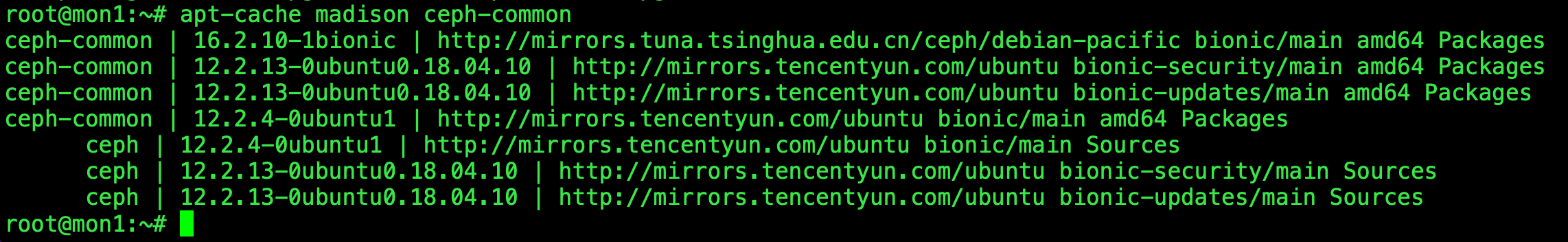

apt-cache madison ceph-common

wget -q -O- 'https://download.ceph.com/keys/release.asc' | sudo apt-key add -

echo 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific/ bionic main' | sudo tee -a /etc/apt/sources.list #sudo does not cover a >> redirect, so append with tee -a

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

sed -i 's/https/http/g' /etc/apt/sources.list #switch every https entry in sources.list to http

apt update
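Re-running the step that appends the repository line leaves a duplicate entry in sources.list. Below is a minimal sketch that appends the line only when it is missing. It writes the http form directly, which is what the sed step produces for this line, and it leaves the other https entries in the file alone. MIRROR, RELEASE and the function name `add_ceph_repo` are illustrative assumptions:

```shell
#!/usr/bin/env bash
# Assumption: MIRROR and RELEASE repeat the values used above;
# add_ceph_repo is a hypothetical helper, not part of any Ceph tooling.
MIRROR="http://mirrors.tuna.tsinghua.edu.cn/ceph"
RELEASE="pacific"

# add_ceph_repo CODENAME [SOURCES_FILE]
# Appends the Ceph repo line once; a second call finds it and does nothing.
add_ceph_repo() {
    local codename="$1"
    local sources="${2:-/etc/apt/sources.list}"
    local line="deb ${MIRROR}/debian-${RELEASE}/ ${codename} main"
    # -q quiet, -x whole-line match, -F fixed string (no regex meaning for "." or "/")
    if ! grep -qxF "$line" "$sources" 2>/dev/null; then
        echo "$line" >> "$sources"
    fi
}
```

On a server, as root (e.g. inside the `sudo -i` shell): `add_ceph_repo "$(lsb_release -cs)" && apt update`.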

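Create a regular user to run the Ceph deployment, then generate an SSH key and copy it to the other machines (create the user on every server):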

groupadd -r -g 2088 cephadmin && useradd -r -m -s /bin/bash -u 2088 -g 2088 cephadmin && echo cephadmin:123456 | chpasswd

echo "cephadmin ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

ssh-keygen #as cephadmin, on the deploy server
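The nine ssh-copy-id calls that follow differ only in the IP address, so a list can drive them. Below is a dry-run sketch. HOSTS repeats the addresses from this guide, and the DRY_RUN switch is an illustrative addition so nothing runs by accident:

```shell
#!/usr/bin/env bash
# Every host except deploy itself (10.0.0.2), as listed in this guide.
HOSTS=(10.0.0.16 10.0.0.9 10.0.0.17 10.0.0.3 10.0.0.7 10.0.0.15 10.0.0.14 10.0.0.10 10.0.0.12)

# copy_keys: push cephadmin's public key to every host.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
copy_keys() {
    local host
    for host in "${HOSTS[@]}"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh-copy-id cephadmin@${host}"
        else
            ssh-copy-id "cephadmin@${host}"
        fi
    done
}
```

Run `copy_keys` to review the commands, then `DRY_RUN=0 copy_keys` as cephadmin on the deploy server to execute them.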

ssh-copy-id cephadmin@10.0.0.16

ssh-copy-id cephadmin@10.0.0.9

ssh-copy-id cephadmin@10.0.0.17

ssh-copy-id cephadmin@10.0.0.3

ssh-copy-id cephadmin@10.0.0.7

ssh-copy-id cephadmin@10.0.0.15

ssh-copy-id cephadmin@10.0.0.14

ssh-copy-id cephadmin@10.0.0.10

ssh-copy-id cephadmin@10.0.0.12

Add the hosts entries (every server):

sudo vim /etc/hosts

10.0.0.12 node4.yzy.com node4

10.0.0.10 node3.yzy.com node3

10.0.0.14 node2.yzy.com node2

10.0.0.15 node1.yzy.com node1

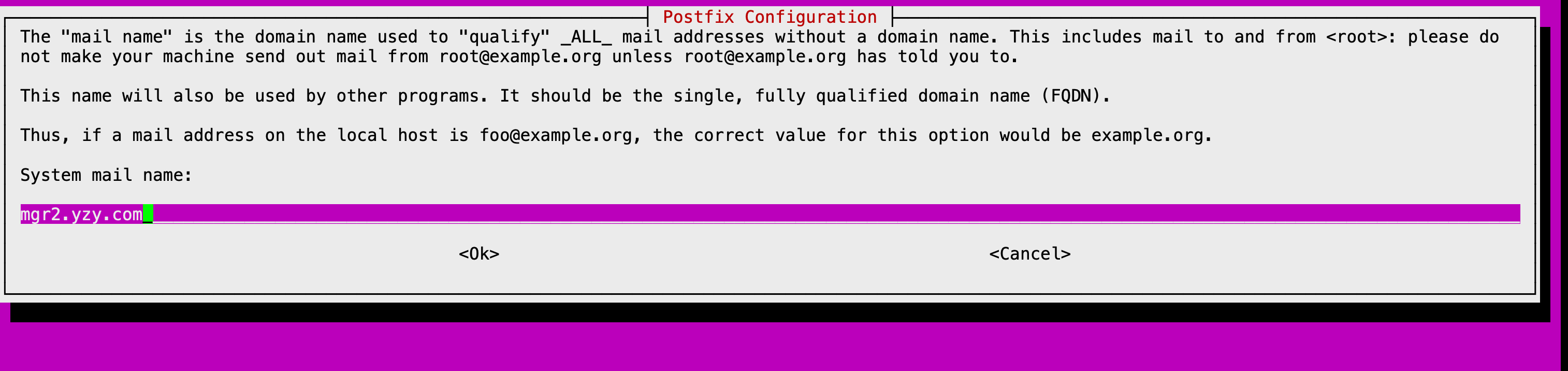

10.0.0.7 mgr2.yzy.com mgr2

10.0.0.3 mgr1.yzy.com mgr1

10.0.0.17 mon3.yzy.com mon3

10.0.0.9 mon2.yzy.com mon2

10.0.0.16 mon1.yzy.com mon1

10.0.0.2 deploy.yzy.com deploy

Install ceph-deploy (deploy server):

sudo apt install ceph-deploy

Install Python 2.7 (deploy server):

sudo apt install python2.7 -y

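sudo ln -sv /usr/bin/python2.7 /usr/bin/python2

Initialize the mon node as the cephadmin user (deploy server). Ideally each server has two NICs, one for the cluster network and one for the public network; this setup uses 10.0.0.0/24 for both: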

su - cephadmin

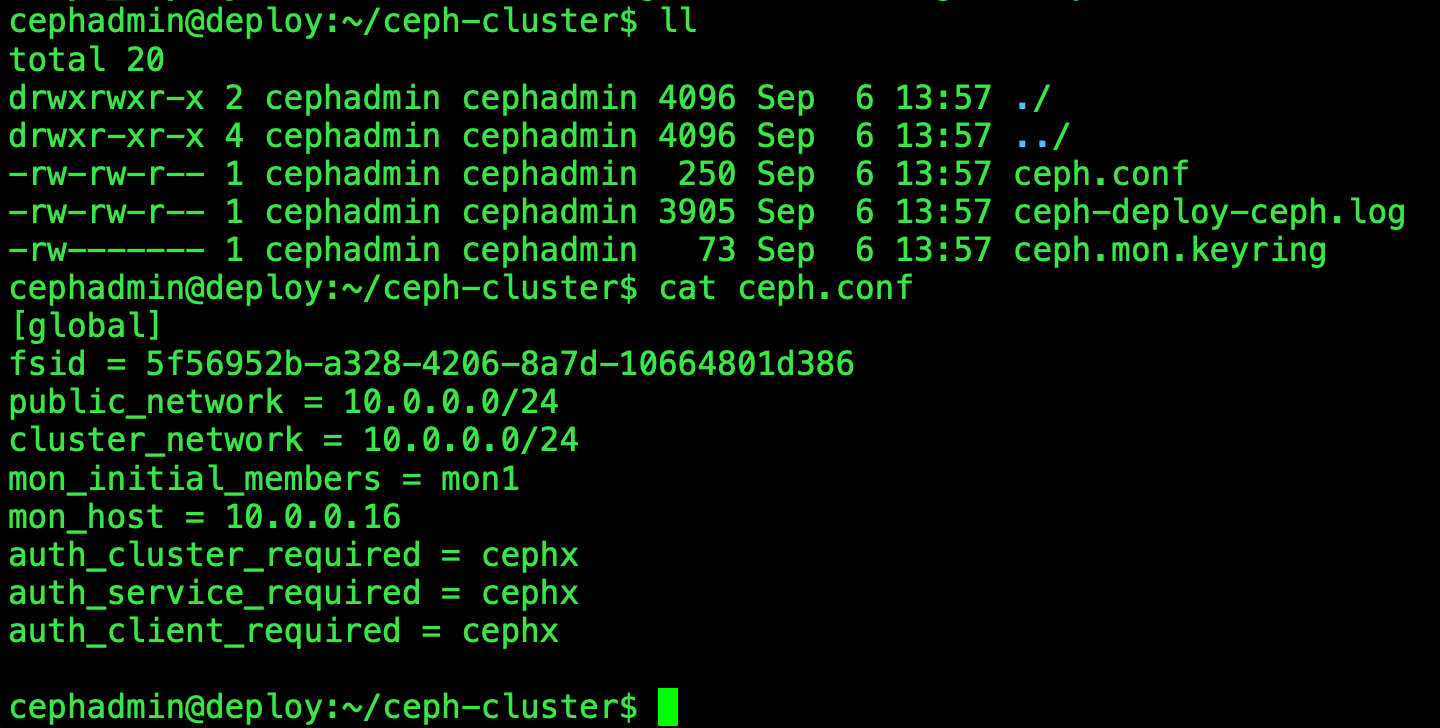

mkdir ceph-cluster

cd ceph-cluster

ceph-deploy new --cluster-network 10.0.0.0/24 --public-network 10.0.0.0/24 mon1.yzy.com
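`ceph-deploy new` writes ceph.conf into the ceph-cluster directory, and it is worth confirming that both network options are there before going further. Below is a sketch that assumes the keys appear as `public_network` and `cluster_network`, with underscores, the way ceph-deploy writes them. Ceph also accepts the spaced spelling (`public network`), which this simple parser would not match. `conf_value` and `check_networks` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# conf_value KEY [CONF]: print the value of KEY from an INI-style ceph.conf (first match).
conf_value() {
    local key="$1" conf="${2:-ceph.conf}"
    # split each line on "=" with optional surrounding spaces
    awk -F' *= *' -v k="$key" '$1 == k { print $2; exit }' "$conf"
}

# check_networks [CONF]: show both networks; fail if either is missing.
check_networks() {
    local conf="${1:-ceph.conf}" pub clu
    pub=$(conf_value public_network "$conf")
    clu=$(conf_value cluster_network "$conf")
    echo "public=${pub} cluster=${clu}"
    [ -n "$pub" ] && [ -n "$clu" ]
}
```

Run `check_networks` from ~/ceph-cluster. With two NICs, the two values would be different subnets.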

Initialize the node servers (run on deploy):

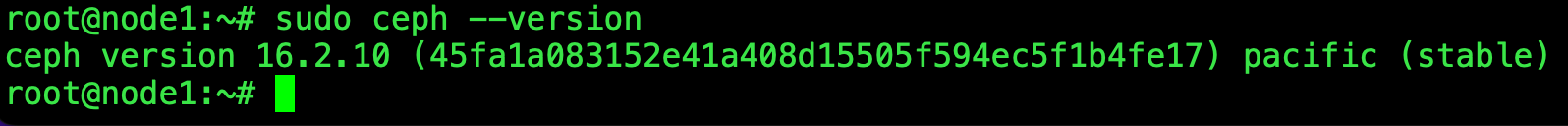

ceph-deploy install --no-adjust-repos --nogpgcheck node1 node2 node3 node4

Install ceph-mon (mon servers):

apt-cache madison ceph-mon

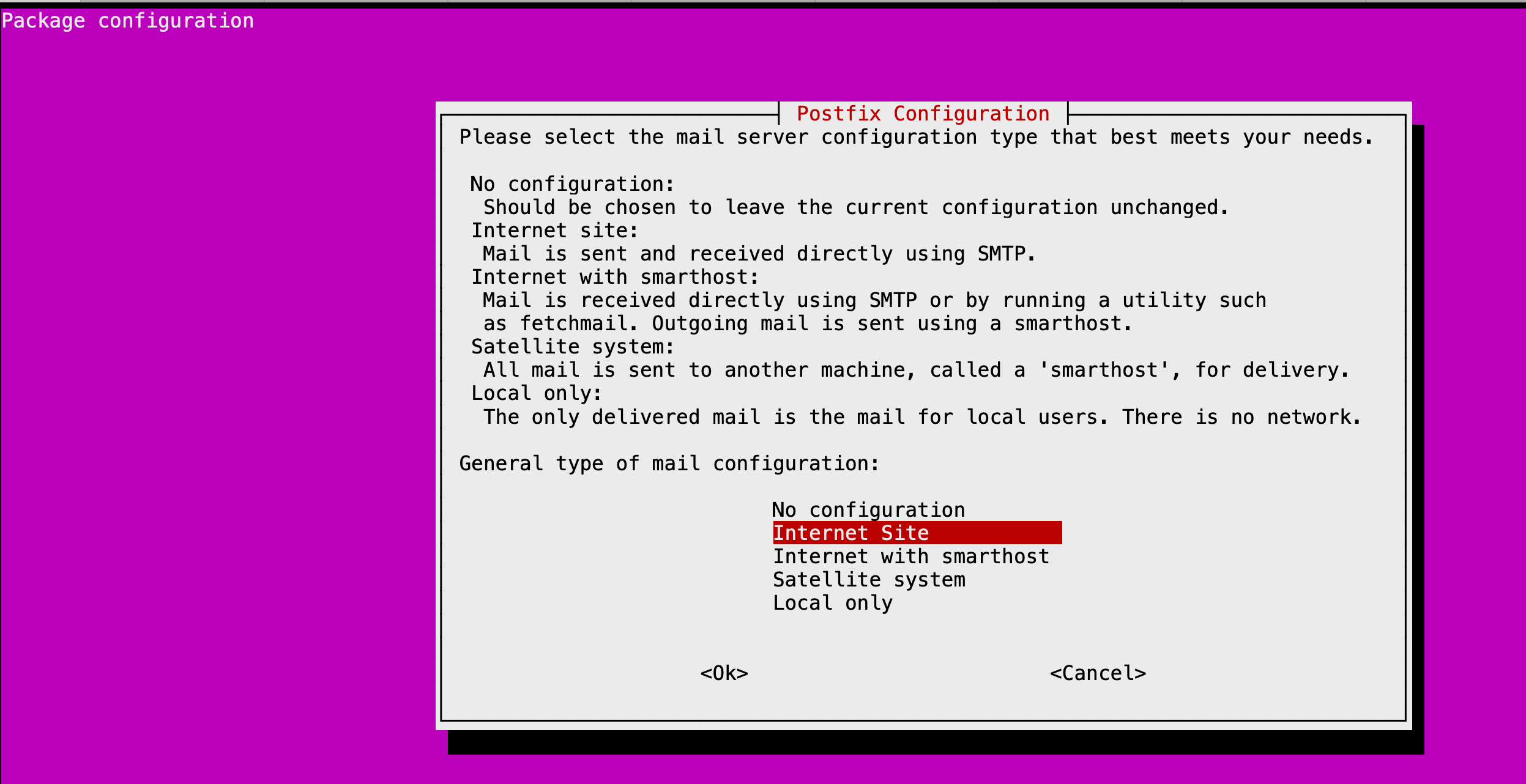

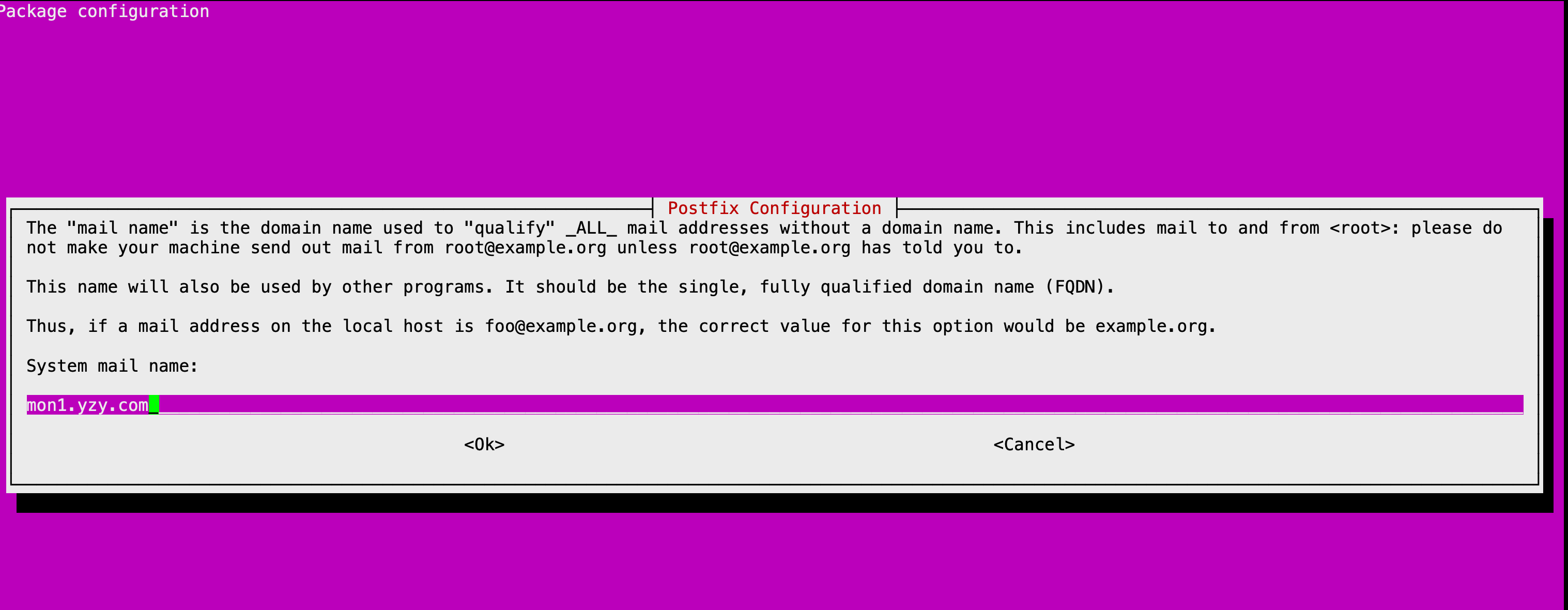

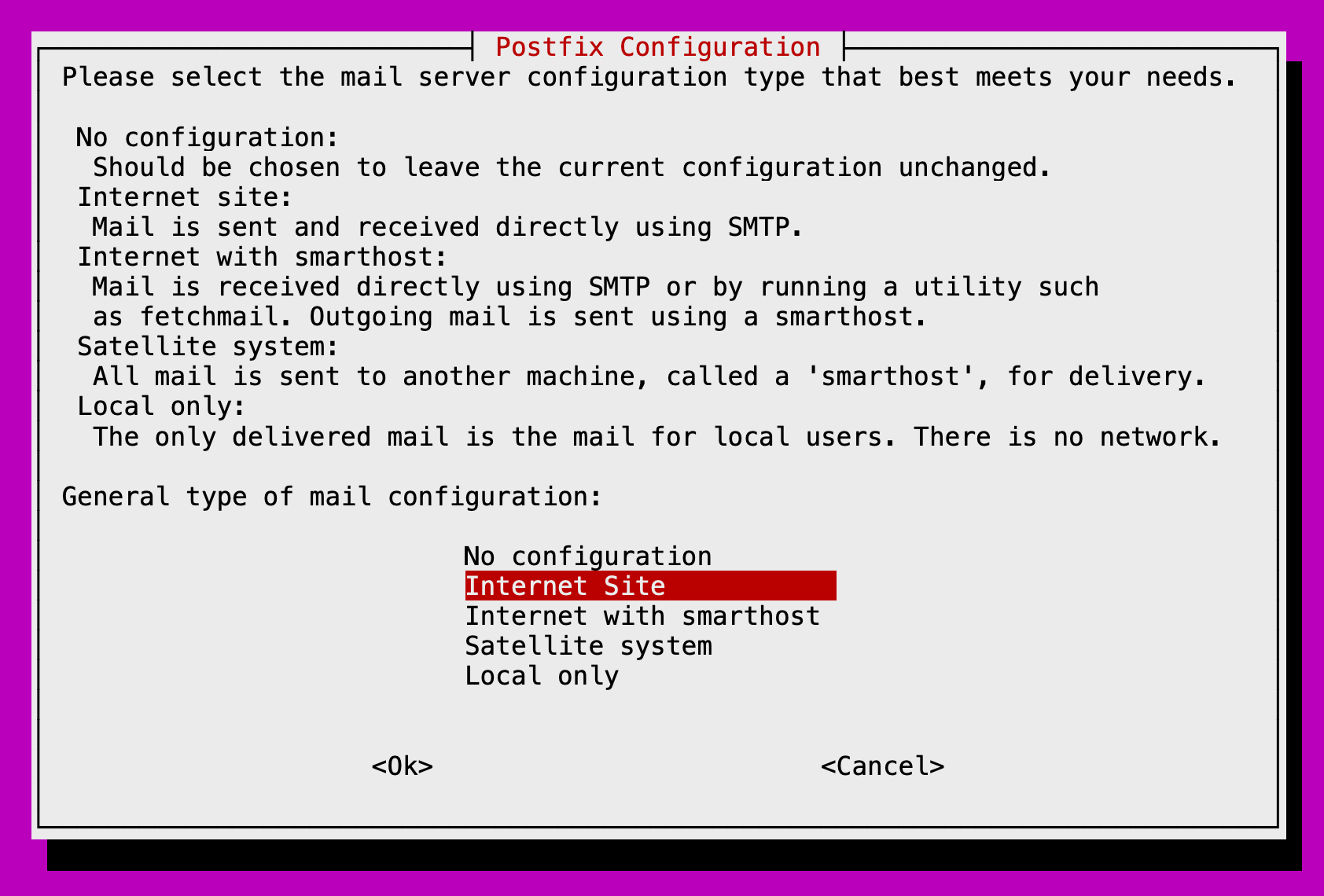

sudo apt install ceph-mon -y

Accept the defaults at any prompts.

Add the mon service to the Ceph cluster (deploy server):

ceph-deploy mon create-initial
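`mon create-initial` also gathers keyrings into the working directory. The next step distributes the admin keyring, and creating the mgr and OSD daemons uses the bootstrap keyrings. Below is a sketch that lists any missing files. The three names are an assumption based on ceph-deploy's usual `ceph.<entity>.keyring` naming, so compare them against `ls` in your own ceph-cluster directory:

```shell
#!/usr/bin/env bash
# Assumed file names; adjust to what `ls ~/ceph-cluster` actually shows.
REQUIRED_KEYRINGS=(ceph.client.admin.keyring ceph.bootstrap-osd.keyring ceph.bootstrap-mgr.keyring)

# missing_keyrings [DIR]: print each required keyring not present in DIR.
# No output means everything is there.
missing_keyrings() {
    local dir="${1:-.}" f
    for f in "${REQUIRED_KEYRINGS[@]}"; do
        [ -f "${dir}/${f}" ] || echo "$f"
    done
}
```

For example, `missing_keyrings ~/ceph-cluster` should print nothing after a successful `mon create-initial`.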

Distribute the admin keyring (deploy server). Install ceph-common first; the node servers need it too if they do not have it yet:

sudo apt install ceph-common -y

ceph-deploy admin deploy

ls /etc/ceph/

ceph-deploy admin node1 node2 node3 node4

Verify the keyring on the node servers:

sudo setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

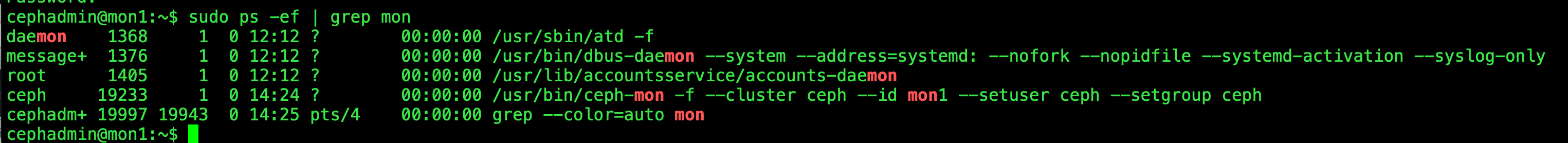

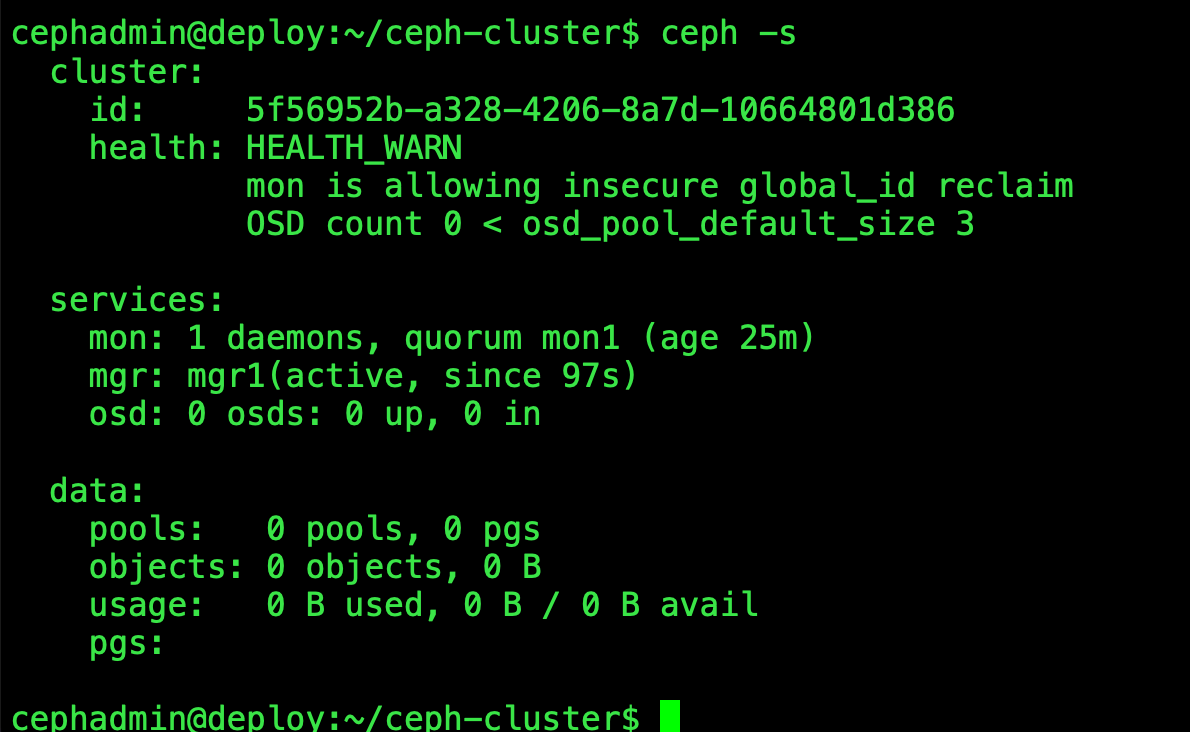

ceph -s
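The setfacl command has to be repeated on every node that received the keyring. It can be pushed from the deploy server over SSH. This assumes the cephadmin key copied earlier and the NOPASSWD sudo rule are in place on each node, and that the node names resolve through /etc/hosts. Below is a dry-run sketch; `grant_keyring_access` is a hypothetical helper:

```shell
#!/usr/bin/env bash
NODES=(node1 node2 node3 node4)

# grant_keyring_access: give cephadmin rw access to the admin keyring on every node.
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them over ssh.
grant_keyring_access() {
    local node
    local cmd="sudo setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring"
    for node in "${NODES[@]}"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh ${node} ${cmd}"
        else
            ssh "$node" "$cmd"
        fi
    done
}
```

Afterwards, `ssh node1 ceph -s` should work without sudo.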

Install mgr (mgr servers):

sudo apt install ceph-mgr

On the deploy node:

ceph-deploy mgr create mgr1

Initialize the storage nodes (deploy server):

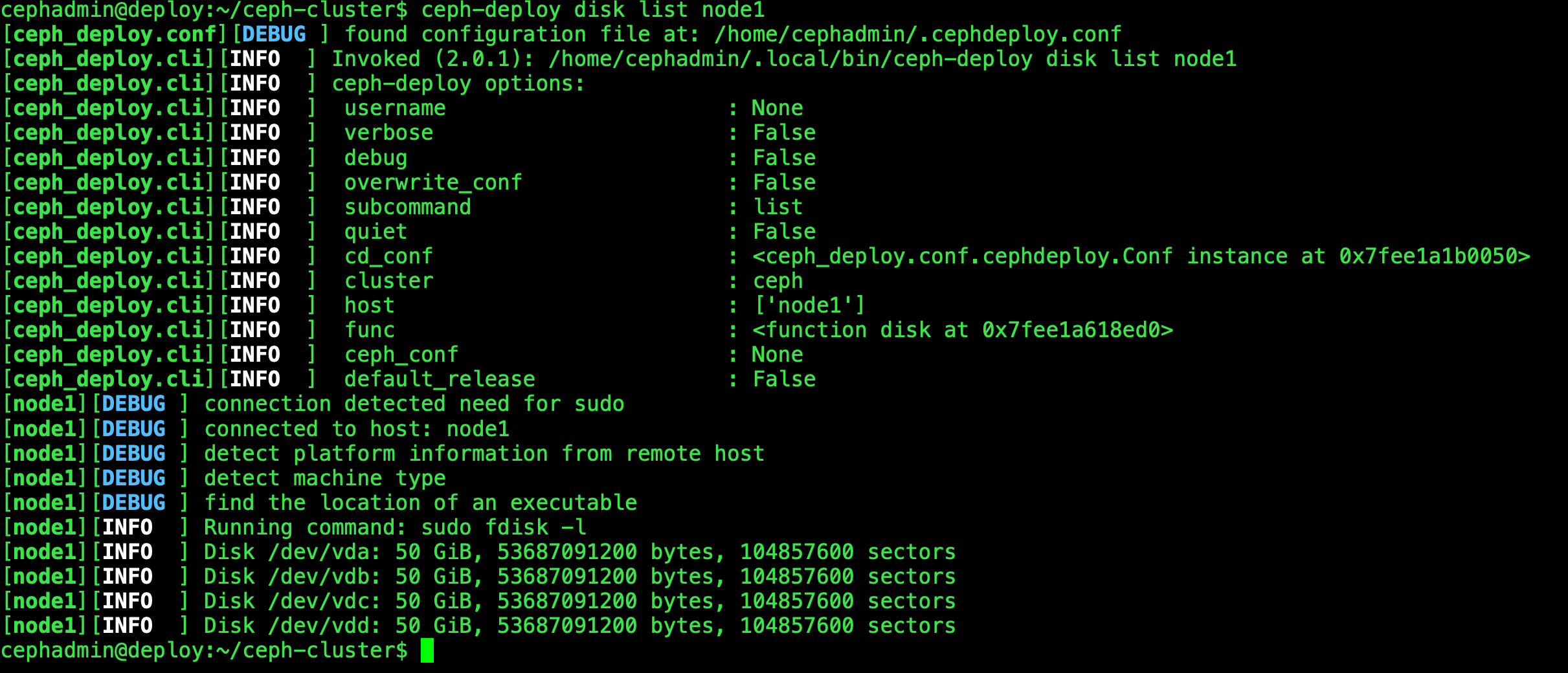

ceph-deploy disk list node1

It fails with:

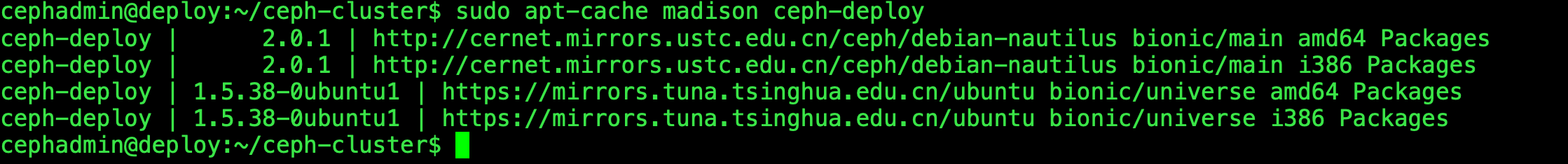

[ceph_deploy][ERROR ] ExecutableNotFound: Could not locate executable 'ceph-disk' make sure it is installed and available on node1

The official documentation (https://docs.ceph.com/en/pacific/ceph-volume/) explains that ceph-disk has been deprecated since Ceph 13.0.0 in favor of ceph-volume. Listing the commands on the node confirms this: there is no ceph-disk, only ceph-volume. The fix is to upgrade ceph-deploy to version 2.0.1.

Only the pacific repository mirrors still carry version 2.0.1. Add the repository entry:

sudo vim /etc/apt/sources.list

deb http://cernet.mirrors.ustc.edu.cn/ceph/debian-nautilus/ bionic main

sudo apt install ceph-deploy=2.0.1
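ceph-deploy 1.5.x calls ceph-disk, while the 2.0 series uses ceph-volume, so a version check catches the error above before any disk work starts. Below is a sketch built on GNU `sort -V`. `check_ceph_deploy` assumes `ceph-deploy --version` prints a bare version string; Python 2's argparse sends it to stderr, hence the `2>&1`. Both function names are hypothetical:

```shell
#!/usr/bin/env bash
# version_ge A B: exit 0 when version A >= version B.
# sort -V orders version strings numerically, so 2.0.10 sorts after 2.0.9.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_ceph_deploy [MIN]: compare the installed ceph-deploy with MIN (default 2.0.1).
check_ceph_deploy() {
    local min="${1:-2.0.1}" have
    have=$(ceph-deploy --version 2>&1)
    if version_ge "$have" "$min"; then
        echo "ceph-deploy ${have} is new enough"
    else
        echo "ceph-deploy ${have} is older than ${min}"
        return 1
    fi
}
```

Running `check_ceph_deploy` on the deploy server after the upgrade should report 2.0.1.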

Check which disks node1 has:

ceph-deploy disk list node1

Wipe (zap) the disks (deploy server):

ceph-deploy disk zap node1 /dev/vdb

ceph-deploy disk zap node1 /dev/vdc

ceph-deploy disk zap node1 /dev/vdd

ceph-deploy disk zap node2 /dev/vdb

ceph-deploy disk zap node2 /dev/vdc

ceph-deploy disk zap node2 /dev/vdd

ceph-deploy disk zap node3 /dev/vdb

ceph-deploy disk zap node3 /dev/vdc

ceph-deploy disk zap node3 /dev/vdd

ceph-deploy disk zap node4 /dev/vdb

ceph-deploy disk zap node4 /dev/vdc

ceph-deploy disk zap node4 /dev/vdd

Add the OSDs (deploy server):

ceph-deploy osd create node1 --data /dev/vdb

ceph-deploy osd create node1 --data /dev/vdc

ceph-deploy osd create node1 --data /dev/vdd

ceph-deploy osd create node2 --data /dev/vdb

ceph-deploy osd create node2 --data /dev/vdc

ceph-deploy osd create node2 --data /dev/vdd

ceph-deploy osd create node3 --data /dev/vdb

ceph-deploy osd create node3 --data /dev/vdc

ceph-deploy osd create node3 --data /dev/vdd

ceph-deploy osd create node4 --data /dev/vdb

ceph-deploy osd create node4 --data /dev/vdc

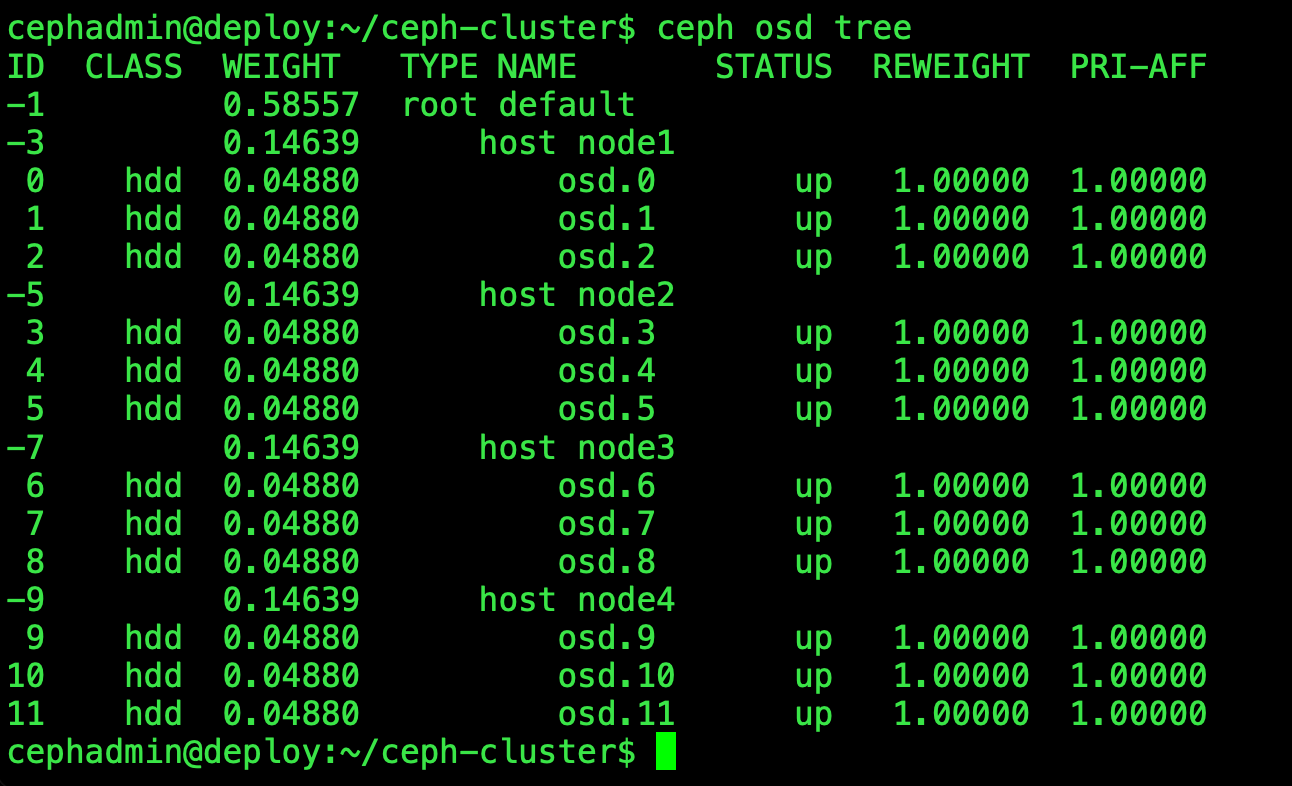

ceph-deploy osd create node4 --data /dev/vdd

OSD ID table, to make it easy to tell which disk has failed:

| Disk | node1 | node2 | node3 | node4 |
|---|---|---|---|---|
| /dev/vdb | 0 | 3 | 6 | 9 |
| /dev/vdc | 1 | 4 | 7 | 10 |
| /dev/vdd | 2 | 5 | 8 | 11 |
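The zap and create commands follow a fixed node × disk pattern, and the ID table follows from the creation order. Below is a sketch that generates both. NODES, DISKS and the function names are illustrative. The monitor assigns the lowest free ID, so the formula only holds for a fresh cluster built one OSD at a time in this order; `ceph osd tree` remains the authoritative mapping.

```shell
#!/usr/bin/env bash
NODES=(node1 node2 node3 node4)
DISKS=(/dev/vdb /dev/vdc /dev/vdd)

# osd_plan: print every zap command, then every create command,
# node by node and disk by disk, in the order used above.
osd_plan() {
    local n d
    for n in "${NODES[@]}"; do
        for d in "${DISKS[@]}"; do
            echo "ceph-deploy disk zap ${n} ${d}"
        done
    done
    for n in "${NODES[@]}"; do
        for d in "${DISKS[@]}"; do
            echo "ceph-deploy osd create ${n} --data ${d}"
        done
    done
}

# expected_osd_id NODE DISK: node_index * disks_per_node + disk_index.
expected_osd_id() {
    local node="$1" disk="$2" i j
    for i in "${!NODES[@]}"; do
        [ "${NODES[$i]}" = "$node" ] || continue
        for j in "${!DISKS[@]}"; do
            if [ "${DISKS[$j]}" = "$disk" ]; then
                echo $(( i * ${#DISKS[@]} + j ))
                return 0
            fi
        done
    done
    return 1   # unknown node or disk
}
```

`osd_plan` only prints. `osd_plan | bash` would execute the commands, and zap destroys everything on those disks.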

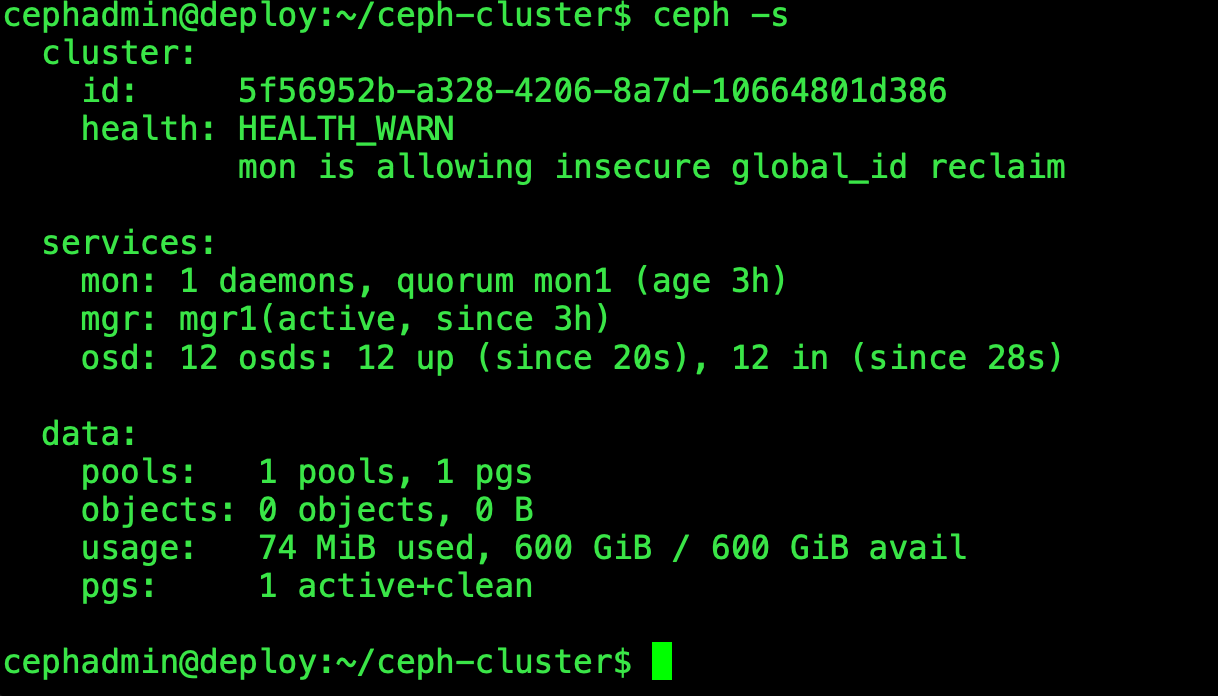

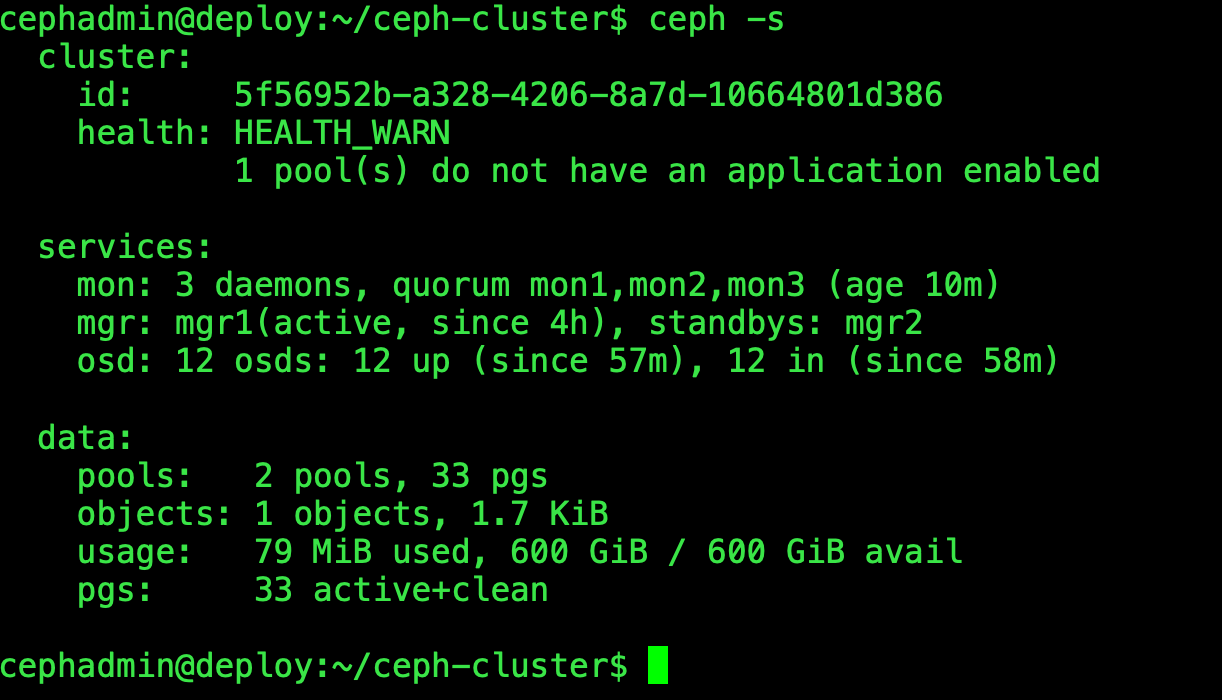

ceph -s now shows all 12 disks.

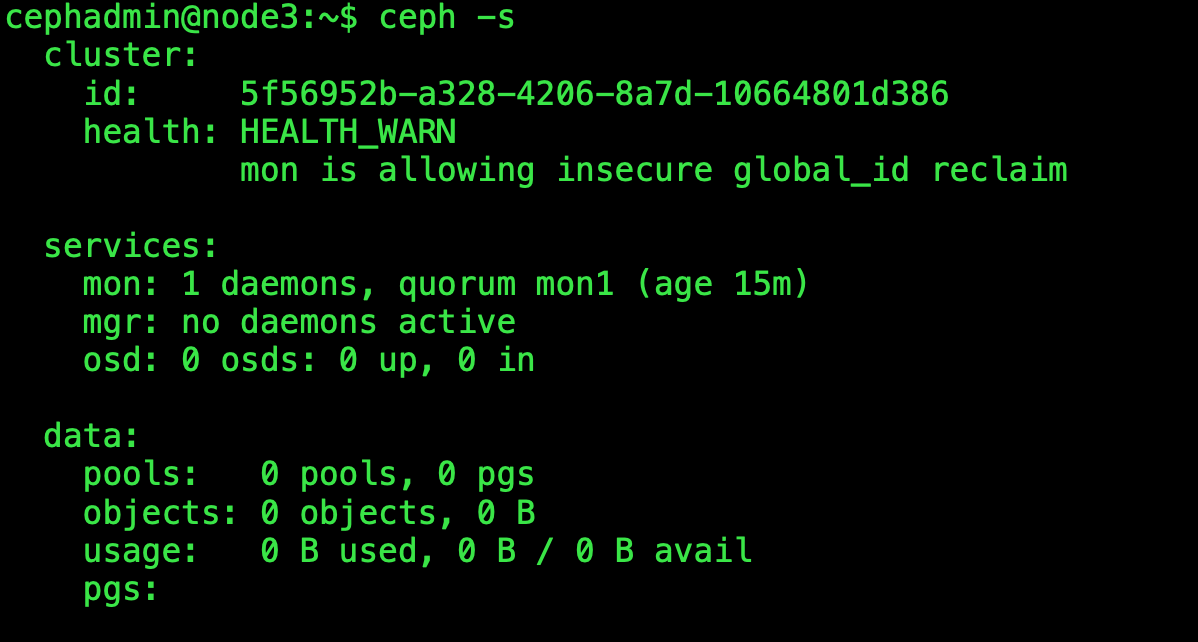

ceph -s keeps warning: mon is allowing insecure global_id reclaim

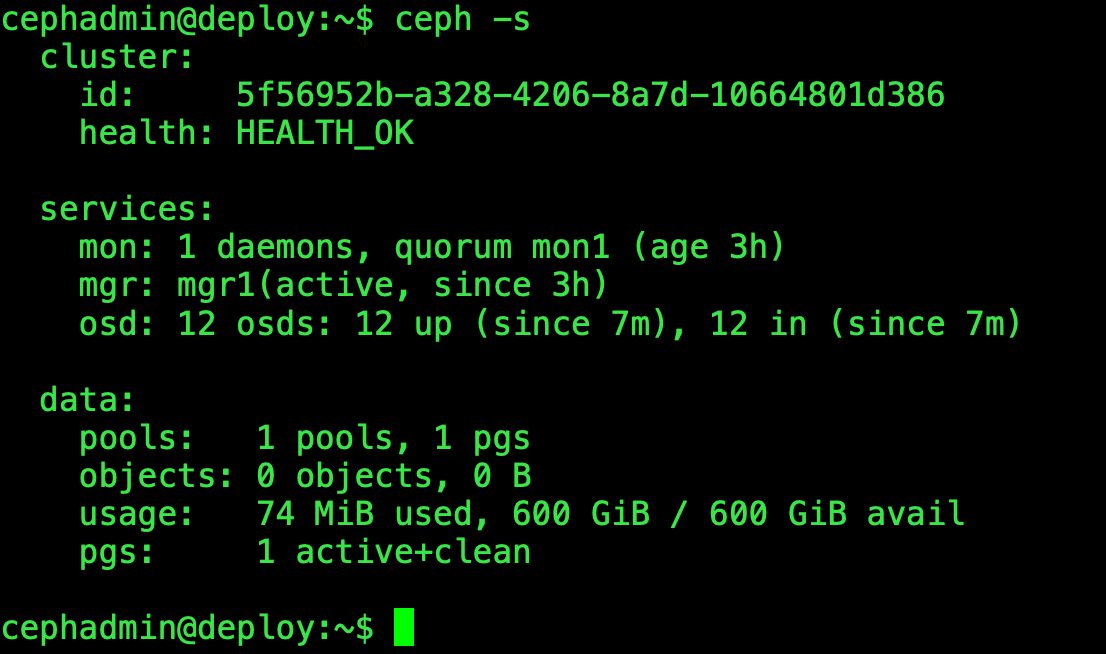

Disable insecure global_id reclaim:

ceph config set mon auth_allow_insecure_global_id_reclaim false
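This warning is the AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED health check, added with the fix for CVE-2021-20288. A related check, AUTH_INSECURE_GLOBAL_ID_RECLAIM, means some clients still rely on the insecure behavior. Setting the option to false while such clients are connected locks them out, so they should be upgraded first. Below is a sketch that reads `ceph health detail` output and suggests what to do. The function name and its three answers are hypothetical:

```shell
#!/usr/bin/env bash
# reclaim_action: read `ceph health detail` text on stdin and print
#   wait    - clients still use insecure reclaim; upgrade them first
#   disable - only the mon-side warning is left; safe to set the option to false
#   none    - neither warning is present
reclaim_action() {
    local health
    health=$(cat)
    # the client-side code, i.e. NOT followed by "_ALLOWED"
    if printf '%s\n' "$health" | grep -qE 'AUTH_INSECURE_GLOBAL_ID_RECLAIM([^_]|$)'; then
        echo "wait"
    elif printf '%s\n' "$health" | grep -q 'AUTH_INSECURE_GLOBAL_ID_RECLAIM_ALLOWED'; then
        echo "disable"
    else
        echo "none"
    fi
}
```

Usage: `ceph health detail | reclaim_action`.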

Remove an OSD from RADOS:
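The three steps below always run in the same order, and the second one must run on the host that owns the OSD. Below is a dry-run sketch. The `osd_host` lookup is an assumption tied to the OSD ID table above (node = id / 3 + 1) and only fits this 4-node × 3-disk layout. In practice it is safer to wait after `ceph osd out` until `ceph -s` shows rebalancing has finished before stopping the daemon; this sketch does not wait.

```shell
#!/usr/bin/env bash
# osd_host ID: hypothetical id-to-node lookup for this guide's layout only.
osd_host() {
    echo "node$(( $1 / 3 + 1 ))"
}

# remove_osd ID: mark out, stop the daemon on its host, then purge.
# DRY_RUN=1 (the default) only prints the commands.
remove_osd() {
    local id="$1" host c
    host=$(osd_host "$id")
    local -a cmds
    cmds=(
        "ceph osd out ${id}"
        "ssh ${host} sudo systemctl stop ceph-osd@${id}"
        "ceph osd purge ${id} --yes-i-really-mean-it"
    )
    for c in "${cmds[@]}"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "$c"
        else
            $c || return 1   # intentional word splitting: each entry is a simple command
        fi
    done
}
```

For example, `remove_osd 4` prints the three commands for /dev/vdc on node2.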

ceph osd out {osd-num} #mark the OSD out

sudo systemctl stop ceph-osd@{osd-num} #stop the daemon (on the host that owns the OSD)

ceph osd purge {osd-num} --yes-i-really-mean-it #remove the OSD

Create a pool:

cephadmin@deploy:~$ ceph osd pool create mypool 32 32

pool 'mypool' created

cephadmin@deploy:~$ ceph osd pool ls

device_health_metrics

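mypool

List the PGs of mypool. In the ACTING column, [3,6,0]p3 means the PG is stored on OSDs 3, 6 and 0, with OSD 3 as the primary. The pool has 32 PGs, each with its own combination of OSDs.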
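With the OSD ID table above, each acting set can be checked against the default replicated CRUSH rule. That rule uses the host as its failure domain, so no two OSDs of one PG should sit on the same node. Below is a sketch that reads the three-column awk output shown next. The id-to-node formula (node = id / 3 + 1) is an assumption that only matches this guide's layout, and `check_pg_spread` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# check_pg_spread: read "PG OBJECTS ACTING" lines such as "2.0 0 [3,6,0]p3"
# on stdin and report any PG whose OSDs share a host. Exit 1 if any do.
check_pg_spread() {
    local pg objects acting ids id hosts n_ids n_hosts bad=0
    while read -r pg objects acting; do
        [ "$pg" = "PG" ] && continue             # skip the header line
        ids=${acting#\[}                         # "[3,6,0]p3" -> "3,6,0]p3"
        ids=${ids%%]*}                           # "3,6,0]p3"  -> "3,6,0"
        hosts=""
        n_ids=0
        for id in ${ids//,/ }; do
            hosts+="node$(( id / 3 + 1 ))"$'\n'
            n_ids=$(( n_ids + 1 ))
        done
        n_hosts=$(printf '%s' "$hosts" | sort -u | wc -l)
        if (( n_hosts != n_ids )); then
            echo "PG ${pg}: OSDs ${ids} share a host"
            bad=1
        fi
    done
    return "$bad"
}
```

Usage: `ceph pg ls-by-pool mypool | awk '{print $1,$2,$15}' | check_pg_spread && echo ok`. All 32 acting sets listed below pass this check.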

cephadmin@deploy:~$ ceph pg ls-by-pool mypool | awk '{print $1,$2,$15}'

PG OBJECTS ACTING

2.0 0 [3,6,0]p3

2.1 0 [9,0,6]p9

2.2 0 [5,1,10]p5

2.3 0 [11,5,8]p11

2.4 0 [1,7,9]p1

2.5 0 [8,0,4]p8

2.6 0 [1,6,10]p1

2.7 0 [3,10,2]p3

2.8 0 [9,7,0]p9

2.9 0 [1,4,9]p1

2.a 0 [6,1,9]p6

2.b 0 [8,5,10]p8

2.c 0 [6,0,5]p6

2.d 0 [6,10,2]p6

2.e 0 [2,8,9]p2

2.f 0 [8,9,4]p8

2.10 0 [10,7,0]p10

2.11 0 [9,3,1]p9

2.12 0 [7,1,3]p7

2.13 0 [9,4,2]p9

2.14 0 [3,7,11]p3

2.15 0 [9,1,8]p9

2.16 0 [5,7,11]p5

2.17 0 [5,6,2]p5

2.18 0 [9,4,6]p9

2.19 0 [0,4,7]p0

2.1a 0 [3,8,2]p3

2.1b 0 [6,5,11]p6

2.1c 0 [8,4,1]p8

2.1d 0 [10,6,3]p10

2.1e 0 [2,7,9]p2

2.1f 0 [0,3,8]p0

Test uploading and downloading data. Both work, which shows the Ceph cluster is functioning properly:

sudo rados put msg1 /var/log/syslog --pool=mypool #upload a file

rados ls --pool=mypool #list the objects

ceph osd map mypool msg1 #show which PG and OSDs hold the object

sudo rados get msg1 --pool=mypool /tmp/my.txt #download the object

ll /tmp/my.txt

-rw-r--r-- 1 root root 465881 Sep 6 18:38 /tmp/my.txt
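Matching file sizes are a good sign, but comparing the contents confirms that the downloaded object is byte-identical to the upload. Below is a sketch; `verify_roundtrip` is a hypothetical helper. /var/log/syslog keeps growing, so a mismatch can simply mean new log lines arrived after the upload. A static file makes a cleaner test, and reading syslog needs root.

```shell
#!/usr/bin/env bash
# verify_roundtrip UPLOADED DOWNLOADED: succeed if both files are byte-identical.
verify_roundtrip() {
    local uploaded="$1" downloaded="$2"
    # cmp -s: silent, exit status only (0 = identical)
    if cmp -s "$uploaded" "$downloaded"; then
        echo "OK: ${downloaded} matches ${uploaded}"
    else
        echo "MISMATCH: ${downloaded} differs from ${uploaded}"
        return 1
    fi
}
```

From the root shell: `verify_roundtrip /var/log/syslog /tmp/my.txt`.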

sudo rados get msg1 --pool=mypool /tmp/my1.txt #download msg1 again after modifying it

ll /tmp/my1.txt

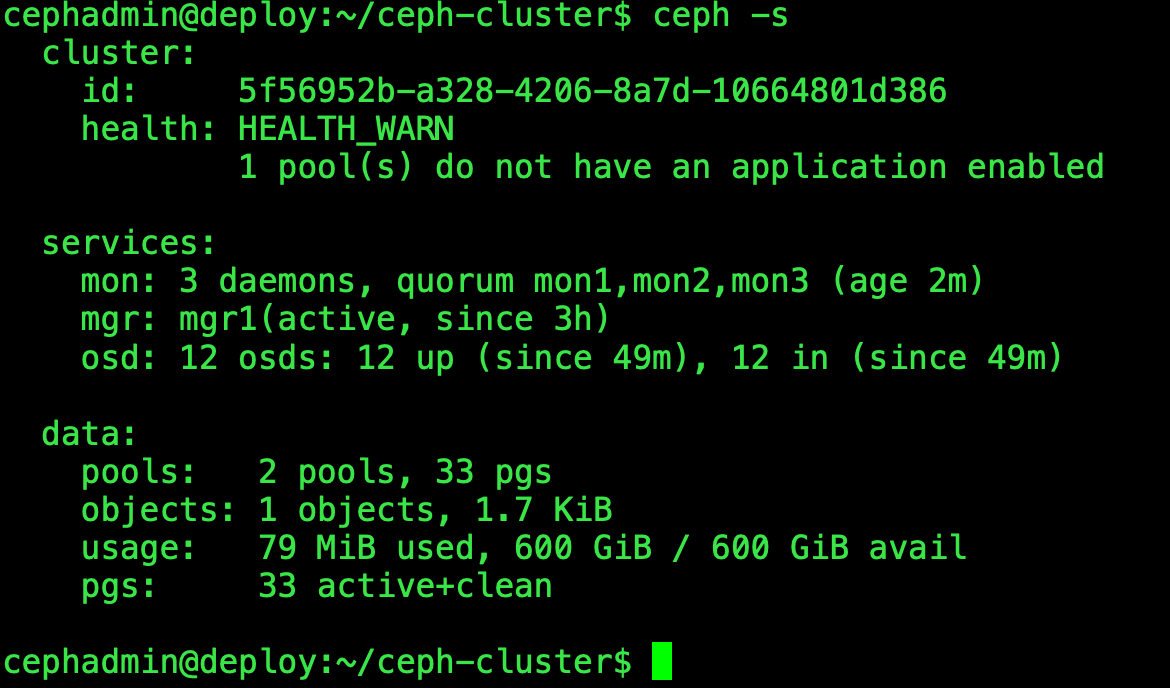

-rw-r--r-- 1 root root 1779 Sep 6 18:42 /tmp/my1.txt

Add more mons (so far there is only one).

On the mon servers:

sudo apt install ceph-mon

On the deploy server:

ceph-deploy mon add mon2

ceph-deploy mon add mon3

ceph -s now shows three mons.

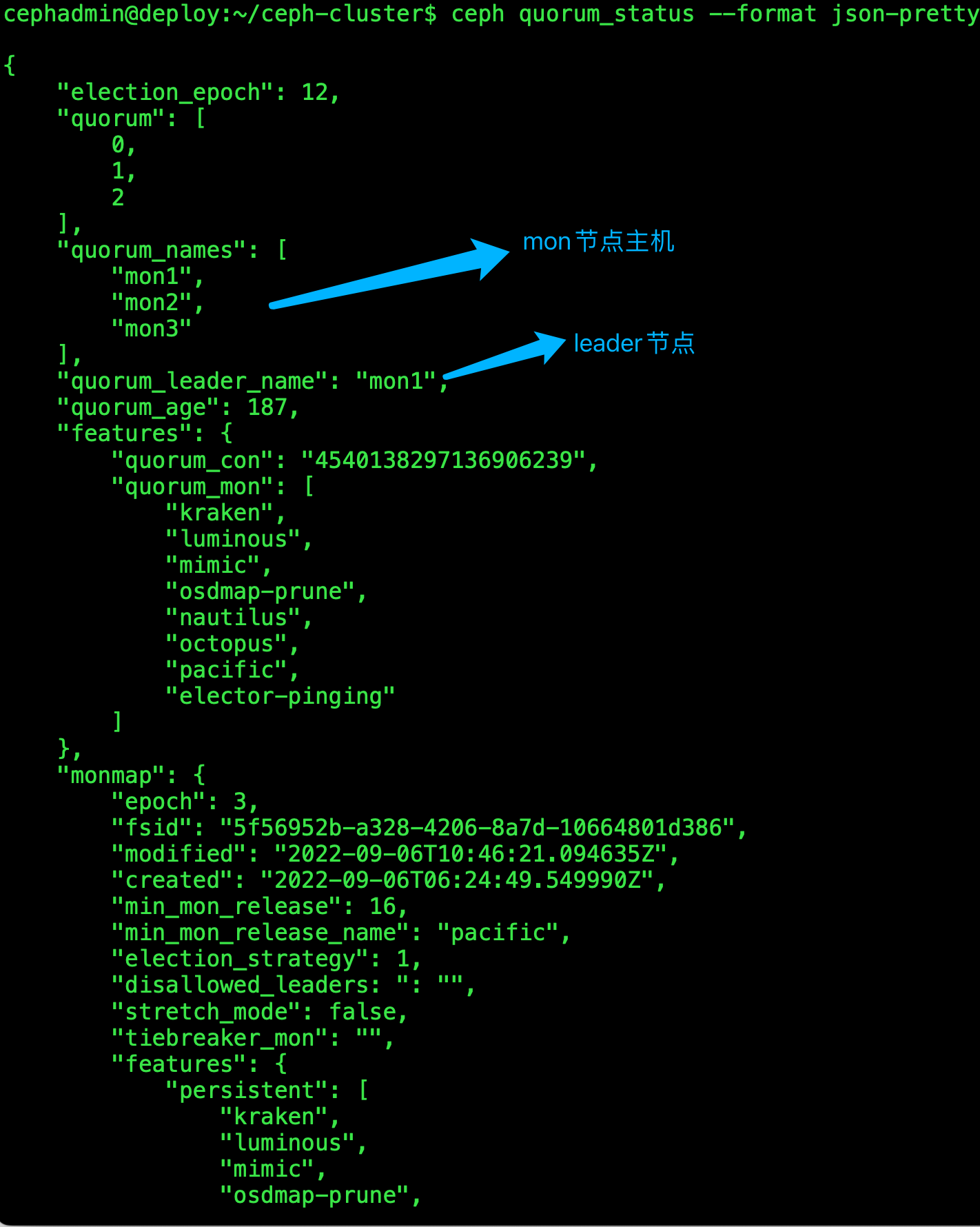

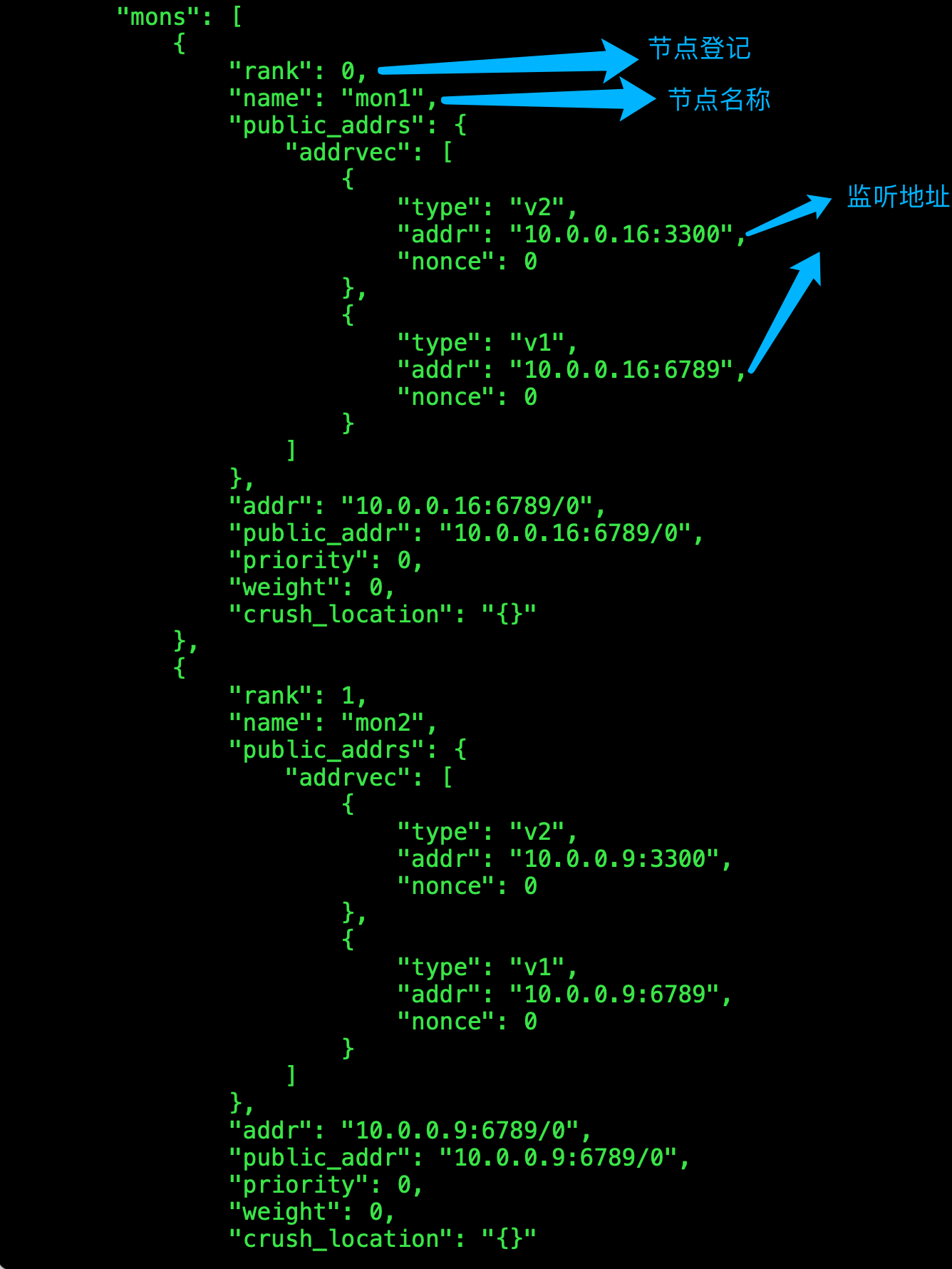

Check the ceph-mon status:

ceph quorum_status --format json-pretty
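json-pretty is easy to read, but a script only needs the number of monitors in `quorum_names`. Below is a minimal sketch that works on the compact `--format json` output, which keeps the array on one line. It uses plain grep to avoid extra dependencies; jq would be more robust if installed. `quorum_count` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# quorum_count: read compact `ceph quorum_status --format json` on stdin and
# print how many names the quorum_names array holds.
#   1st grep: isolate "quorum_names":[...]
#   2nd grep: split it into quoted strings; tail drops the key itself
quorum_count() {
    grep -o '"quorum_names": *\[[^]]*]' \
        | grep -o '"[^"]*"' \
        | tail -n +2 \
        | wc -l \
        | tr -d ' '
}
```

Usage: `[ "$(ceph quorum_status --format json | quorum_count)" = "3" ] && echo "all three mons in quorum"`.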

Add another mgr node

On the mgr server:

sudo apt install ceph-mgr

On the deploy server:

ceph-deploy mgr create mgr2

ceph-deploy admin mgr2 #push the config file and admin keyring to mgr2

ceph -s now shows two mgrs.

This completes the installation of the Ceph cluster.