Envoy Timeouts and Retries

Environment information

### Environment description

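##### Four services:

- envoy: the front proxy, at 172.31.65.10
- 3 backend services:
  - service_blue: maps to the blue_abort cluster in Envoy and carries an abort fault-injection configuration; address 172.31.65.5
  - service_red: maps to the red_delay cluster in Envoy and carries a delay fault-injection configuration; address 172.31.65.7
  - service_green: maps to the green cluster in Envoy; address 172.31.65.6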

##### The abort configuration used

```
http_filters:
- name: envoy.filters.http.fault
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault
    max_active_faults: 100
    abort:
      http_status: 503
      percentage:
        numerator: 50 # Inject an abort fault into half of the requests, so that retries can be observed on the routing side
        denominator: HUNDRED
```

##### The delay configuration used

```
http_filters:
- name: envoy.filters.http.fault
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault
    max_active_faults: 100
    delay:
      fixed_delay: 10s
      percentage:
        numerator: 50 # Inject a delay fault into half of the requests, so that timeouts can be observed on the routing side
        denominator: HUNDRED
```

##### Timeout and retry configuration

```
virtual_hosts:
- name: backend
  domains:
  - "*"
  routes:
  - match:
      prefix: "/service/blue"
    route:
      cluster: blue_abort
      retry_policy:
        retry_on: "5xx" # Retry when the response code is 5xx, up to 3 retries
        num_retries: 3
  - match:
      prefix: "/service/red"
    route:
      cluster: red_delay
      timeout: 1s # Timeout of 1 second; a request that takes longer is timed out
  - match:
      prefix: "/service/green"
    route:
      cluster: green
  - match:
      prefix: "/service/colors"
    route:
      cluster: mycluster
      retry_policy: # Timeout and retry policies used together
        retry_on: "5xx"
        num_retries: 3
      timeout: 1s
```
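Envoy evaluates the routes of a virtual host in order and uses the first one whose match applies. As a rough illustration only (this is not Envoy code), the prefix table above can be mimicked with a shell `case` statement. The function name `cluster_for_path` is made up for this sketch.

```shell
# Hypothetical helper: mimic the first-match prefix routing of the route table.
# Prints the cluster name a request path would be routed to, or "none".
cluster_for_path() {
    case "$1" in
        /service/blue*)   echo "blue_abort" ;;  # retried on 5xx, up to 3 times
        /service/red*)    echo "red_delay" ;;   # 1s route timeout
        /service/green*)  echo "green" ;;       # no explicit timeout or retry policy
        /service/colors*) echo "mycluster" ;;   # retry policy plus 1s timeout
        *)                echo "none" ;;        # no route matches
    esac
}

cluster_for_path /service/colors/index   # prints: mycluster
```

The order of the branches matters in the same way the order of the routes does: a broader prefix placed first would shadow the more specific ones after it.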

Startup

```
docker-compose up
```

```
# cat docker-compose.yaml
version: '3.3'

services:
  envoy:
    image: envoyproxy/envoy-alpine:v1.21-latest
    environment:
    - ENVOY_UID=0
    - ENVOY_GID=0
    volumes:
    - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.65.10
        aliases:
        - front-proxy
    expose:
    # Expose ports 80 (for general traffic) and 9901 (for the admin server)
    - "80"
    - "9901"

  service_blue:
    image: ikubernetes/servicemesh-app:latest
    volumes:
    - ./service-envoy-fault-injection-abort.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.65.5
        aliases:
        - service_blue
        - colored
    environment:
    - SERVICE_NAME=blue
    expose:
    - "80"

  service_green:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        ipv4_address: 172.31.65.6
        aliases:
        - service_green
        - colored
    environment:
    - SERVICE_NAME=green
    expose:
    - "80"

  service_red:
    image: ikubernetes/servicemesh-app:latest
    volumes:
    - ./service-envoy-fault-injection-delay.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.65.7
        aliases:
        - service_red
        - colored
    environment:
    - SERVICE_NAME=red
    expose:
    - "80"

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
      - subnet: 172.31.65.0/24
```

```
# cat front-envoy.yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: backend
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/service/blue"
                route:
                  cluster: blue_abort
                  retry_policy:
                    retry_on: "5xx"
                    num_retries: 3
              - match:
                  prefix: "/service/red"
                route:
                  cluster: red_delay
                  timeout: 1s
              - match:
                  prefix: "/service/green"
                route:
                  cluster: green
              - match:
                  prefix: "/service/colors"
                route:
                  cluster: mycluster
                  retry_policy:
                    retry_on: "5xx"
                    num_retries: 3
                  timeout: 1s
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: red_delay
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: red_delay
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_red
                port_value: 80

  - name: blue_abort
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: blue_abort
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_blue
                port_value: 80

  - name: green
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: green
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: service_green
                port_value: 80

  - name: mycluster
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: mycluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: colored
                port_value: 80
```
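The admin listener on port 9901 defined above can help confirm what the front proxy actually did with a request. A minimal sketch, assuming Envoy's per-cluster counters such as `upstream_rq_retry` and `upstream_rq_timeout` appear in the `/stats` output as `cluster.<name>.<counter>: <value>` lines. The helper name `retry_stats` is hypothetical.

```shell
# Hypothetical helper: keep only the retry and timeout counters of one cluster
# from Envoy admin "/stats" text read on stdin.
retry_stats() {
    grep -E "^cluster\.$1\.upstream_rq_(retry|timeout)"
}

# Assumed usage against the front proxy from this setup:
#   curl -s 172.31.65.10:9901/stats | retry_stats blue_abort
```

Comparing the counters before and after a test run shows how many retries and timeouts that run caused.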

```
# cat service-envoy-fault-injection-delay.yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: service
              domains: ["*"]
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: local_service
          http_filters:
          - name: envoy.filters.http.fault
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault
              max_active_faults: 100
              delay:
                fixed_delay: 10s
                percentage:
                  numerator: 50
                  denominator: HUNDRED
          - name: envoy.filters.http.router
            typed_config: {}

  clusters:
  - name: local_service
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: round_robin
    load_assignment:
      cluster_name: local_service
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 127.0.0.1
                port_value: 8080
```

```
# cat service-envoy-fault-injection-abort.yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: service
              domains: ["*"]
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: local_service
          http_filters:
          - name: envoy.filters.http.fault
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault
              max_active_faults: 100
              abort:
                http_status: 503
                percentage:
                  numerator: 50
                  denominator: HUNDRED
          - name: envoy.filters.http.router
            typed_config: {}

  clusters:
  - name: local_service
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: round_robin
    load_assignment:
      cluster_name: local_service
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 127.0.0.1
                port_value: 8080
```

```
# cat service-envoy.yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

layered_runtime:
  layers:
  - name: admin
    admin_layer: {}

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: service
              domains: ["*"]
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: local_service
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: local_service
    connect_timeout: 0.25s
    type: strict_dns
    lb_policy: round_robin
    load_assignment:
      cluster_name: local_service
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: 127.0.0.1
                port_value: 8080
```

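```
# cat send-requests.sh
#!/bin/bash
#
# Send COUNT GET requests to URL and print the HTTP status code of each response.
if [ $# -ne 2 ]
then
    echo "USAGE: $0 <URL> <COUNT>"
    exit 1;
fi

URL=$1
COUNT=$2
c=1
#interval="0.2"

while [[ ${c} -le ${COUNT} ]];
do
    #echo "Sending GET request: ${URL}"
    # Discard the body (-o /dev/null) and print only the status code (-w)
    curl -o /dev/null -w '%{http_code}\n' -s "${URL}"
    (( c++ ))
    # sleep $interval
done
```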

# cat curl_format.txt

time_namelookup: %{time_namelookup}\n

time_connect: %{time_connect}\n

time_appconnect: %{time_appconnect}\n

time_pretransfer: %{time_pretransfer}\n

time_redirect: %{time_redirect}\n

time_starttransfer: %{time_starttransfer}\n

----------\n

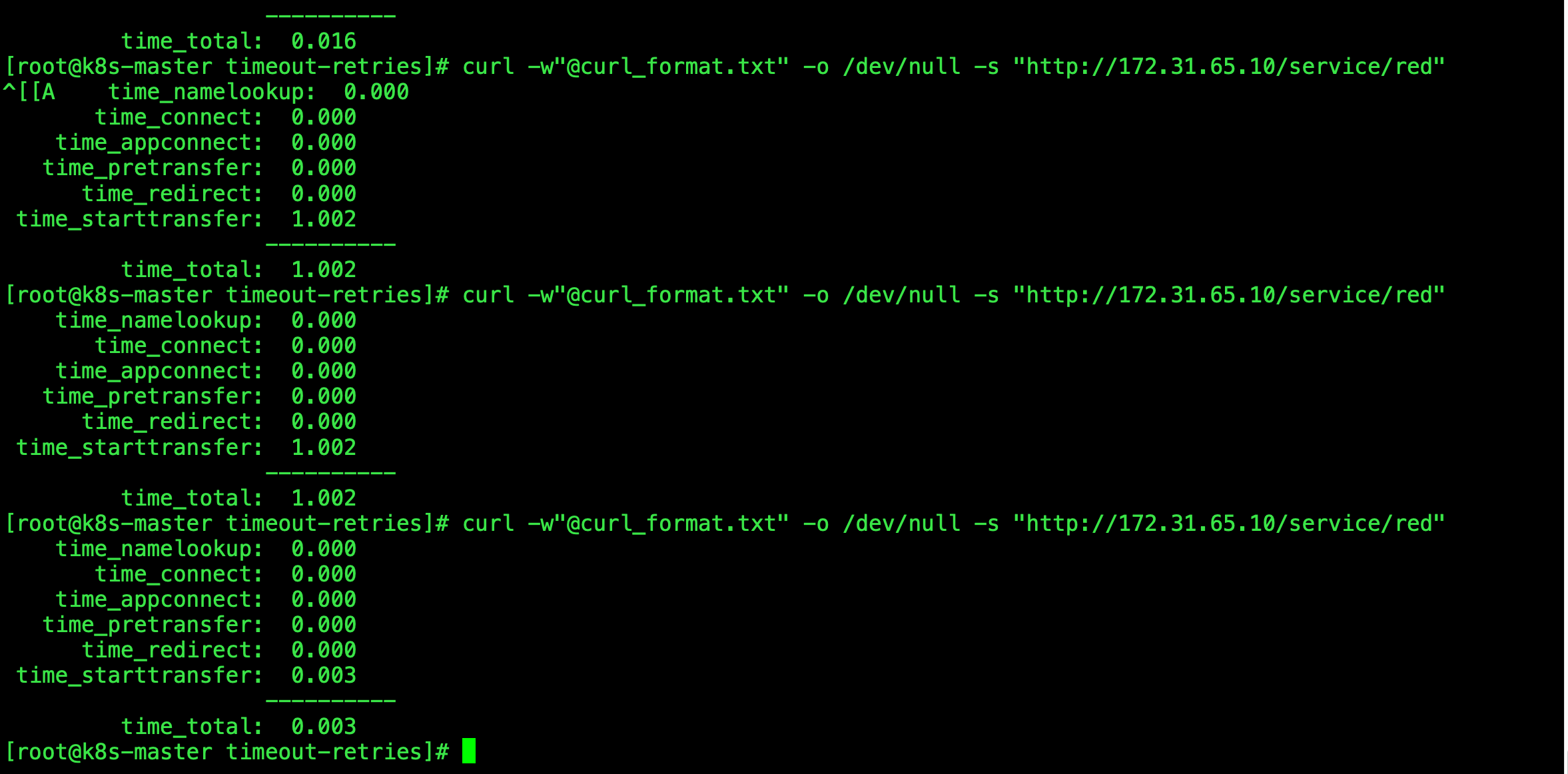

time_total: %{time_total}\n测试注入的delay故障

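Send repeated requests to /service/red. Requests that have a delay injected take noticeably longer to respond; because the route sets a 1s timeout, the front proxy gives up on them after about one second rather than waiting out the full 10s delay.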

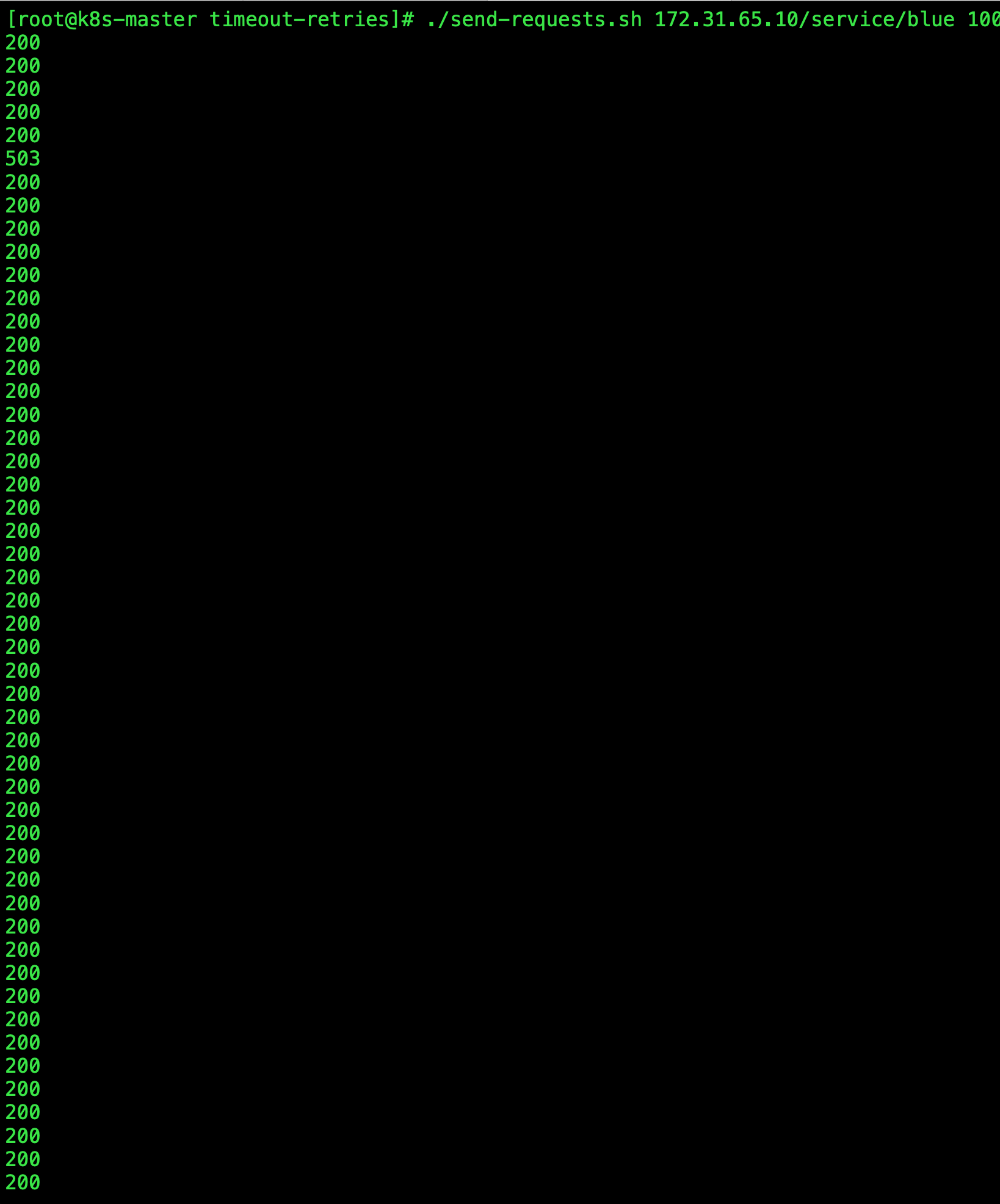
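To quantify the delayed share rather than eyeballing it, the status code and total time of each request can be collected and counted. A minimal sketch: `count_slow` is a hypothetical helper, the 1-second threshold is taken from the route timeout, and the input format (`<http_code> <time_total>` per line) is an assumption that matches the `curl -w` call shown in the comment.

```shell
# Hypothetical helper: read "<http_code> <time_total>" lines on stdin and
# print how many requests took at least THRESHOLD seconds (default 1).
count_slow() {
    awk -v limit="${1:-1}" '$2 + 0 >= limit + 0 { slow++ } END { print slow + 0, "of", NR }'
}

# Assumed usage with the front proxy from this setup:
#   for i in $(seq 1 20); do
#     curl -s -o /dev/null -w '%{http_code} %{time_total}\n' 172.31.65.10/service/red
#   done | count_slow 1
```

With a 50% delay injection, roughly half of the requests would be expected to land in the slow group, though the exact count varies from run to run.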

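Testing the injected abort fault

```
./send-requests.sh 172.31.65.10/service/blue 100
```

Send repeated requests to /service/blue. When the backend's Envoy injects an abort, the resulting 503 response triggers the retry policy configured on the route. Even with up to 3 retries, a request can still end in an error if every attempt is aborted, but the proportion of failed requests drops sharply.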

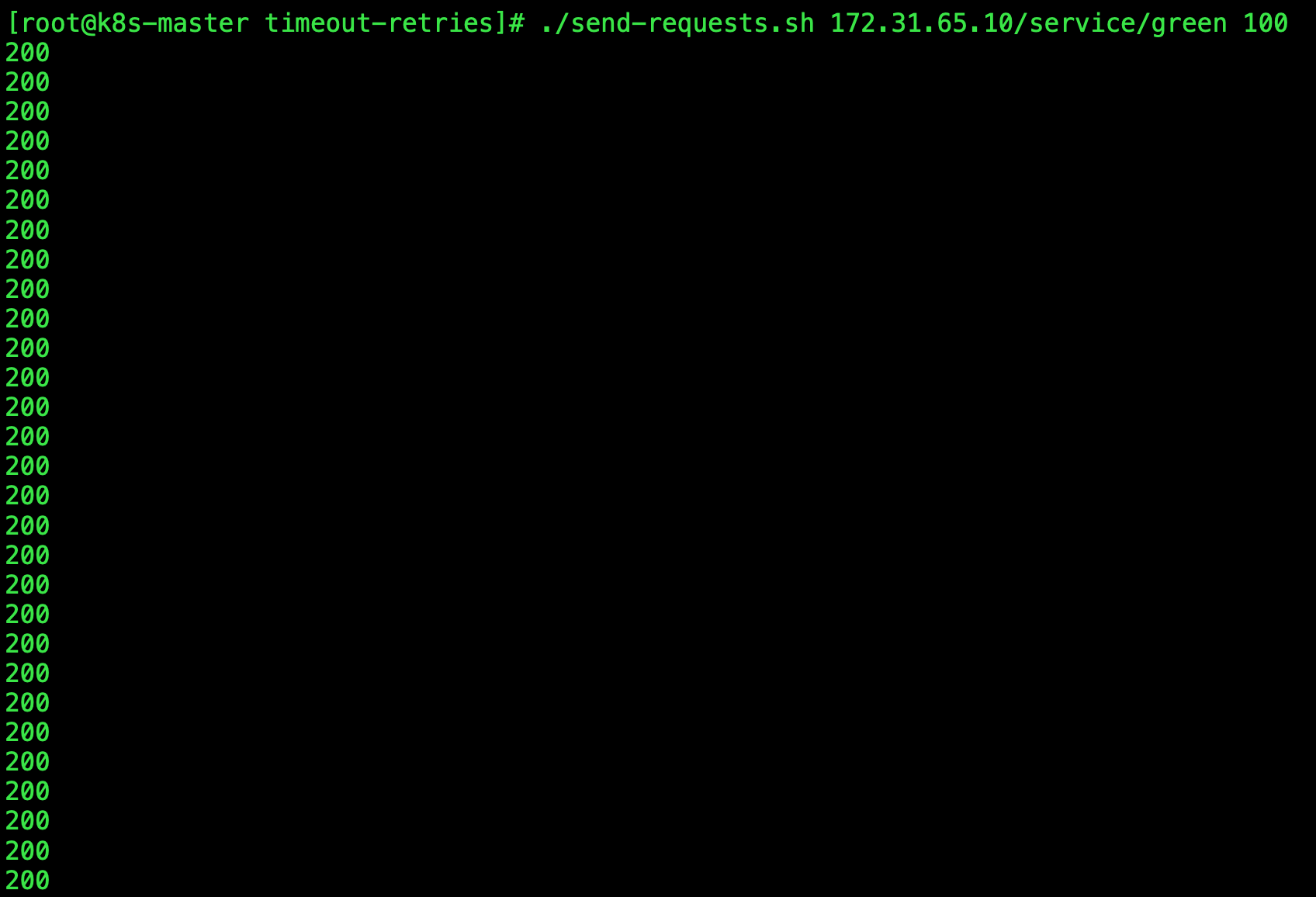
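How much the retries help can be estimated with a short calculation. Assume each attempt is aborted independently with probability $p = 0.5$ (the configured 50%), and that nothing else, such as circuit breakers or retry budgets, cuts the retries short. A request then fails only if the original attempt and all $n = 3$ retries are aborted:

$$
P(\text{fail}) = p^{\,n+1} = 0.5^{4} = 0.0625
$$

Under these assumptions, roughly 6 of the 100 requests would still be expected to return 503, compared with about 50 without the retry policy.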

Requests to /service/green almost all receive a normal response, because no fault is injected on that backend.

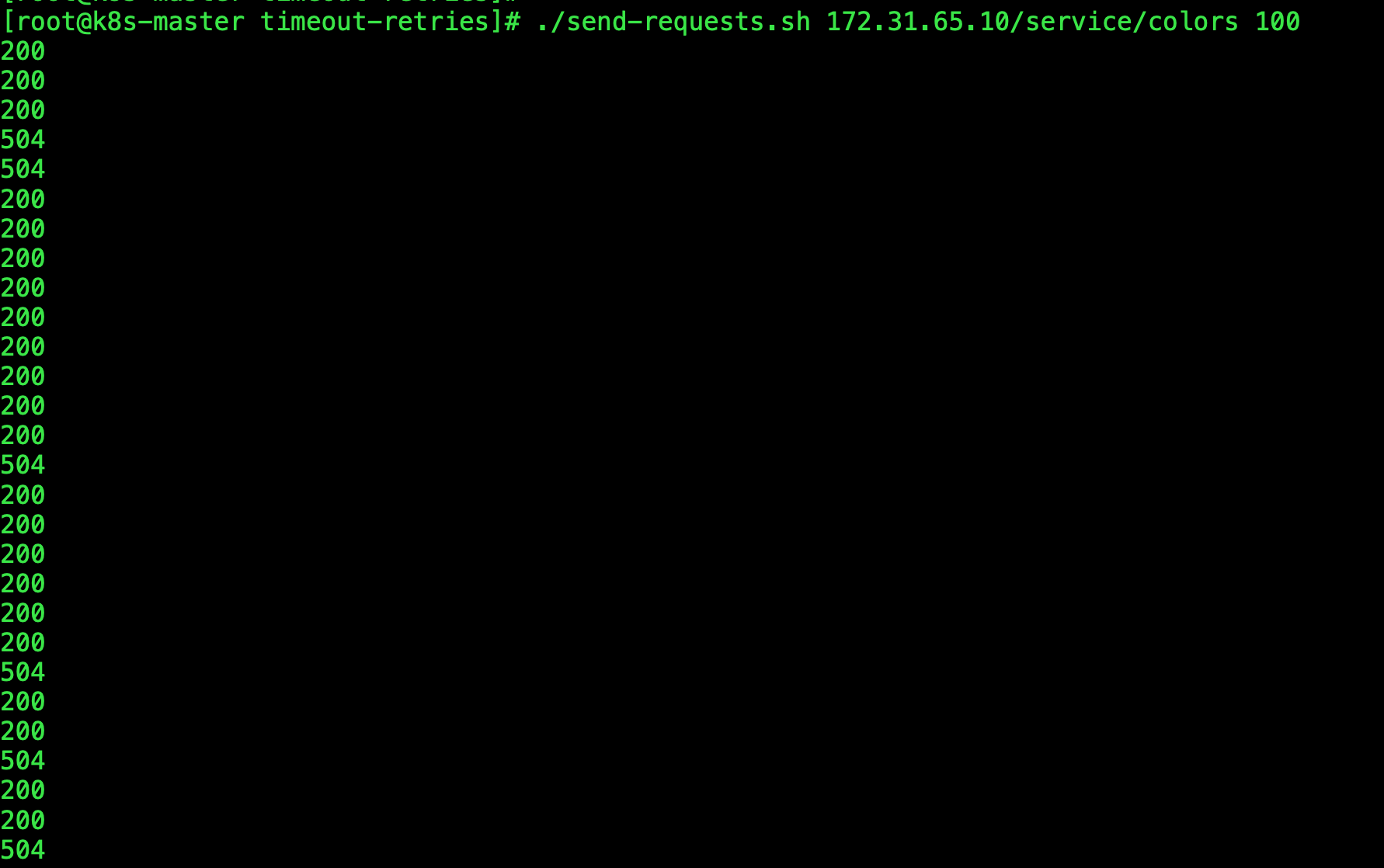

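Requests to /service/colors go to mycluster, whose DNS name colored resolves to all three backends (the same services that sit behind the red_delay, blue_abort and green clusters), so some requests may be delayed and some may be aborted.

```
# A 504 response code is caused by the upstream request timing out
./send-requests.sh 172.31.65.10/service/colors 100
```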
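Because send-requests.sh prints one status code per line, its output can be summarized directly. A minimal sketch; `tally_codes` is a hypothetical helper and not part of the original scripts.

```shell
# Hypothetical helper: count how often each HTTP status code appears
# in a stream of one code per line (the output format of send-requests.sh).
tally_codes() {
    awk 'NF { count[$1]++ } END { for (code in count) print code, count[code] }' | sort
}

# Assumed usage:
#   ./send-requests.sh 172.31.65.10/service/colors 100 | tally_codes
```

For /service/colors the summary would typically show mostly 200, with some 504 from timed-out requests and an occasional 503 when every retry was aborted; the exact counts vary per run.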