Envoy路由配置负载均衡子集

启动

docker-compose upcat docker-compose.yaml

# Author: MageEdu <mage@magedu.com>

# Version: v1.0.2

# Site: www.magedu.com

#

version: '3'

services:

front-envoy:

image: envoyproxy/envoy-alpine:v1.21-latest

environment:

- ENVOY_UID=0

- ENVOY_GID=0

volumes:

- ./front-envoy.yaml:/etc/envoy/envoy.yaml

networks:

envoymesh:

ipv4_address: 172.31.33.2

expose:

# Expose ports 80 (for general traffic) and 9901 (for the admin server)

- "80"

- "9901"

e1:

image: ikubernetes/demoapp:v1.0

hostname: e1

networks:

envoymesh:

ipv4_address: 172.31.33.11

aliases:

- e1

expose:

- "80"

e2:

image: ikubernetes/demoapp:v1.0

hostname: e2

networks:

envoymesh:

ipv4_address: 172.31.33.12

aliases:

- e2

expose:

- "80"

e3:

image: ikubernetes/demoapp:v1.0

hostname: e3

networks:

envoymesh:

ipv4_address: 172.31.33.13

aliases:

- e3

expose:

- "80"

e4:

image: ikubernetes/demoapp:v1.0

hostname: e4

networks:

envoymesh:

ipv4_address: 172.31.33.14

aliases:

- e4

expose:

- "80"

e5:

image: ikubernetes/demoapp:v1.0

hostname: e5

networks:

envoymesh:

ipv4_address: 172.31.33.15

aliases:

- e5

expose:

- "80"

e6:

image: ikubernetes/demoapp:v1.0

hostname: e6

networks:

envoymesh:

ipv4_address: 172.31.33.16

aliases:

- e6

expose:

- "80"

e7:

image: ikubernetes/demoapp:v1.0

hostname: e7

networks:

envoymesh:

ipv4_address: 172.31.33.17

aliases:

- e7

expose:

- "80"

networks:

envoymesh:

driver: bridge

ipam:

config:

- subnet: 172.31.33.0/24

cat front-envoy.yaml

# Author: MageEdu <mage@magedu.com>

# Version: v1.0.2

# Site: www.magedu.com

#

admin:

access_log_path: "/dev/null"

address:

socket_address: { address: 0.0.0.0, port_value: 9901 }

static_resources:

listeners:

- address:

socket_address: { address: 0.0.0.0, port_value: 80 }

name: listener_http

filter_chains:

- filters:

- name: envoy.filters.network.http_connection_manager

typed_config:

"@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager

codec_type: auto

stat_prefix: ingress_http

route_config:

name: local_route

virtual_hosts:

- name: backend

domains:

- "*"

routes:

- match:

prefix: "/"

headers:

- name: x-custom-version

exact_match: pre-release

route:

cluster: webcluster1

metadata_match:

filter_metadata:

envoy.lb:

version: "1.2-pre"

stage: "dev"

- match:

prefix: "/"

headers:

- name: x-hardware-test

exact_match: memory

route:

cluster: webcluster1

metadata_match:

filter_metadata:

envoy.lb:

type: "bigmem"

stage: "prod"

- match:

prefix: "/"

route:

weighted_clusters:

clusters:

- name: webcluster1

weight: 90

metadata_match:

filter_metadata:

envoy.lb:

version: "1.0"

- name: webcluster1

weight: 10

metadata_match:

filter_metadata:

envoy.lb:

version: "1.1"

metadata_match:

filter_metadata:

envoy.lb:

stage: "prod"

http_filters:

- name: envoy.filters.http.router

clusters:

- name: webcluster1

connect_timeout: 0.5s

type: STRICT_DNS

lb_policy: ROUND_ROBIN

load_assignment:

cluster_name: webcluster1

endpoints:

- lb_endpoints:

- endpoint:

address:

socket_address:

address: e1

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "prod"

version: "1.0"

type: "std"

xlarge: true

- endpoint:

address:

socket_address:

address: e2

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "prod"

version: "1.0"

type: "std"

- endpoint:

address:

socket_address:

address: e3

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "prod"

version: "1.1"

type: "std"

- endpoint:

address:

socket_address:

address: e4

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "prod"

version: "1.1"

type: "std"

- endpoint:

address:

socket_address:

address: e5

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "prod"

version: "1.0"

type: "bigmem"

- endpoint:

address:

socket_address:

address: e6

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "prod"

version: "1.1"

type: "bigmem"

- endpoint:

address:

socket_address:

address: e7

port_value: 80

metadata:

filter_metadata:

envoy.lb:

stage: "dev"

version: "1.2-pre"

type: "std"

lb_subset_config:

fallback_policy: DEFAULT_SUBSET

default_subset:

stage: "prod"

version: "1.0"

type: "std"

subset_selectors:

- keys: ["stage", "type"]

- keys: ["stage", "version"]

- keys: ["version"]

- keys: ["xlarge", "version"]

health_checks:

- timeout: 5s

interval: 10s

unhealthy_threshold: 2

healthy_threshold: 1

http_health_check:

path: /livez

expected_statuses:

start: 200

end: 399

cat test.sh

#!/bin/bash

declare -i v10=0

declare -i v11=0

for ((counts=0; counts<200; counts++)); do

if curl -s http://$1/hostname | grep -E "e[125]" &> /dev/null; then

# $1 is the host address of the front-envoy.

v10=$[$v10+1]

else

v11=$[$v11+1]

fi

done

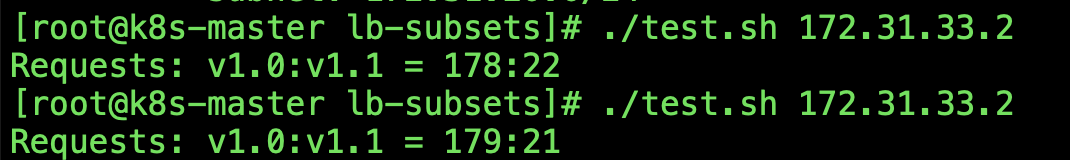

echo "Requests: v1.0:v1.1 = $v10:$v11"test.sh脚本接受front-envoy的地址,并持续向该地址发起请求,而后显示流量分配的结果;根据路由规则,未指定x-hardware-test和x-custom-version且给予了相应值的请求,均会调度给默认子集,且在两个组之间进行流量分发;

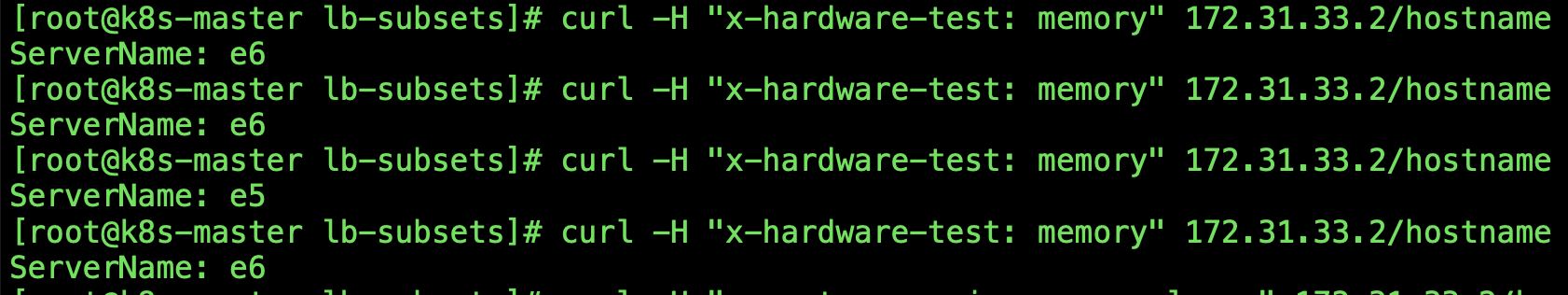

我们可以指定特殊的首部发出特定的请求,例如附带有”x-hardware-test: memory”的请求,将会被分发至特定的子集;该子集要求标签type的值为bigmem,而标签stage的值为prod;该子集共有e5和e6两个端点

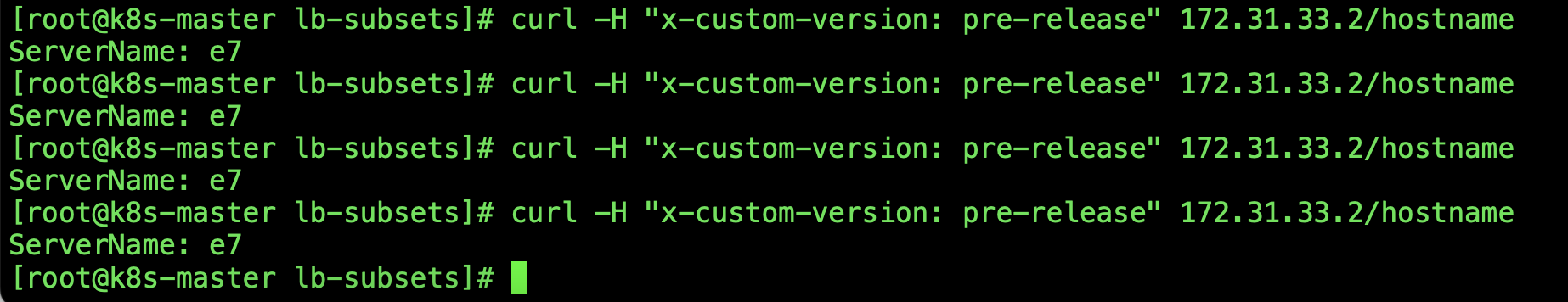

或者,我们也可以指定特殊的首部发出特定的请求,例如附带有”x-custom-version: pre-release”的请求,将会被分发至特定的子集;该子集要求标签version的值为1.2-pre,而标签stage的值为dev;该子集有e7一个端点;

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· Manus爆火,是硬核还是营销?

· 一文读懂知识蒸馏

· 终于写完轮子一部分:tcp代理 了,记录一下