
1. Download Kafka 2.0.1 from the Kafka website

wget https://archive.apache.org/dist/kafka/2.0.1/kafka_2.11-2.0.1.tgz

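2. Download ZooKeeper 3.7.2 from the ZooKeeper website

wget https://dlcdn.apache.org/zookeeper/zookeeper-3.7.2/apache-zookeeper-3.7.2-bin.tar.gz

# dlcdn.apache.org only carries current releases; if this link stops working, the same file is kept under https://archive.apache.org/dist/zookeeper/zookeeper-3.7.2/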

3. Configure the JDK environment

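# I configured it globally here; you could instead set it per user (e.g. in that user's ~/.bash_profile) for whichever account runs the services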

vi /etc/profile

export JAVA_HOME=/usr/local/soft/jdk64/jdk-1.8.0_352

export PATH=$JAVA_HOME/bin:$PATH
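Before moving on, it can help to confirm that the JDK from this step is actually usable. A minimal sketch; `check_java_home` is a hypothetical name, and it only checks that `$JAVA_HOME/bin/java` exists and is executable.

```shell
# Hypothetical helper: sanity-check the JDK configured in /etc/profile.
# Takes JAVA_HOME as $1, or falls back to the exported JAVA_HOME.
check_java_home() {
  local home="${1:-${JAVA_HOME:-}}"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  # The java launcher must exist and be executable under $JAVA_HOME/bin
  if [ ! -x "$home/bin/java" ]; then
    echo "no executable java under $home/bin" >&2
    return 1
  fi
  echo "using $home/bin/java"
}
```

Typical use: `source /etc/profile && check_java_home && java -version`.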

4. Install ZooKeeper

tar -zxf apache-zookeeper-3.7.2-bin.tar.gz -C /usr/local/soft

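# A standalone install needs almost no configuration: copy conf/zoo_sample.cfg to zoo.cfg and change the data directory

cd /usr/local/soft/apache-zookeeper-3.7.2-bin/conf && cp zoo_sample.cfg zoo.cfg

vi zoo.cfg

# /tmp/zookeeper is the sample default; zoo_sample.cfg itself warns against using /tmp for real storage, so choose a persistent path if the data matters

dataDir=/tmp/zookeeper

/usr/local/soft/apache-zookeeper-3.7.2-bin/bin/zkServer.sh start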
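The copy-and-edit step above can be made repeatable with a small function. A sketch assuming GNU sed (as on CentOS/RHEL); `setup_zk_config` is a hypothetical name, and the data directory is whatever you pass in.

```shell
# Hypothetical helper: create zoo.cfg from the shipped sample and set dataDir.
# Assumes the data directory path contains no '|' or '&' (sed special characters here).
setup_zk_config() {
  local zk_home="$1" data_dir="$2"
  local conf="$zk_home/conf"
  # Start from the sample file that ships with ZooKeeper
  cp "$conf/zoo_sample.cfg" "$conf/zoo.cfg"
  # '|' as the sed delimiter because the path contains '/'
  sed -i "s|^dataDir=.*|dataDir=${data_dir}|" "$conf/zoo.cfg"
  mkdir -p "$data_dir"
}
```

After `setup_zk_config /usr/local/soft/apache-zookeeper-3.7.2-bin <data dir>`, start the server with `bin/zkServer.sh start` and check it with `bin/zkServer.sh status`.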

5. Install Kafka

tar -zxf kafka_2.11-2.0.1.tgz -C /usr/local/soft

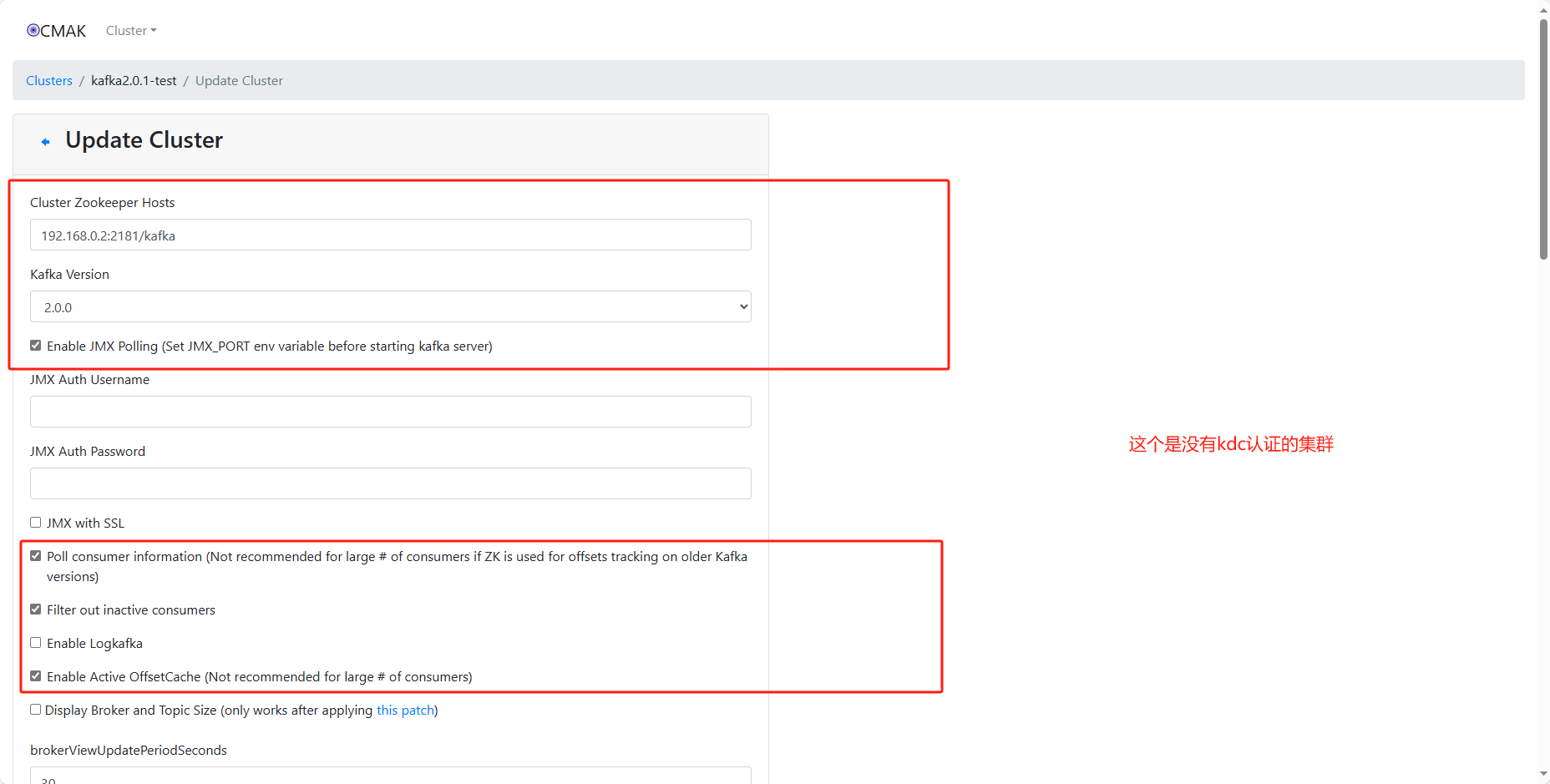

# In the Kafka configuration below I use two ports: 9092, which needs no authentication, and 9091, which requires Kerberos (KDC) authentication

vi config/server.properties

broker.id=0

listeners=PLAINTEXT://zyp.com:9092,SASL_PLAINTEXT://zyp.com:9091

advertised.listeners=PLAINTEXT://:9092,SASL_PLAINTEXT://:9091

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=GSSAPI

sasl.enabled.mechanisms=GSSAPI

sasl.kerberos.service.name=kafka

zookeeper.connect=localhost:2181/kafka
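Editing server.properties in vi works fine; if you redo this setup often, a helper that sets one key at a time can save effort. A minimal sketch assuming plain `key=value` lines; `set_prop` is a hypothetical name, and values must not contain backslashes because `awk -v` interprets escape sequences.

```shell
# Hypothetical helper: set key=value in a .properties file.
# The first uncommented "key=" line is replaced, later duplicates are dropped,
# commented lines such as "#key=..." are left alone, and the key is appended if missing.
set_prop() {
  local key="$1" value="$2" file="$3"
  local tmp
  tmp="$(mktemp)"
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { if (!done) print k "=" v; done = 1; next }
    { print }
    END { if (!done) print k "=" v }
  ' "$file" > "$tmp"
  # Copy back through the original file so its owner and permissions are kept
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example with the values from this post:
#   cfg=/usr/local/soft/kafka_2.11-2.0.1/config/server.properties
#   set_prop listeners 'PLAINTEXT://zyp.com:9092,SASL_PLAINTEXT://zyp.com:9091' "$cfg"
#   set_prop security.inter.broker.protocol SASL_PLAINTEXT "$cfg"
#   set_prop zookeeper.connect localhost:2181/kafka "$cfg"
```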

Next, create a JAAS file for the Kerberos-authenticated listener

vi config/kafka-server-jaas.conf

KafkaServer {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/etc/security/keytabs/kafka.keytab"

principal="kafka/zyp.com@KAFKA.COM";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/etc/security/keytabs/kafka.keytab"

principal="kafka/zyp.com@KAFKA.COM";

};
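Since both sections repeat the same keytab and principal, the file can also be generated from variables so those values are written only once. A sketch; `write_kafka_jaas` is a hypothetical name, and its defaults are the keytab and principal used in this post.

```shell
# Hypothetical helper: write the broker JAAS file with the KafkaServer and
# KafkaClient sections shown above.
write_kafka_jaas() {
  local out="$1"
  local keytab="${2:-/etc/security/keytabs/kafka.keytab}"
  local principal="${3:-kafka/zyp.com@KAFKA.COM}"
  local section
  : > "$out"   # start from an empty file
  # KafkaServer is read by the broker; KafkaClient by Kafka clients whose JVM points at this file
  for section in KafkaServer KafkaClient; do
    cat >> "$out" <<EOF
${section} {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  storeKey=true
  keyTab="${keytab}"
  principal="${principal}";
};
EOF
  done
}

# Example:
#   write_kafka_jaas /usr/local/soft/kafka_2.11-2.0.1/config/kafka-server-jaas.conf
```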

# Create a symbolic link

mkdir -m 755 -p /etc/kafka/conf && ln -s /usr/local/soft/kafka_2.11-2.0.1/config/kafka-server-jaas.conf /etc/kafka/conf/kafka-jaas.conf

# Pass the Kerberos settings to Kafka at startup and enable the JMX metrics port (8096); put these lines near the top of the start script

vi bin/kafka-server-start.sh

export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djavax.security.auth.useSubjectCredsOnly=false -Djava.security.auth.login.config=/etc/kafka/conf/kafka-jaas.conf"

export KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=zyp.com -Dcom.sun.management.jmxremote.rmi.port=8096 -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=8096"
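Instead of editing bin/kafka-server-start.sh, the same variables can be exported by a small wrapper, which survives re-extracting or upgrading Kafka. This works because kafka-server-start.sh hands off to kafka-run-class.sh, which puts `$KAFKA_OPTS` and `$KAFKA_JMX_OPTS` on the java command line. A sketch; `start_kafka_kerberos` is a hypothetical name and `KAFKA_HOME` defaults to the install path from this post.

```shell
# Hypothetical wrapper: start the broker with the Kerberos and JMX options
# from the environment, leaving bin/kafka-server-start.sh untouched.
start_kafka_kerberos() {
  local kafka_home="${KAFKA_HOME:-/usr/local/soft/kafka_2.11-2.0.1}"
  export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djavax.security.auth.useSubjectCredsOnly=false -Djava.security.auth.login.config=/etc/kafka/conf/kafka-jaas.conf"
  export KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=zyp.com -Dcom.sun.management.jmxremote.rmi.port=8096 -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.port=8096"
  # Options such as -daemon must come before the properties file
  exec "$kafka_home/bin/kafka-server-start.sh" "$@" "$kafka_home/config/server.properties"
}
```

`start_kafka_kerberos -daemon` runs the broker in the background; without `-daemon` it stays in the foreground so startup errors are visible.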

That completes the Kafka configuration. Don't start it yet, though: Kerberos still has to be installed.

6. Install Kerberos

yum install -y krb5-libs krb5-workstation krb5-server krb5-devel

# Edit /etc/krb5.conf

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

# renew_lifetime = 7d   Comment this setting out, otherwise Java fails with KrbException: Message stream modified (41)

forwardable = true

rdns = false

pkinit_anchors = FILE:/etc/pki/tls/certs/ca-bundle.crt

default_realm = KAFKA.COM

# default_ccache_name = KEYRING:persistent:%{uid}   Comment this out too, otherwise klist reports an error about the system user's uid

[realms]

KAFKA.COM = {

kdc = zyp.com

admin_server = zyp.com

}

[domain_realm]

.zyp.com = KAFKA.COM

zyp.com = KAFKA.COM
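If you would rather not comment out the two [libdefaults] entries by hand (for example when preparing several hosts), sed can do it. A sketch assuming GNU sed as shipped on CentOS/RHEL; `comment_out_krb5_opts` is a hypothetical name, and a `.bak` copy of the file is kept.

```shell
# Hypothetical helper: comment out renew_lifetime and default_ccache_name in krb5.conf.
# Only still-active lines (optional leading whitespace, then the key) are touched,
# so running it twice does not add a second '#'.
comment_out_krb5_opts() {
  local conf="${1:-/etc/krb5.conf}"
  sed -i.bak -E 's/^([[:space:]]*)(renew_lifetime|default_ccache_name)([[:space:]]*=)/\1# \2\3/' "$conf"
}
```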

# Configure the KDC admin principals (each line is a principal followed by its permissions; * grants all)

vi /var/kerberos/krb5kdc/kadm5.acl

*/admin@KAFKA.COM *

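# Initialize the KDC database (-s stores the master key in a stash file so the KDC can start without prompting for it)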

kdb5_util create -r KAFKA.COM -s

# Start the services

systemctl start krb5kdc

systemctl start kadmin

kadmin.local

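# Create an admin principal

addprinc root/admin@KAFKA.COM # note down the password you enter

# Create the kafka service principal

addprinc kafka/zyp.com@KAFKA.COM # note down the password you enter

# Export the kafka principal's keys to a keytab (create /etc/security/keytabs beforehand; ktadd also generates new random keys, so the password entered above stops working)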

ktadd -k /etc/security/keytabs/kafka.keytab kafka/zyp.com@KAFKA.COM
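The interactive kadmin.local session can also be scripted with `kadmin.local -q`. A sketch with two choices that differ from the steps above: the service principal is created with `-randkey` (Kafka only authenticates from the keytab, and ktadd replaces the keys anyway), and the keytab is made readable by its owner only, assuming Kafka runs as the same user (root here). `create_kafka_keytab` is a hypothetical name; `KADMIN` can point at a stub for a dry run.

```shell
# Hypothetical helper: non-interactive version of the kadmin.local steps for the Kafka principal.
create_kafka_keytab() {
  local principal="${1:-kafka/zyp.com@KAFKA.COM}"
  local keytab="${2:-/etc/security/keytabs/kafka.keytab}"
  local kadmin="${KADMIN:-kadmin.local}"
  # ktadd cannot create the keytab if its directory is missing
  mkdir -p "$(dirname "$keytab")"
  # -randkey: no password is set, since the keytab is the only credential Kafka uses
  "$kadmin" -q "addprinc -randkey ${principal}"
  "$kadmin" -q "ktadd -k ${keytab} ${principal}"
  # A keytab works like a password: keep it readable by its owner only
  chmod 400 "$keytab"
}

# Example (as root on the KDC host):
#   create_kafka_keytab
#   kinit -kt /etc/security/keytabs/kafka.keytab kafka/zyp.com@KAFKA.COM && klist
```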

# Leave kadmin.local (quit), then obtain a ticket from the keytab to check that everything works

kinit -kt /etc/security/keytabs/kafka.keytab kafka/zyp.com@KAFKA.COM

klist

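# If that works, restrict the keytab's permissions so only the user running Kafka can read it, then start Kafka

/usr/local/soft/kafka_2.11-2.0.1/bin/kafka-server-start.sh /usr/local/soft/kafka_2.11-2.0.1/config/server.properties # watch the output for startup errors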
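To confirm that the Kerberos listener on 9091 really accepts clients, the console tools need client settings that mirror the broker's. A sketch; `write_kerberos_client_props`, the file `/tmp/client.properties` and the topic name `test` are hypothetical. The tools also need the JAAS file (its KafkaClient section) and krb5.conf in `KAFKA_OPTS`, because the exports above only apply to kafka-server-start.sh.

```shell
# Hypothetical helper: client settings for the SASL_PLAINTEXT/GSSAPI listener on port 9091.
write_kerberos_client_props() {
  local out="$1"
  cat > "$out" <<'EOF'
security.protocol=SASL_PLAINTEXT
sasl.mechanism=GSSAPI
sasl.kerberos.service.name=kafka
EOF
}

# Example run from /usr/local/soft/kafka_2.11-2.0.1 (Kafka 2.0.1 tool options):
#   write_kerberos_client_props /tmp/client.properties
#   export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/etc/kafka/conf/kafka-jaas.conf"
#   bin/kafka-topics.sh --zookeeper localhost:2181/kafka --create --topic test --partitions 1 --replication-factor 1
#   bin/kafka-console-producer.sh --broker-list zyp.com:9091 --topic test --producer.config /tmp/client.properties
#   bin/kafka-console-consumer.sh --bootstrap-server zyp.com:9091 --topic test --from-beginning --consumer.config /tmp/client.properties
```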

7. Deploy CMAK

# First download CMAK from GitHub

wget https://github.com/yahoo/CMAK/releases/download/3.0.0.6/cmak-3.0.0.6.zip

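# Inside the directory extracted from cmak-3.0.0.6.zip (it must contain bin/ and conf/, because the Dockerfile copies it to /app/cmak), create a Dockerfile with the following content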

vi dockerfile

FROM openjdk:11

RUN mkdir -p /app/cmak

WORKDIR /app/cmak

COPY . /app/cmak

CMD ["/app/cmak/bin/cmak","-Dconfig.file=/app/cmak/conf/application.conf","-Dapplication.home=/app/cmak","-Dhttp.port=9000"]

# Edit conf/application.conf

# Copyright 2015 Yahoo Inc. Licensed under the Apache License, Version 2.0

# See accompanying LICENSE file.

# This is the main configuration file for the application.

# ~~~~~

# Secret key

# ~~~~~

# The secret key is used to secure cryptographics functions.

# If you deploy your application to several instances be sure to use the same key!

play.crypto.secret="^<csmm5Fx4d=r2HEX8pelM3iBkFVv?k[mc;IZE<_Qoq8EkX_/7@Zt6dP05Pzea3U"

#play.crypto.secret=${?APPLICATION_SECRET}

play.http.session.maxAge="1h"

# The application languages

# ~~~~~

play.i18n.langs=["en"]

play.http.requestHandler = "play.http.DefaultHttpRequestHandler"

play.http.context = "/"

play.application.loader=loader.KafkaManagerLoader

# Settings prefixed with 'kafka-manager.' will be deprecated, use 'cmak.' instead.

# https://github.com/yahoo/CMAK/issues/713

kafka-manager.zkhosts="192.168.0.2:2181"

#kafka-manager.zkhosts=${?ZK_HOSTS}

cmak.zkhosts="192.168.0.2:2181"

#cmak.zkhosts=${?ZK_HOSTS}

pinned-dispatcher.type="PinnedDispatcher"

pinned-dispatcher.executor="thread-pool-executor"

application.features=["KMClusterManagerFeature","KMTopicManagerFeature","KMPreferredReplicaElectionFeature","KMReassignPartitionsFeature", "KMScheduleLeaderElectionFeature"]

akka {

loggers = ["akka.event.slf4j.Slf4jLogger"]

loglevel = "INFO"

}

akka.logger-startup-timeout = 60s

basicAuthentication.enabled=false

#basicAuthentication.enabled=${?KAFKA_MANAGER_AUTH_ENABLED}

basicAuthentication.ldap.enabled=false

#basicAuthentication.ldap.enabled=${?KAFKA_MANAGER_LDAP_ENABLED}

basicAuthentication.ldap.server=""

#basicAuthentication.ldap.server=${?KAFKA_MANAGER_LDAP_SERVER}

basicAuthentication.ldap.port=389

#basicAuthentication.ldap.port=${?KAFKA_MANAGER_LDAP_PORT}

basicAuthentication.ldap.username=""

#basicAuthentication.ldap.username=${?KAFKA_MANAGER_LDAP_USERNAME}

basicAuthentication.ldap.password=""

#basicAuthentication.ldap.password=${?KAFKA_MANAGER_LDAP_PASSWORD}

basicAuthentication.ldap.search-base-dn=""

#basicAuthentication.ldap.search-base-dn=${?KAFKA_MANAGER_LDAP_SEARCH_BASE_DN}

basicAuthentication.ldap.search-filter="(uid=$capturedLogin$)"

#basicAuthentication.ldap.search-filter=${?KAFKA_MANAGER_LDAP_SEARCH_FILTER}

basicAuthentication.ldap.group-filter=""

#basicAuthentication.ldap.group-filter=${?KAFKA_MANAGER_LDAP_GROUP_FILTER}

basicAuthentication.ldap.connection-pool-size=10

#basicAuthentication.ldap.connection-pool-size=${?KAFKA_MANAGER_LDAP_CONNECTION_POOL_SIZE}

basicAuthentication.ldap.ssl=false

#basicAuthentication.ldap.ssl=${?KAFKA_MANAGER_LDAP_SSL}

basicAuthentication.ldap.ssl-trust-all=false

#basicAuthentication.ldap.ssl-trust-all=${?KAFKA_MANAGER_LDAP_SSL_TRUST_ALL}

basicAuthentication.username="admin"

#basicAuthentication.username=${?KAFKA_MANAGER_USERNAME}

basicAuthentication.password="xxxxx"

#basicAuthentication.password=${?KAFKA_MANAGER_PASSWORD}

basicAuthentication.realm="Kafka-Manager"

basicAuthentication.excluded=["/api/health"] # ping the health of your instance without authentification

#kafka-manager.consumer.properties.file=${?CONSUMER_PROPERTIES_FILE}

kafka-manager.consumer.properties.file=/app/cmak/conf/consumer.properties
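The play.crypto.secret above is a fixed sample value, visible to anyone who reads this file. A sketch that swaps in a random value before building the image, assuming GNU sed; `rotate_play_secret` is a hypothetical name. Hex output keeps the secret free of characters that sed or the config parser would treat specially.

```shell
# Hypothetical helper: replace the active play.crypto.secret line in application.conf
# with 32 random bytes written as 64 hex characters.
rotate_play_secret() {
  local conf="$1"
  local secret
  secret="$(head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n')"
  # The commented "#play.crypto.secret=..." line is not matched and stays as-is
  sed -i "s|^play\.crypto\.secret=.*|play.crypto.secret=\"${secret}\"|" "$conf"
}

# Example, from the extracted CMAK directory:
#   rotate_play_secret conf/application.conf
```

As the comment in application.conf says, several CMAK instances must share the same key, so run this once and reuse the file.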

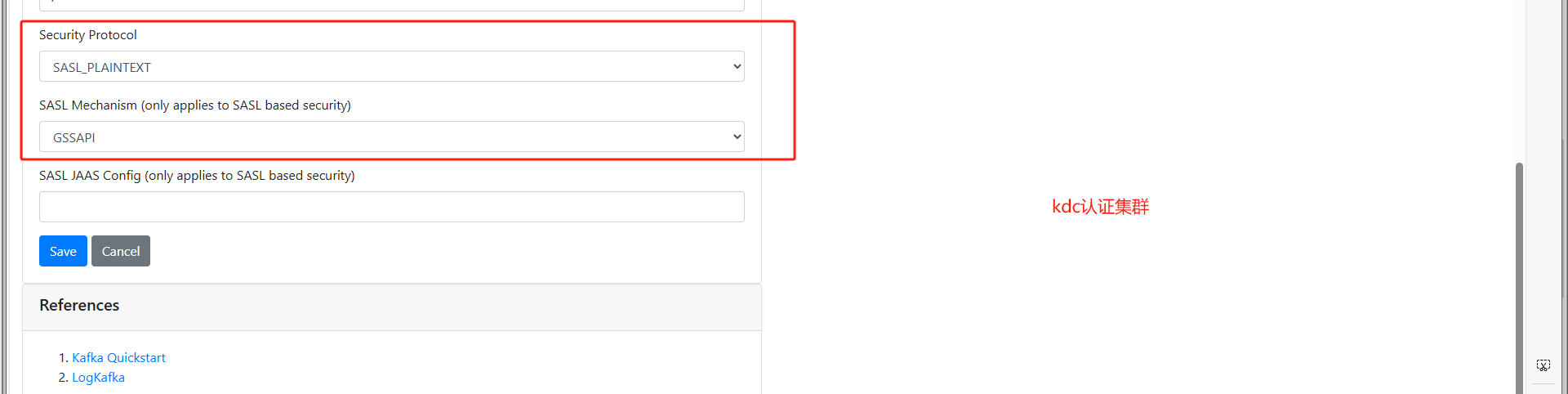

# Edit conf/consumer.properties (the file referenced by kafka-manager.consumer.properties.file)

security.protocol=SASL_PLAINTEXT

key.deserializer=org.apache.kafka.common.serialization.ByteArrayDeserializer

value.deserializer=org.apache.kafka.common.serialization.ByteArrayDeserializer

sasl.mechanism=GSSAPI

sasl.kerberos.service.name=kafka

# Add a JAAS configuration file, jaas.config

vi jaas.config

KafkaClient{

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="/app/cmak/conf/kafka.keytab"

principal="kafka/zyp.com@KAFKA.COM"

serviceName="kafka"

doNotPrompt=true;

};

Client {

// No authentication required

};

# ZooKeeper has no authentication enabled, so an empty Client section is enough

# Copy kafka.keytab and krb5.conf into this conf directory
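The copy step can be scripted so the file names line up with `keyTab="/app/cmak/conf/kafka.keytab"` in jaas.config. A sketch; `stage_cmak_kerberos_files` is a hypothetical name and its first argument is assumed to be the extracted CMAK directory that holds the Dockerfile. Keep in mind that a keytab baked into an image can be extracted by anyone who can pull that image.

```shell
# Hypothetical helper: copy the Kafka keytab and krb5.conf into CMAK's conf directory.
stage_cmak_kerberos_files() {
  local cmak_dir="$1"
  local keytab="${2:-/etc/security/keytabs/kafka.keytab}"
  local krb5="${3:-/etc/krb5.conf}"
  # File names must match the paths used in jaas.config and the JVM options
  cp "$keytab" "$cmak_dir/conf/kafka.keytab"
  cp "$krb5" "$cmak_dir/conf/krb5.conf"
  chmod 400 "$cmak_dir/conf/kafka.keytab"
}
```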

8. Build the CMAK Docker image

docker build -t cmak_kdc:v1 . && docker run -itd --name cmak_kdc -p 9000:9000 cmak_kdc:v1 && docker exec -it cmak_kdc /bin/bash

# Because I use a hostname (zyp.com), I also have to enter the container and add a hosts entry

echo "192.168.0.2 zyp.com" >> /etc/hosts
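Two things could also be supplied at `docker run` time: the hosts entry (`--add-host` writes it when the container is created, whereas an edit made through `docker exec` is lost when the container is re-created), and the JVM properties that point CMAK at jaas.config and krb5.conf, which the CMD shown above does not pass. A sketch; passing them through `JAVA_OPTS` assumes CMAK's bin/cmak launcher (generated by sbt-native-packager) reads that variable, and `run_cmak_container` is a hypothetical name.

```shell
# Hypothetical helper: start the CMAK container with the hosts entry and the
# Kerberos-related JVM properties. DOCKER can point at a stub for a dry run.
run_cmak_container() {
  local docker="${DOCKER:-docker}"
  # --add-host puts "192.168.0.2 zyp.com" into the container's /etc/hosts at creation time
  "$docker" run -itd --name cmak_kdc -p 9000:9000 \
    --add-host zyp.com:192.168.0.2 \
    -e JAVA_OPTS="-Djava.security.krb5.conf=/app/cmak/conf/krb5.conf -Djava.security.auth.login.config=/app/cmak/conf/jaas.config" \
    cmak_kdc:v1
}
```

Run it after `docker build`, removing any earlier container first with `docker rm -f cmak_kdc`.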

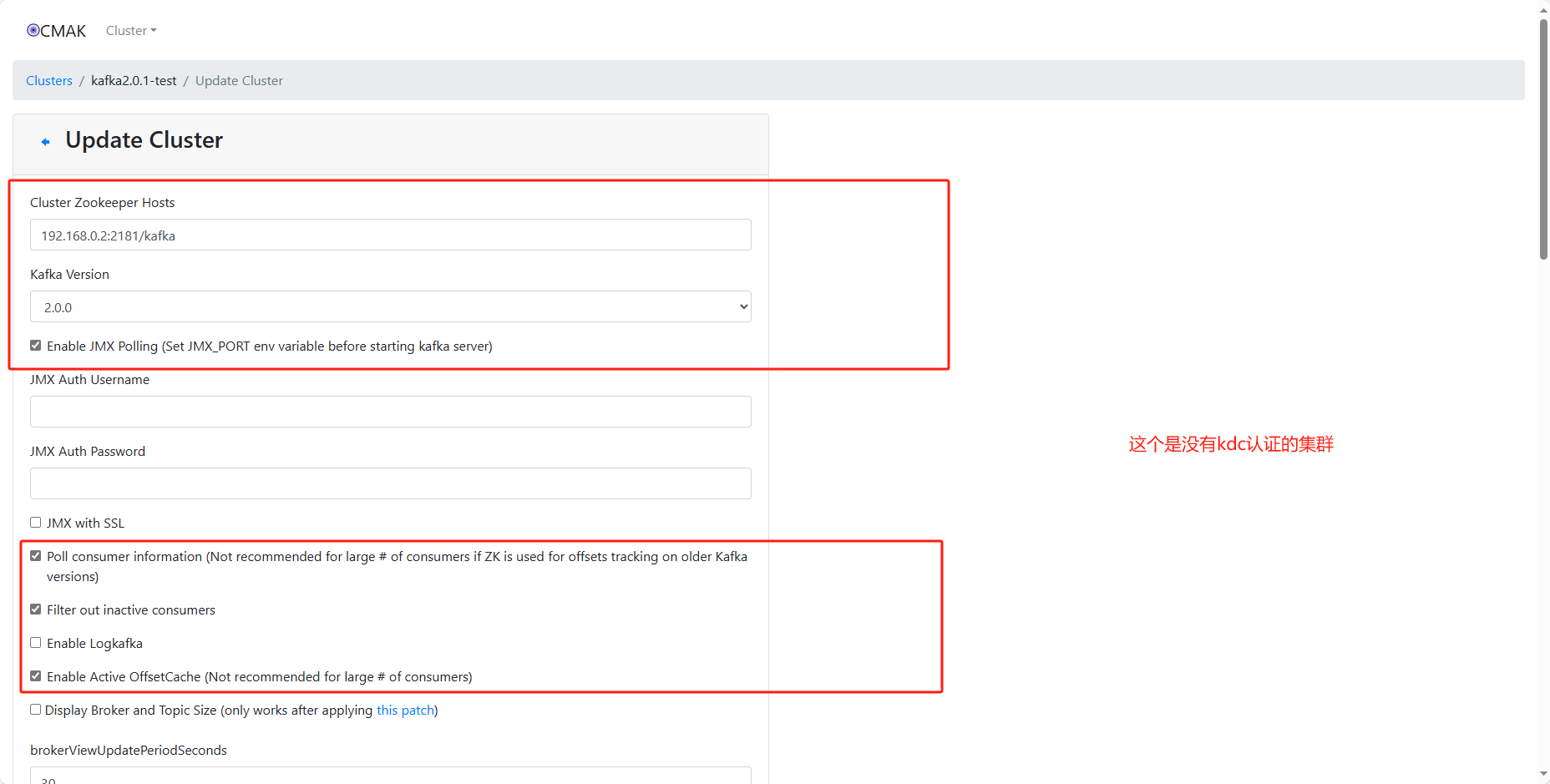

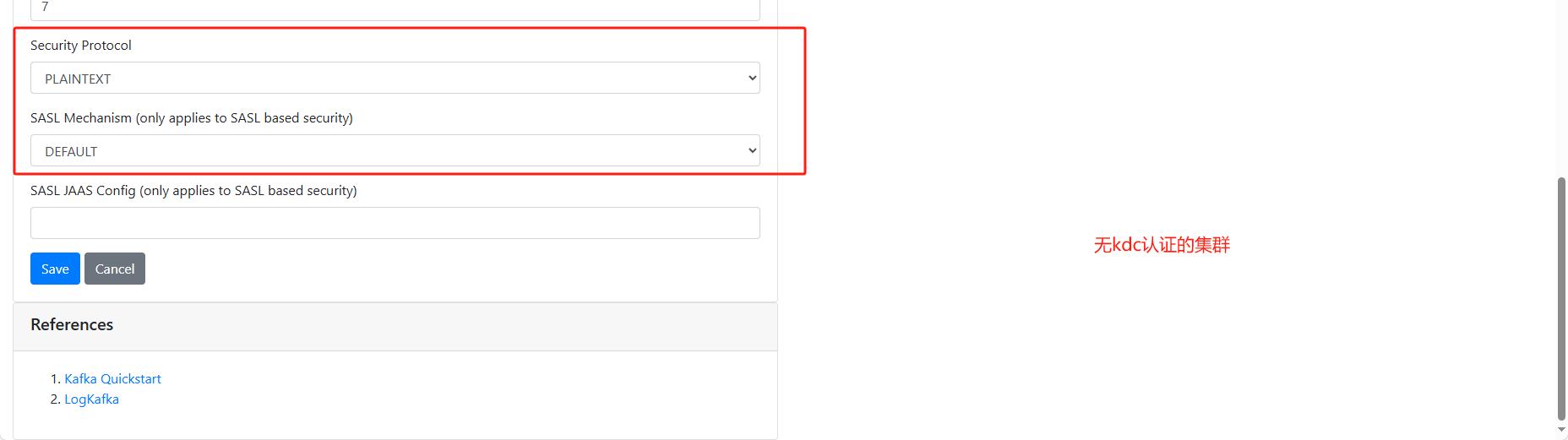

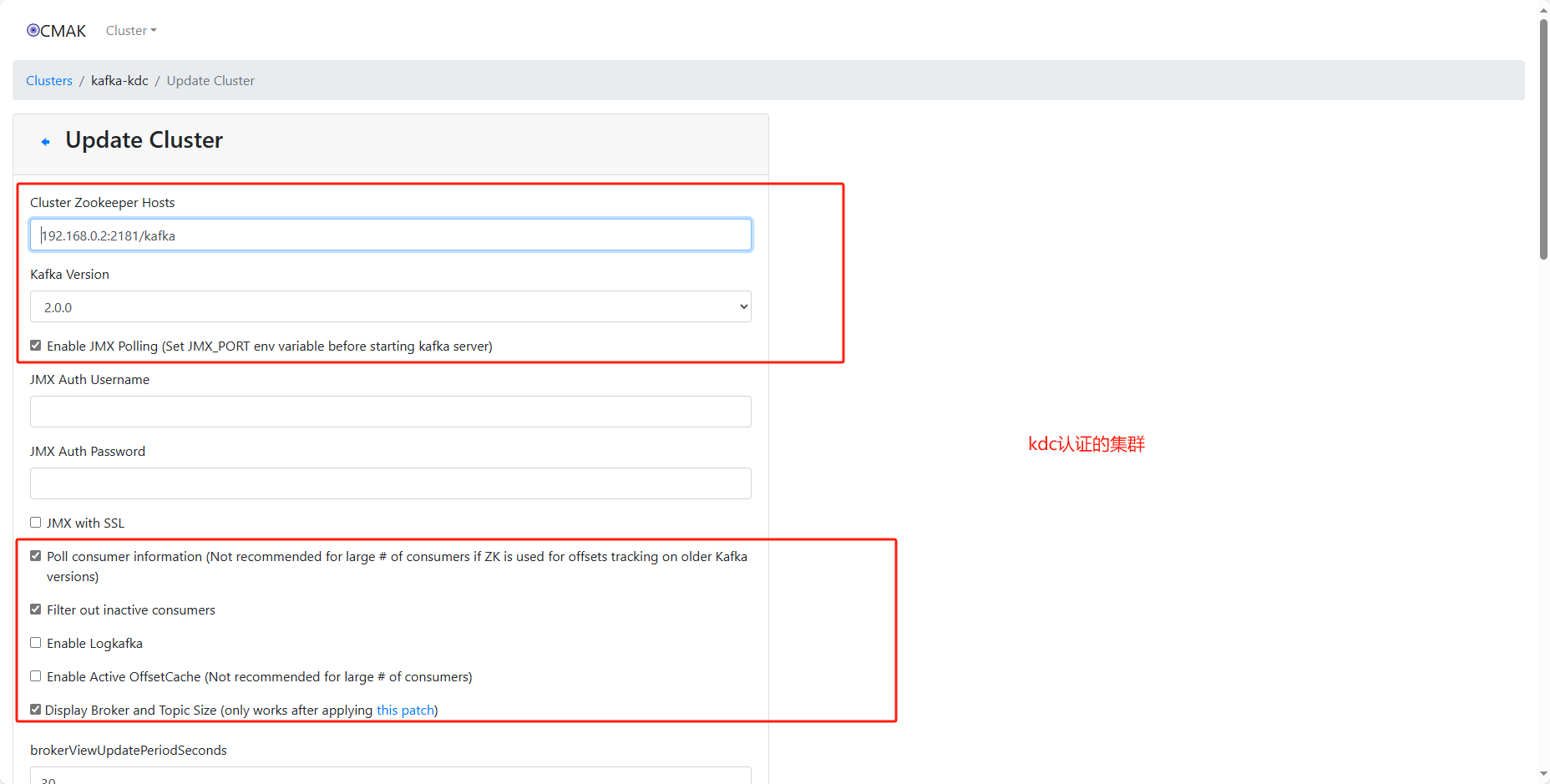

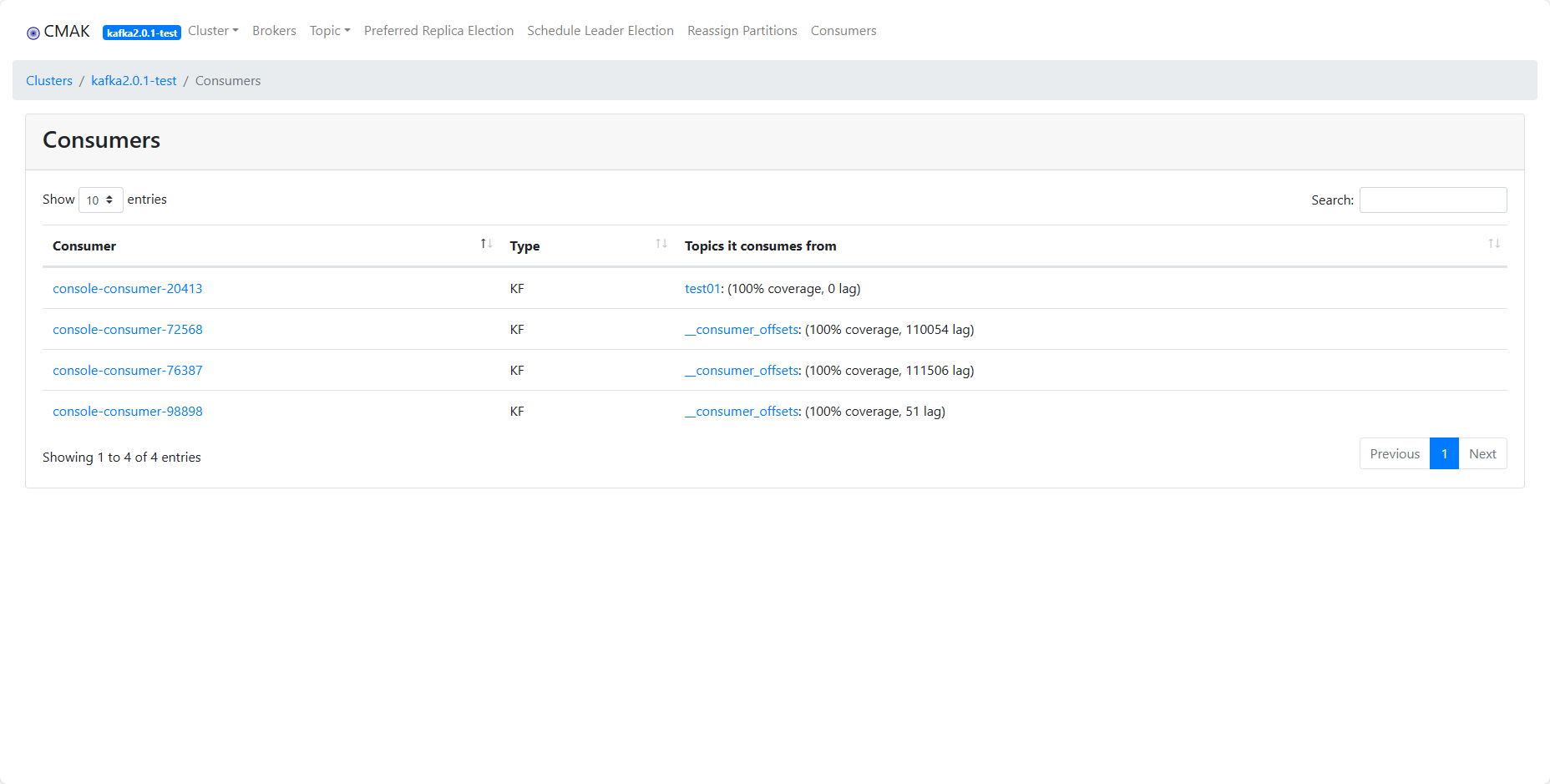

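9. Add the Kafka cluster in CMAK

http://192.168.0.2:9000/

# Log in with the username and password (you are only prompted if basicAuthentication.enabled=true in application.conf)

# Add the cluster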
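When filling in the cluster's ZooKeeper hosts, note that the broker registers under the /kafka chroot (`zookeeper.connect=localhost:2181/kafka`), so CMAK needs that chroot too, with an address the container can reach instead of localhost. A sketch that derives the value from server.properties; `cmak_zk_hosts` is a hypothetical name.

```shell
# Hypothetical helper: print zookeeper.connect from server.properties with
# localhost/127.0.0.1 replaced by a reachable address; the chroot (/kafka) is kept.
cmak_zk_hosts() {
  local props="$1" host="$2"
  local connect
  # The last active zookeeper.connect line wins, as when Java loads a properties file
  connect="$(grep -E '^zookeeper\.connect=' "$props" | tail -n 1 | cut -d= -f2-)"
  printf '%s\n' "$connect" | sed -E "s/(^|,)(localhost|127\.0\.0\.1):/\1${host}:/g"
}
```

With this post's settings, `cmak_zk_hosts /usr/local/soft/kafka_2.11-2.0.1/config/server.properties 192.168.0.2` prints `192.168.0.2:2181/kafka`.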
