电信旅游集市

- 1、开启hadoop的权限验证

- 2、在hive中创建数据库

- 3、在hdfs中创建5个目录

- 4、在linux中创建5个用户

- 5、将目录权限赋值给不同用户

- 6、修改hive权限

- 7、在ods中创建表

- 8、将四个表的数据上传到hdfs

- 9、增加分区

- 10、为每一个用户在hdfs中创建一个目录

- 11、新建maven项目,建立moudle

- 12、DWD层-位置融合表

- 13、DWS层-停留表

- 14、SparkTools工具类

- 15、使用gird工具类对DwsStaypointMskD类的网格点设置经纬度(使用udf函数),将grid工具包也放入comm1模块中

- 16、配置文件优化

- 17、DIM维度层处理

- 17、ADS层 根据DWS层处理业务省游客表

- 18、AZKABAN

- 错误总结

详:https://gitee.com/zhang-yilei-bigdata/telecom-tourism-fair

1、开启hadoop的权限验证

关闭hadoop

stop-all.sh

修改hdfs-site.xml文件

<property>

<name>dfs.permissions</name>

<value>true</value>

</property>

启动hadoop

start-all.sh

2、在hive中创建数据库

数据仓库分层中,每一个层对应hive中一个数据库,对应hdfs中一个目录

通过root用户先创建

create database ods;

create database dwd;

create database dws;

create database dim;

create database ads;

3、在hdfs中创建5个目录

一个层对应一个目录

先用root用户创建

hadoop dfs -mkdir -p /daas/motl/ods/

hadoop dfs -mkdir -p /daas/motl/dim/

hadoop dfs -mkdir -p /daas/motl/dwd/

hadoop dfs -mkdir -p /daas/motl/dws/

hadoop dfs -mkdir -p /daas/motl/ads/

4、在linux中创建5个用户

每一个用户对应一个层

useradd ods

passwd ods

useradd dwd

passwd dwd

useradd dws

passwd dws

useradd dim

passwd dim

useradd ads

passwd ads

5、将目录权限赋值给不同用户

用root用户修改权限

hadoop dfs -chown ods:ods /daas/motl/ods/

hadoop dfs -chown dwd:dwd /daas/motl/dwd/

hadoop dfs -chown dws:dws /daas/motl/dws/

hadoop dfs -chown dim:dim /daas/motl/dim/

hadoop dfs -chown ads:ads /daas/motl/ads/

6、修改hive权限

如果不该启动用户进不了hive

删除hive的tmp目录

rm -rf /usr/local/soft/hive-1.2.1/tmp

修改权限

chmod 777 /usr/local/soft/hive-1.2.1

7、在ods中创建表

使用ods用户进入hive

ods_ddr

ods_oidd

ods_dpi

ods_wcdr

8、将四个表的数据上传到hdfs

9、增加分区

alter table ods.ods_ddr add if not exists partition(day_id='20180503') ;

alter table ods.ods_dpi add if not exists partition(day_id='20180503') ;

alter table ods.ods_oidd add if not exists partition(day_id='20180503') ;

alter table ods.ods_wcdr add if not exists partition(day_id='20180503') ;

10、为每一个用户在hdfs中创建一个目录

通过root用户

hadoop dfs -mkdir /user/dwd/

hadoop dfs -chown dwd:dwd /user/dwd

hadoop dfs -mkdir /user/dws/

hadoop dfs -chown dws:dws /user/dws

hadoop dfs -mkdir /user/dim/

hadoop dfs -chown dim:dim /user/dim

hadoop dfs -mkdir /user/ads/

hadoop dfs -chown ads:ads /user/ads

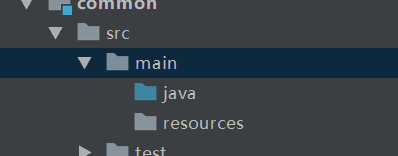

11、新建maven项目,建立moudle

12、DWD层-位置融合表

1.使用spark来实现,编写spark代码,union all 来聚合四张表,注意列的数量

import org.apache.spark.sql.SparkSession

object DwdResRegnMergelocationMskD {

def main(args: Array[String]): Unit = {

val spark: SparkSession = SparkSession.builder()

.appName("DwdResRegnMergelocationMskD")

.enableHiveSupport() //获取hive元数据

.getOrCreate()

// 时间参数

val day_id:String = args(0)

spark.sql(

s"""

|insert overwrite table dwd.dwd_res_regn_mergelocation_msk_d partition(day_id=$day_id)

|select

|md5(mdn) as mdn,

|start_time,

|county_id,

|longi,

|lati,

|bsid,

|grid_id,

|biz_type,

|event_type,

|data_source

|from

|(

|(select * from ods.ods_ddr where day_id=$day_id)

|union all

|(select * from ods.ods_dpi where day_id=$day_id)

|union all

|(select * from ods.ods_oidd where day_id=$day_id)

|union all

|(select * from ods.ods_wcdr where day_id=$day_id)

|)

""".stripMargin)

}

}

2.提交jar包

spark-submit --master yarn-client --class DwdResRegnMergelocationMskD --num-executors 1 --executor-memory 6G --executor-cores 3 dwd-1.0.jar 20180503

13、DWS层-停留表

1.DSL语句编写spark代码(这里并没有写经纬度)

import org.apache.spark.sql.{SaveMode, SparkSession}

import org.apache.spark.sql.expressions.Window

object DwsStaypointMskD {

def main(args: Array[String]): Unit = {

val spark: SparkSession = SparkSession.builder()

.appName("DwsStaypointMskD")

.enableHiveSupport()

.getOrCreate()

val day_id: String = args(0)

import spark.implicits._

import org.apache.spark.sql.functions._

spark

//读取融合表

.table("dwd.dwd_res_regn_mergelocation_msk_d")

//取出指定分区

.where($"day_id" === day_id)

//获取开始时间和结束时间

.withColumn("start_date", split($"start_time", ",")(1))

.withColumn("end_date", split($"start_time", ",")(0))

//按照手机号分组,按照时间排序,取上一条数据的网格编号

.withColumn("s_grid", lag($"grid_id", 1, "") over Window.partitionBy($"mdn").orderBy($"start_date"))

//判断当前网格编号和上一条数据的网格编号是否一致,划分边界

.withColumn("flag", when($"s_grid" === $"grid_id", 0).otherwise(1))

//在同一个组的数据打上同样的标记

.withColumn("class", sum($"flag") over Window.partitionBy($"mdn").orderBy($"start_date"))

//将同一个人在同一个网格中的数据分到同一个组

.groupBy($"class", $"mdn", $"county_id", $"grid_id")

//获取用户在网格中第一个点的时间和最后一个点的时间

.agg(min($"start_date") as "grid_first_time", max($"end_date") as "grid_last_time")

//获取停留时间

.withColumn("stay_time", unix_timestamp($"grid_last_time", "yyyyMMddHHmmss") - unix_timestamp($"grid_first_time", "yyyyMMddHHmmss"))

//整理数据

.select($"mdn",$"grid_id",$"county_id",round($"stay_time"/60,4) as "stay_time",$"grid_first_time", $"grid_last_time" )

.write

.mode(SaveMode.Overwrite)

.format("csv")

.option("sep","\t")

.save("/daas/motl/dws/dws_staypoint_msk_d/day_id="+day_id)

}

}

2.提交jar包

spark-submit --master yarn-client --class DwsStaypointMskD --num-executors 1 --executor-memory 6G --executor-cores 3 dws-1.0.jar 20180503

14、SparkTools工具类

可以将sparkSession封装起来,并设置传入时间参数,这样以后可以直接写sparksql或者DSL,只需要继承sparkSTools类

package com.zyl.common

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

abstract class SparkTools extends Logging{

var day_id:String = _

def main(args: Array[String]): Unit = {

/**

* 获取时间参数

*

*/

if(args.length==0){

logError("请传入时间参数")

return

}

day_id = args(0)

logInfo(s"时间参数$day_id")

logInfo("创建Spark环境")

val spark: SparkSession = SparkSession

.builder()

.appName(this.getClass.getSimpleName.replace("$", ""))

.enableHiveSupport() //获取hive元数据

.getOrCreate()

run(spark)

}

def run(spark:SparkSession)

}

需要注意的是:我新建的一个Common1模块存在公共类,所以项目最外层pom增加common1,而且需要放在最上面,因为先打包common1.common1打包后才能出现一个jar包 common1/target/common1-1.0.jar 供后面的模块使用

<modules>

<module>common1</module>

<module>dwd</module>

<module>dws</module>

<module>dim</module>

</modules>

使用common1的模块pom中也需要增加:

<dependency>

<groupId>com.zyl</groupId>

<artifactId>common1</artifactId>

<version>1.0</version>

</dependency>

因为每个模块的类我都是写入shell脚本运行的,脚本中需要增加common1的 common1-1.0.jar 包才能运行,所以修改:

day_id=$1

spark-submit \

--master yarn-client \

--class DwdResRegnMergelocationMskD \

--num-executors 1 \

--executor-memory 6G \

--executor-cores 4 \

--jars common1-1.0.jar \

dwd-1.0.jar $day_id

15、使用gird工具类对DwsStaypointMskD类的网格点设置经纬度(使用udf函数),将grid工具包也放入comm1模块中

// 经度

val getLongi: UserDefinedFunction = udf((grid: String) => {

val point: Point2D.Double = Grid.getCenter(grid.toLong)

point.getX

})

// 纬度

val getLati: UserDefinedFunction = udf((grid: String) => {

val point: Point2D.Double = Grid.getCenter(grid.toLong)

point.getY

})

16、配置文件优化

把 一些可能会变化的表名,表的位置增加配置,在commo1的resources下创建config.properties,然后编写Config 工具类

package com.zyl.common

import java.io.InputStream

import java.util.Properties

object Config {

//加载config.properties配置文件

//使用类加载器从resources获取一个文件输入流

val inputStream: InputStream = this.getClass

.getClassLoader

.getResourceAsStream("config.properties")

//使用Properties读取配置文件

private val properties = new Properties()

properties.load(inputStream)

def get(key:String): String ={

properties.getProperty(key)

}

使用命令:

val DWD1: String = Config.get("mergelocation.table.name")

insert overwrite table $DWD1 partition(day_id=$day_id)

每次都需要写Config.get,所以可以在common1增加接口Constant,Sparktools来实现

trait Constant {

val DDR_TABLE_NAME: String = Config.get("ddr.table.name")

}

abstract class SparkTools extends Logging with Constant {

}

17、DIM维度层处理

1、ods层hive创建三张表

2、通过datax工具将三个表从mysql导入到hdfs,实现ods层

datax.py scenic_boundary_mysql_to_scenic_boundary_hive_ods.json

datax.py admin_code_mysql_to_admin_code_hive_ods.json

datax.py -p "-Dmonth_id=201805" usertag_mysql_to_usertag_hive_ods.json

3、dim层对ods层三表进行处理

3.1 ods库中创建三张表

3.2 因为其中用户画像表分区是月份,所以需要需要对传入的day_id进行分隔成month_id,因为这是编写spark代码类DimUsertagMskM需要传入month_id,所以同时sparktools也要增加month_id参数

abstract class SparkTools extends Logging with Constant {

var day_id: String = _

var month_id: String = _

def main(args: Array[String]): Unit = {

/**

* 获取时间参数

*

*/

if (args.length == 0) {

logWarning("请传入时间参数")

} else {

day_id = args(0)

logInfo(s"时间参数$day_id")

month_id = day_id.substring(0, 6)

}

3.3 从ods脱敏操作将三张表导入到dim(spark来写)

用户画像表

import com.zyl.common.SparkTools

import org.apache.spark.sql.{SaveMode, SparkSession}

object DimUsertagMskM extends SparkTools{

override def run(spark: SparkSession): Unit = {

import spark.implicits._

import org.apache.spark.sql.functions._

spark.table(ODS_USERTAG_TABLE_NAME)

.where($"month_id"===month_id)

.select(

md5($"mdn") as "mdn",

md5($"name") as "name",

$"gender",

$"age",

md5($"id_number") as "id_number",

$"number_attr",

$"trmnl_brand",

$"trmnl_price",

$"packg",

$"conpot",

$"resi_grid_id",

$"resi_county_id"

).write

.format("csv")

.option("sep","\t")

.mode(SaveMode.Overwrite)

.save(s"${DIM_USERTAG_PATH}month_id="+month_id)

spark.sql(

s"""

|alter table $DIM_USERTAG_TABLE_NAME add if not exists partition(month_id=$month_id)

""".stripMargin)

}

}

用户画像表shell脚本(后面类似)

day_id=$1

spark-submit \

--master yarn-client \

--class DimUsertagMskM \

--num-executors 1 \

--executor-memory 6G \

--executor-cores 4 \

--jars common1-1.0.jar \

dim-1.0.jar $day_id

行政区配置表

import com.zyl.common.SparkTools

import org.apache.spark.sql.SparkSession

object DimAdmincode extends SparkTools{

override def run(spark: SparkSession): Unit = {

spark.sql(

s"""

|insert overwrite table $DIM_ADMINCODE_TABLE_NAME

|select * from $ODS_ADMINCODE_TABLE_NAME

""".stripMargin)

}

}

景区配置表

import com.zyl.common.SparkTools

import org.apache.spark.sql.SparkSession

object DimScenicBoundary extends SparkTools{

override def run(spark: SparkSession): Unit = {

spark.sql(

s"""

|insert overwrite table $DIM_SCENIC_BOUNDARY_TABLE_NAME

|select * from $ODS_SCENIC_BOUNDARY_TABLE_NAME

|

""".stripMargin)

}

}

17、ADS层 根据DWS层处理业务省游客表

1、创建省游客表

2、编写spark代码

import com.zyl.common.SparkTools

import com.zyl.common.grid.Geography

import org.apache.spark.sql.expressions.{UserDefinedFunction, Window}

import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

/**

* 计算省游客

* 1、停留时间大于3小时

* 2、出游距离大于10KM

*

*/

object AdsProvinceTouristMskD extends SparkTools{

override def run(spark: SparkSession): Unit = {

import spark.implicits._

import org.apache.spark.sql.functions._

val staypoint: DataFrame = spark.table(s"$STAYPOINT_TABLE_NAME")

.where($"day_id"===day_id)

val usertag: DataFrame = spark.table(s"$DIM_USERTAG_TABLE_NAME")

.where($"month_id"===month_id)

val admincode: DataFrame = spark.table(s"$DIM_ADMINCODE_TABLE_NAME")

val calculateLength: UserDefinedFunction = udf((p1: String, p2: String) => {

Geography.calculateLength(p1.toLong, p2.toLong)

})

staypoint.join(usertag,"mdn")

.join(admincode,"county_id")

.withColumn("d_stay_time",sum($"duration") over Window.partitionBy($"mdn",$"prov_id"))

.where($"d_stay_time">180)

.withColumn("distance",calculateLength($"grid_id",$"resi_grid_id"))

.withColumn("d_max_distance",max($"distance") over Window.partitionBy($"mdn",$"prov_id"))

.where($"d_max_distance">10000)

.select(

$"mdn",

$"resi_county_id" as "source_county_id",

$"prov_id" as "d_province_id",

round($"d_stay_time"/60,4),

round($"d_max_distance"/1000,4)

)

.distinct()

.write

.format("csv")

.mode(SaveMode.Overwrite)

.option("sep","\t")

.save(s"${ADS_PROVINCE_TOURIST_PATH}day_id="+day_id)

spark.sql(

s"""

|

|alter table $ADS_PROVINCE_TOURIST_TABLE_NAME add if not exists partition(day_id=$day_id)

|

""".stripMargin)

}

}

18、AZKABAN

1、启动azkaban命令:./bin/start-solo.sh ,不要在bin目录下启动

2、修改脚本指定各个jar包和shell脚本的位置,然后再/root/tour下增加这些jar包和脚本

每个shell脚本中增加:

#获取脚本所在目录

shell_home="$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )"

#进入脚本目录

cd $shell_home

3、修改azkaban下的basic.flow文件

---

config:

nodes:

- name: DwdResRegnMergelocationMskD

type: command

config:

command: sh /root/tour/DwdResRegnMergelocationMskD.sh 20180503

- name: DwsStaypointMskD

type: command

config:

command: sh /root/tour/DwsStaypointMskD.sh 20180503

dependsOn:

- DwdResRegnMergelocationMskD

- name: AdsProvinceTouristMskD

type: command

config:

command: sh /root/tour/AdsProvinceTouristMskD.sh 20180503

dependsOn:

- DwsStaypointMskD

如果要实现当日t+1模式的调度,改为

---

config:

day_id: $(new("org.joda.time.DateTime").minusDays(1).toString("yyyyMMdd"))

nodes:

- name: DwdResRegnMergelocationMskD

type: command

config:

command: sh /root/tour/DwdResRegnMergelocationMskD.sh $day_id

- name: DwsStaypointMskD

type: command

config:

command: sh /root/tour/DwsStaypointMskD.sh $day_id

dependsOn:

- DwdResRegnMergelocationMskD

- name: AdsProvinceTouristMskD

type: command

config:

command: sh /root/tour/AdsProvinceTouristMskD.sh $day_id

dependsOn:

- DwsStaypointMskD

4、访问azkaban

http://master:8081

用户名密码 azkaban/azkaban

配置邮箱服务

vim conf/azkaban.properties

mail.sender 发送方

mail.host 邮箱服务器的地址

mail.user 用户名

mail.password 授权码

增加以下配置

mail.sender=987262086@qq.com

mail.host=smtp.qq.com

mail.user=987262086@qq.com

mail.password=aaaaa

重启azkaban

cd /usr/local/soft/azkaban-solo-server

关闭

./bin/shutdown-solo.sh

启动

./bin/start-solo.sh

错误总结

1。把snapshot去掉

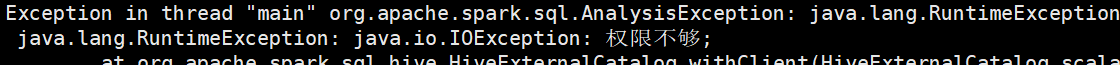

2.因为此时hive中有一个tem的目录,没有权限访问,可以用root用户删掉

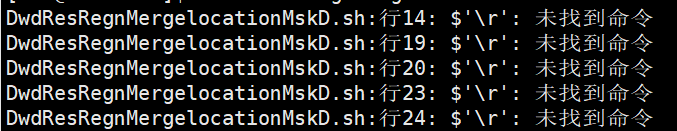

3.这是因为idea中的符号""与lunix中的不一样

改成CF格式

4.如果出现这种没有颜色,尝试重新导一下最外层的pom依赖

5.最外层的pom不能导入内部的依赖,这里值最外层pom不能导common1的依赖,只能在每个需要的moudle一个个去加

6.这是因为传入dws目录下的文件只传了dws的jar包和shell脚本,没有传入common1的jar包

7.$符号是指在 " "中引用特殊量,并且当在" "中出现字符串拼接的时候,需要加{},例如特殊量 ABC,格式1:"$ABC" 格式2:"${ABC}abc",且在使用时" "前有自动加上一个s,如果没有可以手动添加便可以显示,在sparksql中如果引用字段,也是这样的格式,但$符在" "号的外面

浙公网安备 33010602011771号

浙公网安备 33010602011771号