推荐系统实践—ItemCF实现

参考:https://github.com/Lockvictor/MovieLens-RecSys/blob/master/usercf.py#L169

数据集

本文使用了MovieLens中的ml-100k小数据集,数据集的地址为:传送门

该数据集中包含了943个独立用户对1682部电影做的10000次评分。

完整代码

总体和UserCF差不多,将用户相似度的计算改为物品相似度的计算即可。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 | import numpy as npimport pandas as pdimport mathfrom collections import defaultdictfrom operator import itemgetternp.random.seed(1)class ItemCF(object): def __init__(self): self.train_set = {} self.test_set = {} self.movie_popularity = {} self.tot_movie = 0 self.W = {} # 相似度矩阵 self.K = 160 # 最接近的K部电影 self.M = 10 # 推荐电影数 def split_data(self, data, ratio): ''' 按ratio的比例分成训练集和测试集 ''' for line in data.itertuples(): user, movie, rating = line[1], line[2], line[3] if np.random.random() < ratio: self.train_set.setdefault(user, {}) self.train_set[user][movie] = int(rating) else: self.test_set.setdefault(user, {}) self.test_set[user][movie] = int(rating) print('数据预处理完成') def item_similarity(self): ''' 计算物品相似度 ''' for user, items in self.train_set.items(): for movie in items.keys(): if movie not in self.movie_popularity: # 用于后面计算新颖度 self.movie_popularity[movie] = 0 self.movie_popularity[movie] += 1 self.tot_movie = len(self.movie_popularity) # 用于计算覆盖率 C, N = {}, {} # C记录电影两两之间共同喜欢的人数, N记录电影的打分人数 for user, items in self.train_set.items(): for m1 in items.keys(): N.setdefault(m1, 0) N[m1] += 1 C.setdefault(m1, defaultdict(int)) for m2 in items.keys(): if m1 == m2: continue else: C[m1][m2] += 1 count = 1 for u, related_movies in C.items(): print('\r相似度计算进度:{:.2f}%'.format(count * 100 / self.tot_movie), end='') count += 1 self.W.setdefault(u, {}) for v, cuv in related_movies.items(): self.W[u][v] = float(cuv) / math.sqrt(N[u] * N[v]) print('\n相似度计算完成') def recommend(self, u): ''' 推荐M部电影 ''' rank = {} user_movies = self.train_set[u] for movie, rating in user_movies.items(): for related_movie, similarity in sorted(self.W[movie].items(), key=itemgetter(1), reverse=True)[0:self.K]: if related_movie in user_movies: continue else: rank.setdefault(related_movie, 0) rank[related_movie] += similarity * rating return sorted(rank.items(), key=itemgetter(1), reverse=True)[0:self.M] def evaluate(self): ''' 评测算法 ''' hit = 0 ret = 0 rec_tot = 0 pre_tot = 0 tot_rec_movies = set() # 推荐电影 for user in self.train_set: test_movies = self.test_set.get(user, {}) rec_movies = self.recommend(user) for movie, pui in rec_movies: if movie in test_movies.keys(): hit += 1 tot_rec_movies.add(movie) ret += math.log(1+self.movie_popularity[movie]) pre_tot += self.M rec_tot += len(test_movies) precision = hit / (1.0 * pre_tot) recall = hit / (1.0 * rec_tot) coverage = len(tot_rec_movies) / (1.0 * self.tot_movie) ret /= 1.0 * pre_tot print('precision=%.4f' % precision) print('recall=%.4f' % recall) print('coverage=%.4f' % coverage) print('popularity=%.4f' % ret)if __name__ == '__main__': data = pd.read_csv('u.data', sep='\t', names=['user_id', 'item_id', 'rating', 'timestamp']) itemcf = ItemCF() itemcf.split_data(data, 0.7) itemcf.item_similarity() itemcf.evaluate() |

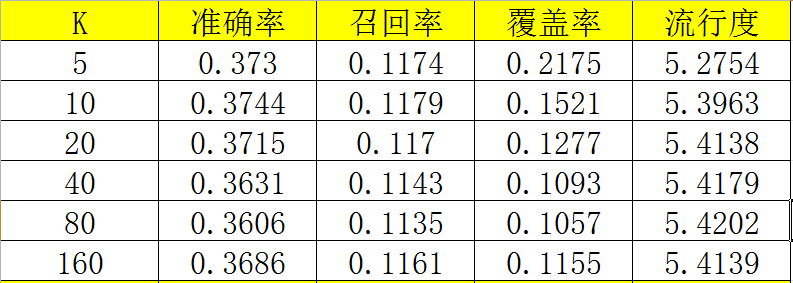

结果

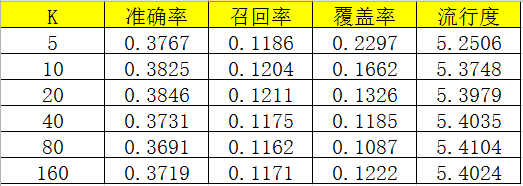

物品相似度的归一化

如果将ItemCF的相似度矩阵按最大值归一化,可以提高性能。

将上述相似度计算的部分代码改为

1 2 3 4 5 6 7 8 9 10 11 12 13 | count = 1 for u, related_movies in C.items(): print('\r相似度计算进度:{:.2f}%'.format(count * 100 / self.tot_movie), end='') count += 1 self.W.setdefault(u, {}) mx = 0.0 for v, cuv in related_movies.items(): self.W[u][v] = float(cuv) / math.sqrt(N[u] * N[v]) if self.W[u][v] > mx: mx = self.W[u][v] for v, cuv in related_movies.items(): self.W[u][v] /= mx print('\n相似度计算完成') |

可以看到性能均有所提升。

如果您觉得阅读本文对您有帮助,请点一下“推荐”按钮,您的“推荐”将是我最大的写作动力!欢迎各位转载,但是未经作者本人同意,转载文章之后必须在文章页面明显位置给出作者和原文连接,否则保留追究法律责任的权利。

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 10年+ .NET Coder 心语,封装的思维:从隐藏、稳定开始理解其本质意义

· .NET Core 中如何实现缓存的预热?

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· 10年+ .NET Coder 心语 ── 封装的思维:从隐藏、稳定开始理解其本质意义

· 地球OL攻略 —— 某应届生求职总结

· 提示词工程——AI应用必不可少的技术

· Open-Sora 2.0 重磅开源!

· 周边上新:园子的第一款马克杯温暖上架

2017-05-02 POJ 1236 Network of School

2017-05-02 UVa 11324 最大团(强连通分量缩点)

2017-05-02 LA 3295 数三角形