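Homework 3

Assignment 1

1) Experiment: Given a website, crawl all the images on it, for example the China Weather site (http://www.weather.com.cn). Do this once with a single thread and once with multiple threads.

This code comes from the textbook; we only reproduced it.

The single-threaded code is as follows: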

    from bs4 import BeautifulSoup
    from bs4 import UnicodeDammit
    import os
    import urllib.request
    from urllib.parse import urljoin


    def imageSpider(start_url):
        try:
            urls = []
            req = urllib.request.Request(start_url, headers=headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            # Detect the page encoding (UTF-8 or GBK) and decode it to text
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            images = soup.select("img")
            for image in images:
                try:
                    src = image["src"]
                    # Resolve relative image paths against the page URL
                    url = urljoin(start_url, src)
                    if url not in urls:  # skip images that were already seen
                        urls.append(url)
                        print(url)
                        download(url)
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)


    def download(url):
        global count
        try:
            count = count + 1
            # Keep a three-letter extension such as ".png" if the URL ends with one
            if url[len(url) - 4] == ".":
                ext = url[len(url) - 4:]
            else:
                ext = ""
            req = urllib.request.Request(url, headers=headers)
            data = urllib.request.urlopen(req, timeout=100)
            data = data.read()
            with open("images/" + str(count) + ext, "wb") as fobj:
                fobj.write(data)
            print("downloaded" + str(count) + ext)
        except Exception as err:
            print(err)


    start_url = "http://www.weather.com.cn/weather/101280601.shtml"
    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
    count = 0
    os.makedirs("images", exist_ok=True)  # make sure the output folder exists
    imageSpider(start_url)

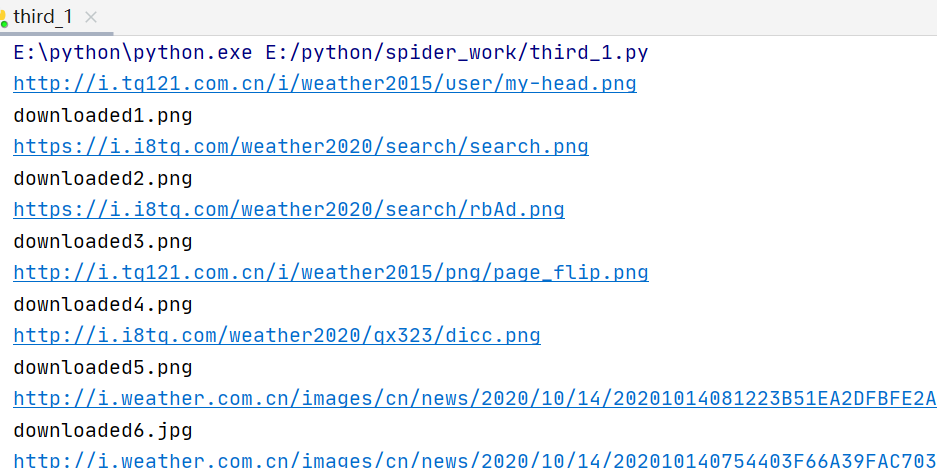

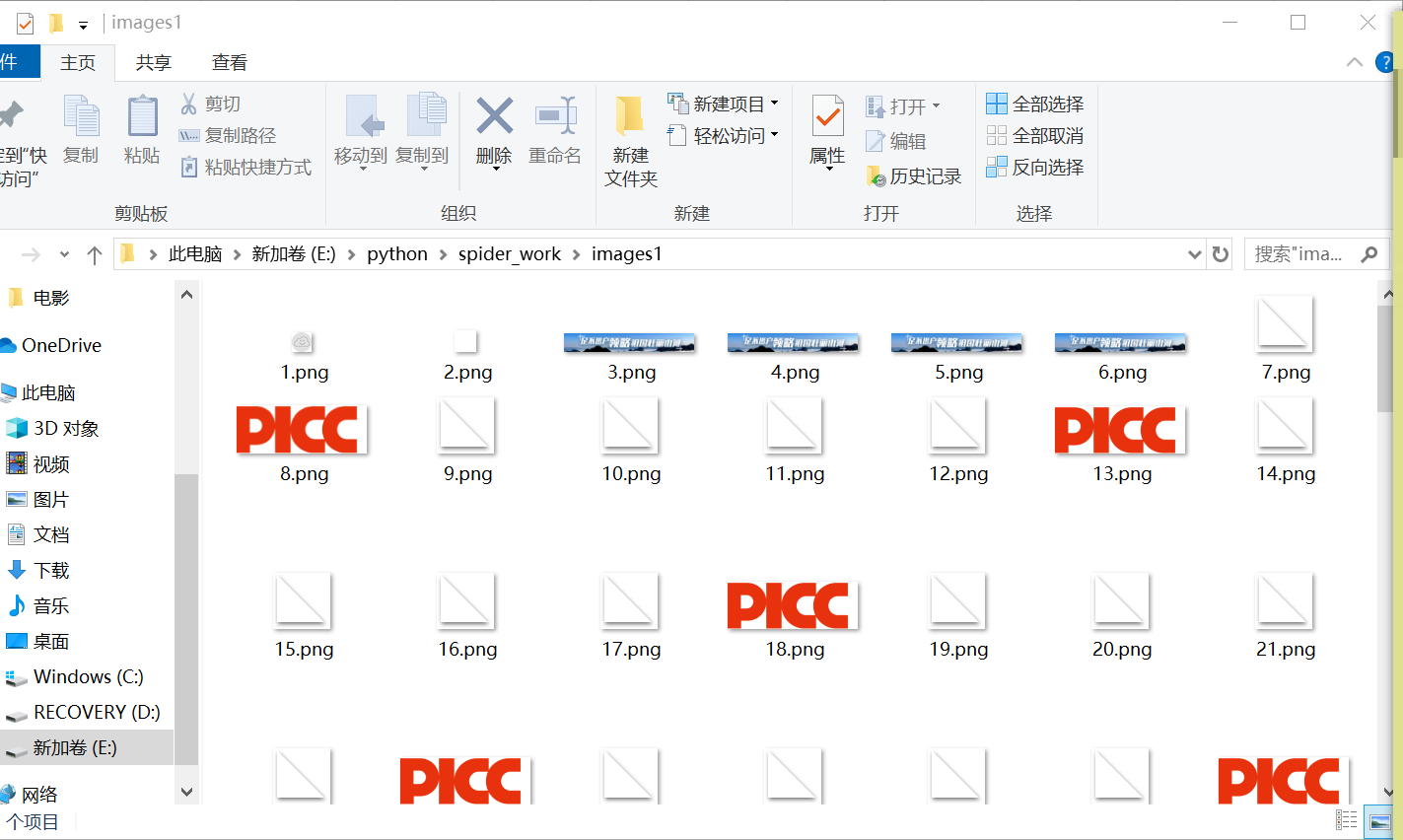

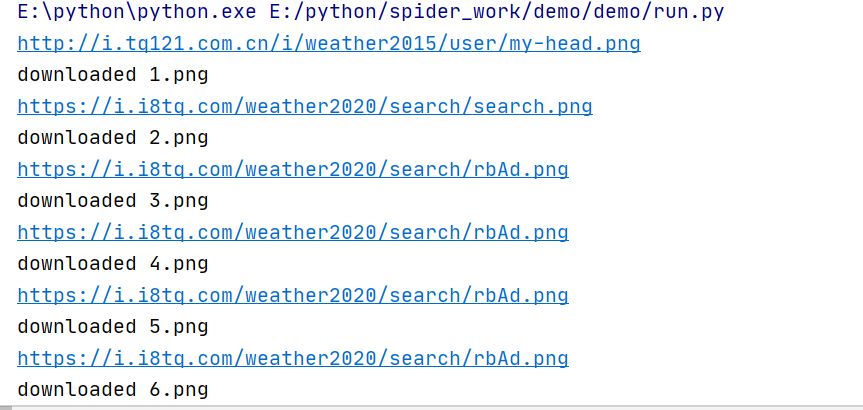

单线程结果如下:

The multithreaded code is as follows:

    from bs4 import BeautifulSoup
    from bs4 import UnicodeDammit
    import os
    import threading
    import urllib.request
    from urllib.parse import urljoin


    def imageSpider(start_url):
        global threads
        global count
        try:
            urls = []
            req = urllib.request.Request(start_url, headers=headers)
            data = urllib.request.urlopen(req)
            data = data.read()
            dammit = UnicodeDammit(data, ["utf-8", "gbk"])
            data = dammit.unicode_markup
            soup = BeautifulSoup(data, "lxml")
            images = soup.select("img")
            for image in images:
                try:
                    src = image["src"]
                    url = urljoin(start_url, src)
                    if url not in urls:
                        urls.append(url)  # record the URL so duplicates are skipped
                        print(url)
                        count = count + 1
                        # Download each image in its own non-daemon thread
                        T = threading.Thread(target=download, args=(url, count))
                        T.daemon = False
                        T.start()
                        threads.append(T)
                except Exception as err:
                    print(err)
        except Exception as err:
            print(err)


    def download(url, count):
        try:
            if url[len(url) - 4] == ".":
                ext = url[len(url) - 4:]
            else:
                ext = ""
            req = urllib.request.Request(url, headers=headers)
            data = urllib.request.urlopen(req, timeout=100)
            data = data.read()
            with open("images1/" + str(count) + ext, "wb") as fobj:
                fobj.write(data)
            print("downloaded" + str(count) + ext)
        except Exception as err:
            print(err)


    start_url = "http://www.weather.com.cn/weather/101280601.shtml"
    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
    count = 0
    threads = []
    os.makedirs("images1", exist_ok=True)  # make sure the output folder exists
    imageSpider(start_url)
    # Wait for every download thread to finish before reporting the end
    for t in threads:
        t.join()
    print("The End")

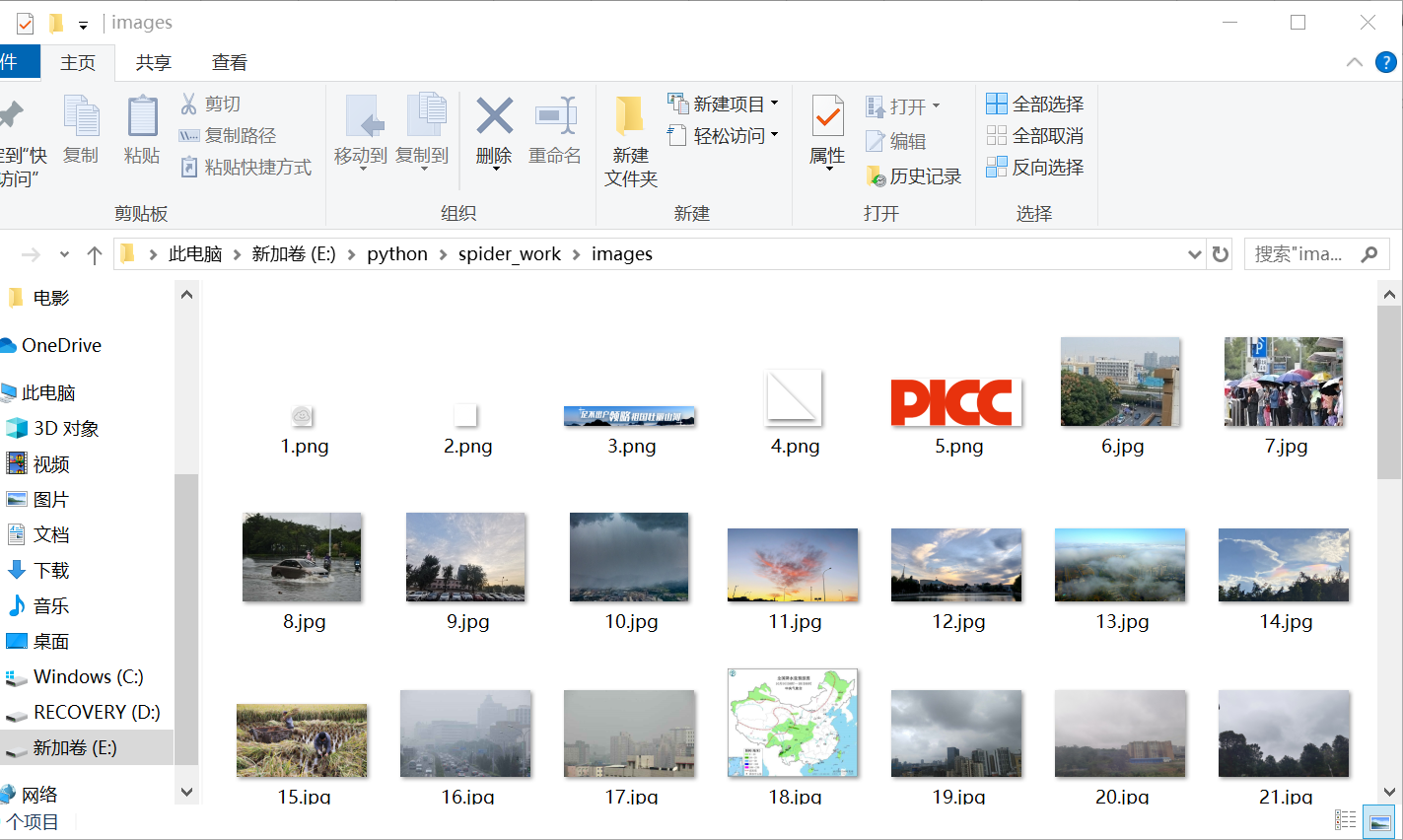

多线程结果如下:

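2) Reflections

This experiment is a verbatim reproduction of the textbook example. By reproducing both the single-threaded and the multithreaded versions, we learned how threads can be used to crawl a site's content more efficiently.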
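To make the efficiency point concrete, here is a minimal sketch that contrasts sequential and concurrent execution of an I/O-bound task. It swaps in `concurrent.futures.ThreadPoolExecutor` for the hand-managed `threading.Thread` list used above, and `fake_download` is a hypothetical stand-in that sleeps instead of making a network request, so the timings only illustrate the idea.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url, delay=0.05):
    """Hypothetical stand-in for a real download: sleep to mimic network latency."""
    time.sleep(delay)
    return f"saved {url}"


def run_sequential(urls):
    """Handle one URL at a time, as the single-threaded crawler does."""
    return [fake_download(u) for u in urls]


def run_threaded(urls, workers=8):
    """Handle URLs concurrently; map() returns results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_download, urls))


def timed(func, urls):
    """Return (result, elapsed seconds) for one call of func(urls)."""
    start = time.perf_counter()
    result = func(urls)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    urls = [f"http://example.com/img{i}.png" for i in range(8)]  # made-up URLs
    _, t_seq = timed(run_sequential, urls)
    _, t_thr = timed(run_threaded, urls)
    print(f"sequential: {t_seq:.2f}s, threaded: {t_thr:.2f}s")
```

Because the waiting time of each simulated request overlaps with the others, the threaded run should finish in roughly the time of one request rather than the sum of all of them.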

Assignment 2

1) Experiment: Reproduce Assignment 1 using the Scrapy framework.

The code for each part of the Scrapy project is as follows:

MySpider code:

    import scrapy
    from ..items import ImgItem


    class MySpider(scrapy.Spider):
        name = "MySpider"
        allowed_domains = ['weather.com.cn']
        start_urls = ["http://www.weather.com.cn/weather/101280601.shtml"]

        def parse(self, response):
            try:
                data = response.body.decode()
                selector = scrapy.Selector(text=data)
                # Collect the src attribute of every <img> element
                srcs = selector.xpath('//img/@src').extract()
                for src in srcs:
                    print(src)
                    item = ImgItem()
                    # Resolve relative paths so the pipeline receives a full URL
                    item['src'] = response.urljoin(src)
                    yield item
            except Exception as err:
                print(err)

items code:

    import scrapy


    class ImgItem(scrapy.Item):
        # URL of one image found on the page
        src = scrapy.Field()

pipelines code:

    import urllib.request


    class ImagePipeline:
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36"}
        count = 0

        def process_item(self, item, spider):
            try:
                self.count += 1
                src = item['src']
                # Keep a three-letter extension such as ".png" if the URL ends with one
                if src[len(src) - 4] == ".":
                    ext = src[len(src) - 4:]
                else:
                    ext = ""
                req = urllib.request.Request(src, headers=self.headers)
                data = urllib.request.urlopen(req, timeout=100)
                data = data.read()
                # Save the image under a running number in the project's images folder
                with open("E:\\python\\spider_work\\demo\\demo\\images\\" + str(self.count) + ext, "wb") as fobj:
                    fobj.write(data)
                print("downloaded " + str(self.count) + ext)
            except Exception as err:
                print(err)
            return item

settings code:

    BOT_NAME = 'demo'

    SPIDER_MODULES = ['demo.spiders']
    NEWSPIDER_MODULE = 'demo.spiders'

    ITEM_PIPELINES = {
        'demo.pipelines.ImagePipeline': 300,
    }

    ROBOTSTXT_OBEY = False

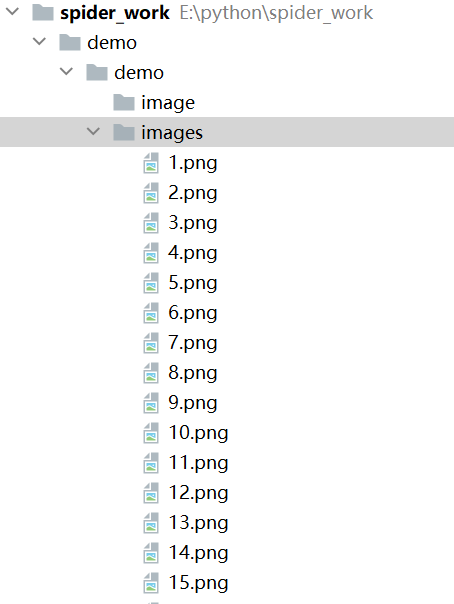

实验结果:

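2) Reflections

The Scrapy framework essentially splits the original crawler code into several parts by function and places each part in one of the files that Scrapy provides, which makes large crawlers easier to build. The core of the crawler is unchanged, but some details differ and need attention for the crawl to produce correct results. In this experiment, MySpider collects the URL of each image, and the download-and-save logic is written in pipelines.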
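Two such details are that an `src` attribute may hold a relative or protocol-relative path, and that the `src[len(src) - 4] == "."` check only recognizes a three-letter extension at the very end of the URL. The sketch below shows one way to handle both with the standard library; `image_target` is a hypothetical helper name and the sample paths are made up, so treat it as an illustration rather than part of the original project.

```python
import os
from urllib.parse import urljoin, urlparse


def image_target(page_url, src, index):
    """Return (absolute_url, file_name) for one <img src> value.

    Hypothetical helper: the name and the numbering scheme are assumptions.
    """
    absolute = urljoin(page_url, src)   # resolve relative or protocol-relative src
    path = urlparse(absolute).path      # ignore the query string and fragment
    ext = os.path.splitext(path)[1]     # "" when the path has no extension
    return absolute, f"{index}{ext}"


if __name__ == "__main__":
    page = "http://www.weather.com.cn/weather/101280601.shtml"
    for i, src in enumerate(["/i/logo.png?v=2", "//static.example.com/a.jpeg", "img/banner"], 1):
        print(image_target(page, src, i))
```

Inside a Scrapy spider the first step could also be done with `response.urljoin(src)`, leaving only the file-name logic for the pipeline.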

Assignment 3

1) Experiment: Use the Scrapy framework to crawl stock information.

The code for each part is as follows:

MySpider code:

    import scrapy
    import json
    from ..items import StockItem


    class stockSpider(scrapy.Spider):
        name = 'stock'
        start_urls = ['http://49.push2.eastmoney.com/api/qt/clist/get?cb=jQuery11240918880626239239_1602070531441&pn=1&pz=20&po=1&np=3&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1602070531442']

        def parse(self, response):
            # Strip the JSONP wrapper: the 41 leading characters (callback name
            # plus "(") and the trailing ");"
            jsons = response.text[41:][:-2]
            text_json = json.loads(jsons)
            for data in text_json['data']['diff']:
                item = StockItem()
                item["f12"] = data['f12']
                item["f14"] = data['f14']
                item["f2"] = data['f2']
                item["f3"] = data['f3']
                item["f4"] = data['f4']
                item["f5"] = data['f5']
                item["f6"] = data['f6']
                item["f7"] = data['f7']
                yield item
            print("Done")

items code:

    import scrapy


    class StockItem(scrapy.Item):
        # Field names follow the keys of the JSON response
        f12 = scrapy.Field()
        f14 = scrapy.Field()
        f2 = scrapy.Field()
        f3 = scrapy.Field()
        f4 = scrapy.Field()
        f5 = scrapy.Field()
        f6 = scrapy.Field()
        f7 = scrapy.Field()

pipelines code:

    class stockPipeline(object):
        count = 0

        def open_spider(self, spider):
            # Print the table header once, when the spider starts
            print("No.\t", "Code\t", "Name\t", "Latest price (CNY)\t ", "Change (%)\t", "Change amount (CNY)\t", "Volume\t", "Turnover (CNY)\t", "Amplitude (%)\t")

        def process_item(self, item, spider):
            self.count += 1
            # One tab-separated row per stock
            print(str(self.count) + "\t", str(item['f12']) + "\t", str(item['f14']) + "\t", str(item['f2']) + "\t", str(item['f3']) + "%\t", str(item['f4']) + "\t", str(item['f5']) + "\t", str(item['f6']) + "\t", str(item['f7']) + "%")
            return item

settings code:

    BOT_NAME = 'stock'

    SPIDER_MODULES = ['stock.spiders']
    NEWSPIDER_MODULE = 'stock.spiders'

    ITEM_PIPELINES = {
        'stock.pipelines.stockPipeline': 300,
    }

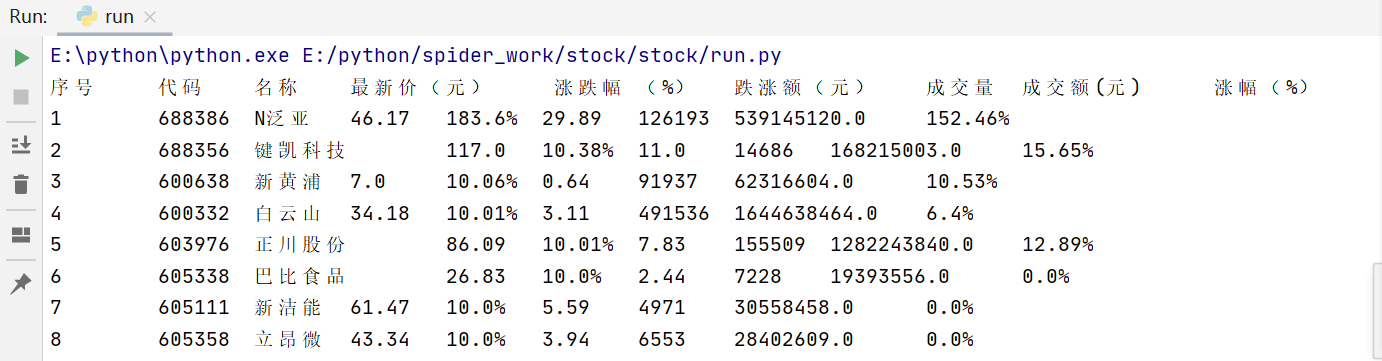

实验结果:

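2) Reflections

Building on the previous stock-crawling assignment, reproducing it with Scrapy was not difficult: the code is simply split into parts, with MySpider doing the crawling and pipelines printing the output in the required format.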
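One fragile spot in the spider is the fixed slice `response.text[41:][:-2]`, which only works while the callback name in the URL keeps the same length. As a hedged alternative, the sketch below finds the outer parentheses instead of counting characters; `strip_jsonp` is a hypothetical helper and the sample payload is made up to mirror the shape of the response.

```python
import json


def strip_jsonp(text):
    """Return the JSON payload inside a JSONP response such as `cb({...});`."""
    start = text.index("(") + 1   # the first "(" follows the callback name
    end = text.rindex(")")        # the last ")" closes the callback call
    return json.loads(text[start:end])


if __name__ == "__main__":
    # Made-up sample in the same shape as the response the spider parses
    sample = 'jQuery123_456({"data": {"diff": [{"f12": "000001", "f14": "demo", "f2": 1.23}]}});'
    for row in strip_jsonp(sample)["data"]["diff"]:
        print(row["f12"], row["f14"], row["f2"])
```

In the spider this would replace the slicing line, for example `text_json = strip_jsonp(response.text)`, with the rest of `parse` unchanged.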