Homework 14: Handwritten Digit Recognition (Small Dataset)

1. Handwritten digit dataset

# 1. Handwritten digit dataset
from sklearn.datasets import load_digits
import numpy as np
digits = load_digits()  # load the handwritten digits dataset (1797 images of 8x8 pixels)

2. Image data preprocessing

- x: normalize with MinMaxScaler()

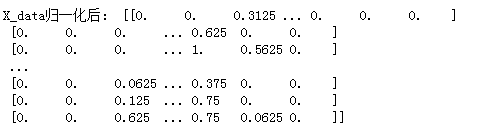

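# Normalize X_data to [0, 1] with MinMaxScaler
from sklearn.preprocessing import MinMaxScaler
X_data = digits.data.astype(np.float32)  # (1797, 64): one row of 64 pixel values per image
scaler = MinMaxScaler()
X_data = scaler.fit_transform(X_data)
print("X_data after normalization:", X_data)
# Reshape into a 4-D tensor (samples, height, width, channels), the layout Conv2D expects
X = X_data.reshape(-1, 8, 8, 1)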
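The following plain-NumPy sketch shows what MinMaxScaler computes. It is a simplified re-implementation for illustration, and the helper `min_max_scale` is hypothetical, not part of scikit-learn. Each column is mapped to (x - min) / (max - min). A column whose range is zero is mapped to 0, which mirrors how scikit-learn avoids dividing by zero. The digits data has some always-blank border pixels, so this case does occur. The scaled rows are then reshaped into the (samples, height, width, channels) layout.

```python
import numpy as np


def min_max_scale(X):
    """Hypothetical helper: scale each column of a 2-D array to [0, 1]."""
    X = np.asarray(X, dtype=np.float32)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    # Constant columns (range 0) would divide by zero; use 1 instead so they map to 0
    col_range[col_range == 0] = 1.0
    return (X - col_min) / col_range


# Two made-up "images" of 4 pixels each (stand-ins for the 64-pixel digit rows)
flat = np.array([[0, 16, 8, 0],
                 [0, 4, 16, 0]])
scaled = min_max_scale(flat)
print(scaled)

# Reshape the flat rows into NHWC tensors: (samples, height, width, channels)
tensor = scaled.reshape(-1, 2, 2, 1)
print(tensor.shape)  # (2, 2, 2, 1)
```

Applying the same reshape with `(-1, 8, 8, 1)` to the 1797×64 digits matrix gives a (1797, 8, 8, 1) tensor.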

- y: one-hot encode with OneHotEncoder() or to_categorical

- Tensor structure

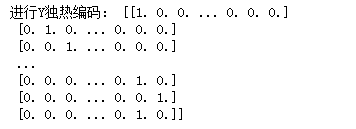

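# One-hot encoding with OneHotEncoder
from sklearn.preprocessing import OneHotEncoder
y = digits.target.astype(np.float32).reshape(-1, 1)  # turn the labels into a single column
# The encoder returns a sparse matrix; toarray() converts it to a dense (1797, 10) ndarray
# (todense() would return np.matrix, which newer scikit-learn releases reject)
Y = OneHotEncoder().fit_transform(y).toarray()
print("Y after one-hot encoding:", Y)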

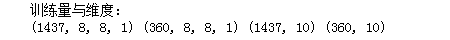
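OneHotEncoder and to_categorical both produce one row per sample, with a single 1 in the column of that sample's class. The minimal NumPy equivalent below assumes integer labels 0 to 9; the helper `one_hot` is hypothetical. It indexes rows of an identity matrix, and `argmax` along each row undoes the encoding.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Hypothetical helper: one-hot encode integer class labels."""
    labels = np.asarray(labels, dtype=int)
    # Row k of the identity matrix is the one-hot vector for class k
    return np.eye(num_classes, dtype=np.float32)[labels]


labels = np.array([3, 0, 9, 3])
encoded = one_hot(labels)
print(encoded.shape)  # (4, 10)

# argmax along each row recovers the original labels
decoded = encoded.argmax(axis=1)
print(decoded)  # [3 0 9 3]
```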

- Split into training and test sets

# Split into training and test sets (80/20, stratified by digit label)
from sklearn.model_selection import train_test_split
x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.2, random_state=0, stratify=digits.target)
print(x_train.shape, x_test.shape, y_train.shape, y_test.shape)

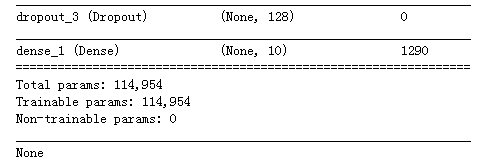
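Setting `stratify` keeps each digit's share of the data roughly equal in the training and test sets. With 1797 samples and `test_size=0.2`, scikit-learn puts ceil(0.2 × 1797) = 360 samples in the test set and 1437 in the training set. The sketch below is a simplified per-class split written for illustration. The helper `stratified_split` is hypothetical and does not reproduce scikit-learn's exact algorithm or random draws. It shuffles the indices of each class and sends about `test_size` of them to the test set.

```python
import numpy as np


def stratified_split(labels, test_size=0.2, seed=0):
    """Hypothetical helper: return (train_idx, test_idx) with per-class proportions preserved."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)  # positions of this class
        rng.shuffle(idx)
        n_test = int(round(len(idx) * test_size))  # test samples taken from this class
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.array(sorted(train_idx)), np.array(sorted(test_idx))


# Toy labels: 50 samples of class 0, 30 of class 1, 20 of class 2
labels = np.array([0] * 50 + [1] * 30 + [2] * 20)
train_idx, test_idx = stratified_split(labels, test_size=0.2)
print(len(train_idx), len(test_idx))                # 80 20
print(np.bincount(labels[test_idx]).tolist())       # [10, 6, 4]
```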

3. Designing the convolutional neural network

- Draw the model structure diagram and explain the design rationale.

# 3. Designing the convolutional neural network
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout, Conv2D, MaxPool2D, Flatten
# Build the model
model = Sequential()
ks = (3, 3)  # convolution kernel size
input_shape = x_train.shape[1:]  # (8, 8, 1)
# Conv layer 1; padding='same' makes TensorFlow zero-pad the input so the 8x8 size is kept
model.add(Conv2D(filters=16, kernel_size=ks, padding='same', input_shape=input_shape, activation='relu'))
# Pooling layer 1
model.add(MaxPool2D(pool_size=(2, 2)))
# Dropout randomly drops connections to reduce overfitting
model.add(Dropout(0.25))
# Conv layer 2
model.add(Conv2D(filters=32, kernel_size=ks, padding='same', activation='relu'))
# Pooling layer 2
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
# Conv layer 3
model.add(Conv2D(filters=64, kernel_size=ks, padding='same', activation='relu'))
# Conv layer 4
model.add(Conv2D(filters=128, kernel_size=ks, padding='same', activation='relu'))
# Pooling layer 3
model.add(MaxPool2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
# Flatten layer
model.add(Flatten())
# Fully connected layer
model.add(Dense(128, activation='relu'))
model.add(Dropout(0.25))
# Output layer: softmax over the 10 digit classes
model.add(Dense(10, activation='softmax'))
model.summary()  # summary() prints the table itself and returns None, so no print() is needed
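Tracing the shapes by hand helps explain the design. Every convolution uses `padding='same'`, so only the 2×2 pooling layers shrink the feature maps. The 8×8 input therefore goes 8 → 4 → 2 → 1, and three pooling layers is the most an 8×8 image allows. The pure-Python sketch below reproduces the output shapes and parameter counts that `model.summary()` should report for this architecture. The helper `trace` and its layer tuples are hypothetical, not a Keras API. Dropout and Flatten add no parameters.

```python
def trace(input_hw, in_channels, layers):
    """Hypothetical helper: follow output shapes and parameter counts through the CNN."""
    h = w = input_hw
    c = in_channels
    total = 0
    rows = []
    for kind, arg in layers:
        params = 0
        if kind == "conv3x3":  # padding='same': height and width are unchanged
            params = 3 * 3 * c * arg + arg  # 3x3xC weights per filter + one bias per filter
            c = arg
        elif kind == "pool2x2":  # 2x2 max pooling halves height and width
            h, w = h // 2, w // 2
        elif kind == "flatten":  # h x w x c feature maps become one vector
            c, h, w = h * w * c, None, None
        elif kind == "dense":
            params = c * arg + arg  # weight matrix + biases
            c = arg
        # "dropout" has no parameters and keeps the shape, so nothing to do
        total += params
        shape = (c,) if h is None else (h, w, c)
        rows.append((kind, shape, params))
    return rows, total


cnn = [
    ("conv3x3", 16), ("pool2x2", None), ("dropout", None),
    ("conv3x3", 32), ("pool2x2", None), ("dropout", None),
    ("conv3x3", 64), ("conv3x3", 128), ("pool2x2", None), ("dropout", None),
    ("flatten", None), ("dense", 128), ("dropout", None), ("dense", 10),
]
rows, total = trace(8, 1, cnn)
for kind, shape, params in rows:
    print(f"{kind:8s} {str(shape):15s} {params}")
print("Total params:", total)  # Total params: 114954
```

Most of the parameters sit in the fourth convolution (73,856). This suggests where to trim first if the network overfits such a small dataset.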

4. Model training

import matplotlib.pyplot as plt
# Plot a training metric against its validation counterpart
def show_train_history(train_history, train, validation):
    plt.plot(train_history.history[train])
    plt.plot(train_history.history[validation])
    plt.title('Train History')
    plt.ylabel(train)  # label the y-axis with the metric name
    plt.xlabel('epoch')
    plt.legend(['train', 'validation'], loc='upper left')
    plt.show()
# 4. Model training
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
train_history = model.fit(x=x_train, y=y_train, validation_split=0.2, batch_size=300, epochs=10, verbose=2)  # train for 10 epochs
# Accuracy
show_train_history(train_history, 'accuracy', 'val_accuracy')
# Loss
show_train_history(train_history, 'loss', 'val_loss')

Accuracy curve:

Loss curve:
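With `validation_split=0.2`, Keras holds out the last 20% of `x_train`, selected before shuffling, for validation. Each epoch therefore trains on fewer samples than `x_train` contains. The arithmetic below is a sketch that assumes the floor-based split used by recent Keras versions and the 1437-sample training set from the 80/20 split. The helper `epoch_plan` is hypothetical.

```python
import math


def epoch_plan(n_samples, validation_split, batch_size, epochs):
    """Hypothetical helper: samples and gradient steps implied by model.fit() settings."""
    n_train = int(math.floor(n_samples * (1.0 - validation_split)))
    n_val = n_samples - n_train
    steps_per_epoch = math.ceil(n_train / batch_size)  # the last batch may be partial
    return n_train, n_val, steps_per_epoch, steps_per_epoch * epochs


n_train, n_val, steps, total_steps = epoch_plan(1437, 0.2, 300, 10)
print(n_train, n_val, steps, total_steps)  # 1149 288 4 40
```

Under these assumptions, the whole run performs 40 Adam updates. A smaller `batch_size` or more `epochs` would increase that number.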

5. Model evaluation

- model.evaluate()

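# 5. Model evaluation
import pandas as pd
import seaborn as sns
score = model.evaluate(x_test, y_test)  # returns [loss, accuracy] for the compiled metrics
print("score:", score)
# Predicted classes: predict_classes() was removed in TensorFlow 2.6, so take the argmax of the softmax output
pre = np.argmax(model.predict(x_test), axis=1)
print("First 10 predictions:", pre[:10])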
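`model.evaluate()` returns the loss followed by the compiled metrics, here `[loss, accuracy]`. To make those two numbers concrete, the sketch below recomputes them in NumPy from a small, made-up matrix of softmax outputs. These values are invented for illustration and are not results from this model. The sketch assumes categorical cross-entropy averaged over samples, with probabilities clipped away from 0 (a simplification of what Keras does). The predicted class is the argmax of each row, which replaces the removed `predict_classes()`.

```python
import numpy as np

# Made-up softmax outputs for 4 test samples over 3 classes (each row sums to 1)
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.2, 0.3, 0.5],
                  [0.6, 0.3, 0.1]])
# One-hot true labels: classes 0, 1, 2, 1
y_true = np.eye(3)[[0, 1, 2, 1]]

# Predicted class = index of the largest probability
pred = probs.argmax(axis=1)

# Categorical cross-entropy: mean of -log(probability assigned to the true class)
eps = 1e-7  # clip to avoid log(0)
loss = -np.mean(np.sum(y_true * np.log(np.clip(probs, eps, 1.0)), axis=1))
accuracy = np.mean(pred == y_true.argmax(axis=1))
print(pred)      # [0 1 2 0]
print(accuracy)  # 0.75
print(round(float(loss), 4))
```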

- Crosstab and confusion matrix

- pandas.crosstab

- seaborn.heatmap

# Crosstab and confusion matrix
# Convert the one-hot test labels back to digit labels (np.asarray also turns an np.matrix into a plain array)
y_true = np.asarray(y_test).argmax(axis=1)
# Crosstab comparing the true labels with the predictions (already a DataFrame)
ct = pd.crosstab(y_true, pre, rownames=['Labels'], colnames=['Predict'])
print(ct)
# Confusion matrix drawn as a heatmap; fmt='d' prints the counts as integers
sns.heatmap(ct, annot=True, fmt='d', cmap="Oranges", linewidths=0.2, linecolor="g")
plt.show()
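`pd.crosstab` counts how often each (true, predicted) pair occurs, which is exactly a confusion matrix. The NumPy sketch below computes the same counts; the helper `confusion_matrix_np` is hypothetical, and scikit-learn's `sklearn.metrics.confusion_matrix` does the same job. It also shows how to read per-class recall: the diagonal divided by the row sums. One difference is worth knowing. `crosstab` only creates columns for classes that actually appear in the predictions, whereas a fixed `num_classes × num_classes` matrix always has every class.

```python
import numpy as np


def confusion_matrix_np(y_true, y_pred, num_classes):
    """Hypothetical helper: rows = true labels, columns = predicted labels."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # np.add.at accumulates correctly even when the same (true, pred) pair repeats
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


y_true = np.array([0, 0, 1, 1, 2, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0, 2])
cm = confusion_matrix_np(y_true, y_pred, num_classes=3)
print(cm)
# Per-class recall: correct predictions (diagonal) / number of true samples (row sum)
recall = cm.diagonal() / cm.sum(axis=1)
print(recall.round(3).tolist())  # [0.5, 1.0, 0.667]
```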