I. Getting to know keepalived

1. Preface

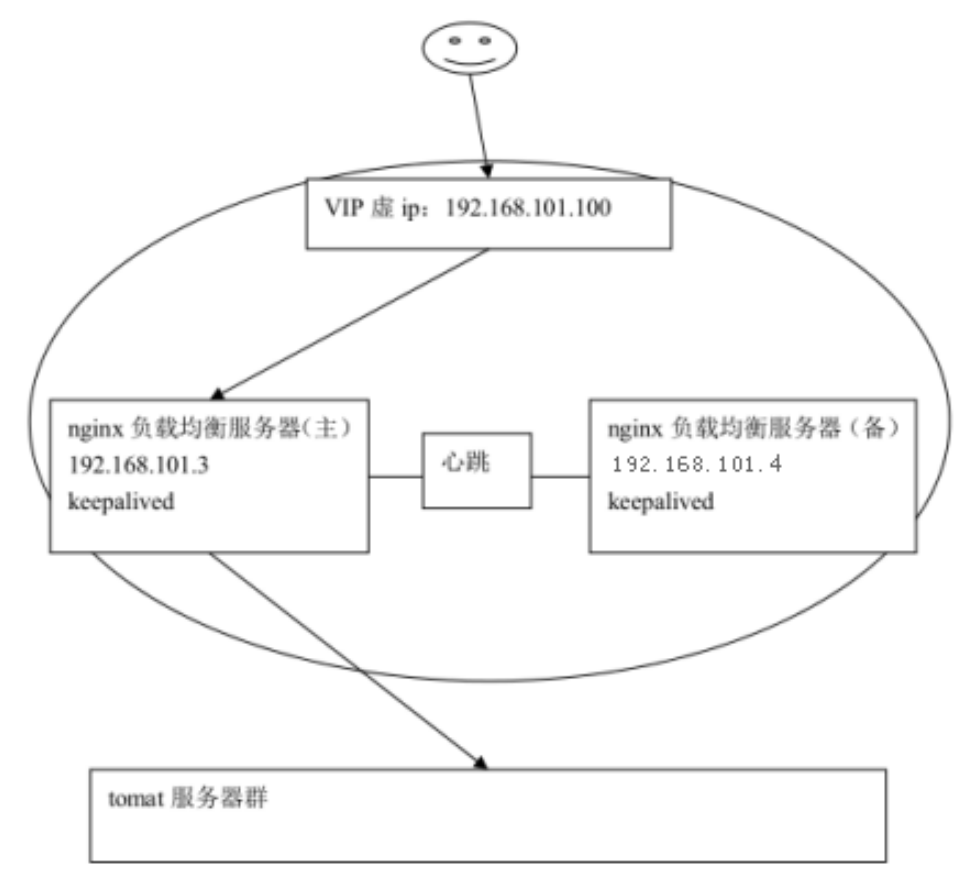

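nginx acts as the load balancer, so every request passes through it. That makes nginx a critical point in the architecture: if the nginx server goes down, the backend web services become unreachable, with serious consequences.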

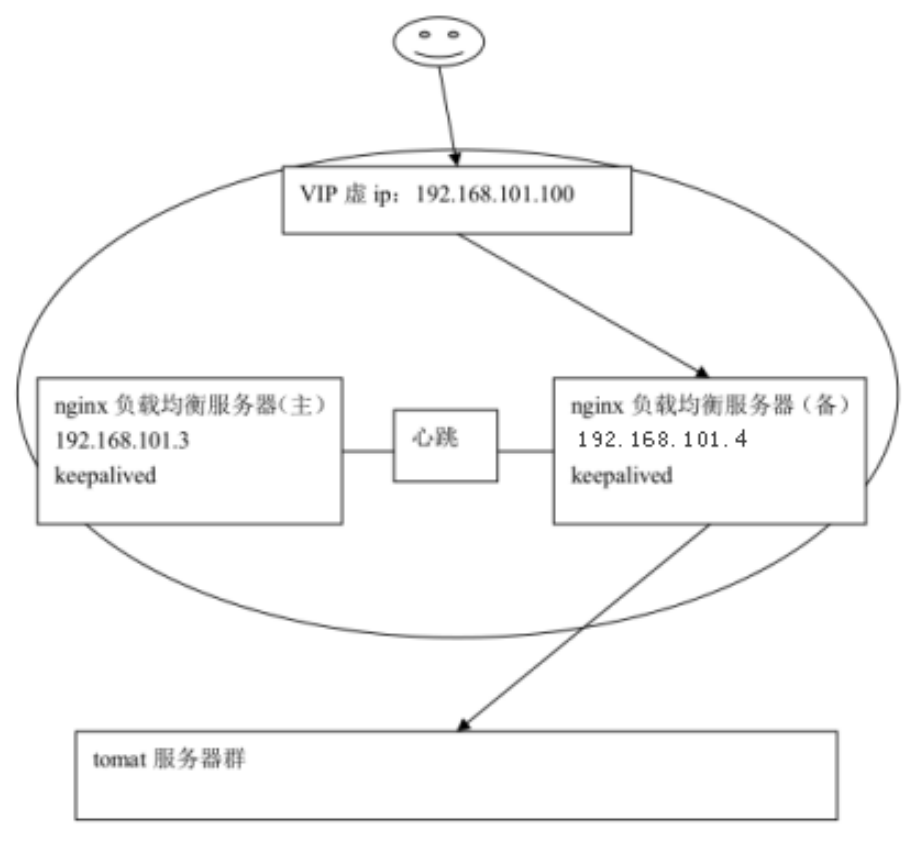

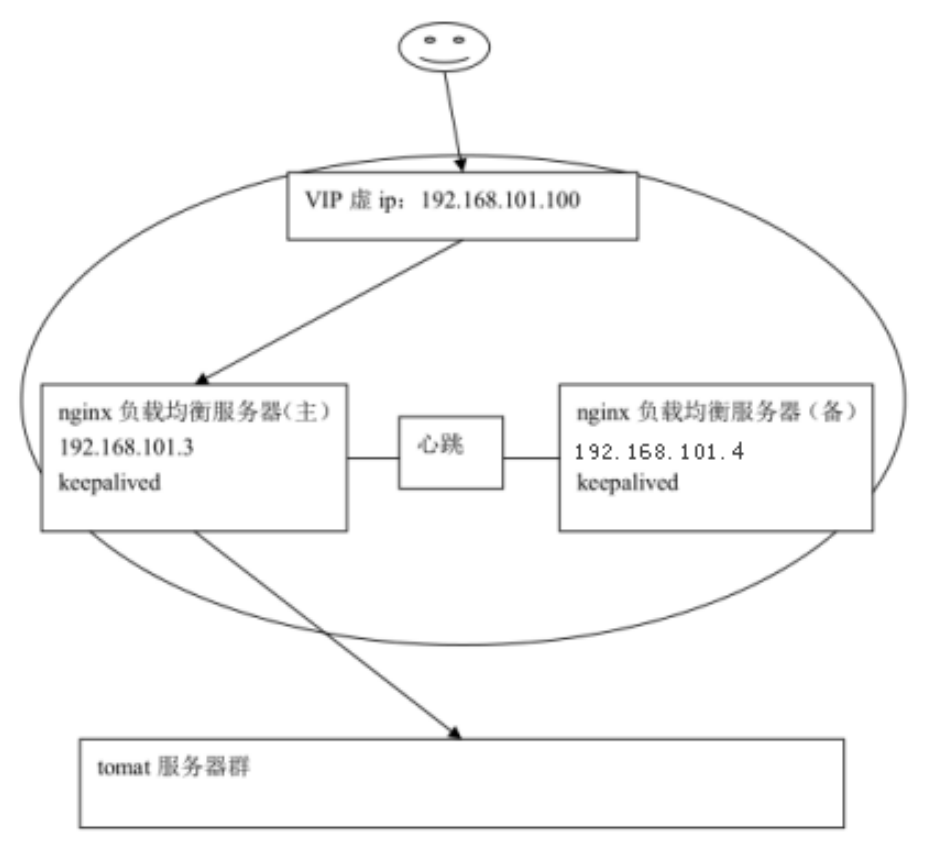

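To mask a failure of the load-balancing server, a backup machine is needed. Both the primary server and the backup run a High Availability monitoring program (such as keepalived) and monitor each other by exchanging messages along the lines of "I am alive". When the backup does not receive such a message within a set period, it takes over the primary's service IP and continues to provide load balancing. When the backup later receives "I am alive" from the primary again, it releases the service IP and the primary resumes load balancing.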

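2. Introduction to keepalived

keepalived is a service used in cluster management to keep a cluster (such as the nginx cluster covered here) highly available and to prevent single points of failure.

Keepalived monitors the state of web servers (such as nginx). If a web server crashes or malfunctions, Keepalived (on the backup machine) detects it and removes the faulty server from the system. Once the server is working again, Keepalived automatically adds it back to the server pool. All of this happens automatically; the only manual work is repairing the faulty web server.

keepalived is built on the VRRP protocol. VRRP stands for Virtual Router Redundancy Protocol.

VRRP can be thought of as a protocol for making routers highly available. N routers that provide the same function form a router group with one master and several backups. The master holds a VIP (Virtual IP Address) that serves external traffic, and the other machines on the router's LAN use this VIP as their default route. The master sends VRRP advertisements by multicast; when the backups stop receiving them, they consider the master down, and a new master is elected from the backups according to VRRP priority. This keeps the router highly available.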
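The election rule described above can be illustrated with a small shell sketch. It is a toy model rather than keepalived's actual code: the function name elect_master and the node names are made up for illustration, ties between equal priorities are not modeled, and the priorities 100 and 99 are simply the values used in the configuration later in this article.

```shell
#!/bin/sh
# Toy model of VRRP master election; not keepalived code.
# stdin:  one "name priority" pair per line, listing only the nodes that are
#         still alive and taking part in the election.
# stdout: the name of the node with the highest priority.
elect_master() {
    awk 'NR == 1 || $2 + 0 > best { best = $2 + 0; name = $1 } END { print name }'
}

# Both nodes alive: the node with priority 100 wins.
printf '%s\n' "node_a 100" "node_b 99" | elect_master

# node_a has failed, so only node_b takes part in the election.
printf '%s\n' "node_b 99" | elect_master
```

The first call prints node_a and the second prints node_b, which mirrors the failover behaviour verified later with real containers.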

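keepalived has three main modules: core, check and VRRP. The core module is the heart of keepalived; it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks, covering the common check methods. The VRRP module implements the VRRP protocol.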

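Initial state:

The backend server cluster (such as the nginx cluster) serves clients through the VIP 193.168.101.100. Clients know only the VIP and are unaware of the real addresses of the backend servers.

Master failure:

Master recovery:

virtual_ipaddress sets the virtual IP addresses (VIPs), also called floating IP addresses. Several VIPs can be configured, one per line. They are called floating because when Keepalived switches to the MASTER state the addresses are automatically added to the system (of the server that becomes master), and when it switches to the BACKUP state they are automatically removed from that system. Keepalived adds the VIP to the system in the same form as an "ip address add" command. To see the VIPs that have been added, run "ip add" (short for "ip address").

Entries in the "virtual_ipaddress" block can take many forms. For example, for an entry written as "192.168.16.189/24 dev eth1", Keepalived adds the address as the command "ip addr add 192.168.16.189/24 dev eth1" would. The configuration rules here are therefore the same as the usage rules of the ip command.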
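As a rough illustration of that correspondence, the sketch below prints the ip command that matches one virtual_ipaddress entry. The helper name vip_to_ip_command is hypothetical and not part of keepalived. It assumes that an entry without a prefix length is treated as /32 and that an entry without "dev" goes on the interface of the VRRP instance, which is consistent with the "192.168.0.66/32 scope global eth0" lines in the ip output later in this article.

```shell
#!/bin/sh
# Hypothetical helper, not part of keepalived: print the "ip addr add"
# command that corresponds to one virtual_ipaddress entry.
#   $1  the entry as written in keepalived.conf
#   $2  the interface of the vrrp_instance, used when the entry has no "dev"
vip_to_ip_command() {
    entry=$1
    default_iface=$2
    addr=${entry%% *}        # first word: the address, with or without /prefix
    rest=${entry#"$addr"}    # any remaining options, possibly empty
    case $addr in
        */*) ;;                          # prefix length already given
        *)   addr="$addr/32" ;;          # assumed default: a host address
    esac
    case " $rest " in
        *" dev "*) ;;                    # interface already given
        *)         rest="$rest dev $default_iface" ;;
    esac
    printf 'ip addr add %s%s\n' "$addr" "$rest"
}

vip_to_ip_command "192.168.16.189/24 dev eth1" eth0
vip_to_ip_command "192.168.0.66" eth0
```

The helper only prints the commands; it does not change any network configuration.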

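A virtual IP address (VIP) is an IP address that is not assigned to the NIC of an actual elastic cloud server. Besides its private IP address, an elastic cloud server can also have virtual IP addresses, and users can reach the server through either one (private IP or virtual IP). A virtual IP address has the same network access capabilities as a private IP address, including layer 2 and layer 3 communication within a VPC and access over VPC peering connections. A virtual IP address gives a NIC a second IP address and can be bound to the NICs of several cloud servers at once, which makes high availability across those servers possible.

A VIP gives clients a fixed "virtual" access address, so that a switchover among the backend servers does not affect them. The VIP is configured on the Master's NIC, all requests to the VIP go to the Master, and the Slave servers stay in Standby. If the Master fails, the cluster elects a new Master from the available Slave nodes and moves the VIP to the new Master's NIC. The service therefore stays available and the IP that clients use never changes. Note that the VIP always points to a single Master, so a VIP alone provides HA, not LB.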

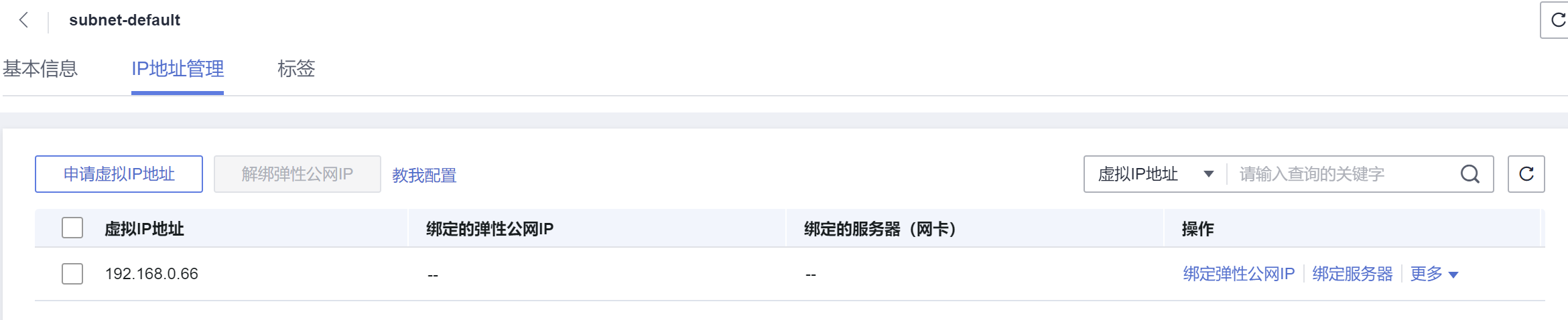

II. Configuring a virtual IP on my Huawei Cloud server

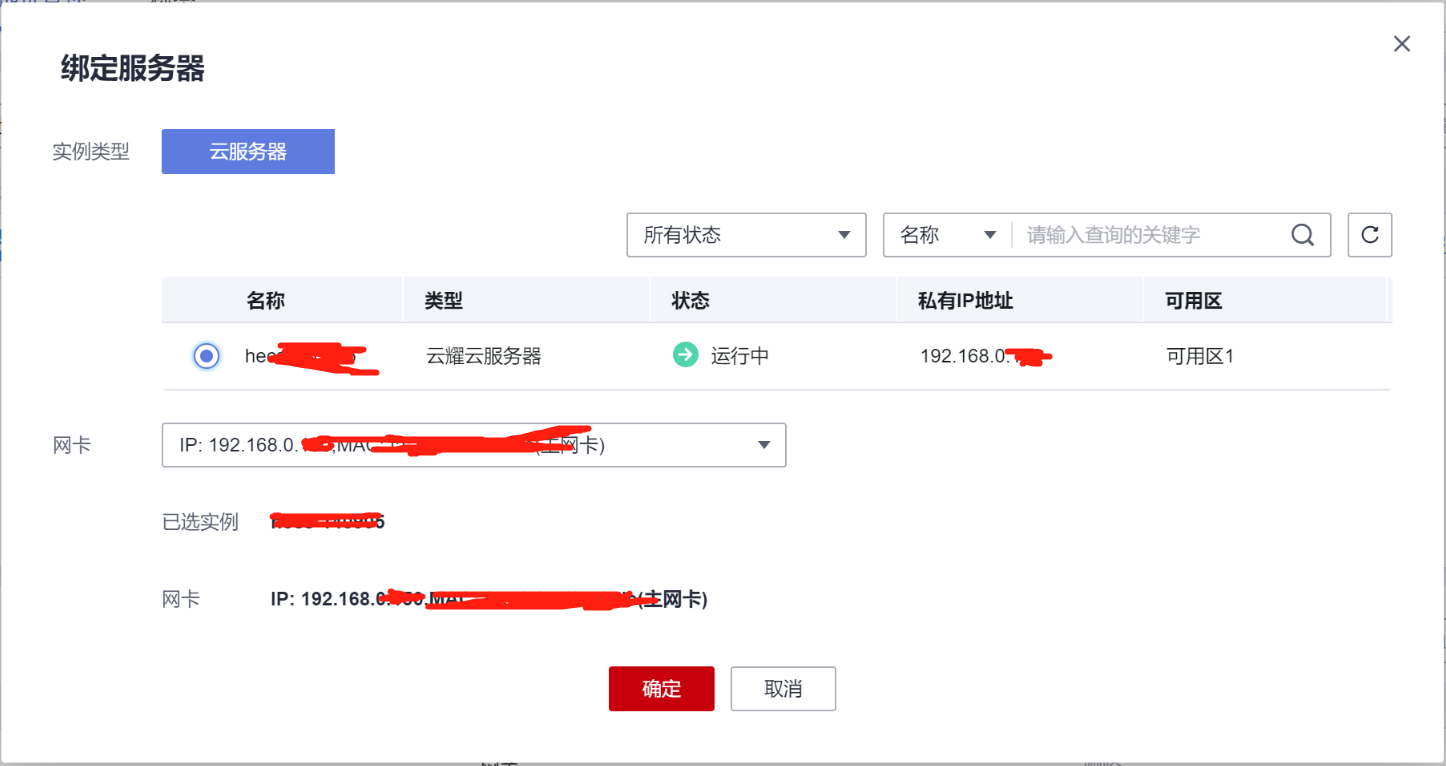

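My server is on Huawei Cloud, so only the Huawei Cloud procedure for setting up a virtual IP is covered here.

Log in to the management console and choose "Overview > HECS" (云耀服务器). In the server list, click the server name to open its details page, select the "NICs" tab, and click "Manage Virtual IP Address" to reach the following page:

Click Assign Virtual IP Address

Click OK

Click Bind to Server

Click OK.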

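III. Installing nginx and keepalived with docker

Next we implement high availability with keepalived by installing nginx and keepalived in centos7 containers. The figure below is only illustrative; its IP addresses are unrelated to this article.

1. Run two centos containers

(1) Pull the centos:7.6.1810 image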

docker pull centos:7.6.1810

Create a user-defined network

docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

(2) Run two centos containers

Because the VIP must be in the same subnet as the IP addresses that keepalived runs on, the containers are attached to the mynet network.

docker run --privileged --cap-add SYS_ADMIN -e container=docker --name centos_master --net mynet -d centos:7.6.1810 /usr/sbin/init
docker run --privileged --cap-add SYS_ADMIN -e container=docker --name centos_slave --net mynet -d centos:7.6.1810 /usr/sbin/init

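Each of these commands creates a CentOS container.

--privileged runs the container in privileged mode.

--cap-add SYS_ADMIN adds the SYS_ADMIN capability, without which many system features are unavailable. (Privileged mode already grants all capabilities, so the flag is redundant here but harmless.)

-e container=docker sets the environment variable that tells systemd which kind of container it is running in.

/usr/sbin/init starts the init process (systemd) inside the CentOS container.

The author treats these parameters as required; without them the CentOS container cannot be used and interacted with normally.

Without the init process and privileged mode, systemctl cannot be used, so none of these switches should be left out.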
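Since the two docker run commands differ only in the container name, they can be generated from one template. The following is a dry-run sketch: the function name centos_run_command is made up for illustration, and it only prints the commands (with the same flags as above) instead of executing them.

```shell
#!/bin/sh
# Dry-run sketch: print the docker run command for one container name.
# centos_run_command is a hypothetical helper; nothing is executed here.
centos_run_command() {
    name=$1
    printf 'docker run --privileged --cap-add SYS_ADMIN -e container=docker --name %s --net mynet -d centos:7.6.1810 /usr/sbin/init\n' "$name"
}

for name in centos_master centos_slave; do
    centos_run_command "$name"
done
```

To actually create the containers, the printed lines can be pasted into a shell on the docker host.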

Inspect the mynet network: docker network inspect mynet

"Containers": {
    "a7c5ac274402c817af76b0c32cded901a0625755c957a4ed8487a81aa9aa172a": {
        "Name": "centos_slave",
        "EndpointID": "c677d37936bd0d7c107d5d5f7a074cebd90900873df531f7eaaa8c8f3d1a85a9",
        "MacAddress": "02:42:c0:a8:00:03",
        "IPv4Address": "192.168.0.3/16",
        "IPv6Address": ""
    },
    "c6e689818ca21969265e620261f0b0e07f19bc524847adaee170ff03e63b469c": {
        "Name": "centos_master",
        "EndpointID": "5848c8cfa67cef6ed143465b7a34b68660453a1cac696ed40e4697d3c0b5f69f",
        "MacAddress": "02:42:c0:a8:00:02",
        "IPv4Address": "192.168.0.2/16",
        "IPv6Address": ""
    }
},

Because the containers need internet access to download packages, connect centos_master and centos_slave to the bridge network as well.

docker network connect bridge centos_master

docker network connect bridge centos_slave

Inspect the bridge network: docker network inspect bridge

"Containers": {
    "a7c5ac274402c817af76b0c32cded901a0625755c957a4ed8487a81aa9aa172a": {
        "Name": "centos_slave",
        "EndpointID": "e44bcad2341c1fce318519a117fa5cd8e40b00a1d5f3db2911922c6760e729c6",
        "MacAddress": "02:42:ac:11:00:03",
        "IPv4Address": "172.17.0.3/16",
        "IPv6Address": ""
    },
    "c6e689818ca21969265e620261f0b0e07f19bc524847adaee170ff03e63b469c": {
        "Name": "centos_master",
        "EndpointID": "c14aca283413d2d1b7e3cea7017dda2c9f5f7f11943469ef9652172220bd9b13",
        "MacAddress": "02:42:ac:11:00:02",
        "IPv4Address": "172.17.0.2/16",
        "IPv6Address": ""
    }
},

centos_master and centos_slave are now attached to both the bridge network and the mynet network.

(3) Enter the containers

List the containers: docker ps

[root@xxx ~]# docker ps
CONTAINER ID   IMAGE             COMMAND            CREATED         STATUS         PORTS   NAMES
a7c5ac274402   centos:7.6.1810   "/usr/sbin/init"   3 minutes ago   Up 3 minutes           centos_slave
c6e689818ca2   centos:7.6.1810   "/usr/sbin/init"   3 minutes ago   Up 3 minutes           centos_master

Enter the containers

docker exec -it centos_master /bin/bash

docker exec -it centos_slave /bin/bash

2. Install the nginx repository and nginx inside the containers

(1) First install the nginx yum repository package

rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

(2) Install nginx

yum install -y nginx

Check the nginx version

[root@xxxx /]# nginx -v
nginx version: nginx/1.22.0

This shows that nginx was installed successfully.

(3) Install the network packages (for commands such as ip and ifconfig)

yum install -y iproute

yum install -y net-tools

Run ifconfig to check the IP addresses

Master container

[root@xxx /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.0.2  netmask 255.255.0.0  broadcast 192.168.255.255
        ether 02:42:c0:a8:00:02  txqueuelen 0  (Ethernet)
        RX packets 8  bytes 656 (656.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 6  bytes 252 (252.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.17.0.2  netmask 255.255.0.0  broadcast 172.17.255.255
        ether 02:42:ac:11:00:02  txqueuelen 0  (Ethernet)
        RX packets 11489  bytes 29739901 (28.3 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 11438  bytes 940859 (918.8 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 128  bytes 11208 (10.9 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 128  bytes 11208 (10.9 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Slave container

[root@a1ca509408ba /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.0.3  netmask 255.255.0.0  broadcast 192.168.255.255
        ether 02:42:c0:a8:00:03  txqueuelen 0  (Ethernet)
        RX packets 8  bytes 656 (656.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.17.0.3  netmask 255.255.0.0  broadcast 172.17.255.255
        ether 02:42:ac:11:00:03  txqueuelen 0  (Ethernet)
        RX packets 6860  bytes 29393745 (28.0 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 6675  bytes 458937 (448.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 116  bytes 10414 (10.1 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 116  bytes 10414 (10.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

This shows that net-tools was installed successfully.

3. Edit the page title in the master and slave containers

(1) Edit index.html

cd /usr/share/nginx/html

vi index.html

nginx on the master container

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

nginx on the slave container

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Slave!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

(2) Start nginx

cd /usr/sbin/

./nginx

Besides knowing how to start nginx, you should also know how to stop it

• Find the nginx process IDs

ps -ef | grep nginx

• Kill all nginx processes

killall nginx

(3) Check that nginx is running in the master container

curl localhost

Result:

[root@xxx sbin]# curl localhost
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

4. Install keepalived inside the containers

(1) Install the build dependencies for keepalived

yum install -y gcc openssl-devel popt-devel

(2) Install keepalived from source

yum -y install wget
cd /root
wget http://www.keepalived.org/software/keepalived-2.0.8.tar.gz --no-check-certificate
tar -zxvf keepalived-2.0.8.tar.gz
rm -f keepalived-2.0.8.tar.gz
cd keepalived-2.0.8
./configure --prefix=/usr/local/keepalived
make && make install

Copy keepalived.conf to /etc/keepalived

mkdir /etc/keepalived

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

(3) In /etc/keepalived, create check_nginx.sh, the script Keepalived uses to check nginx

cd /etc/keepalived

touch check_nginx.sh

vi check_nginx.sh

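Add the following content

#!/bin/bash
# Count running nginx processes. ps -C matches by command name, so this
# script (whose own path contains "nginx") is not counted by mistake.
A=$(ps -C nginx --no-headers | wc -l)
if [ "$A" -eq 0 ]; then
    # nginx is down: try to start it, then check again after 2 seconds
    nginx
    sleep 2
    if [ "$(ps -C nginx --no-headers | wc -l)" -eq 0 ]; then
        # nginx could not be restarted: stop keepalived so the VIP fails over
        #killall keepalived
        ps -ef | grep keepalived | grep -v grep | awk '{print $2}' | xargs kill -9
    fi
fi

The script checks whether nginx is down. If it is, the script first tries to restart nginx; if nginx is still not running two seconds later, it kills keepalived so that the backup node becomes the master.

(4) Make check_nginx.sh executable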

chmod +x /etc/keepalived/check_nginx.sh

(5) Edit keepalived.conf

First edit keepalived.conf on the master

vi keepalived.conf

Change it as follows

! Configuration File for keepalived
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_MASTER
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight -20
    fall 3
    rise 2
    user root
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.66
    }
    track_script {
        chk_nginx
    }
}

Then edit keepalived.conf on the slave

vi keepalived.conf

Change it as follows

! Configuration File for keepalived
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_SLAVE
    vrrp_skip_check_adv_addr
    vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight -20
    fall 3
    rise 2
    user root
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 99
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.66
    }
    track_script {
        chk_nginx
    }
}
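The way "weight -20" interacts with the two priority values can be worked through with a little arithmetic. The sketch below assumes a tracked script that reports failure through a non-zero exit status; the check_nginx.sh in this article instead triggers failover by killing keepalived, so this shows the general mechanism rather than this setup's exact path. The function name effective_priority is made up for illustration.

```shell
#!/bin/sh
# Sketch of how a tracked script's weight changes the VRRP priority.
#   $1  configured priority
#   $2  weight of the tracked script
#   $3  1 if the script currently succeeds, 0 if it is considered failed
effective_priority() {
    base=$1
    weight=$2
    check_ok=$3
    if [ "$check_ok" -eq 1 ] && [ "$weight" -gt 0 ]; then
        echo $((base + weight))     # positive weight: bonus while healthy
    elif [ "$check_ok" -eq 0 ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))     # negative weight: penalty while failed
    else
        echo "$base"
    fi
}

effective_priority 100 -20 1    # master, check passing
effective_priority 100 -20 0    # master, check failed
effective_priority 99 -20 1     # backup, check passing
```

With these values a failed check would lower the master from 100 to 80, below the backup's 99, so the backup would win the next election.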

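5. Start keepalived inside the containers

(1) Start keepalived

If keepalived cannot be started with systemctl, start it as follows

cd /usr/local/keepalived/sbin/

./keepalived -f /etc/keepalived/keepalived.conf

Stop keepalived (3747 is the PID that ps reported in this run; use the one shown on your system)

ps -ef | grep keepalived
kill -9 3747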
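Looking up the PID by eye can be avoided by filtering the ps output. The sketch below is one possible way to do it, not part of the original procedure: keepalived_pids is a made-up helper name, and the sample text is the ps output shown later in this article, so the function can be tried without a running keepalived.

```shell
#!/bin/sh
# Read "ps -ef" style output on stdin and print the PIDs (second column) of
# keepalived processes, skipping the grep process itself.
keepalived_pids() {
    awk '/keepalived/ && !/grep/ { print $2 }'
}

# Sample ps -ef output taken from the test run later in this article.
sample='root 3771 0 1 09:19 ? 00:00:04 ./keepalived -f /etc/keepalived/keepalived.conf
root 3773 3771 0 09:19 ? 00:00:00 ./keepalived -f /etc/keepalived/keepalived.conf
root 15815 3744 0 09:23 pts/1 00:00:00 grep --color=auto keepalived'

printf '%s\n' "$sample" | keepalived_pids

# Inside a container this could be combined as (not executed here):
#   ps -ef | keepalived_pids | xargs kill
```

For the sample input the function prints 3771 and 3773, the parent and child keepalived processes.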

(2) Use the ip add command to check the IP addresses of the master and backup containers

Master container

[root@a0d600c99ee7 sbin]# ip add
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
146: eth0@if147: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.0.66/32 scope global eth0
       valid_lft forever preferred_lft forever
148: eth1@if149: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.2/16 brd 192.168.255.255 scope global eth1
       valid_lft forever preferred_lft forever

Slave container

[root@a1ca509408ba sbin]# ip add
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
150: eth0@if151: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
152: eth1@if153: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.3/16 brd 192.168.255.255 scope global eth1
       valid_lft forever preferred_lft forever

The virtual IP 192.168.0.66 is now bound to the master container.

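(3) Access the VIP with curl

In the master container, access 192.168.0.66

If the following error appears:

[root@c6e689818ca2 sbin]# curl 192.168.0.66
curl: (7) Failed connect to 192.168.0.66:80; Connection timed out

edit keepalived.conf and comment out the following line.

vrrp_strict

vrrp_strict enforces strict VRRP compliance, and in that mode keepalived installs firewall rules that drop traffic addressed to the VIP, which is why the connection times out. Separately, keepalived sends its VRRP advertisements by multicast by default. Cloud providers commonly block multicast and broadcast traffic, so on cloud servers (and, where preferred, on physical machines) keepalived is deployed in unicast mode instead, which vrrp_strict does not permit either.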
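For reference, unicast mode is configured inside the vrrp_instance block with the unicast_src_ip and unicast_peer options. The sketch below only prints such a fragment; it is not used in this article's configuration, the helper name render_unicast is hypothetical, and the addresses are the mynet addresses of the two containers, with the master as the source and the slave as its peer.

```shell
#!/bin/sh
# Print the unicast lines that would go inside a vrrp_instance block.
#   $1      this node's own address (unicast_src_ip)
#   $2...   the addresses of the other nodes (unicast_peer)
render_unicast() {
    src_ip=$1
    shift
    printf '    unicast_src_ip %s\n' "$src_ip"
    printf '    unicast_peer {\n'
    for peer in "$@"; do
        printf '        %s\n' "$peer"
    done
    printf '    }\n'
}

# Fragment for the master (192.168.0.2) with the slave as its only peer.
render_unicast 192.168.0.2 192.168.0.3
```

On the slave the two addresses would be swapped, and vrrp_strict has to stay disabled for unicast peers to be accepted.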

That is:

! Configuration File for keepalived
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_MASTER
    vrrp_skip_check_adv_addr
    # vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight -20
    fall 3
    rise 2
    user root
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.66
    }
    track_script {
        chk_nginx
    }
}

Start keepalived and access 192.168.0.66 with curl again.

[root@a0d600c99ee7 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

In the slave container, access 192.168.0.66

[root@a0d600c99ee7 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

As shown, when the master and slave containers both access nginx through the virtual IP 192.168.0.66, the response in each case is the page configured in the master container's nginx: Welcome to nginx Master.

Continue the test by stopping the keepalived service in the master container:

ps -ef | grep keepalived
kill -9 3747

Note: when the backup does not receive an "I am alive" message within a set period, it takes over the primary's service IP and continues to provide load balancing.

Check the master container's IP addresses

[root@a0d600c99ee7 sbin]# ip add
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
146: eth0@if147: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
148: eth1@if149: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.2/16 brd 192.168.255.255 scope global eth1
       valid_lft forever preferred_lft forever

Check the slave container's IP addresses

[root@a1ca509408ba sbin]# ip add
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
150: eth0@if151: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.0.66/32 scope global eth0
       valid_lft forever preferred_lft forever
152: eth1@if153: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.3/16 brd 192.168.255.255 scope global eth1
       valid_lft forever preferred_lft forever

The virtual IP 192.168.0.66 is now bound to the slave container.

Now run curl 192.168.0.66 in the master container

[root@a0d600c99ee7 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Slave!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

The result shows that once keepalived in the master container is stopped, curl 192.168.0.66 returns the slave's page, Welcome to nginx Slave. Accessing the VIP from the slave container returns Welcome to nginx Slave as well.

Continue the test by starting the keepalived service in the master container again:

cd /usr/local/keepalived/sbin/

./keepalived -f /etc/keepalived/keepalived.conf

Note: when the backup receives "I am alive" from the primary again, it releases the service IP and the primary resumes load balancing.

Now check the master container's IP addresses

[root@a0d600c99ee7 sbin]# ip add
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
146: eth0@if147: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.0.66/32 scope global eth0
       valid_lft forever preferred_lft forever
148: eth1@if149: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.2/16 brd 192.168.255.255 scope global eth1
       valid_lft forever preferred_lft forever

The virtual IP 192.168.0.66 is bound to the master container again.

Now run curl 192.168.0.66 once more

[root@a0d600c99ee7 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

curl 192.168.0.66 returns the Master's page again, which shows that high availability is working.
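Since the two pages differ only in their title, the node currently serving the VIP can be identified without reading the whole response. The following sketch assumes the title line has the form used in this article; page_title is a made-up helper name, and the sample input stands in for real curl output so that the function can be tried offline.

```shell
#!/bin/sh
# Read an HTML page on stdin and print the text of its first <title> element.
page_title() {
    sed -n 's:.*<title>\(.*\)</title>.*:\1:p' | head -n 1
}

# Offline example using the title line of the slave's page.
printf '%s\n' '<head>' '<title>Welcome to nginx Slave!</title>' '</head>' | page_title

# Against the real VIP this would be (not executed here):
#   curl -s 192.168.0.66 | page_title
```

Running the curl variant before and after stopping keepalived on the master should show the title change from Master to Slave.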

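IV. Build an image first, then create the two containers

Setting up the two containers as in section III involves a lot of repeated work. It is easier to build one image first and then create both containers from it.

1. Configure the template container

(1) Pull the centos:7.6.1810 image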

docker pull centos:7.6.1810

(2) Start and enter the container

docker run -it centos:7.6.1810 /bin/bash

(3) Install basic tools

yum install -y iproute

yum install -y net-tools

(4) Install the nginx repository and nginx

First install the nginx yum repository package

rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

Then install nginx

yum install -y nginx

(5) Edit the title in index.html

cd /usr/share/nginx/html

vi index.html

Change it as follows

<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

(6) Start nginx

cd /usr/sbin/
./nginx

Besides knowing how to start nginx, you should also know how to stop it

• Find the nginx process IDs

ps -ef | grep nginx

• Kill all nginx processes

killall nginx

(7) Check that nginx is running in the container

curl localhost

Result:

[root@07c519d6244b sbin]# curl localhost
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

(8) Install keepalived inside the container

Install the build dependencies for keepalived

yum install -y gcc openssl-devel popt-devel

Install keepalived from source

yum -y install wget

cd /root

wget http://www.keepalived.org/software/keepalived-2.0.8.tar.gz --no-check-certificate

tar -zxvf keepalived-2.0.8.tar.gz

rm -f keepalived-2.0.8.tar.gz

cd keepalived-2.0.8

./configure --prefix=/usr/local/keepalived

make && make install

Copy keepalived.conf to /etc/keepalived

mkdir /etc/keepalived

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

(9) In /etc/keepalived, create check_nginx.sh, the script Keepalived uses to check nginx, and make it executable

cd /etc/keepalived

touch check_nginx.sh

vi check_nginx.sh

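Add the following content

#!/bin/bash
# Count running nginx processes. ps -C matches by command name, so this
# script (whose own path contains "nginx") is not counted by mistake.
A=$(ps -C nginx --no-headers | wc -l)
if [ "$A" -eq 0 ]; then
    # nginx is down: try to start it, then check again after 2 seconds
    nginx
    sleep 2
    if [ "$(ps -C nginx --no-headers | wc -l)" -eq 0 ]; then
        # nginx could not be restarted: stop keepalived so the VIP fails over
        #killall keepalived
        ps -ef | grep keepalived | grep -v grep | awk '{print $2}' | xargs kill -9
    fi
fi

The script checks whether nginx is down. If it is, the script first tries to restart nginx; if nginx is still not running two seconds later, it kills keepalived so that the backup node becomes the master.

Make check_nginx.sh executable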

chmod +x /etc/keepalived/check_nginx.sh

(10) Edit keepalived.conf

First edit the master's keepalived.conf

vi keepalived.conf

Change it as follows

! Configuration File for keepalived
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_MASTER
    vrrp_skip_check_adv_addr
    # vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight -20
    fall 3
    rise 2
    user root
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.66
    }
    track_script {
        chk_nginx
    }
}

(11) Exit the container and build the image

Run exit to leave the container, then list the containers

docker ps -a

Result

CONTAINER ID   IMAGE             COMMAND       CREATED          STATUS                     PORTS   NAMES
07c519d6244b   centos:7.6.1810   "/bin/bash"   25 minutes ago   Exited (1) 2 minutes ago           peaceful_fermi

Use the commit command to save the container as an image

docker commit peaceful_fermi keepalived_nginx:v1

The image contains exactly what the container contained at that point, and it can now be used to run new containers.

2. Start the master and backup keepalived containers

Start the master container

docker run -it \
    --privileged=true \
    --name keepalived_master \
    --restart=always \
    --net mynet --ip 192.168.0.2 \
    keepalived_nginx:v1 \
    /usr/sbin/init

Or, without the backslashes, as a single line

docker run -it --privileged=true --name keepalived_master --restart=always --net mynet --ip 192.168.0.2 keepalived_nginx:v1 /usr/sbin/init

Start the slave container

docker run -it \
    --privileged=true \
    --name keepalived_slave \
    --restart=always \
    --net mynet --ip 192.168.0.3 \
    keepalived_nginx:v1 \
    /usr/sbin/init

Or, without the backslashes, as a single line

docker run -it --privileged=true --name keepalived_slave --restart=always --net mynet --ip 192.168.0.3 keepalived_nginx:v1 /usr/sbin/init

Starting the container with /usr/sbin/init makes systemctl usable inside it.

3. Enter the backup container and modify the keepalived configuration

docker exec -it keepalived_slave bash
cd /etc/keepalived
vi keepalived.conf

Change it as follows:

! Configuration File for keepalived
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 192.168.200.1
    smtp_connect_timeout 30
    router_id LVS_SLAVE
    vrrp_skip_check_adv_addr
    # vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
    weight -20
    fall 3
    rise 2
    user root
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 51
    priority 99
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.66
    }
    track_script {
        chk_nginx
    }
}

Only router_id, state and priority need to change.
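Because only those three values differ, the edit can also be expressed as a sed filter instead of being done by hand in vi. This is a sketch under the assumption that the file contains exactly the master values shown in this article (LVS_MASTER, MASTER, 100); the function name to_backup_conf is made up for illustration.

```shell
#!/bin/sh
# Turn the master's keepalived.conf (on stdin) into the backup's version
# (on stdout) by rewriting router_id, state and priority.
to_backup_conf() {
    sed -e 's/router_id LVS_MASTER/router_id LVS_SLAVE/' \
        -e 's/state MASTER/state BACKUP/' \
        -e 's/priority 100/priority 99/'
}

# Offline example on the lines that matter; other lines pass through unchanged.
printf '%s\n' 'router_id LVS_MASTER' 'state MASTER' 'priority 100' 'virtual_router_id 51' | to_backup_conf

# Inside the backup container this could be used as (not executed here):
#   to_backup_conf < keepalived.conf > keepalived.conf.new
```

Writing to a new file first makes it easy to compare the result with the original before replacing it.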

After the change, restart keepalived. To start it:

cd /usr/local/keepalived/sbin/

./keepalived -f /etc/keepalived/keepalived.conf

To stop it (3747 below is an example PID; use the one that ps reports):

ps -ef | grep keepalived

kill -9 3747

Edit the title in nginx's index.html (the image was built with the Master title, so change it to Slave here)

cd /usr/share/nginx/html

vi index.html

Start nginx

cd /usr/sbin/

./nginx

4. Test high availability

Enter the master container and start nginx and keepalived

Check the master container's IP addresses

[root@b3a23e52b268 sbin]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
188: eth0@if189: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.2/16 brd 192.168.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.0.66/32 scope global eth0
       valid_lft forever preferred_lft forever

Enter the slave container and check its IP addresses

[root@ad57dfb60f80 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
190: eth0@if191: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.3/16 brd 192.168.255.255 scope global eth0
       valid_lft forever preferred_lft forever

In the master container, access 192.168.0.66 with curl

[root@b3a23e52b268 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

In the slave container, access 192.168.0.66 with curl

[root@ad57dfb60f80 /]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Master!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Stop keepalived in the master container

[root@b3a23e52b268 sbin]# ps -ef | grep keepalived
root      3771     0  1 09:19 ?        00:00:04 ./keepalived -f /etc/keepalived/keepalived.conf
root      3773  3771  0 09:19 ?        00:00:00 ./keepalived -f /etc/keepalived/keepalived.conf
root     15815  3744  0 09:23 pts/1    00:00:00 grep --color=auto keepalived

Kill keepalived

kill -9 3771

Check the IP addresses in the master container

[root@b3a23e52b268 sbin]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
188: eth0@if189: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.2/16 brd 192.168.255.255 scope global eth0
       valid_lft forever preferred_lft forever

Check the IP addresses in the slave container

[root@ad57dfb60f80 /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
190: eth0@if191: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:c0:a8:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.3/16 brd 192.168.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 192.168.0.66/32 scope global eth0
       valid_lft forever preferred_lft forever

The VIP is now bound to the slave container.

In the master container, access 192.168.0.66 with curl

[root@b3a23e52b268 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Slave!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

In the slave container, access 192.168.0.66 with curl

[root@ad57dfb60f80 sbin]# curl 192.168.0.66
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx Slave!</title>
<style>
html { color-scheme: light dark; }
body { width: 35em; margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif; }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Start keepalived in the master container.

Check the IP addresses in the master container:

    [root@b3a23e52b268 sbin]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    192: eth0@if193: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:c0:a8:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 192.168.0.2/16 brd 192.168.255.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet 192.168.0.66/32 scope global eth0
           valid_lft forever preferred_lft forever

The VIP 192.168.0.66 is bound to the master container's eth0 again.

From the master container, access 192.168.0.66 with curl:

    [root@b3a23e52b268 sbin]# curl 192.168.0.66
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx Master!</title>
    <style>
    html { color-scheme: light dark; }
    body { width: 35em; margin: 0 auto;
    font-family: Tahoma, Verdana, Arial, sans-serif; }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

The page is served by the master's nginx again, which shows that keepalived provides high availability for nginx.
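The manual check above (reading the `ip a` output to see which container holds the VIP) can be scripted. The following is a minimal sketch, not part of the original setup: the function name `has_vip` is made up for illustration, and the sample text is just the eth0 address lines from the output shown above.

```sh
#!/bin/sh
# has_vip ADDR_TEXT VIP
# Succeeds when VIP appears as an "inet VIP/<prefix>" entry in the given
# `ip a` text. (has_vip is an illustrative name, not a keepalived tool.)
has_vip() {
    # -F matches a fixed string, so dots are not treated as wildcards;
    # the trailing "/" keeps 192.168.0.6 from matching 192.168.0.66.
    printf '%s\n' "$1" | grep -qF "inet $2/"
}

# Sample: the eth0 address lines from the master container's output.
sample='    inet 192.168.0.2/16 brd 192.168.255.255 scope global eth0
    inet 192.168.0.66/32 scope global eth0'

if has_vip "$sample" 192.168.0.66; then
    echo "VIP is bound here"
else
    echo "VIP is not bound here"
fi
```

Inside a container the same function could be fed live data, for example `has_vip "$(ip -4 addr show dev eth0)" 192.168.0.66`, run once on the master and once on the slave to see which side currently owns the address.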

V. Implementing nginx load balancing

1. Set up MySQL master-slave replication

For the detailed steps, see: https://www.cnblogs.com/zwh0910/p/16511041.html

Create the mysql-master container:

    docker run -it -p 3301:3306 --name mysql-master --net mynet --ip 192.168.0.30 -v ~/mysql-master/conf/my.cnf:/etc/mysql/my.cnf -v ~/mysql-master/data:/var/lib/mysql --privileged=true --restart=always -e MYSQL_ROOT_PASSWORD=123456 -e TZ=Asia/Shanghai mysql:8.0.26

Create the mysql-slave container:

    docker run -it -p 3302:3306 --name mysql-slave --net mynet --ip 192.168.0.31 -v ~/mysql-slave/conf/my.cnf:/etc/mysql/my.cnf -v ~/mysql-slave/data:/var/lib/mysql --privileged=true --restart=always -e MYSQL_ROOT_PASSWORD=123456 -e TZ=Asia/Shanghai mysql:8.0.26

2. Set up the nacos cluster

Reference: https://www.cnblogs.com/zwh0910/p/16483429.html

Create the first nacos container (nacos2, 192.168.0.20):

    docker run -it -e PREFER_HOST_MODE=ip -e MODE=cluster -e NACOS_SERVERS="192.168.0.21:8848 192.168.0.22:8848" -e SPRING_DATASOURCE_PLATFORM=mysql -e MYSQL_SERVICE_HOST=192.168.0.30 -e MYSQL_SERVICE_PORT=3306 -e MYSQL_SERVICE_USER=root -e MYSQL_SERVICE_PASSWORD=123456 -e MYSQL_SERVICE_DB_NAME=nacos_config -e MYSQL_SERVICE_DB_PARAM='characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useSSL=false&useUnicode=true&serverTimezone=UTC' -p 9902:8848 --name nacos2 --net mynet --ip 192.168.0.20 --restart=always nacos/nacos-server:2.0.3

Create the second nacos container (nacos4, 192.168.0.21):

    docker run -it -e PREFER_HOST_MODE=ip -e MODE=cluster -e NACOS_SERVERS="192.168.0.20:8848 192.168.0.22:8848" -e SPRING_DATASOURCE_PLATFORM=mysql -e MYSQL_SERVICE_HOST=192.168.0.30 -e MYSQL_SERVICE_PORT=3306 -e MYSQL_SERVICE_USER=root -e MYSQL_SERVICE_PASSWORD=123456 -e MYSQL_SERVICE_DB_NAME=nacos_config -e MYSQL_SERVICE_DB_PARAM='characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useSSL=false&useUnicode=true&serverTimezone=UTC' -p 9904:8848 --name nacos4 --net mynet --ip 192.168.0.21 --restart=always nacos/nacos-server:2.0.3

Create the third nacos container (nacos6, 192.168.0.22):

    docker run -it -e PREFER_HOST_MODE=ip -e MODE=cluster -e NACOS_SERVERS="192.168.0.20:8848 192.168.0.21:8848" -e SPRING_DATASOURCE_PLATFORM=mysql -e MYSQL_SERVICE_HOST=192.168.0.30 -e MYSQL_SERVICE_PORT=3306 -e MYSQL_SERVICE_USER=root -e MYSQL_SERVICE_PASSWORD=123456 -e MYSQL_SERVICE_DB_NAME=nacos_config -e MYSQL_SERVICE_DB_PARAM='characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useSSL=false&useUnicode=true&serverTimezone=UTC' -p 9906:8848 --name nacos6 --net mynet --ip 192.168.0.22 --restart=always nacos/nacos-server:2.0.3
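The three commands differ mainly in `--ip`, the published port, the container name, and `NACOS_SERVERS`, which in this setup lists the other two nodes. Getting that peer list wrong by hand is easy, so here is a small sketch that derives it. The helper name `peers_for` and the `NODES`/`PORT` variables are assumptions made for this illustration; only the IPs and the port come from the commands above.

```sh
#!/bin/sh
# Node IPs and port taken from the three docker run commands above.
NODES="192.168.0.20 192.168.0.21 192.168.0.22"
PORT=8848

# peers_for IP
# Print the NACOS_SERVERS value for the node with that IP: every other
# node as "ip:port", separated by single spaces (the pattern used above).
peers_for() {
    self=$1
    peers=""
    for node in $NODES; do
        if [ "$node" != "$self" ]; then
            peers="${peers:+$peers }$node:$PORT"
        fi
    done
    printf '%s\n' "$peers"
}

for ip in $NODES; do
    echo "$ip -> NACOS_SERVERS=\"$(peers_for "$ip")\""
done
```

Each printed value can be compared with the `-e NACOS_SERVERS=...` argument of the matching container before it is started.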

3. Modify nginx's default.conf in the keepalived master and slave containers

Locate nginx:

    [root@xxx /]# whereis nginx
    nginx: /usr/sbin/nginx /usr/lib64/nginx /etc/nginx /usr/share/nginx

Edit default.conf:

    vi /etc/nginx/conf.d/default.conf

Change it as follows (the additions are the upstream block and the /nacos location):

    upstream nacosList {
        server 192.168.0.21:8848 weight=1;
        server 192.168.0.22:8848 weight=2;
        server 192.168.0.20:8848 weight=3;
    }

    server {
        listen       80;
        server_name  localhost;

        #access_log  /var/log/nginx/host.access.log  main;

        location / {
            root   /usr/share/nginx/html;
            index  index.html index.htm;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   /usr/share/nginx/html;
        }

        location /nacos {
            proxy_pass http://nacosList;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass   http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root           html;
        #    fastcgi_pass   127.0.0.1:9000;
        #    fastcgi_index  index.php;
        #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
        #    include        fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny  all;
        #}
    }

Make the same change to nginx's default.conf in the slave container.
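With no other balancing method configured, an nginx upstream uses weighted round-robin, so while all three servers are healthy the requests are split in proportion to the weights. The sketch below just does that arithmetic for the weights 1, 2 and 3 used in the upstream block; the helper name `share` is made up for illustration and the percentages are rounded down.

```sh
#!/bin/sh
# share WEIGHT TOTAL
# Integer percentage (rounded down) of requests expected for one server
# under weighted round-robin, assuming every server is healthy.
share() {
    echo $(( $1 * 100 / $2 ))
}

# host:weight pairs copied from the upstream block above.
total=$((1 + 2 + 3))
for entry in 192.168.0.21:1 192.168.0.22:2 192.168.0.20:3; do
    host=${entry%%:*}     # text before the first ":"
    weight=${entry##*:}   # text after the last ":"
    echo "$host weight=$weight about $(share "$weight" "$total")%"
done
```

So roughly half of the requests to /nacos should land on 192.168.0.20, a third on 192.168.0.22, and a sixth on 192.168.0.21.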

Start nginx:

    cd /usr/sbin/
    ./nginx

Access from inside the container. First publish a configuration through the VIP, then read it back:

    [root@ad57dfb60f80 sbin]# curl -X POST "http://192.168.0.66/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test&content=HelloWorld"
    true

    [root@ad57dfb60f80 sbin]# curl -X GET "http://192.168.0.66/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test"
    HelloWorld

If the GET request returns HelloWorld, the reverse proxy is working.
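The publish-then-read check can be wrapped in a small script so it is easy to repeat after each change. This is a sketch under stated assumptions: the function names `config_url` and `roundtrip` are invented here, and the values are placed into the URL without URL-encoding, so it only suits simple values such as the ones used above.

```sh
#!/bin/sh
# config_url BASE DATA_ID GROUP [CONTENT]
# Build the Nacos config URL used above; CONTENT is appended when given.
# Values are NOT URL-encoded (assumption: plain alphanumeric values).
config_url() {
    url="$1/nacos/v1/cs/configs?dataId=$2&group=$3"
    if [ -n "${4:-}" ]; then
        url="$url&content=$4"
    fi
    printf '%s\n' "$url"
}

# roundtrip BASE DATA_ID GROUP CONTENT
# Publish CONTENT, read it back, and succeed only if the two match.
roundtrip() {
    curl -s -X POST "$(config_url "$1" "$2" "$3" "$4")" >/dev/null || return 1
    got=$(curl -s -X GET "$(config_url "$1" "$2" "$3")") || return 1
    [ "$got" = "$4" ]
}

# Print the publish URL for the values used in this article.
config_url http://192.168.0.66 nacos.cfg.dataId test HelloWorld
```

Inside either keepalived container, `roundtrip http://192.168.0.66 nacos.cfg.dataId test HelloWorld && echo OK` would then exercise the whole path: VIP, nginx, and one of the nacos nodes.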

六、实现内网穿透

这样一个内网环境的Nacos集群就搭建完成了,但是无法在外网进行访问,为此需要实现内网穿透。

这里我使用的是nps可视化穿透服务,同样使用docker的方式来进行安装。

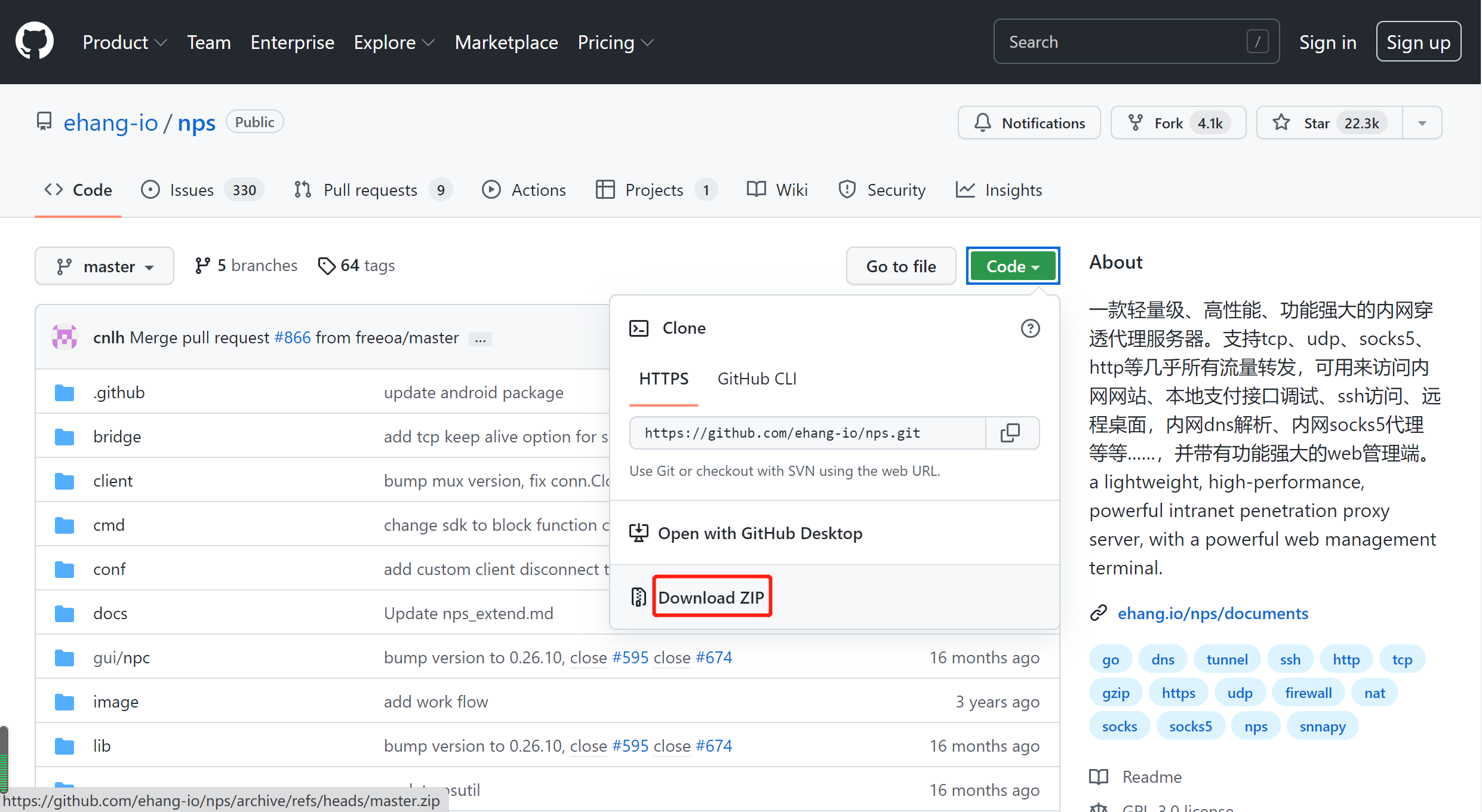

1、下载nps

网址:https://github.com/ehang-io/nps

解压后,目录结构如下所示:

在/root目录下创建nps/server目录

mkdir -p /root/nps/server

将刚才下载的conf目录通过Filezilla上传到/root/nps/server

nps.conf文件中一般需要注意的配置内容如下

bridge_port=8024 #NPS的服务端和客户端进行连接的默认端口 web_username=admin #web界面管理账号 web_password=123 #web界面管理密码 web_port = 8080 #web管理端口,通过访问该端口可以访问NPS后台

注意:NPS的web页面默认端口是8080,默认用户名密码是admin/123。最好进行修改。NPS的服务端和客户端进行连接的默认端口是8024,这个端口可以进行修改,修改之后,在连接时注意使用修改后的端口。
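Since the defaults are easy to forget, a quick check of the uploaded file can help. The following is only a sketch: `conf_get` and `uses_default_login` are hypothetical helper names, the parser handles nothing beyond simple `key=value` lines (optionally with spaces around `=` and a trailing `#` comment), and a password containing `#` would be cut short.

```sh
#!/bin/sh
# conf_get FILE KEY
# Print the value of KEY from a key=value file, tolerating spaces around
# "=" and dropping anything from the first "#" onwards.
conf_get() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*\([^#]*\).*/\1/p" "$1" |
        sed 's/[[:space:]]*$//' | head -n 1
}

# uses_default_login FILE
# Succeeds when the file still carries the default admin/123 login.
uses_default_login() {
    [ "$(conf_get "$1" web_username)" = "admin" ] &&
        [ "$(conf_get "$1" web_password)" = "123" ]
}

# Demo on a throwaway copy of the settings listed above.
demo=$(mktemp)
printf '%s\n' 'bridge_port=8024' 'web_username=admin' \
    'web_password=123' 'web_port = 8080' > "$demo"
if uses_default_login "$demo"; then
    echo "default web login still in use on port $(conf_get "$demo" web_port)"
fi
rm -f "$demo"
```

Against the real file this would be `uses_default_login /root/nps/server/conf/nps.conf`, assuming the conf directory was uploaded to the location created above.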

2. Create the nps container

Pull the nps image:

    docker pull ffdfgdfg/nps

Create the container:

    docker run -it \
      --name nps \
      --net mynet --ip 192.168.0.50 \
      -p 8088:8080 \
      -p 15876:15876 \
      -p 15877:15877 \
      -p 15878:15878 \
      -v ~/nps/server/conf:/conf \
      ffdfgdfg/nps

Here the web management port 8080 is published on host port 8088.

The same command without line continuations, on a single line:

    docker run -it --name nps --net mynet --ip 192.168.0.50 -p 8088:8080 -p 15876:15876 -p 15877:15877 -p 15878:15878 -v ~/nps/server/conf:/conf ffdfgdfg/nps

List the container:

    CONTAINER ID   IMAGE          COMMAND   CREATED       STATUS         PORTS                                                                                                              NAMES
    4a68e02470e4   ffdfgdfg/nps   "/nps"    4 hours ago   Up 4 minutes   0.0.0.0:15876-15878->15876-15878/tcp, :::15876-15878->15876-15878/tcp, 0.0.0.0:8088->8080/tcp, :::8088->8080/tcp   nps

The log output is:

    2022/07/29 03:53:58.361 [I] [nps.go:202] the version of server is 0.26.10 ,allow client core version to be 0.26.0
    2022/07/29 03:53:59.005 [I] [connection.go:36] server start, the bridge type is tcp, the bridge port is 8024
    2022/07/29 03:53:59.170 [I] [server.go:200] tunnel task start mode:httpHostServer port 0
    2022/07/29 03:53:59.170 [I] [connection.go:71] web management start, access port is 8080
    2022/07/29 03:53:59.172 [I] [connection.go:62] start https listener, port is 443
    2022/07/29 03:53:59.172 [I] [connection.go:53] start http listener, port is 80

The log shows that, besides the bridge port 8024, the server currently listens on three ports: 80, 443 and 8080, of which 8080 is the management port.

Connect the nps container to the bridge network:

    docker network connect bridge nps

The console is now reachable on port 8088 of the host at http://ip:8088/.

Log in with admin/123 to reach the following page:

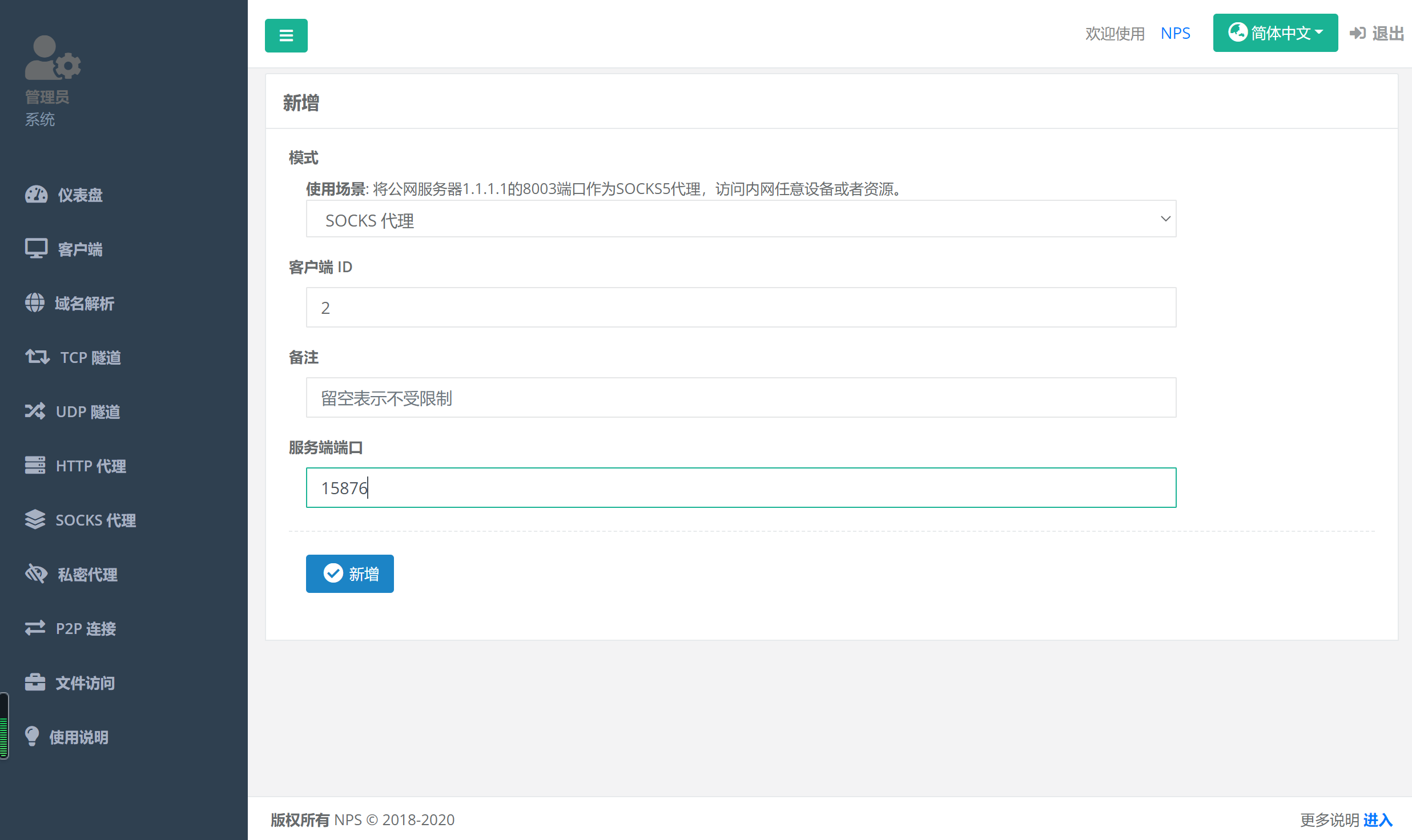

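On the Clients tab, add a new record and note its vkey.

Click Clients to open the following page:

The Basic authentication fields correspond to the username/password authentication that SOCKS5 adds over SOCKS4; they can be left empty.

We leave everything empty and click Add.

The resulting vkey is: gxoen4mzoy0wdhnt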

3. Install the client in the keepalived master container

The server side is called nps and the client side is called npc.

The NPS client is normally placed on an intranet host that we already control. We tell the client the IP and port of the NPS server it should connect to, and that links the NPS server and the NPS client.

(1) Enter the container

    docker exec -it keepalived_master bash

(2) Download the client

    cd /opt
    wget -e use_proxy=yes -e http_proxy=127.0.0.1:1080 -e https_proxy=127.0.0.1:1080 https://github.com/ehang-io/nps/releases/download/v0.26.10/linux_amd64_client.tar.gz

If the download fails inside the container, download the archive on the host and copy it into the container:

    docker cp linux_amd64_client.tar.gz keepalived_master:/opt

Create the linux_amd64_client folder under /opt:

    mkdir -p linux_amd64_client

Extract the archive into the linux_amd64_client folder:

    tar -zxvf linux_amd64_client.tar.gz -C linux_amd64_client

(3) Run the client in the background

    cd /opt/linux_amd64_client
    nohup ./npc -server=192.168.0.50:8024 -vkey=${vkey} > npc.out 2>&1 &

With my vkey substituted, the command becomes:

    nohup ./npc -server=192.168.0.50:8024 -vkey=gxoen4mzoy0wdhnt > npc.out 2>&1 &

The result is:

    [root@xxx linux_amd64_client]# nohup ./npc -server=192.168.0.50:8024 -vkey=gxoen4mzoy0wdhnt > npc.out 2>&1 &
    [1] 21037
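The shell only prints the job number and PID, which does not say whether npc stayed up. A minimal sketch for checking that a background process is still alive follows; `is_running` is a made-up helper name, and the demo uses `sleep` as a stand-in for `./npc` so that it can run anywhere.

```sh
#!/bin/sh
# is_running PID
# Succeeds while the process exists. kill -0 delivers no signal; it only
# checks that the PID is present and that we may signal it.
is_running() {
    kill -0 "$1" 2>/dev/null
}

# Demo with a stand-in background job (sleep) instead of ./npc.
sleep 30 &
demo_pid=$!
if is_running "$demo_pid"; then
    echo "background job $demo_pid is running"
fi

# Clean up the stand-in job.
kill "$demo_pid"
wait "$demo_pid" 2>/dev/null || true
```

For the real client, the PID is available as `$!` right after the `nohup ... &` line (21037 in the output above), and `tail npc.out` shows what the client logged if `is_running` fails.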

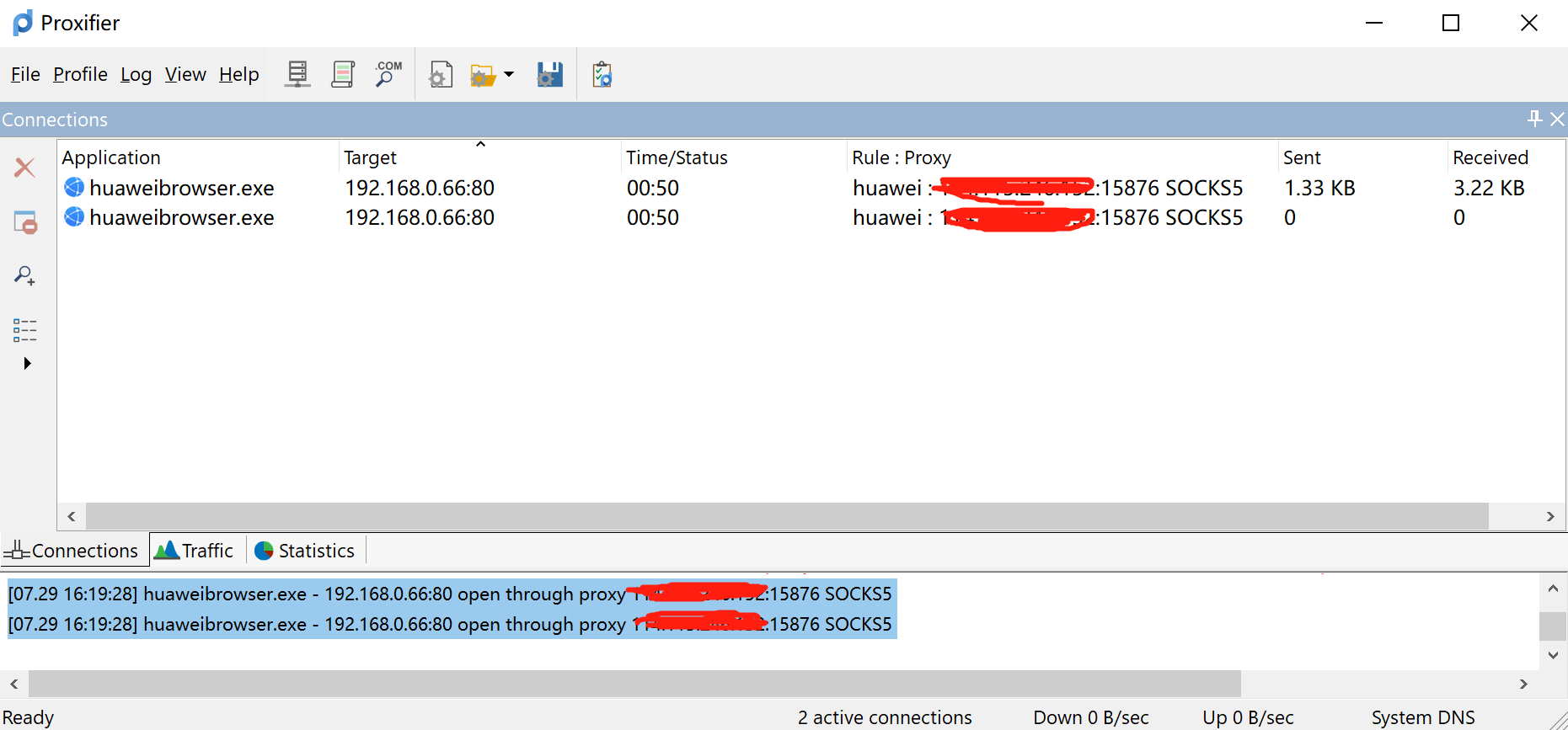

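The Clients page now shows the connection as online, which means the tunnel between the server and the client has been established.

On the SOCKS proxy page, add a SOCKS proxy for this client ID with server port 15876.

Click Add to open the following page: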

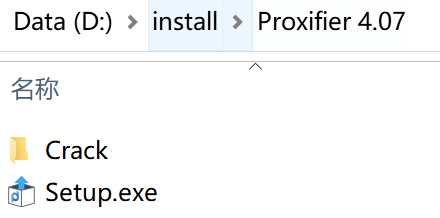

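4. Download and install Proxifier

Reference: https://www.nite07.com/proxifier/

Proxifier is a powerful SOCKS5 client that lets network programs without built-in proxy support work through an HTTPS or SOCKS proxy, or through a proxy chain.

Download URL: https://file.nite07.com/cn/Proxifier%204.07.zip

After downloading, it looks like this: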

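Extract the archive with the password nite07.com; the extracted files look like this:

Run Setup.exe to install; after installation it looks like this:

Run Crack\keygen.exe and copy the License Key into Proxifier to activate it; after activation the following page appears: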

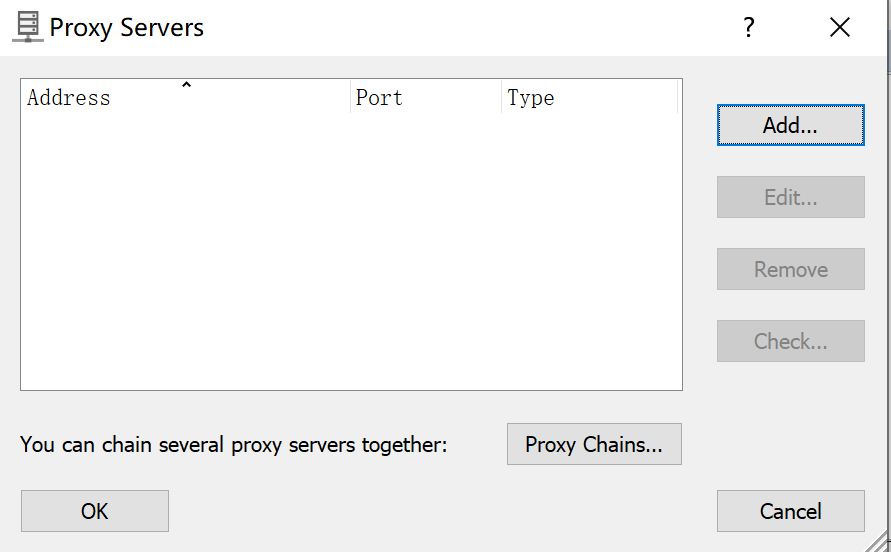

5. Add the proxy server

(1) Click the menu Profile -> Proxy Servers…

(2) Click Add

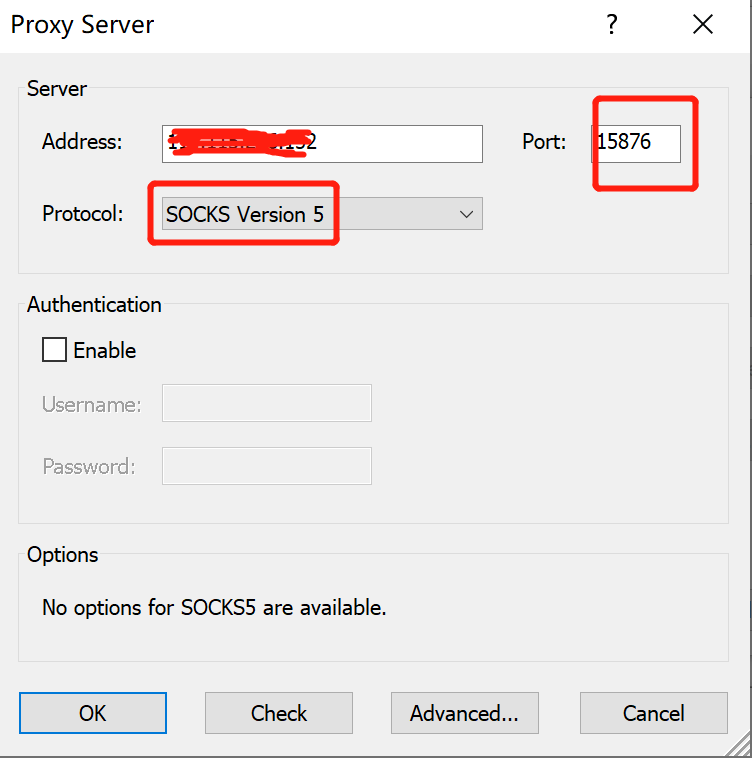

(3) Enter the IP and port of the SOCKS or HTTPS proxy server

Set Address to the host machine's IP, Port to 15876, and Protocol to SOCKS Version 5.

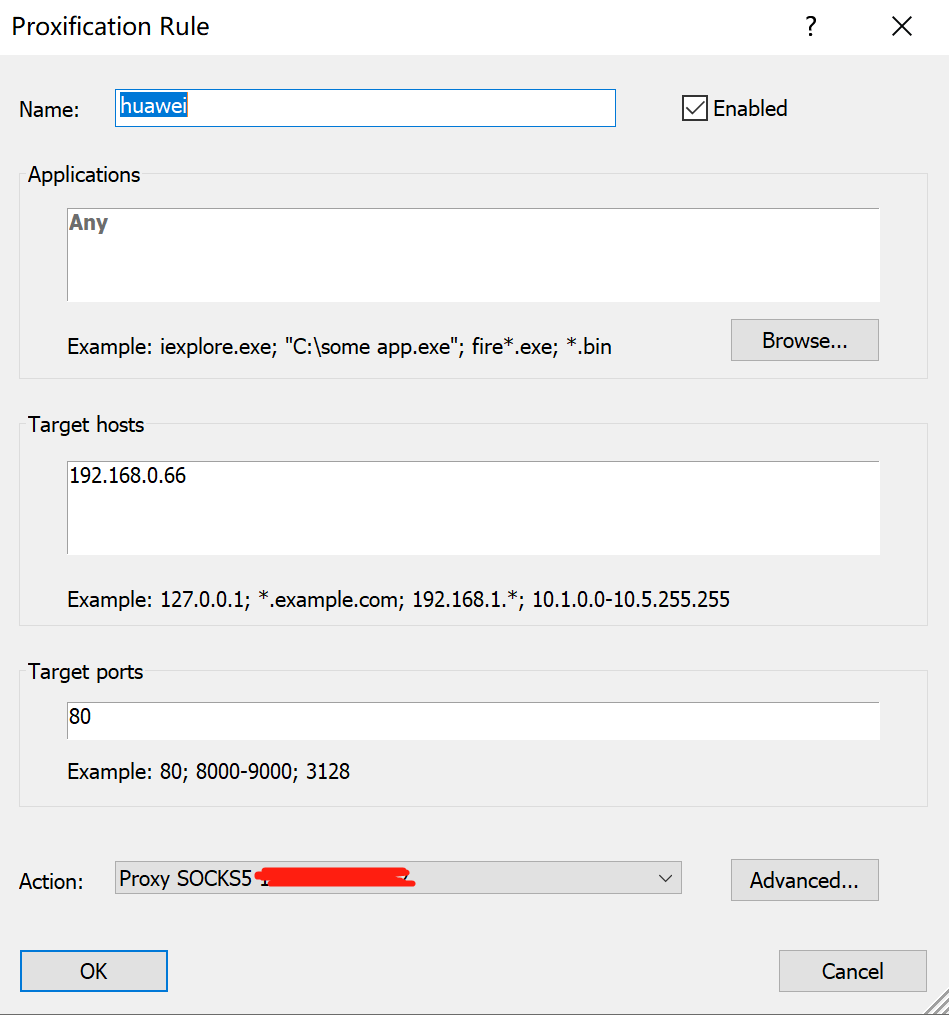

Set up the proxification rules

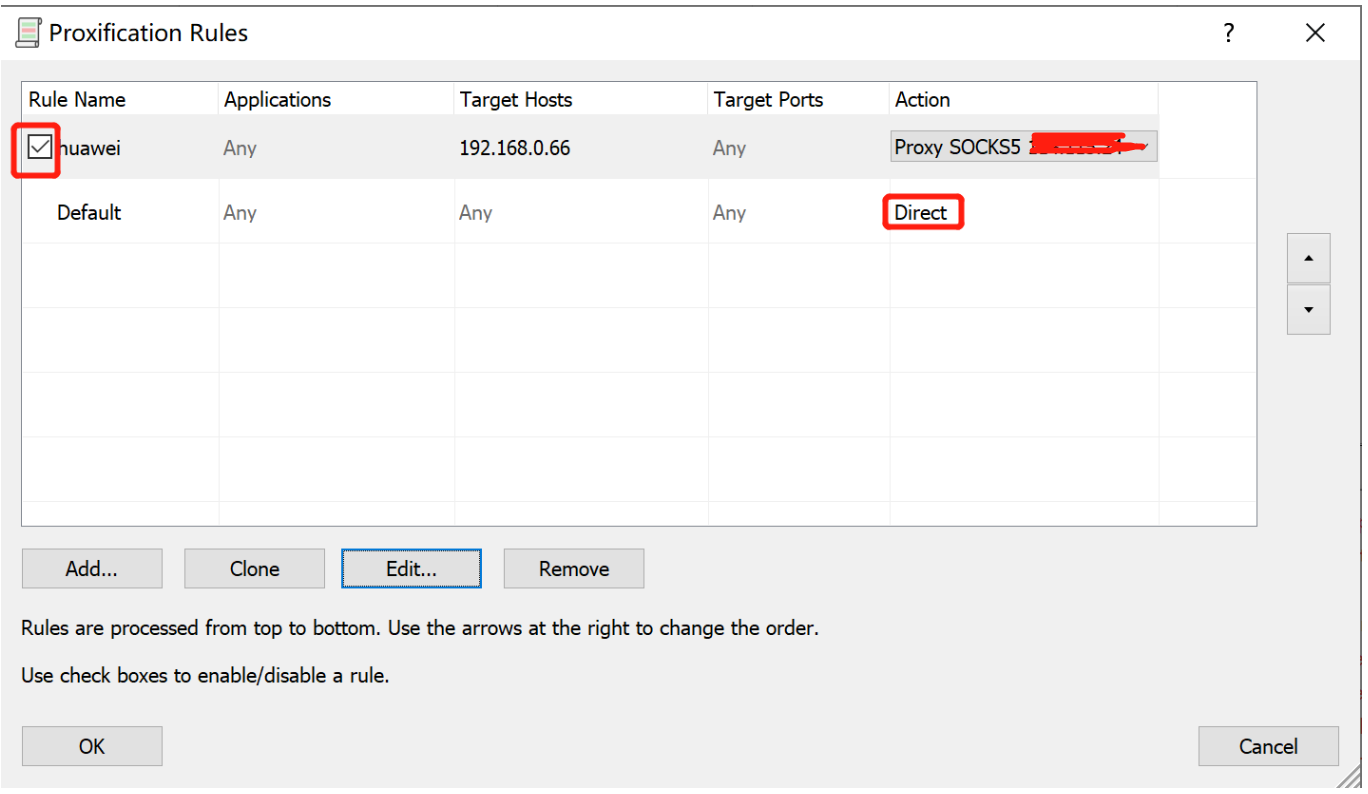

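Click the Proxification Rules icon, then Add. The rule here sends traffic for the target address 192.168.0.66 through the SOCKS5 proxy.

Click OK.

Note: (1) tick the rule we just added; (2) set the action of the Default rule to Direct.

With this in place we can reach machines on the target intranet directly, for example to run Burp for penetration testing as if we were on the local network.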

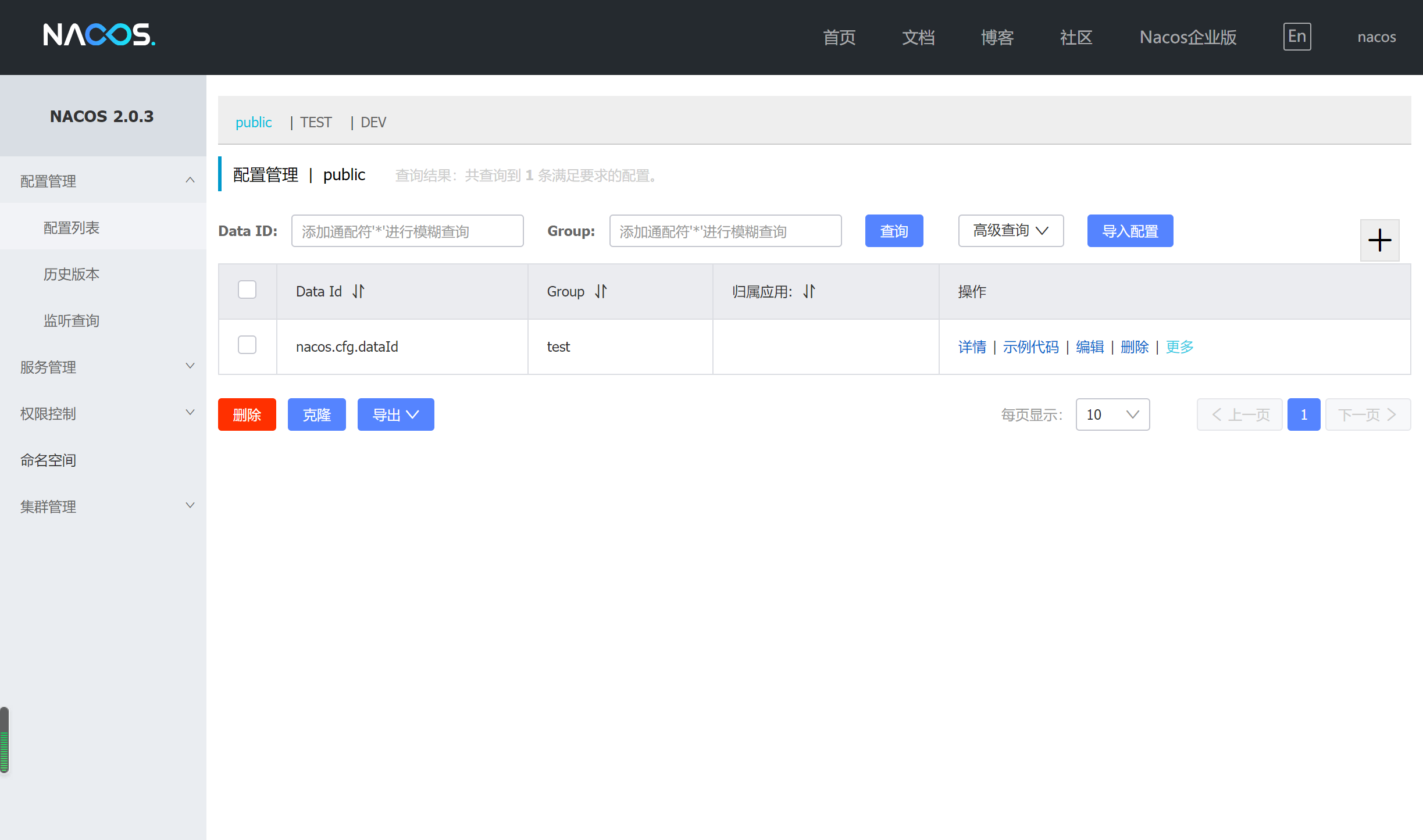

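First, open the nacos instance published on port 9902, http://ip:9902/nacos, which shows the following page:

This confirms that the nacos service itself is healthy.

Enter the master container keepalived_master and run:

    curl -X GET "http://192.168.0.66/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test"

Result:

    [root@b3a23e52b268 /]# curl -X GET "http://192.168.0.66/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test"
    HelloWorld

This confirms that nginx is acting as the reverse proxy.

Note: open port 15876 in the server's security group.

In a browser on the local machine (where Proxifier is running), open http://192.168.0.66/nacos

The Nacos cluster on the internal network segment is now reachable from the external network!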

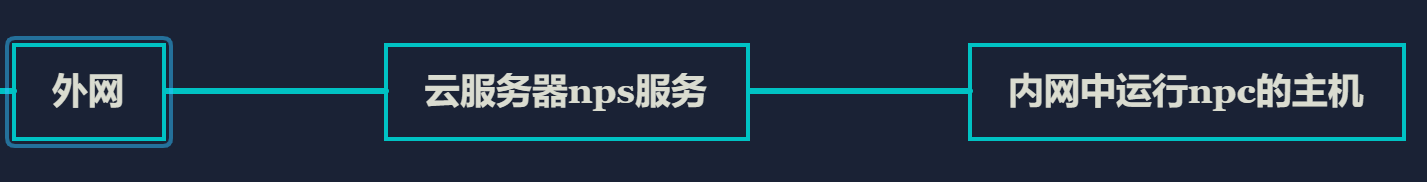
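The same path can also be checked from a command line, without Proxifier, because curl can talk to a SOCKS5 proxy itself through its `--socks5` option. The wrapper below is a sketch: `via_socks5` is a made-up name, and `HOST_IP` in the hint is a placeholder for the public IP of the machine that publishes port 15876, which this article does not state.

```sh
#!/bin/sh
# via_socks5 PROXY_HOST:PORT [curl arguments...]
# Run curl with the given SOCKS5 proxy; all remaining arguments are
# passed to curl unchanged.
via_socks5() {
    proxy=$1
    shift
    curl --socks5 "$proxy" "$@"
}

# Nothing is fetched here; this only prints how to call the wrapper.
# HOST_IP is a placeholder, not a value from the article.
echo 'usage: via_socks5 HOST_IP:15876 -s http://192.168.0.66/nacos'
```

If the tunnel and the SOCKS proxy entry are working, that call should return the same response the browser receives through Proxifier.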

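6. How NPS works

A TCP or UDP tunnel is created between the cloud server running the NPS server and the host running the NPS client. A port on the cloud server can be mapped to a given port on the client host, and traffic from other machines to that cloud-server port is forwarded through the tunnel to the mapped port, which is what achieves intranet penetration.

The proxy path in this setup is: a request for 192.168.0.66:80 is sent to the SOCKS proxy at "cloud server public IP:15876", which is how it reaches the nps server; the nps server then passes it through the tunnel to the NPS client on the intranet host, which connects to the target.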