Quantization details: how is the network's nonlinearity preserved under asymmetric activation quantization?

First, a quick look at quantization:

We call the quantization of a1 activation quantization. If asymmetric quantization is used, see the description below:

Asymmetric Quantization

During the training we optimize the input_low and input_range parameters using gradient descent:

For better accuracy, floating-point zero should be within quantization range and strictly mapped into quant (without rounding). Therefore, the following scheme is applied to ranges of weights and activations before quantization:
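A minimal Python sketch of this kind of scheme, assuming an 8-bit unsigned target (256 levels, codes 0 to 255) and the common affine formulation scale = (high - low) / (levels - 1) with an integer zero point. Only `input_low` and `input_range` come from the quoted text; `LEVELS`, `adjust_range`, `quantize` and `dequantize` are hypothetical names, and the exact formulas in the quoted documentation may differ. The point it shows: widening the range to contain 0 and rounding the zero point to an integer makes floating-point 0 land exactly on a code.

```python
LEVELS = 256  # assumption: 8-bit unsigned codes 0..255


def adjust_range(input_low, input_range, levels=LEVELS):
    """Widen [input_low, input_low + input_range] so that 0.0 lies inside it,
    then return (scale, zero_point) such that 0.0 maps exactly onto an integer code."""
    input_high = input_low + input_range
    low = min(input_low, 0.0)
    high = max(input_high, 0.0)
    scale = (high - low) / (levels - 1)
    zero_point = round(-low / scale)  # the integer code that represents 0.0
    return scale, zero_point


def quantize(x, scale, zero_point, levels=LEVELS):
    # Affine map to an integer code (Python's round() sends exact halves to even),
    # then saturate (clamp) to the valid code range [0, levels - 1].
    q = round(x / scale) + zero_point
    return max(0, min(levels - 1, q))


def dequantize(q, scale, zero_point):
    # Map an integer code back to a float; code == zero_point gives exactly 0.0.
    return (q - zero_point) * scale


scale, zp = adjust_range(-1.0, 3.0)
print(zp)                                       # 85
print(dequantize(quantize(0.0, scale, zp), scale, zp))  # 0.0
```

For example, with `input_low = -1.0` and `input_range = 3.0` the zero point is 85, so codes 0 to 84 stand for negative real values even though every stored code is non-negative.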

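In other words, a1 is quantized into the range 0 to 255, so every value is non-negative, right? Then ReLU does nothing at all! So where does the network's nonlinearity come from?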

Next, let's look for the answer in Google's paper [Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference].

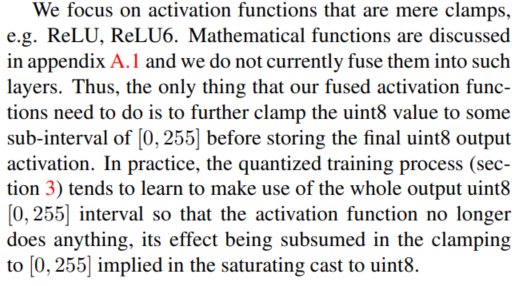

The paper contains the following sentence:

In other words, the clamping absorbs the function of ReLU and supplies the nonlinearity.
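A small sketch of this point, assuming the activation range has been learned as [0, 6] (as with ReLU6) and a standard uint8 affine scheme; `quantize` is a hypothetical helper defined here so the block stands alone. With a lower bound of 0 the zero point is 0, so the saturating clamp to [0, 255] already sends every negative pre-activation to code 0 and every value above 6 to code 255, which is exactly what applying ReLU6 first would produce.

```python
def quantize(x, scale, zero_point, levels=256):
    # Affine quantization followed by the saturating clamp to [0, levels - 1]
    q = round(x / scale) + zero_point
    return max(0, min(levels - 1, q))


def relu6(x):
    return min(max(x, 0.0), 6.0)


# Assumed learned activation range [0, 6]: zero point 0, scale = 6 / 255
scale, zero_point = 6.0 / 255, 0

xs = [-3.0, -0.01, 0.0, 0.7, 2.5, 5.99, 8.0]
with_relu = [quantize(relu6(x), scale, zero_point) for x in xs]
clamp_only = [quantize(x, scale, zero_point) for x in xs]
print(with_relu == clamp_only)  # True: the clamp alone reproduces ReLU6
```

Applying ReLU6 before quantizing changes nothing here, which is why the explicit activation can be dropped once the range is [0, high].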

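But in quantization, every value also goes through a round operation when it is quantized. Isn't that a nonlinearity too?!! Let's go with that understanding for now 🤣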
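A quick check that rounding is indeed nonlinear: a linear map f must satisfy f(a + b) = f(a) + f(b), and round-to-nearest breaks this. A minimal sketch, assuming a plain quantize-then-dequantize step with a hypothetical `fake_quant` helper, a scale of 1.0 and no clamping:

```python
def fake_quant(x, scale=1.0):
    # Snap x to the nearest multiple of scale (quantize, then dequantize)
    return round(x / scale) * scale


a, b = 0.4, 0.4
print(fake_quant(a) + fake_quant(b))  # 0.0
print(fake_quant(a + b))              # 1.0
```

Without clamping, each output differs from its input by at most half a step (scale / 2), so this staircase stays close to the identity map.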