Deploying a Specific Ceph Version with cephadm, and Handling Production Issues

Official documentation: https://docs.ceph.com/en/pacific/

1. VM plan: CentOS 8

| Hostname | IP | Roles |
| --- | --- | --- |
| ceph1 | 172.30.3.61 | cephadm, mon, mgr, osd, rgw |
| ceph2 | 172.30.3.62 | mon, mgr, osd, rgw |
| ceph3 | 172.30.3.63 | mon, mgr, osd, rgw |

2. Ceph versions (to install a specific version, pin it in the repository URL; see 4.7):

- ceph version 15.2.12 (production)
- ceph version 16.2.4 (testing)

3. VM operating system: CentOS 8

4. Initial setup (run on all three machines):

4.1 Disable the firewall:

```bash
systemctl stop firewalld && systemctl disable firewalld
```

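4.2 Disable SELinux:

```bash
setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
```

`setenforce 0` switches the running system to permissive mode immediately. The `SELINUX=disabled` setting takes full effect after the next reboot. Afterwards, /etc/selinux/config on each node (ceph3 shown) contains:

```
SELINUX=disabled
SELINUXTYPE=targeted
```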

4.3 Configure time synchronization:

```
$ cat /etc/chrony.conf
server ntp.aliyun.com iburst
driftfile /var/lib/chrony/drift
makestep 1.0 3
rtcsync
keyfile /etc/chrony.keys
leapsectz right/UTC
logdir /var/log/chrony
```

Restart and enable the service:

```bash
$ systemctl restart chronyd
$ systemctl enable chronyd
```
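Before bootstrapping, it helps to confirm that chrony has actually synchronised, not merely that the service is running. Ceph monitors raise a MON_CLOCK_SKEW warning when nodes drift apart.

The sketch below parses `chronyc tracking` output. It assumes that a `Leap status : Normal` line indicates a synchronised clock. The helper name is hypothetical.

```bash
#!/bin/sh
# Hypothetical helper: succeed if `chronyc tracking` output (read from stdin)
# reports a normal leap status, i.e. the clock is synchronised.
# Usage on each node:  chronyc tracking | chrony_is_synchronised && echo synchronised
chrony_is_synchronised() {
  grep -Eq '^Leap status[[:space:]]*:[[:space:]]*Normal[[:space:]]*$'
}
```

If the check fails, `chronyc sources -v` shows whether ntp.aliyun.com is reachable.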

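4.4 Configure the EPEL repository:

CentOS Linux 8 has reached end of life and its packages have moved to vault.centos.org, so first repoint the YUM repositories:

```bash
$ cd /etc/yum.repos.d/
$ sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*
$ sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*
```

Rebuild the cache:

```bash
$ yum makecache
```

Install EPEL with the package manager (a repo file typed in by hand with vi did not get its GPG key recognized):

```bash
$ dnf install epel-release -y
```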

4.5 Set the hostname (ceph1, ceph2 or ceph3 on the respective node):

```bash
$ hostnamectl set-hostname ceph1
```

4.6 Edit the hosts file (/etc/hosts) on every node:

```
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.30.3.61 ceph1
172.30.3.62 ceph2
172.30.3.63 ceph3
```
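Editing /etc/hosts by hand on three nodes is error-prone. Below is a minimal idempotent sketch in POSIX shell.

The function name is hypothetical. Its file argument defaults to /etc/hosts, so you can try it on a scratch file first.

```bash
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless a line starting
# with that IP followed by that name already exists. File defaults to /etc/hosts.
# Usage:
#   add_host_entry 172.30.3.61 ceph1
#   add_host_entry 172.30.3.62 ceph2
#   add_host_entry 172.30.3.63 ceph3
add_host_entry() {
  ip="$1"; name="$2"; file="${3:-/etc/hosts}"
  # Escape the dots so the IP is matched literally by grep -E.
  ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
  if grep -Eq "^${ip_re}[[:space:]]+${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```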

4.7 Configure the Ceph repository (a .repo file in /etc/yum.repos.d/):

```ini
[Ceph]
name=Ceph packages for $basearch
baseurl=https://download.ceph.com/rpm-16.2.4/el8/$basearch
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[Ceph-noarch]
name=Ceph noarch packages
baseurl=https://download.ceph.com/rpm-16.2.4/el8/noarch
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[ceph-source]
name=Ceph source packages
baseurl=https://download.ceph.com/rpm-16.2.4/el8/SRPMS
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1
```

To switch to another version, replace the version string in vim: press ESC, type `:%s/16.2.4/15.2.12/g`, then press Enter.
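Instead of editing the version in vim, the repo file can be generated for a given release. The sketch below reproduces the three sections above.

The helper names are hypothetical, and the file name `/etc/yum.repos.d/ceph.repo` is an assumption.

```bash
#!/bin/sh
# Print one repo section; $1 id, $2 description, $3 URL suffix, $4 base URL.
repo_section() {
  printf '[%s]\nname=%s\nbaseurl=%s/%s\nenabled=1\ngpgcheck=0\ntype=rpm-md\ngpgkey=https://download.ceph.com/keys/release.asc\npriority=1\n\n' \
    "$1" "$2" "$4" "$3"
}

# Hypothetical helper: write the Ceph repo file for one release.
# Usage: write_ceph_repo 15.2.12 && dnf makecache
write_ceph_repo() {
  version="$1"                                  # e.g. 15.2.12 or 16.2.4
  file="${2:-/etc/yum.repos.d/ceph.repo}"       # assumed file name
  base="https://download.ceph.com/rpm-${version}/el8"
  {
    # Single quotes keep $basearch literal so dnf expands it.
    repo_section Ceph 'Ceph packages for $basearch' '$basearch' "$base"
    repo_section Ceph-noarch 'Ceph noarch packages' noarch "$base"
    repo_section ceph-source 'Ceph source packages' SRPMS "$base"
  } > "$file"
}
```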

Rebuild the cache:

```bash
$ yum makecache
```

Install Ceph:

Verify that Ceph is installed:

```
[root@node1 ~]# ceph -v
ceph version 15.2.12 (ce065eabfa5ce81323b009786bdf5bb03127cbe1) octopus (stable)
```
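The install command itself is not shown above. Below is a minimal sketch, assuming the `ceph` package from the repository configured in 4.7. For a cephadm-managed cluster, `ceph-common` (which provides the `ceph` CLI) is usually enough on the hosts.

The function names and the `DRY_RUN` switch are hypothetical. The version check compares the third field of `ceph -v` output with the release you pinned.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

install_ceph() {
  run dnf makecache
  run dnf install -y ceph        # assumption: "ceph" package from the repo in 4.7
}

# Succeed if `ceph -v` output on stdin reports exactly the expected version,
# e.g. "ceph version 15.2.12 (...) octopus (stable)".
ceph_version_is() {
  awk -v want="$1" '$1 == "ceph" && $2 == "version" { found = ($3 == want) }
                    END { exit !found }'
}
```

Usage: `install_ceph && ceph -v | ceph_version_is 15.2.12 && echo OK`.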

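5. Install Docker (on all three machines; CentOS 8 ships podman by default, which cephadm also accepts, as the "podman|docker" check in the bootstrap log below shows):

Official documentation: https://docs.docker.com/engine/install/centos/

5.1 Install the required packages:

```bash
$ yum install -y yum-utils
```

5.2 Add the stable repository:

```bash
$ yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

5.3 Rebuild the cache:

```bash
$ yum makecache
```

5.4 Install Docker CE:

```bash
$ yum install docker-ce docker-ce-cli containerd.io docker-compose-plugin
```

5.5 Configure the Alibaba Cloud registry mirror:

```bash
$ mkdir -p /etc/docker
$ tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://fxt824bw.mirror.aliyuncs.com"]
}
EOF
```

5.6 Verify that Docker is installed:

```
$ docker -v
Docker version 20.10.18, build b40c2f6
```

5.7 Start Docker and enable it at boot:

```bash
$ systemctl start docker && systemctl enable docker
```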

6. Deploy the cluster with cephadm (on ceph1):

6.1 Install cephadm:
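The install command is not shown here. Below is a minimal sketch, assuming the noarch `cephadm` package is available from the Ceph repository configured in 4.7.

The function name and the `DRY_RUN` switch are hypothetical.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: install cephadm from the configured Ceph repo and
# show which package version was installed.
install_cephadm() {
  run dnf install -y cephadm     # assumption: package comes from the 4.7 repo
  run rpm -q cephadm
}
```

The upstream documentation also describes an alternative: fetch the standalone cephadm script, then run `cephadm add-repo --release <release>` and `cephadm install`.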

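6.2 Bootstrap a new cluster (in this plan, `<ceph1 IP>` is 172.30.3.61):

```bash
$ cephadm bootstrap --mon-ip <ceph1 IP>
```

This step is slow because it pulls the container images from the registry. Sample output (captured in an environment whose mon IP was 192.168.150.120):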

```
Verifying podman|docker is present...
Verifying lvm2 is present...
Verifying time synchronization is in place...
Unit chronyd.service is enabled and running
Repeating the final host check...
podman|docker (/usr/bin/docker) is present
systemctl is present
lvcreate is present
Unit chronyd.service is enabled and running
Host looks OK
Cluster fsid: 09feacf4-4f13-11ed-a401-000c29d6f8f4
Verifying IP 192.168.150.120 port 3300 ...
Verifying IP 192.168.150.120 port 6789 ...
Mon IP 192.168.150.120 is in CIDR network 192.168.150.0/24
- internal network (--cluster-network) has not been provided, OSD replication will default to the public_network
Pulling container image docker.io/ceph/ceph:v16...
Ceph version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
Extracting ceph user uid/gid from container image...
Creating initial keys...
Creating initial monmap...
Creating mon...
Waiting for mon to start...
Waiting for mon...
mon is available
Assimilating anything we can from ceph.conf...
Generating new minimal ceph.conf...
Restarting the monitor...
Setting mon public_network to 192.168.150.0/24
Wrote config to /etc/ceph/ceph.conf
Wrote keyring to /etc/ceph/ceph.client.admin.keyring
Creating mgr...
Verifying port 9283 ...
Waiting for mgr to start...
Waiting for mgr...
mgr not available, waiting (1/15)...
mgr not available, waiting (2/15)...
mgr not available, waiting (3/15)...
mgr not available, waiting (4/15)...
mgr is available
Enabling cephadm module...
Waiting for the mgr to restart...
Waiting for mgr epoch 5...
mgr epoch 5 is available
Setting orchestrator backend to cephadm...
Generating ssh key...
Wrote public SSH key to /etc/ceph/ceph.pub
Adding key to root@localhost authorized_keys...
Adding host ceph1...
Deploying mon service with default placement...
Deploying mgr service with default placement...
Deploying crash service with default placement...
Enabling mgr prometheus module...
Deploying prometheus service with default placement...
Deploying grafana service with default placement...
Deploying node-exporter service with default placement...
Deploying alertmanager service with default placement...
Enabling the dashboard module...
Waiting for the mgr to restart...
Waiting for mgr epoch 13...
mgr epoch 13 is available
Generating a dashboard self-signed certificate...
Creating initial admin user...
Fetching dashboard port number...
Ceph Dashboard is now available at:

         URL: https://ceph1:8443/
        User: admin
    Password: mo5ahyp1wx

You can access the Ceph CLI with:

    sudo /usr/sbin/cephadm shell --fsid 09feacf4-4f13-11ed-a401-000c29d6f8f4 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Please consider enabling telemetry to help improve Ceph:

    ceph telemetry on

For more information see:

    https://docs.ceph.com/docs/pacific/mgr/telemetry/

Bootstrap complete.
```

Images pulled by the bootstrap (`docker images`):

```
REPOSITORY          TAG       IMAGE ID       CREATED         SIZE
ceph/ceph-grafana   6.7.4     557c83e11646   22 months ago   486MB
ceph/ceph           v16       6933c2a0b7dd   23 months ago   1.2GB
prom/prometheus     v2.18.1   de242295e225   3 years ago     140MB
prom/alertmanager   v0.20.0   0881eb8f169f   3 years ago     52.1MB
prom/node-exporter  v0.18.1   e5a616e4b9cf   4 years ago     22.9MB
```

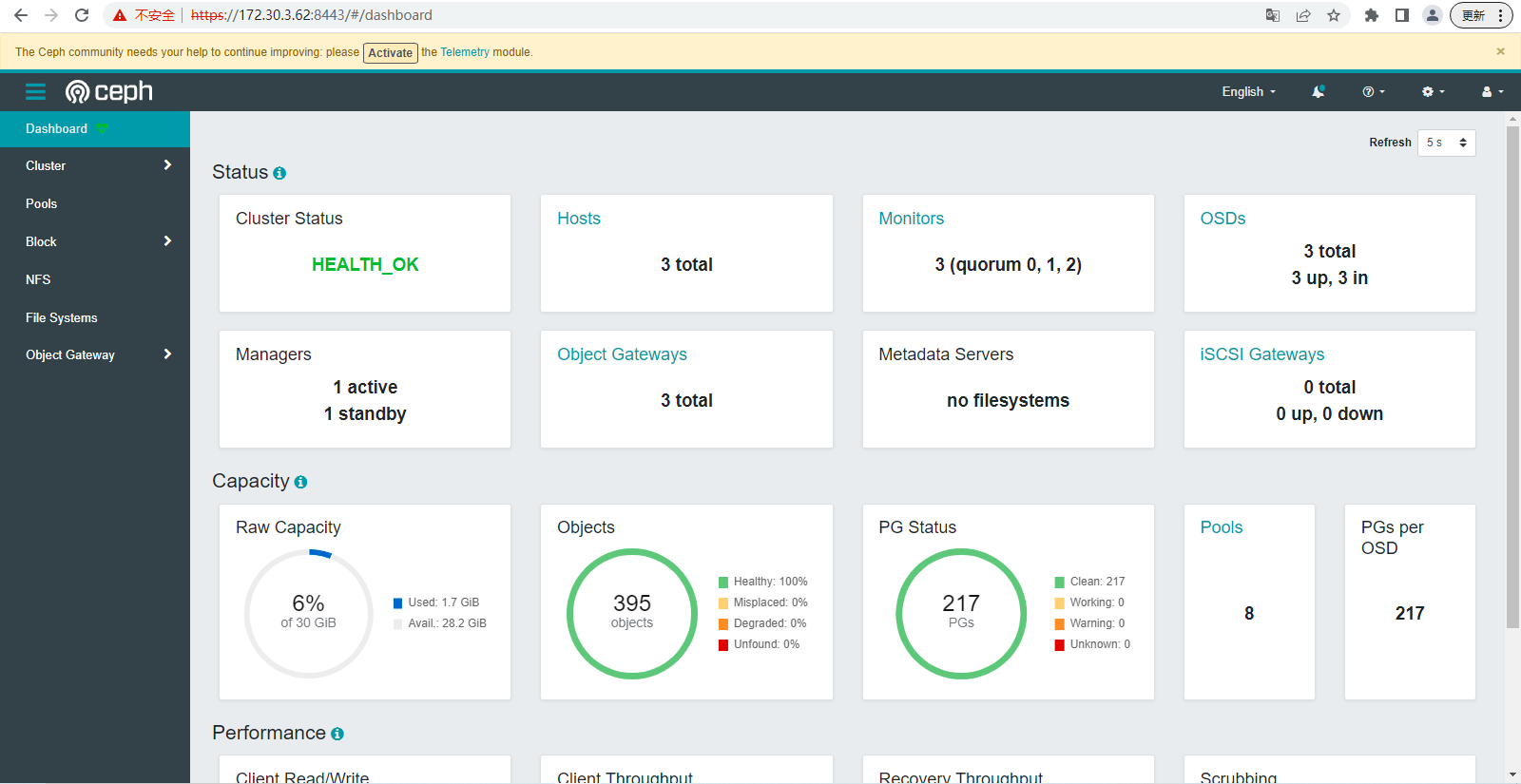

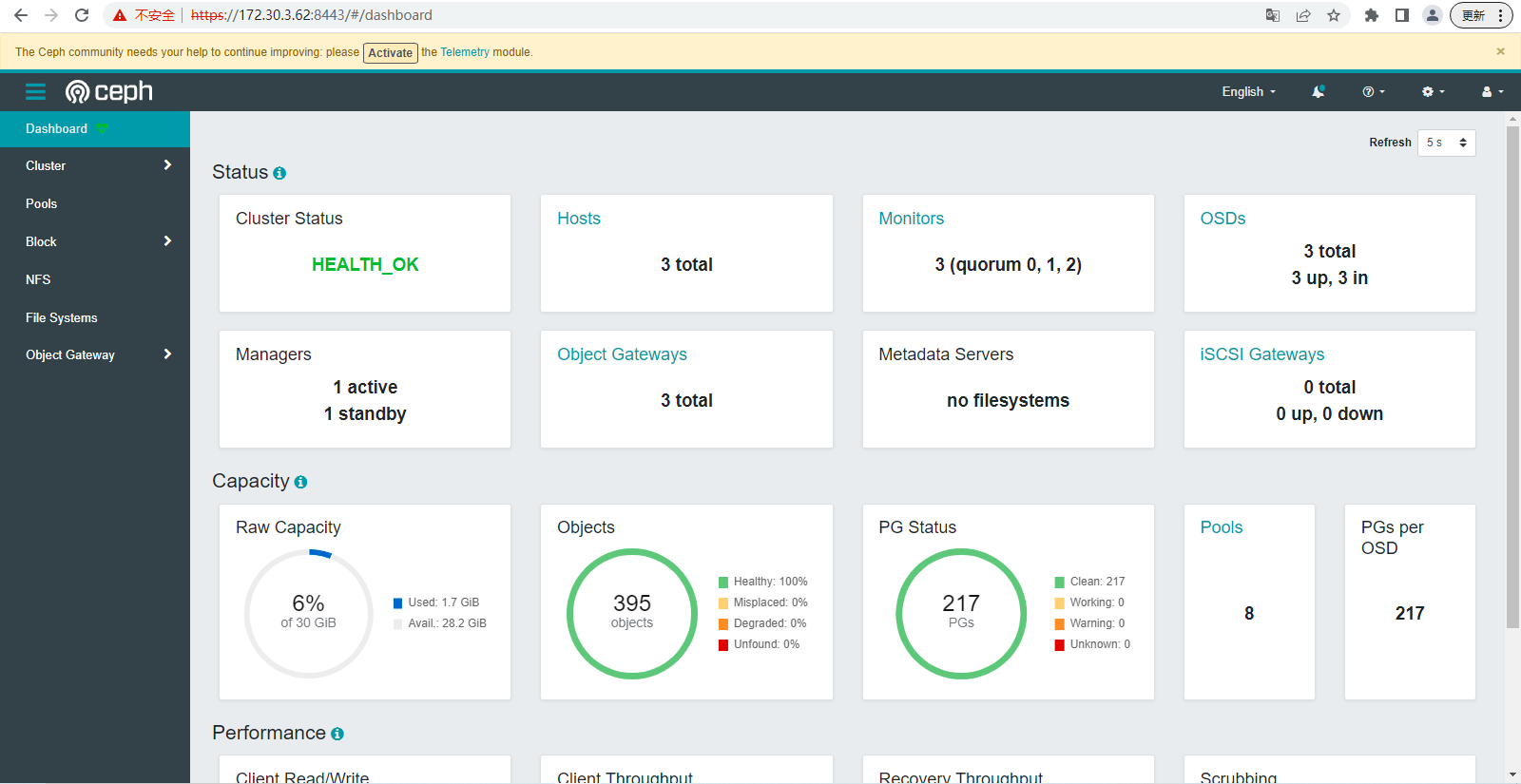

Open the URL in a browser and log in with the user name and password to reach the dashboard.
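The bootstrap log and image list show that cephadm pulled `docker.io/ceph/ceph:v16`, which resolved to 16.2.5 rather than 16.2.4. The daemons run whatever container image cephadm uses, not the host RPMs.

To pin an exact release, pass cephadm's global `--image` option before `bootstrap`. The sketch below assumes the image tag exists in your registry or mirror. The default `quay.io/ceph/ceph:v15.2.12` is an assumption; the log shows older images were also published under `docker.io/ceph/ceph`. The function name and `DRY_RUN` switch are hypothetical.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Assumed image; override with CEPH_IMAGE=... for another release or mirror.
CEPH_IMAGE="${CEPH_IMAGE:-quay.io/ceph/ceph:v15.2.12}"

# Bootstrap the first node with a pinned image instead of a floating tag like :v16.
bootstrap_pinned() {
  mon_ip="$1"                                   # 172.30.3.61 for ceph1 in this plan
  run cephadm --image "$CEPH_IMAGE" bootstrap --mon-ip "$mon_ip"
}
```

Usage: `bootstrap_pinned 172.30.3.61`. Afterwards, `ceph versions` inside `cephadm shell` shows the release each daemon is running.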

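6.3 Add the other hosts to the cluster:

Open the Ceph CLI:

```bash
$ cephadm shell
```

Install the cluster's public SSH key on the new hosts. Bootstrap already wrote the key to /etc/ceph/ceph.pub. `ceph cephadm get-pub-key` retrieves the same key (saved here to ~/ceph.pub); if /etc/ceph/ceph.pub is not at hand, pass ~/ceph.pub to `ssh-copy-id -i` instead.

```bash
$ ceph cephadm get-pub-key > ~/ceph.pub
$ ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph2
$ ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph3
```

Add the new hosts to the cluster:

```bash
$ ceph orch host add ceph2
$ ceph orch host add ceph3
```

If a host cannot be added this way, append its IP address:

```bash
$ ceph orch host add ceph2 172.30.3.62
$ ceph orch host add ceph3 172.30.3.63
```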
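The key copy and host registration can be looped over the remaining nodes. This is a sketch that passes each IP explicitly, as in the fallback above.

It assumes it runs on ceph1, where bootstrap wrote /etc/ceph/ceph.pub and the `ceph` CLI can reach the cluster. The function name and the `name:ip` argument format are hypothetical.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: for each "name:ip" pair, install the cluster SSH key
# on the host and register it with the orchestrator using its IP.
add_ceph_hosts() {
  for pair in "$@"; do
    name="${pair%%:*}"
    ip="${pair#*:}"
    run ssh-copy-id -f -i /etc/ceph/ceph.pub "root@$name"
    run ceph orch host add "$name" "$ip"
  done
}
```

Usage: `add_ceph_hosts ceph2:172.30.3.62 ceph3:172.30.3.63`, then `ceph orch host ls` to confirm both hosts are listed.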

Wait a while, then check the cluster status. This can take quite long, because the new hosts have to pull the images and start the containers.

```
$ ceph -s
  cluster:
    id:     09feacf4-4f13-11ed-a401-000c29d6f8f4
    health: HEALTH_WARN
            OSD count 0 < osd_pool_default_size 3

  services:
    mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 90s)
    mgr: ceph1.sqfwyo(active, since 22m), standbys: ceph2.mwhuqa
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   0 B used, 0 B / 0 B avail
    pgs:
```

When new hosts join, cephadm automatically extends the mon and mgr services onto them. The default placement allows up to five mons and two mgrs, hence one active and one standby mgr here. The HEALTH_WARN is expected until OSDs are added.

6.4 Deploy OSDs:

Check the disks:

```
$ lsblk
NAME          MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sr0            11:0    1 10.1G  0 rom
nvme0n1       259:0    0   20G  0 disk
|-nvme0n1p1   259:1    0    1G  0 part
`-nvme0n1p2   259:2    0   19G  0 part
  |-cl-root   253:0    0   17G  0 lvm  /var/lib/ceph/crash
  `-cl-swap   253:1    0    2G  0 lvm  [SWAP]
nvme0n2       259:3    0   10G  0 disk
```

Add every free disk in the cluster as an OSD:

```
$ ceph orch daemon add osd ceph1:/dev/nvme0n2
Created osd(s) 0 on host 'ceph1'

$ ceph orch daemon add osd ceph2:/dev/nvme0n2
Created osd(s) 3 on host 'ceph2'

$ ceph orch daemon add osd ceph3:/dev/nvme0n2
Created osd(s) 6 on host 'ceph3'
```
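Run `ceph orch device ls` first to confirm the device is listed as available on each host. The documentation requires, among other things, that the device have no partitions, no LVM state and no file system.

The per-host commands can then be looped, as in the sketch below. The function name is hypothetical. Alternatively, `ceph orch apply osd --all-available-devices` lets cephadm consume every eligible device automatically.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: create one OSD per host on the same device path.
# Usage: add_osds_on_device /dev/nvme0n2 ceph1 ceph2 ceph3
add_osds_on_device() {
  device="$1"; shift
  for host in "$@"; do
    run ceph orch daemon add osd "$host:$device"
  done
}
```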

Check the cluster status:

```
$ ceph -s
  cluster:
    id:     09feacf4-4f13-11ed-a401-000c29d6f8f4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 23m)
    mgr: ceph2.mwhuqa(active, since 13m), standbys: ceph1.sqfwyo
    osd: 9 osds: 9 up (since 7m), 9 in (since 8m)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   48 MiB used, 90 GiB / 90 GiB avail
    pgs:     1 active+clean
```

The same check, run later from inside `cephadm shell`:

```
[ceph: root@ceph1 ~]# ceph -s
  cluster:
    id:     09feacf4-4f13-11ed-a401-000c29d6f8f4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph1,ceph2,ceph3 (age 30m)
    mgr: ceph2.mwhuqa(active, since 12h), standbys: ceph1.sqfwyo
    osd: 9 osds: 9 up (since 12h), 9 in (since 12h)

  data:
    pools:   1 pools, 1 pgs
    objects: 0 objects, 0 B
    usage:   48 MiB used, 90 GiB / 90 GiB avail
    pgs:     1 active+clean
```

7. Verify the cluster in the dashboard:

8. Deploy RGW (S3)

8.1 Ceph version 15.2.12

8.1.1 Create the pools and set <pg_num> <pgp_num>

```
[root@node1 ~]# ceph osd pool create cn-beijing.rgw.buckets.index 64 64 replicated
pool 'cn-beijing.rgw.buckets.index' created
[root@node1 ~]# ceph osd pool create cn-beijing.rgw.buckets.data 128 128 replicated
pool 'cn-beijing.rgw.buckets.data' created
[root@node1 ~]# ceph osd pool create cn-beijing.rgw.buckets.non-ec 32 32 replicated
pool 'cn-beijing.rgw.buckets.non-ec' created
```
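Pool creation (8.1.1) and the rgw application tag (8.1.7) can be combined per pool. The sketch below also checks that pg_num is a power of two. Ceph recommends powers of two, and recent releases raise a health warning for pools whose pg_num is not one.

The helper names are hypothetical.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Succeed if $1 is a positive power of two.
is_power_of_two() {
  [ "$1" -gt 0 ] && [ $(( $1 & ($1 - 1) )) -eq 0 ]
}

# Hypothetical helper: create a replicated pool with pg_num = pgp_num and tag
# it for RGW. Usage:
#   create_rgw_pool cn-beijing.rgw.buckets.index 64
#   create_rgw_pool cn-beijing.rgw.buckets.data 128
#   create_rgw_pool cn-beijing.rgw.buckets.non-ec 32
create_rgw_pool() {
  pool="$1"; pg="$2"
  if ! is_power_of_two "$pg"; then
    echo "pg_num $pg is not a power of two" >&2
    return 1
  fi
  run ceph osd pool create "$pool" "$pg" "$pg" replicated
  run ceph osd pool application enable "$pool" rgw
}
```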

8.1.2 Create the realm (the zonegroup and zone follow):

```
[root@ceph1 ~]# radosgw-admin realm create --rgw-realm=YOUJIVEST.COM --default
{
    "id": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",
    "name": "YOUJIVEST.COM",
    "current_period": "8cf9f6e2-005a-4cd2-b0ec-58f634ce66bc",
    "epoch": 1
}
```

8.1.3 Create a new zonegroup

```
[root@ceph1 ~]# radosgw-admin zonegroup create --rgw-zonegroup=cn --rgw-realm=YOUJIVEST.COM --master --default
{
    "id": "38727586-d0ad-4df7-af2a-4bba2cbff495",
    "name": "cn",
    "api_name": "cn",
    "is_master": "true",
    "endpoints": [],
    "hostnames": [],
    "hostnames_s3website": [],
    "master_zone": "",
    "zones": [],
    "placement_targets": [],
    "default_placement": "",
    "realm_id": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",
    "sync_policy": {
        "groups": []
    }
}
```

8.1.4 Create the zone (`--rgw-zone` is the name of the zone in which radosgw runs)

```
[root@ceph1 ~]# radosgw-admin zone create --rgw-zonegroup=cn --rgw-zone=cn-beijing --master --default
{
    "id": "cc642b93-8b41-43c8-aa10-863a057401f1",
    "name": "cn-beijing",
    "domain_root": "cn-beijing.rgw.meta:root",
    "control_pool": "cn-beijing.rgw.control",
    "gc_pool": "cn-beijing.rgw.log:gc",
    "lc_pool": "cn-beijing.rgw.log:lc",
    "log_pool": "cn-beijing.rgw.log",
    "intent_log_pool": "cn-beijing.rgw.log:intent",
    "usage_log_pool": "cn-beijing.rgw.log:usage",
    "roles_pool": "cn-beijing.rgw.meta:roles",
    "reshard_pool": "cn-beijing.rgw.log:reshard",
    "user_keys_pool": "cn-beijing.rgw.meta:users.keys",
    "user_email_pool": "cn-beijing.rgw.meta:users.email",
    "user_swift_pool": "cn-beijing.rgw.meta:users.swift",
    "user_uid_pool": "cn-beijing.rgw.meta:users.uid",
    "otp_pool": "cn-beijing.rgw.otp",
    "system_key": {
        "access_key": "",
        "secret_key": ""
    },
    "placement_pools": [
        {
            "key": "default-placement",
            "val": {
                "index_pool": "cn-beijing.rgw.buckets.index",
                "storage_classes": {
                    "STANDARD": {
                        "data_pool": "cn-beijing.rgw.buckets.data"
                    }
                },
                "data_extra_pool": "cn-beijing.rgw.buckets.non-ec",
                "index_type": 0
            }
        }
    ],
    "realm_id": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",
    "notif_pool": "cn-beijing.rgw.log:notif"
}
```

The default placement target already points at the `cn-beijing.rgw.buckets.*` pools created in 8.1.1. This works because RGW names a zone's pools `<zone>.rgw.<purpose>`.

8.1.5 Check the zone, zonegroup and realm

```
[root@ceph1 ~]# radosgw-admin zone list
{
    "default_info": "cc642b93-8b41-43c8-aa10-863a057401f1",
    "zones": [
        "cn-beijing"
    ]
}
[root@ceph1 ~]# radosgw-admin zonegroup list
{
    "default_info": "38727586-d0ad-4df7-af2a-4bba2cbff495",
    "zonegroups": [
        "cn"
    ]
}
[root@ceph1 ~]# radosgw-admin realm list
{
    "default_info": "6ed9dc1d-8eb4-4c32-9d12-473f13e4835a",
    "realms": [
        "YOUJIVEST.COM"
    ]
}
```
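The realm, zonegroup and zone steps can be collected into one function. The upstream cephadm RGW guide for Octopus also commits the period after creating the zone, which the steps above do not show. The sketch below includes `radosgw-admin period update --commit` on that basis, so check it against your release's documentation.

The function name and `DRY_RUN` switch are hypothetical.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: create realm, master zonegroup and master zone, then
# commit the period. Usage: setup_rgw_realm YOUJIVEST.COM cn cn-beijing
setup_rgw_realm() {
  realm="$1"; zonegroup="$2"; zone="$3"
  run radosgw-admin realm create --rgw-realm="$realm" --default
  run radosgw-admin zonegroup create --rgw-zonegroup="$zonegroup" \
      --rgw-realm="$realm" --master --default
  run radosgw-admin zone create --rgw-zonegroup="$zonegroup" \
      --rgw-zone="$zone" --master --default
  # Publish the new realm configuration so the gateways pick it up.
  run radosgw-admin period update --rgw-realm="$realm" --commit
}
```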

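8.1.6 Configure SSL for S3 (store the certificate and private key in the config-key store under the realm/zone path):

```
[root@node1 ~]# ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.crt -i <certificate file>
set rgw/cert/YOUJIVEST.COM/cn-beijing.crt
[root@node1 ~]# ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.key -i <private key file>
set rgw/cert/YOUJIVEST.COM/cn-beijing.key
```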

8.1.7 Tag the pools with the rgw application

```bash
ceph osd pool application enable cn-beijing.rgw.buckets.index rgw
ceph osd pool application enable cn-beijing.rgw.buckets.data rgw
ceph osd pool application enable cn-beijing.rgw.buckets.non-ec rgw
```

8.1.8 Deploy the RGW service, then verify S3 access with curl

```
[root@node1 ~]# ceph orch apply rgw rgw.YOUJIVEST.COM.cn-beijing --realm=YOUJIVEST.COM --zone=cn-beijing --placement="3 ceph1 ceph2 ceph3" --ssl
```

An anonymous curl request to the gateway's HTTPS endpoint returns an empty bucket list:

```
<?xml version="1.0" encoding="UTF-8"?><ListAllMyBucketsResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/"><Owner><ID>anonymous</ID><DisplayName></DisplayName></Owner><Buckets></Buckets></ListAllMyBucketsResult>
```

8.1.9 Create the access key and secret key for external access

```
[root@node1 ~]# radosgw-admin user create --uid=esg --display-name='ESG User' --email=chenhu@youjivest.com
{
    "user_id": "esg",
    "display_name": "ESG User",
    "email": "chenhu@youjivest.com",
    "suspended": 0,
    "max_buckets": 1000,
    "subusers": [],
    "keys": [
        {
            "user": "esg",
            "access_key": "9Y3D6KQISHSURJHOUL8Z",
            "secret_key": "gDk7TXLoTgAxBDwxfBAxgt4VTJrbcb82AlMvzu91"
        }
    ],
    "swift_keys": [],
    "caps": [],
    "op_mask": "read, write, delete",
    "default_placement": "",
    "default_storage_class": "",
    "placement_tags": [],
    "bucket_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "user_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "temp_url_keys": [],
    "type": "rgw",
    "mfa_ids": []
}
```

8.1.10 Grant user esg capabilities on all buckets

`buckets=*` grants read and write capabilities for the bucket section of the RGW admin API. It does not grant anything on RADOS pools.

```
[root@node1 ~]# radosgw-admin caps add --uid=esg --caps="buckets=*"
{
    "user_id": "esg",
    "display_name": "ESG User",
    "email": "lvfaguo@youjivest.com",
    "suspended": 0,
    "max_buckets": 1000,
    "subusers": [],
    "keys": [
        {
            "user": "esg",
            "access_key": "9Y3D6KQISHSURJHOUL8Z",
            "secret_key": "gDk7TXLoTgAxBDwxfBAxgt4VTJrbcb82AlMvzu91"
        }
    ],
    "swift_keys": [],
    "caps": [
        {
            "type": "buckets",
            "perm": "*"
        }
    ],
    "op_mask": "read, write, delete",
    "default_placement": "",
    "default_storage_class": "",
    "placement_tags": [],
    "bucket_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "user_quota": {
        "enabled": false,
        "check_on_raw": false,
        "max_size": -1,
        "max_size_kb": 0,
        "max_objects": -1
    },
    "temp_url_keys": [],
    "type": "rgw",
    "mfa_ids": []
}
```

8.1.11 Save the user's keys for external S3 access

```
access_key: 9Y3D6KQISHSURJHOUL8Z
secret_key: gDk7TXLoTgAxBDwxfBAxgt4VTJrbcb82AlMvzu91
```
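The command that extracted the keys is not shown. Below is a sed-based sketch for pulling them out of `radosgw-admin user info --uid=esg` without extra tools.

It assumes the pretty-printed, one-key-per-line JSON shown above. jq is more robust if it is installed. The helper name is hypothetical.

```bash
#!/bin/sh
# Hypothetical helper: print the value of the first "<field>": "<value>" pair
# in pretty-printed JSON read from stdin. Usage:
#   info=$(radosgw-admin user info --uid=esg)
#   printf '%s\n' "$info" | first_json_field access_key
#   printf '%s\n' "$info" | first_json_field secret_key
first_json_field() {
  sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p" | head -n 1
}
```

The keys are credentials, so store them in a file readable only by the intended user (for example, after `umask 077`).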

8.2 Ceph version 16.2.4

All other steps are the same; only the command that creates the RGW service differs:

```bash
ceph orch apply rgw YOUJIVEST.COM.cn-beijing YOUJIVEST.COM cn-beijing --placement=label:rgw --port=8000
```
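A `label:rgw` placement only schedules daemons on hosts that carry the `rgw` label, so the hosts must be labelled first with `ceph orch host label add`. The sketch below reuses the service, realm and zone names and port 8000 from the command above. The function name is hypothetical.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: label each host for RGW, then apply the RGW service
# by label. Usage: apply_rgw_by_label ceph1 ceph2 ceph3
apply_rgw_by_label() {
  for host in "$@"; do
    run ceph orch host label add "$host" rgw
  done
  run ceph orch apply rgw YOUJIVEST.COM.cn-beijing YOUJIVEST.COM cn-beijing \
      --placement=label:rgw --port=8000
}
```

Afterwards, `ceph orch ls rgw` and `ceph orch ps` show whether the gateways came up on the labelled hosts.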

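9. Handling production issues

9.1 Ceph 15.2.12: the RGW SSL certificate has expired

In Ceph 15.2.12, updating the RGW (object gateway) SSL certificate with `ceph config-key set` does not take effect on its own, even once the stored certificate is current. The change is only applied when the RGW service is restarted.

To make the new certificate take effect:

1. Store the new certificate in Ceph's config-key store with `ceph config-key set`, making sure the file path and key name are correct. For example:

   ```bash
   ceph config-key set rgw/cert/YOUJIVEST.COM/cn-beijing.crt -i /etc/letsencrypt/live/s3.youjivest.com/fullchain.pem
   ```

   If the private key changed too, store it under the matching `.key` entry the same way.

2. Restart the RGW service with the Ceph orchestrator so it loads the new certificate:

   ```bash
   ceph orch restart rgw.<realm>.<zone>
   ```

   Replace `<realm>` with the RGW realm name and `<zone>` with the zone name. If no custom realm or zone was created, both are usually `default`. `ceph orch ls rgw` lists the exact service name.

3. After the restart, RGW serves the new certificate. Confirm this by connecting with `openssl s_client` and checking the certificate details:

   ```bash
   openssl s_client -connect <rgw_host>:<rgw_port>
   ```

   Replace `<rgw_host>` with the RGW host name or IP address and `<rgw_port>` with the port RGW listens on (usually 443 with SSL, 80 without).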
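The three steps can be wrapped in two functions: one stores the certificate and key and restarts the service, the other prints the expiry date of the certificate a gateway actually serves. This is a sketch.

The function names, argument order and `DRY_RUN` switch are hypothetical. The `.crt`/`.key` entries follow the config-key paths used in 8.1.6.

```bash
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical helper: store a renewed certificate and key for a realm/zone,
# then restart the RGW service (name from `ceph orch ls rgw`).
renew_rgw_cert() {
  realm="$1"; zone="$2"; service="$3"; cert="$4"; key="$5"
  run ceph config-key set "rgw/cert/$realm/$zone.crt" -i "$cert"
  run ceph config-key set "rgw/cert/$realm/$zone.key" -i "$key"
  run ceph orch restart "$service"
}

# Print the notAfter date of the certificate a gateway serves.
# </dev/null makes s_client exit right after the handshake.
served_cert_end_date() {
  host="$1"; port="${2:-443}"
  openssl s_client -connect "$host:$port" -servername "$host" </dev/null 2>/dev/null |
    openssl x509 -noout -enddate
}
```

For example: `renew_rgw_cert YOUJIVEST.COM cn-beijing <service name> /etc/letsencrypt/live/s3.youjivest.com/fullchain.pem /etc/letsencrypt/live/s3.youjivest.com/privkey.pem`. The `privkey.pem` path is an assumption based on certbot's usual layout. Then run `served_cert_end_date s3.youjivest.com` and compare the date with the renewed certificate.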

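9.2 An OSD pool is full

Method 1: change the pool's replica count

Check a pool's replica count:

1. List the pools (shows each pool's ID and name):

   ```bash
   ceph osd lspools
   ```

2. Pick the pool to check and note its ID or name.
3. Query its replica count:

   ```bash
   ceph osd pool get <pool_name> size
   ```

   Replace `<pool_name>` with the pool name. The command prints the pool's replica count.

Change the replica count:

```bash
ceph osd pool set <pool_name> size <new_size>
```

Replace `<pool_name>` with the pool to change and `<new_size>` with the new replica count. For example, to set the replica count of pool "my_pool" to 3:

```bash
ceph osd pool set my_pool size 3
```

Lowering the replica count (for example from 3 to 2) frees raw capacity, but it also lowers the number of copies and therefore the number of failures the pool can survive. Raising it costs capacity. Also check `min_size` (`ceph osd pool get <pool_name> min_size`): a placement group stops serving I/O when fewer than `min_size` copies are available. Understand these effects before changing the value.

Changing the replica count triggers data rebalancing, which takes time and can degrade performance, and in some cases availability, while it runs. Do it at a suitable time and consider the applications and services that depend on the pool.

For more details, see the official Ceph documentation or ask the Ceph community.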
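Why lowering `size` frees space: each object is stored `size` times, so usable capacity is roughly raw capacity divided by `size`. In addition, OSDs stop accepting writes at the full ratio (0.95 by default; the nearfull warning fires at 0.85).

With the 90 GiB raw capacity that `ceph -s` reported above, size 3 gives about 90 × 0.95 / 3 = 28.5 GiB before the cluster counts as full. The small calculator below is a hypothetical helper. It ignores metadata overhead and uneven data distribution across OSDs, both of which make the real limit lower.

```bash
#!/bin/sh
# Estimate usable capacity in GiB: raw * full_ratio / replica size.
# Usage: usable_gib <raw GiB> <size> [full_ratio]
usable_gib() {
  raw="$1"; size="$2"; ratio="${3:-0.95}"   # 0.95 = default full ratio
  awk -v raw="$raw" -v size="$size" -v ratio="$ratio" \
      'BEGIN { printf "%.1f\n", raw * ratio / size }'
}
```

`usable_gib 90 3` prints 28.5. `ceph osd dump | grep ratio` shows the cluster's actual full, backfillfull and nearfull ratios, and `ceph df` shows per-pool usage.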

Method 2: buy more disks and expand the cluster

For detailed steps, see:
https://www.cnblogs.com/andy996/p/17448038.html
