Netperf - The fastest TCP connection with Multipath TCP

http://multipath-tcp.org/pmwiki.php?n=Main.50Gbps

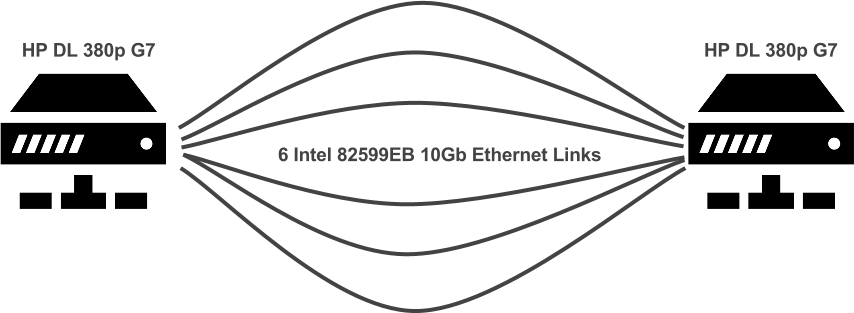

Transferring data quickly is a key objective for many applications inside datacenters. While some datacenters still use Gigabit/sec Ethernet interfaces, many are installing 10 Gigabit/sec interfaces on high-end servers. The next steps will be 40 Gigabit/sec and 100 Gigabit/sec, but these technologies are still expensive today. Multipath TCP, a TCP extension specified in RFC 6824, allows end hosts to efficiently use multiple interfaces for a single TCP connection. To test the performance limits of Multipath TCP using 10 Gigabit/sec interfaces, we built the following setup in our lab:

Our lab uses two HP DL380p G7 servers, generously provided by HP Belgium. Each server contains three dual-port 10 Gigabit/sec NICs (Intel 82599EB 10 Gigabit Ethernet), provided by Intel. Each server runs the Linux kernel with our Multipath TCP implementation.

The video below demonstrates the goodput achieved by Multipath TCP in this setup. We use netperf and open a single TCP connection between the two servers. At startup, Multipath TCP is enabled on only one interface, and netperf fully uses that interface: this is the goodput that regular TCP would normally achieve. We then successively enable Multipath TCP on the other Ethernet interfaces. Multipath TCP automatically adapts to the available bandwidth and quickly benefits from the new paths.
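One way to reproduce this sequence is sketched below. It assumes the MPTCP-patched iproute2 distributed with the multipath-tcp.org kernel, which adds a `multipath on|off` flag to `ip link`, and uses hypothetical interface names (eth0 to eth5). The function names and the DRY_RUN switch are our own; this is not the script used for the video.

```shell
#!/bin/sh
# Sketch of the demo sequence. Assumes the MPTCP-patched iproute2 from
# multipath-tcp.org ("ip link set dev IFACE multipath on|off") and
# hypothetical interface names. Set DRY_RUN=1 to print instead of run.

# run CMD...: execute a command, or print it in dry-run mode.
run() {
    if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# mptcp_only_on KEEP IFACE...: enable MPTCP on KEEP, disable it on the others.
mptcp_only_on() {
    keep=$1; shift
    for ifc in "$@"; do
        if [ "$ifc" = "$keep" ]; then
            run ip link set dev "$ifc" multipath on
        else
            run ip link set dev "$ifc" multipath off
        fi
    done
}

# mptcp_enable_stepwise DELAY IFACE...: enable MPTCP on each interface in
# turn, waiting DELAY seconds first so each goodput step is visible.
mptcp_enable_stepwise() {
    delay=$1; shift
    for ifc in "$@"; do
        [ -n "${DRY_RUN:-}" ] || sleep "$delay"
        run ip link set dev "$ifc" multipath on
    done
}
```

For example, call `mptcp_only_on eth0 eth0 eth1 eth2 eth3 eth4 eth5` before starting netperf, then `mptcp_enable_stepwise 5 eth1 eth2 eth3 eth4 eth5` while the test is running.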

Using recent hardware and three dual-port 10 Gigabit/sec NICs, Multipath TCP is able to transmit a single data stream at up to 51.8 Gbit/s. This corresponds to more than one DVD per second, or an entire Blu-Ray disc (25 GB) in only 5 seconds!
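A quick check of these comparisons, assuming the 51.8 Gbit/s figure is goodput, decimal units (1 GB = 10^9 bytes) and a single-layer DVD of 4.7 GB (the DVD capacity is our assumption; the text only says "one DVD"):

$$
\frac{51.8\ \text{Gbit/s}}{8\ \text{bit/byte}} = 6.475\ \text{GB/s},\qquad
\frac{6.475\ \text{GB/s}}{4.7\ \text{GB}} \approx 1.38\ \text{DVDs/s},\qquad
\frac{25\ \text{GB}}{6.475\ \text{GB/s}} \approx 3.9\ \text{s}
$$

At this rate a 25 GB Blu-Ray disc takes just under 4 seconds, so the 5-second figure is a rounded, conservative estimate.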

The technical details

Multipath TCP allows a host to use multiple interfaces/IP addresses simultaneously for a single data stream, while still presenting the standard TCP socket API to the application. Multipath TCP can thus increase download speed by aggregating the bandwidth of each interface. Additionally, MPTCP allows mobile hosts to hand over traffic from WiFi to 3G without disrupting the application. Various other use cases exist for Multipath TCP, such as in datacenters or for IPv6/IPv4 coexistence.

To achieve this record, researchers from Université catholique de Louvain in Belgium tuned their reference implementation of MPTCP in the Linux kernel. All the software used to achieve this performance is open source and publicly available.

The Linux kernel used on these machines is based on the stable v0.86 release of Multipath TCP. This stable release has been tuned to improve its performance:

- The kernel has been rebased onto the latest net-next tree.

- We added hardware offloading support for MPTCP (to be included in the next stable MPTCP release).

- By default, the v0.86 Multipath TCP kernel establishes a full mesh of TCP subflows among all available IP addresses of both hosts. The custom kernel prevents this and establishes only one TCP subflow per interface.

- Some minor optimizations to the Multipath TCP stack, which will be part of the upcoming v0.87 release.
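Counting subflows shows why the full mesh matters in this setup. A minimal worked example, assuming each server exposes one IPv4 address on each of its six 10 Gigabit/sec ports (three dual-port NICs) and that the full mesh pairs every local address with every remote address:

$$
S_{\text{full mesh}} = n_{\text{client}} \times n_{\text{server}} = 6 \times 6 = 36,
\qquad
S_{\text{per interface}} = n_{\text{client}} = 6
$$

If each subflow leaves through the interface that owns its source address, every client port would carry six subflows in the full mesh, whereas the per-interface policy gives each port exactly one.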

All the customizations have been integrated into the latest v0.89 stable release of MPTCP.

Another factor that influences the performance of Multipath TCP is the kernel configuration. To achieve 50 Gbps, we used a specific kernel configuration, optimized for our hardware in order to squeeze out the last bits of performance. You can access it here. If you use this config file, make sure to adapt it to your hardware.
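Before reusing a shared kernel configuration, it helps to compare the Multipath TCP options it enables with those of your running kernel. A small sketch, assuming the option names begin with `CONFIG_MPTCP` (true of the main switch; the other names vary by release) and that the running kernel's configuration is available in `/proc/config.gz` or `/boot/config-<release>`. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: list the Multipath TCP options enabled in a kernel configuration.
# Assumption: MPTCP option names start with CONFIG_MPTCP.

# mptcp_kconfig FILE: print MPTCP options that are built in (=y) or modules (=m).
mptcp_kconfig() {
    grep -E '^CONFIG_MPTCP[A-Z0-9_]*=[ym]$' "$1"
}

# mptcp_kconfig_running: same check for the booted kernel. Uses
# /proc/config.gz if the kernel exposes it (CONFIG_IKCONFIG_PROC),
# otherwise the distribution's /boot/config-<release> file.
mptcp_kconfig_running() {
    if [ -r /proc/config.gz ]; then
        zcat /proc/config.gz | grep -E '^CONFIG_MPTCP[A-Z0-9_]*=[ym]$'
    else
        mptcp_kconfig "/boot/config-$(uname -r)"
    fi
}
```

Running `mptcp_kconfig` on the downloaded file and `mptcp_kconfig_running` on each machine makes differences in MPTCP support easy to spot before rebuilding.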

After booting the machines, we ran a configuration script on both the client and the server. It performs the following tuning:

- Enable Receive Flow Steering (RFS)

- Configure the MTU and transmit-queue length of each interface

- Adjust the interrupt-coalescing parameters of each interface

- Set the interrupt affinity of each NIC (interrupts are sent to a different CPU from the one running the application)

- Increase the maximum memory settings of the TCP/IP stack

- Set the congestion-control, timestamp, SACK, ... parameters through the sysctl interface
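The steps above can be sketched with standard Linux tools (`sysctl`, `ip`, `ethtool`, and writes to `/proc` and `/sys`). Every value below (MTU, queue length, coalescing delay, buffer sizes, flow counts, IRQ numbers, CPU masks, congestion-control name) is an illustrative assumption, not the setting used for the 51.8 Gbit/s run; the function names and the DRY_RUN switch are hypothetical.

```shell
#!/bin/sh
# Sketch of the tuning steps. All values are illustrative assumptions:
# adapt interface names, IRQ numbers, CPU masks and sizes to your hardware.
# Run as root; set DRY_RUN=1 to print the actions instead of applying them.

# run CMD...: execute a command, or print it in dry-run mode.
run() {
    if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# wr FILE VALUE: write VALUE into a /proc or /sys file, or print it.
wr() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s > %s\n' "$2" "$1"
    else
        printf '%s\n' "$2" > "$1"
    fi
}

# tune_stack: host-wide settings, applied once.
tune_stack() {
    # RFS: size of the global socket flow table.
    run sysctl -w net.core.rps_sock_flow_entries=32768
    # Larger maximum socket buffers (bytes) for a high bandwidth-delay product.
    run sysctl -w net.core.rmem_max=134217728
    run sysctl -w net.core.wmem_max=134217728
    run sysctl -w net.ipv4.tcp_rmem="4096 87380 134217728"
    run sysctl -w net.ipv4.tcp_wmem="4096 65536 134217728"
    # Congestion control (name taken from $CC) plus timestamps and SACK.
    run sysctl -w net.ipv4.tcp_congestion_control="${CC:-cubic}"
    run sysctl -w net.ipv4.tcp_timestamps=1
    run sysctl -w net.ipv4.tcp_sack=1
}

# tune_iface IFACE IRQ CPUMASK: per-interface settings.
tune_iface() {
    ifc=$1 irq=$2 mask=$3
    # Jumbo-frame MTU and a longer transmit queue.
    run ip link set dev "$ifc" mtu 9000 txqueuelen 10000
    # Interrupt coalescing: delay RX interrupts by up to 50 microseconds.
    run ethtool -C "$ifc" rx-usecs 50
    # RFS per-queue flow count: global table / number of RX queues
    # (assuming 16 queues); repeat for every rx-N queue of the NIC.
    wr "/sys/class/net/$ifc/queues/rx-0/rps_flow_cnt" 2048
    # Hex CPU mask for this IRQ, e.g. 4 = CPU 2, away from netperf on CPU 1.
    wr "/proc/irq/$irq/smp_affinity" "$mask"
}
```

On each host, call `tune_stack` once and then `tune_iface` for every port (for example `tune_iface eth2 77 4`, with the IRQ number taken from `/proc/interrupts`). A running irqbalance daemon may later rewrite `smp_affinity`.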

Here are the client's script and the server's script.

The last element of our setup is netperf, a standard tool for benchmarking TCP performance. We use a custom version of netperf, extended to perform true zero-copy send/receive in the omni test. Our patch has been submitted to the netperf community but has not yet been included in the standard netperf distribution. It can be retrieved from GitHub.

netperf was run with the following parameters:

root@comp9server:~# netperf -t omni -H 10.1.10.2 -l 30 -T 1/1 -c -C -- -m 512k -V

What are these options?

- `-t omni`: the netperf test to use; it sends a single data stream between the two hosts.
- `-H 10.1.10.2`: the destination server's IP address.
- `-l 30`: the duration of the test (30 seconds).
- `-T 1/1`: pins the application on the client side (netperf) and on the server side (netserver) to CPU 1.
- `-c -C`: report local (client) and remote (server) CPU usage at the end of the test.
- `--`: separates netperf's global options from the test-specific options that follow.
- `-m 512k`: the size of the buffer passed to each send call.
- `-V`: use zero-copy send and receive.
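When scripting repeated runs, the split between global and test-specific options around `--` is easy to get wrong. A small wrapper that rebuilds the command above, assuming `netserver` (netperf's server daemon) is already running on the remote host and the patched netperf binary is first in `$PATH`; the function name and the DRY_RUN switch are hypothetical.

```shell
#!/bin/sh
# Sketch: run the single-stream MPTCP benchmark with the options listed above.
# Assumptions: netserver already runs on the remote host and the patched
# netperf (with zero-copy -V support) is first in $PATH.
# Set DRY_RUN=1 to print the command line instead of executing it.

# run_mptcp_bench HOST [SECONDS] [CPU]
run_mptcp_bench() {
    host=$1
    secs=${2:-30}   # -l: test length in seconds
    cpu=${3:-1}     # -T: CPU for netperf and netserver (same form as above)
    # Global options go before "--", omni test-specific options after it.
    set -- netperf -t omni -H "$host" -l "$secs" -T "$cpu/$cpu" -c -C -- -m 512k -V
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

With the defaults, `run_mptcp_bench 10.1.10.2` issues exactly the command shown earlier; passing a different duration or CPU only changes `-l` and `-T`.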

If you experiment with this setup, please report the performance you achieved on the mptcp-dev mailing list.

If you have any questions or problems setting up this environment, let us know on the list.
