Greenplum扩容详解

随着收集额外数据并且现有数据的定期增长,数据仓库通常会随着时间的推移而不断增长。 有时,有必要增加数据库能力来联合不同的数据仓库到一个数据库中。 数据仓库也可能需要额外的计算能力(CPU)来适应新增加的分析项目。 在系统被初始定义时就留出增长的空间是很好的,但是即便用户预期到了高增长率,提前太多在资源上投资通常也不明智。 因此,用户应该寄望于定期地执行一次数据库扩容项目。Greenplum使用gpexpand工具进行扩容,所以本文首先会介绍下gpexpand工具。本文为博客园作者所写: 一寸HUI,个人博客地址:https://www.cnblogs.com/zsql/

一、gpexpand介绍

gpexpand是在阵列中的新主机上扩展现有的Greenplum数据库的一个工具,使用方法如下:

gpexpand [{-f|--hosts-file} hosts_file]

| {-i|--input} input_file [-B batch_size]

| [{-d | --duration} hh:mm:ss | {-e|--end} 'YYYY-MM-DD hh:mm:ss']

[-a|-analyze]

[-n parallel_processes]

| {-r|--rollback}

| {-c|--clean}

[-v|--verbose] [-s|--silent]

[{-t|--tardir} directory ]

[-S|--simple-progress ]

gpexpand -? | -h | --help

gpexpand --version

参数详解:

-a | --analyze

在扩展后运行ANALYZE更新表的统计信息,默认是不运行ANALYZE。

-B batch_size

在暂停一秒钟之前发送给给定主机的远程命令的批量大小。默认值是16, 有效值是1-128。

gpexpand工具会发出许多设置命令,这些命令可能会超出主机的已验证 连接的最大阈值(由SSH守护进程配置中的MaxStartups定义)。该一秒钟 的暂停允许在gpexpand发出更多命令之前完成认证。

默认值通常不需要改变。但是,如果gpexpand由于连接错误 (例如'ssh_exchange_identification: Connection closed by remote host.')而失败,则可能需要减少命令的最大数量。

-c | --clean

删除扩展模式。

-d | --duration hh:mm:ss

扩展会话的持续时间。

-e | --end 'YYYY-MM-DD hh:mm:ss'

扩展会话的结束日期及时间。

-f | --hosts-file filename

指定包含用于系统扩展的新主机列表的文件的名称。文件的每一行都必须包含一个主机名。

该文件可以包含指定或不指定网络接口的主机名。gpexpand工具处理这两种情况, 如果原始节点配置了多个网络接口,则将接口号添加到主机名的末尾。

Note: Greenplum数据库Segment主机的命名习惯是sdwN,其中sdw 是前缀并且N是数字。例如,sdw1、sdw2等等。 对于具有多个接口的主机,约定是在主机名后面添加破折号(-)和数字。例如sdw1-1 和sdw1-2是主机sdw1的两个接口名称。

-i | --input input_file

指定扩展配置文件的名称,其中为每个要添加的Segment包含一行,格式为:

hostname:address:port:datadir:dbid:content:preferred_role

-n parallel_processes

要同时重新分布的表的数量。有效值是1 - 96。

每个表重新分布过程都需要两个数据库连接:一个用于更改表,另一个用于在扩展方案中更新表的状态。 在增加-n之前,检查服务器配置参数max_connections的当前值, 并确保不超过最大连接限制。

-r | --rollback

回滚失败的扩展设置操作。

-s | --silent

以静默模式运行。在警告时,不提示确认就可继续。

-S | --simple-progress

如果指定,gpexpand工具仅在Greenplum数据库表 gpexpand.expansion_progress中记录最少的进度信息。该工具不在表 gpexpand.status_detail中记录关系大小信息和状态信息。

指定此选项可通过减少写入gpexpand表的进度信息量来提高性能。

[-t | --tardir] directory

Segment主机上一个目录的完全限定directory,gpexpand 工具会在其中拷贝一个临时的tar文件。该文件包含用于创建Segment实例的Greenplum数据库文件。 默认目录是用户主目录。

-v | --verbose

详细调试输出。使用此选项,该工具将输出用于扩展数据库的所有DDL和DML。

--version

显示工具的版本号并退出。

-? | -h | --help

显示在线帮助

gpexpand的具体过程:

gpexpand工具分两个阶段执行系统扩展:Segment初始化和表重新分布

- 在初始化阶段,gpexpand用一个输入文件运行,该文件指定新Segment的数据目录、 dbid值和其他特征。用户可以手动创建输入文件,也可以在交互式对话中 按照提示进行操作。

- 在表数据重分布阶段,gpexpand会重分布表的数据,使数据在新旧segment 实例之间平衡

要开始重分布阶段,可以通过运行gpexpand并指定-d(运行时间周期) 或-e(结束时间)选项,或者不指定任何选项。如果客户指定了结束时间或运行周期,工具会在 扩展模式下重分布表,直到达到设定的结束时间或执行周期。如果没指定任何选项,工具会继续处理直到扩展模式的表 全部完成重分布。每张表都会通过ALTER TABLE命令来在所有的节点包括新增加的segment实例 上进行重分布,并设置表的分布策略为其原始策略。如果gpexpand完成所有表的重分布,它会 显示成功信息并退出。

二、扩容介绍

扩容可以分为纵向扩容和横向扩容,扩容的先决条件如下:

- 用户作为Greenplum数据库超级用户(gpadmin)登录。

- 新的Segment主机已被根据现有的Segment主机安装和配置。这包括:

- 配置硬件和操作系统

- 安装Greenplum软件

- 创建gpadmin用户帐户

- 交换SSH密钥

- 用户的Segment主机上有足够的磁盘空间来临时保存最大表的副本。

- 重新分布数据时,Greenplum数据库必须以生产模式运行。Greenplum数据库不能是受限模式或 Master模式。不能指定gpstart的选项-R或者-m 启动Greenplum数据库

扩容的基本步骤:

- 创建扩容输入文件:gpexpand -f hosts_file

- 初始化Segment并且创建扩容schema:gpexpand -i input_file,gpexpand会创建一个数据目录、从现有的数据库复制表到新的Segment上并且为扩容方案中的每个表捕捉元数据用于状态跟踪。 在这个处理完成后,扩容操作会被提交并且不可撤回。

- 重新分布表数据:gpexpand -d duration

- 移除扩容schema:gpexpand -c

三、纵向扩容

3.1、扩容前准备

首先看看现有的集群的状态:gpstate

[gpadmin@lgh1 conf]$ gpstate

20210331:14:10:34:014725 gpstate:lgh1:gpadmin-[INFO]:-Starting gpstate with args:

20210331:14:10:34:014725 gpstate:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:14:10:34:014725 gpstate:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:14:10:34:014725 gpstate:lgh1:gpadmin-[INFO]:-Obtaining Segment details from master...

20210331:14:10:34:014725 gpstate:lgh1:gpadmin-[INFO]:-Gathering data from segments...

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-Greenplum instance status summary

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Master instance = Active

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Master standby = lgh2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Standby master state = Standby host passive

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total segment instance count from metadata = 4

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Primary Segment Status

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total primary segments = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment valid (at master) = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Mirror Segment Status

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segments = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment valid (at master) = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment failures (at master) = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as primary segments = 0

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as mirror segments = 2

20210331:14:10:35:014725 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

现在的状态是有3台主机,一个是master节点,还有两个segment的机器,每个segment的机器上都有一个primary和mirror的segment,现在计划在现有的集群上进行segment的扩容,在每台机器上的segment的数量翻倍,

现在segment的目录为:

primary:/apps/data1/primary

mirror:/apps/data1/mirror

现在需要在两个segment的主机上创建新的目录如下:

primary:/apps/data2/primary

mirror:/apps/data2/mirror

上面的目录的所属组和用户均为gpadmin:gpamdin,这里创建目录可以使用gpssh创建也可以一个一个的创建

3.2、创建初始化文件

查看目前segment的主机:

[gpadmin@lgh1 conf]$ cat seg_hosts

lgh2

lgh3

执行:gpexpand -f seg_hosts

[gpadmin@lgh1 conf]$ gpexpand -f seg_hosts

20210331:14:16:29:015453 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:14:16:29:015453 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:14:16:29:015453 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

System Expansion is used to add segments to an existing GPDB array.

gpexpand did not detect a System Expansion that is in progress.

Before initiating a System Expansion, you need to provision and burn-in

the new hardware. Please be sure to run gpcheckperf to make sure the

new hardware is working properly.

Please refer to the Admin Guide for more information.

Would you like to initiate a new System Expansion Yy|Nn (default=N):

> y

You must now specify a mirroring strategy for the new hosts. Spread mirroring places

a given hosts mirrored segments each on a separate host. You must be

adding more hosts than the number of segments per host to use this.

Grouped mirroring places all of a given hosts segments on a single

mirrored host. You must be adding at least 2 hosts in order to use this.

What type of mirroring strategy would you like?

spread|grouped (default=grouped): #默认的mirror方式

>

** No hostnames were given that do not already exist in the **

** array. Additional segments will be added existing hosts. **

By default, new hosts are configured with the same number of primary

segments as existing hosts. Optionally, you can increase the number

of segments per host.

For example, if existing hosts have two primary segments, entering a value

of 2 will initialize two additional segments on existing hosts, and four

segments on new hosts. In addition, mirror segments will be added for

these new primary segments if mirroring is enabled.

How many new primary segments per host do you want to add? (default=0):

> 1

Enter new primary data directory 1:

> /apps/data2/primary

Enter new mirror data directory 1:

> /apps/data2/mirror

Generating configuration file...

20210331:14:17:05:015453 gpexpand:lgh1:gpadmin-[INFO]:-Generating input file...

Input configuration file was written to 'gpexpand_inputfile_20210331_141705'.

Please review the file and make sure that it is correct then re-run

with: gpexpand -i gpexpand_inputfile_20210331_141705 #生成的初始化文件

20210331:14:17:05:015453 gpexpand:lgh1:gpadmin-[INFO]:-Exiting...

查看初始化文件:

[gpadmin@lgh1 conf]$ cat gpexpand_inputfile_20210331_141705

lgh3|lgh3|6001|/apps/data2/primary/gpseg2|7|2|p

lgh2|lgh2|7001|/apps/data2/mirror/gpseg2|10|2|m

lgh2|lgh2|6001|/apps/data2/primary/gpseg3|8|3|p

lgh3|lgh3|7001|/apps/data2/mirror/gpseg3|9|3|m

3.3、初始化Segment并且创建扩容schema

执行命令:gpexpand -i gpexpand_inputfile_20210331_141705

[gpadmin@lgh1 conf]$ gpexpand -i gpexpand_inputfile_20210331_141705

20210331:14:21:40:016004 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:14:21:40:016004 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:14:21:40:016004 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

20210331:14:21:40:016004 gpexpand:lgh1:gpadmin-[INFO]:-Heap checksum setting consistent across cluster

20210331:14:21:40:016004 gpexpand:lgh1:gpadmin-[INFO]:-Syncing Greenplum Database extensions

20210331:14:21:40:016004 gpexpand:lgh1:gpadmin-[INFO]:-The packages on lgh2 are consistent.

20210331:14:21:41:016004 gpexpand:lgh1:gpadmin-[INFO]:-The packages on lgh3 are consistent.

20210331:14:21:41:016004 gpexpand:lgh1:gpadmin-[INFO]:-Locking catalog

20210331:14:21:41:016004 gpexpand:lgh1:gpadmin-[INFO]:-Locked catalog

20210331:14:21:42:016004 gpexpand:lgh1:gpadmin-[INFO]:-Creating segment template

20210331:14:21:42:016004 gpexpand:lgh1:gpadmin-[INFO]:-Copying postgresql.conf from existing segment into template

20210331:14:21:43:016004 gpexpand:lgh1:gpadmin-[INFO]:-Copying pg_hba.conf from existing segment into template

20210331:14:21:43:016004 gpexpand:lgh1:gpadmin-[INFO]:-Creating schema tar file

20210331:14:21:43:016004 gpexpand:lgh1:gpadmin-[INFO]:-Distributing template tar file to new hosts

20210331:14:21:44:016004 gpexpand:lgh1:gpadmin-[INFO]:-Configuring new segments (primary)

20210331:14:21:44:016004 gpexpand:lgh1:gpadmin-[INFO]:-{'lgh2': '/apps/data2/primary/gpseg3:6001:true:false:8:3::-1:', 'lgh3': '/apps/data2/primary/gpseg2:6001:true:false:7:2::-1:'}

20210331:14:21:47:016004 gpexpand:lgh1:gpadmin-[INFO]:-Cleaning up temporary template files

20210331:14:21:48:016004 gpexpand:lgh1:gpadmin-[INFO]:-Cleaning up databases in new segments.

20210331:14:21:49:016004 gpexpand:lgh1:gpadmin-[INFO]:-Unlocking catalog

20210331:14:21:49:016004 gpexpand:lgh1:gpadmin-[INFO]:-Unlocked catalog

20210331:14:21:49:016004 gpexpand:lgh1:gpadmin-[INFO]:-Creating expansion schema

20210331:14:21:49:016004 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database template1

20210331:14:21:50:016004 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database postgres

20210331:14:21:50:016004 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database gpebusiness

20210331:14:21:50:016004 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database gpperfmon

20210331:14:21:50:016004 gpexpand:lgh1:gpadmin-[INFO]:-Starting new mirror segment synchronization

20210331:14:21:58:016004 gpexpand:lgh1:gpadmin-[INFO]:-************************************************

20210331:14:21:58:016004 gpexpand:lgh1:gpadmin-[INFO]:-Initialization of the system expansion complete.

20210331:14:21:58:016004 gpexpand:lgh1:gpadmin-[INFO]:-To begin table expansion onto the new segments

20210331:14:21:58:016004 gpexpand:lgh1:gpadmin-[INFO]:-rerun gpexpand

20210331:14:21:58:016004 gpexpand:lgh1:gpadmin-[INFO]:-************************************************

20210331:14:21:58:016004 gpexpand:lgh1:gpadmin-[INFO]:-Exiting...

使用gpstate验证下:(segment为8了,成功)

[gpadmin@lgh1 conf]$ gpstate

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-Starting gpstate with args:

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-Obtaining Segment details from master...

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-Gathering data from segments...

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-Greenplum instance status summary

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Master instance = Active

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Master standby = lgh2

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Standby master state = Standby host passive

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total segment instance count from metadata = 8

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Primary Segment Status

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total primary segments = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment valid (at master) = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Mirror Segment Status

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segments = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment valid (at master) = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment failures (at master) = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as primary segments = 0

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as mirror segments = 4

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:- Cluster Expansion = In Progress

20210331:14:23:19:016384 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

3.4、重分布数据

执行命令:gpexpand -d 1:00:00 #不动命令回去看gpexpand命令说明,这里没有业务表,所以很快就重分布完成了,如果数据量很大,可以增加线程

20210331:14:28:45:016891 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:14:28:45:016891 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:14:28:45:016891 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

20210331:14:28:45:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding postgres.gpcc_schema.pghba_lock

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding postgres.gpcc_schema.pghba_lock

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_schedule

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_schedule

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_scan_history

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_scan_history

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_wlm_rule

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_wlm_rule

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_export_log

20210331:14:28:46:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_export_log

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_table_info

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_table_info

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics._gpcc_plannode_history

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics._gpcc_plannode_history

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpcc_schema.pghba_lock

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpcc_schema.pghba_lock

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_alert_history

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_alert_history

20210331:14:28:47:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_database_history

20210331:14:28:48:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_database_history

20210331:14:28:48:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_disk_history

20210331:14:28:48:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_disk_history

20210331:14:28:48:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_pg_log_history

20210331:14:28:48:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_pg_log_history

20210331:14:28:48:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_plannode_history

20210331:14:28:49:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_plannode_history

20210331:14:28:49:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_queries_history

20210331:14:28:49:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_queries_history

20210331:14:28:49:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_system_history

20210331:14:28:49:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_system_history

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_table_info_history

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_table_info_history

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_wlm_log_history

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_wlm_log_history

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics._gpcc_pg_log_meta

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics._gpcc_pg_log_meta

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-EXPANSION COMPLETED SUCCESSFULLY

20210331:14:28:50:016891 gpexpand:lgh1:gpadmin-[INFO]:-Exiting...

3.5、移除扩容schema

执行命令:gpexpand -c

[gpadmin@lgh1 conf]$ gpexpand -c

20210331:14:32:01:017244 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:14:32:01:017244 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:14:32:01:017244 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

Do you want to dump the gpexpand.status_detail table to file? Yy|Nn (default=Y):

> y

20210331:14:32:05:017244 gpexpand:lgh1:gpadmin-[INFO]:-Dumping gpexpand.status_detail to /apps/data1/master/gpseg-1/gpexpand.status_detail

20210331:14:32:05:017244 gpexpand:lgh1:gpadmin-[INFO]:-Removing gpexpand schema

20210331:14:32:05:017244 gpexpand:lgh1:gpadmin-[INFO]:-Cleanup Finished. exiting...

这里为止纵向扩容就完成了,不出错都是傻瓜式的操作,出错多看日志,也不难。

注意:如果在扩容的时候失败或者出错了,记得回滚:gpexpand -r ,还有就是扩容成功,数据重分布成功后记得使用analyze或者analyzedb进行分析

四、横向扩容

4.1、安装前准备

参考:greenplum6.14、GPCC6.4安装详解 第一部分

4.2、基本配置和规划

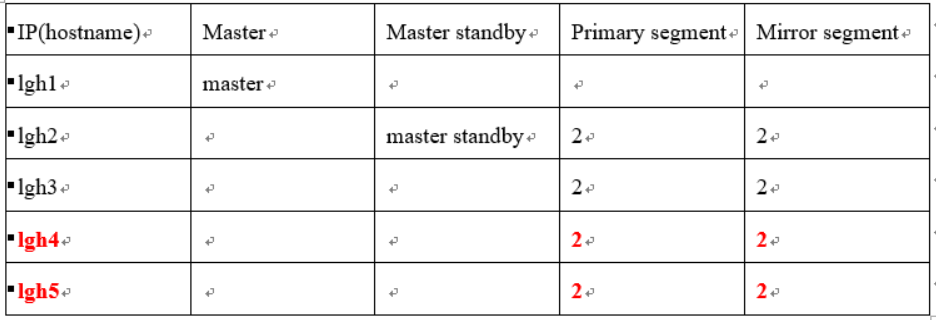

规划,新增两台机器(红色粗体部分),由于配置了mirror,所以至少要新增两台机器扩容,不然会报错:

在新的两个机器进行如下操作:

#创建gp用户和用户组

groupdel gpadmin

userdel gpadmin

groupadd gpadmin

useradd -g gpadmin gpadmin

#创建segment目录

mkdir /apps/data1/primary

mkdir /apps/data2/primary

mkdir /apps/data1/mirror

mkdir /apps/data2/mirror

chown -R gpamdin:gpamdin /apps/data*

#拷贝master主机的安装目录

cd /usr/local && tar -cf /usr/local/gp6.tar greenplum-db-6.14.1 #master主机操作

scp gp6.tar root@lgh4:/usr/local/ #master主机操作

scp gp6.tar root@lgh5:/usr/local/ #master主机操作

cd /usr/local

tar -xf gp6.tar

ln -s greenplum-db-6.14.1 greenplum-db

chown -R gpadmin:gpadmin greenplum-db*

#ssh免密配置

ssh-copy-id lgh4 #master主机操作

ssh-copy-id lgh5 #master主机操作

修改seg_hosts,all_hosts文件,添加新主机名进去:

[gpadmin@mvxl53201 conf]$ cat seg_hosts

lgh2

lgh3

lgh4 #new

lgh5 #new

[gpadmin@mvxl53201 conf]$ cat all_hosts

lgh1

lgh2

lgh3

lgh4 #new

lgh5 #new

执行:gpssh-exkeys -f all_hosts

[gpadmin@lgh1 conf]$ gpssh-exkeys -f all_hosts

[STEP 1 of 5] create local ID and authorize on local host

... /apps/gpadmin/.ssh/id_rsa file exists ... key generation skipped

[STEP 2 of 5] keyscan all hosts and update known_hosts file

[STEP 3 of 5] retrieving credentials from remote hosts

... send to lgh2

... send to lgh3

... send to lgh4

... send to lgh5

[STEP 4 of 5] determine common authentication file content

[STEP 5 of 5] copy authentication files to all remote hosts

... finished key exchange with lgh2

... finished key exchange with lgh3

... finished key exchange with lgh4

... finished key exchange with lgh5

[INFO] completed successfully

4.3、创建初始化文件

执行:gpexpand -f seg_hosts

[gpadmin@lgh1 conf]$ gpexpand -f seg_hosts

20210331:15:00:52:020105 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:15:00:52:020105 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:15:00:52:020105 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

System Expansion is used to add segments to an existing GPDB array.

gpexpand did not detect a System Expansion that is in progress.

Before initiating a System Expansion, you need to provision and burn-in

the new hardware. Please be sure to run gpcheckperf to make sure the

new hardware is working properly.

Please refer to the Admin Guide for more information.

Would you like to initiate a new System Expansion Yy|Nn (default=N):

> y

You must now specify a mirroring strategy for the new hosts. Spread mirroring places

a given hosts mirrored segments each on a separate host. You must be

adding more hosts than the number of segments per host to use this.

Grouped mirroring places all of a given hosts segments on a single

mirrored host. You must be adding at least 2 hosts in order to use this.

What type of mirroring strategy would you like?

spread|grouped (default=grouped):

>

By default, new hosts are configured with the same number of primary

segments as existing hosts. Optionally, you can increase the number

of segments per host.

For example, if existing hosts have two primary segments, entering a value

of 2 will initialize two additional segments on existing hosts, and four

segments on new hosts. In addition, mirror segments will be added for

these new primary segments if mirroring is enabled.

How many new primary segments per host do you want to add? (default=0):

>

Generating configuration file...

20210331:15:00:59:020105 gpexpand:lgh1:gpadmin-[INFO]:-Generating input file...

Input configuration file was written to 'gpexpand_inputfile_20210331_150059'.

Please review the file and make sure that it is correct then re-run

with: gpexpand -i gpexpand_inputfile_20210331_150059 #生成文件

20210331:15:00:59:020105 gpexpand:lgh1:gpadmin-[INFO]:-Exiting...

查看初始化的文件:

[gpadmin@lgh1 conf]$ cat gpexpand_inputfile_20210331_150059

lgh5|lgh5|6000|/apps/data1/primary/gpseg4|11|4|p

lgh4|lgh4|7000|/apps/data1/mirror/gpseg4|17|4|m

lgh5|lgh5|6001|/apps/data2/primary/gpseg5|12|5|p

lgh4|lgh4|7001|/apps/data2/mirror/gpseg5|18|5|m

lgh4|lgh4|6000|/apps/data1/primary/gpseg6|13|6|p

lgh5|lgh5|7000|/apps/data1/mirror/gpseg6|15|6|m

lgh4|lgh4|6001|/apps/data2/primary/gpseg7|14|7|p

lgh5|lgh5|7001|/apps/data2/mirror/gpseg7|16|7|m

4.4、初始化Segment并且创建扩容schema

执行:gpexpand -i gpexpand_inputfile_20210331_150059

[gpadmin@lgh1 conf]$ gpexpand -i gpexpand_inputfile_20210331_150059

20210331:15:04:06:020454 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:15:04:06:020454 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:15:04:06:020454 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

20210331:15:04:06:020454 gpexpand:lgh1:gpadmin-[INFO]:-Heap checksum setting consistent across cluster

20210331:15:04:06:020454 gpexpand:lgh1:gpadmin-[INFO]:-Syncing Greenplum Database extensions

20210331:15:04:07:020454 gpexpand:lgh1:gpadmin-[INFO]:-The packages on lgh5 are consistent.

20210331:15:04:08:020454 gpexpand:lgh1:gpadmin-[INFO]:-The packages on lgh4 are consistent.

20210331:15:04:08:020454 gpexpand:lgh1:gpadmin-[INFO]:-Locking catalog

20210331:15:04:08:020454 gpexpand:lgh1:gpadmin-[INFO]:-Locked catalog

20210331:15:04:09:020454 gpexpand:lgh1:gpadmin-[INFO]:-Creating segment template

20210331:15:04:09:020454 gpexpand:lgh1:gpadmin-[INFO]:-Copying postgresql.conf from existing segment into template

20210331:15:04:09:020454 gpexpand:lgh1:gpadmin-[INFO]:-Copying pg_hba.conf from existing segment into template

20210331:15:04:10:020454 gpexpand:lgh1:gpadmin-[INFO]:-Creating schema tar file

20210331:15:04:10:020454 gpexpand:lgh1:gpadmin-[INFO]:-Distributing template tar file to new hosts

20210331:15:04:11:020454 gpexpand:lgh1:gpadmin-[INFO]:-Configuring new segments (primary)

20210331:15:04:11:020454 gpexpand:lgh1:gpadmin-[INFO]:-{'lgh5': '/apps/data1/primary/gpseg4:6000:true:false:11:4::-1:,/apps/data2/primary/gpseg5:6001:true:false:12:5::-1:', 'lgh4': '/apps/data1/primary/gpseg6:6000:true:false:13:6::-1:,/apps/data2/primary/gpseg7:6001:true:false:14:7::-1:'}

20210331:15:04:17:020454 gpexpand:lgh1:gpadmin-[INFO]:-Cleaning up temporary template files

20210331:15:04:17:020454 gpexpand:lgh1:gpadmin-[INFO]:-Cleaning up databases in new segments.

20210331:15:04:19:020454 gpexpand:lgh1:gpadmin-[INFO]:-Unlocking catalog

20210331:15:04:19:020454 gpexpand:lgh1:gpadmin-[INFO]:-Unlocked catalog

20210331:15:04:19:020454 gpexpand:lgh1:gpadmin-[INFO]:-Creating expansion schema

20210331:15:04:19:020454 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database template1

20210331:15:04:19:020454 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database postgres

20210331:15:04:19:020454 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database gpebusiness

20210331:15:04:20:020454 gpexpand:lgh1:gpadmin-[INFO]:-Populating gpexpand.status_detail with data from database gpperfmon

20210331:15:04:20:020454 gpexpand:lgh1:gpadmin-[INFO]:-Starting new mirror segment synchronization

20210331:15:04:34:020454 gpexpand:lgh1:gpadmin-[INFO]:-************************************************

20210331:15:04:34:020454 gpexpand:lgh1:gpadmin-[INFO]:-Initialization of the system expansion complete.

20210331:15:04:34:020454 gpexpand:lgh1:gpadmin-[INFO]:-To begin table expansion onto the new segments

20210331:15:04:34:020454 gpexpand:lgh1:gpadmin-[INFO]:-rerun gpexpand

20210331:15:04:34:020454 gpexpand:lgh1:gpadmin-[INFO]:-************************************************

20210331:15:04:34:020454 gpexpand:lgh1:gpadmin-[INFO]:-Exiting...

4.5、重分布数据

执行:gpexpand -d 1:00:00

[gpadmin@lgh1 conf]$ gpexpand -d 1:00:00

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding postgres.gpcc_schema.pghba_lock

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding postgres.gpcc_schema.pghba_lock

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_export_log

20210331:15:06:46:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_export_log

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_schedule

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_schedule

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_wlm_rule

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_wlm_rule

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_table_info

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_table_info

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics._gpcc_pg_log_meta

20210331:15:06:47:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics._gpcc_pg_log_meta

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics._gpcc_plannode_history

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics._gpcc_plannode_history

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpcc_schema.pghba_lock

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpcc_schema.pghba_lock

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_alert_history

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_alert_history

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_database_history

20210331:15:06:48:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_database_history

20210331:15:06:49:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_disk_history

20210331:15:06:49:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_disk_history

20210331:15:06:49:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_pg_log_history

20210331:15:06:49:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_pg_log_history

20210331:15:06:50:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_plannode_history

20210331:15:06:50:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_plannode_history

20210331:15:06:50:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_queries_history

20210331:15:06:50:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_queries_history

20210331:15:06:50:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_system_history

20210331:15:06:51:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_system_history

20210331:15:06:51:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_table_info_history

20210331:15:06:51:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_table_info_history

20210331:15:06:51:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_wlm_log_history

20210331:15:06:51:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_wlm_log_history

20210331:15:06:52:021037 gpexpand:lgh1:gpadmin-[INFO]:-Expanding gpperfmon.gpmetrics.gpcc_scan_history

20210331:15:06:52:021037 gpexpand:lgh1:gpadmin-[INFO]:-Finished expanding gpperfmon.gpmetrics.gpcc_scan_history

20210331:15:06:56:021037 gpexpand:lgh1:gpadmin-[INFO]:-EXPANSION COMPLETED SUCCESSFULLY

20210331:15:06:56:021037 gpexpand:lgh1:gpadmin-[INFO]:-Exiting...

4.6、移除扩容schema

执行命令:gpexpand -c

[gpadmin@lgh1 conf]$ gpexpand -c

20210331:15:08:19:021264 gpexpand:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:15:08:19:021264 gpexpand:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:15:08:19:021264 gpexpand:lgh1:gpadmin-[INFO]:-Querying gpexpand schema for current expansion state

Do you want to dump the gpexpand.status_detail table to file? Yy|Nn (default=Y):

> y

20210331:15:08:21:021264 gpexpand:lgh1:gpadmin-[INFO]:-Dumping gpexpand.status_detail to /apps/data1/master/gpseg-1/gpexpand.status_detail

20210331:15:08:21:021264 gpexpand:lgh1:gpadmin-[INFO]:-Removing gpexpand schema

20210331:15:08:21:021264 gpexpand:lgh1:gpadmin-[INFO]:-Cleanup Finished. exiting...

查看扩容结果:gpstate

[gpadmin@lgh1 conf]$ gpstate

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-Starting gpstate with args:

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd'

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 9.4.24 (Greenplum Database 6.14.1 build commit:5ef30dd4c9878abadc0124e0761e4b988455a4bd) on x86_64-unknown-linux-gnu, compiled by gcc (GCC) 6.4.0, 64-bit compiled on Feb 22 2021 18:27:08'

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-Obtaining Segment details from master...

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-Gathering data from segments...

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-Greenplum instance status summary

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Master instance = Active

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Master standby = lgh2

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Standby master state = Standby host passive

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total segment instance count from metadata = 16

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Primary Segment Status

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total primary segments = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment valid (at master) = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total primary segment failures (at master) = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Mirror Segment Status

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segments = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment valid (at master) = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total mirror segment failures (at master) = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid files found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of postmaster.pid PIDs found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number of /tmp lock files found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes missing = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number postmaster processes found = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as primary segments = 0

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:- Total number mirror segments acting as mirror segments = 8

20210331:15:10:11:021437 gpstate:lgh1:gpadmin-[INFO]:-----------------------------------------------------

注意:如果在扩容的时候失败或者出错了,记得回滚:gpexpand -r ,还有就是扩容成功,数据重分布成功后记得使用analyze或者analyzedb进行分析

参考网址:

http://docs-cn.greenplum.org/v6/admin_guide/expand/expand-main.html

http://docs-cn.greenplum.org/v6/utility_guide/admin_utilities/gpexpand.html#topic1

出处:https://www.cnblogs.com/zsql/

如果您觉得阅读本文对您有帮助,请点击一下右下方的推荐按钮,您的推荐将是我写作的最大动力!

版权声明:本文为博主原创或转载文章,欢迎转载,但转载文章之后必须在文章页面明显位置注明出处,否则保留追究法律责任的权利。

浙公网安备 33010602011771号

浙公网安备 33010602011771号