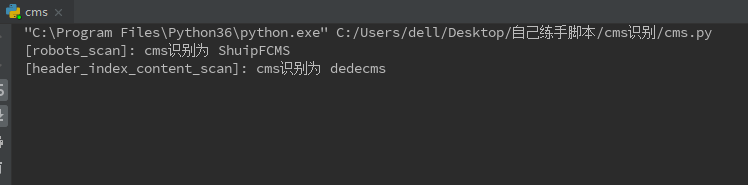

A Python 3 CMS identification class

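Drawing on other people's approaches, I summarized five main sources of fingerprints:

1. Characteristic content in the source of the homepage (index.php)

2. Static resource files in static directories, such as images

3. Characteristic text in robots.txt in the web root

4. The MD5 hash of favicon.ico in the web root

5. Characteristic content in the HTTP response headers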
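All five checks come down to substring or MD5 comparisons against fingerprint tables. The following is a minimal offline sketch, assuming the homepage, headers, robots.txt and static files have already been downloaded. `identify_cms` and the layouts of its rule tables are hypothetical illustrations (the scanner below, for example, stores its robots fingerprints as a plain list), and the rule values in the demo are placeholders, not real fingerprints.

```python
import hashlib


def identify_cms(body, headers, robots_txt, files,
                 body_rules, head_rules, robots_rules, md5_rules):
    """Return the first CMS whose fingerprint matches, or None.

    body:         homepage HTML (str)
    headers:      response headers (dict of str -> str)
    robots_txt:   contents of /robots.txt (str)
    files:        {path: raw bytes} for static files such as /favicon.ico
    body_rules, head_rules, robots_rules: {keyword: cms} (hypothetical layout)
    md5_rules:    [(path, cms, md5_hex), ...] (hypothetical layout)
    """
    # Check 1: keyword in the homepage source
    for keyword, cms in body_rules.items():
        if keyword in body:
            return cms
    # Checks 2 and 4: MD5 of a static file (image, favicon.ico, ...)
    for path, cms, digest in md5_rules:
        data = files.get(path)
        if data is not None and hashlib.md5(data).hexdigest() == digest:
            return cms
    # Check 3: keyword in robots.txt (e.g. a CMS-specific admin path)
    for keyword, cms in robots_rules.items():
        if keyword in robots_txt:
            return cms
    # Check 5: keyword in a response header name or value
    header_text = "\n".join(f"{k}: {v}" for k, v in headers.items())
    for keyword, cms in head_rules.items():
        if keyword in header_text:
            return cms
    return None


if __name__ == "__main__":
    # Placeholder rule for illustration only
    print(identify_cms("<meta name=generator content=ExampleCMS>", {}, "", {},
                       {"content=ExampleCMS": "ExampleCMS"}, {}, {}, []))
```

Checking in a fixed order and returning the first hit is a design choice for this sketch; the threaded scanner below instead runs the checks in parallel and lets each one report on its own.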

The code below is adapted from langzi's code:

```python
# coding=utf-8
import ast
import hashlib
import json
import os
from threading import Thread

import requests
import urllib3

# verify=False is used below, so silence the InsecureRequestWarning spam
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

# The fingerprint files are expected in the same directory as this script
BASE_DIR = os.path.dirname(os.path.abspath(__file__))


def load_literal(name):
    # Assumes body.txt, head.txt, robots.txt and cms_rule.txt contain plain
    # Python literals; literal_eval parses them without executing code (unlike eval)
    with open(os.path.join(BASE_DIR, name), 'r', encoding='utf-8') as f:
        return ast.literal_eval(f.read())


class CmsScan:
    def __init__(self, dest_url):
        self.headers = {
            'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
                          "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1"
        }
        # Normalize once here: the scan methods run in parallel threads, so they
        # must not modify self.dest_url (the original added/stripped '/' in place)
        self.dest_url = dest_url.rstrip('/')
        self.body_content = load_literal('body.txt')      # {keyword: cms}
        self.head_content = load_literal('head.txt')      # {keyword: cms}
        self.robots_content = load_literal('robots.txt')  # local list of keywords
        self.rule_content = load_literal('cms_rule.txt')  # ["path|cms|md5", ...]
        with open(os.path.join(BASE_DIR, 'data.json'), 'r', encoding='utf-8') as e:
            self.data_content = json.load(e)  # [{"url", "name", "re", "md5"}, ...]

    def fetch(self, path):
        # path is expected to start with '/'
        return requests.get(url=self.dest_url + path, headers=self.headers,
                            allow_redirects=False, timeout=3, verify=False)

    def header_index_content_scan(self):
        # Match keywords in the homepage source, then in the response headers
        try:
            resp = self.fetch('/')
        except requests.RequestException:
            return
        body = resp.content.decode('utf-8', errors='ignore')
        for keyword, cms in self.body_content.items():
            if keyword in body:
                print("[header_index_content_scan]: CMS identified as " + cms)
                return
        # resp.headers is a dict; search its string form so a keyword can
        # match either a header name or a header value
        headers_text = str(resp.headers)
        for keyword, cms in self.head_content.items():
            if keyword in headers_text:
                print("[scan01_headers]: CMS identified as " + cms)
                return

    def robot_scan(self):
        # Characteristic text in robots.txt in the web root
        try:
            resp = self.fetch('/robots.txt')
        except requests.RequestException:
            return
        text = resp.content.decode('utf-8', errors='ignore')
        for robots in self.robots_content:
            if robots in text:
                print("[robots_scan]: CMS identified as " + robots)
                return

    def sub_dir_content_scan(self):
        # Fetch CMS-specific files and match them by content or by MD5
        for sub_dir in self.data_content:
            try:
                resp = self.fetch(sub_dir['url'])
            except requests.RequestException:
                continue
            if resp.status_code != 200:
                continue
            if sub_dir['md5'] == '':
                # No MD5 recorded: substring check with the 're' field
                if sub_dir['re'] in resp.content.decode('utf-8', errors='ignore'):
                    print("[sub_dir_content_scan]: CMS identified as " + sub_dir['name'])
                    return
            elif hashlib.md5(resp.content).hexdigest() == sub_dir['md5']:
                # MD5 recorded: compare it with the hash of the file
                print("[sub_dir_content_scan]: CMS identified as " + sub_dir['name'])
                return

    def md5_scan(self):
        # MD5 fingerprints of files such as favicon.ico in the web root
        for rule in self.rule_content:
            cms_dir, cms_type, cms_md5 = rule.split('|')[:3]
            try:
                resp = self.fetch(cms_dir)
            except requests.RequestException:
                continue
            if hashlib.md5(resp.content).hexdigest() == cms_md5:
                print("[md5_scan]: CMS identified as " + cms_type)
                return


if __name__ == '__main__':
    a = CmsScan('http://news.eeeqi.cn/')
    # Run the four independent checks concurrently
    thread_list = [Thread(target=a.header_index_content_scan),
                   Thread(target=a.robot_scan),
                   Thread(target=a.sub_dir_content_scan),
                   Thread(target=a.md5_scan)]
    for t in thread_list:
        t.start()
    for t in thread_list:
        t.join()
```
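md5_scan() splits each entry of cms_rule.txt on `|` into a path, a CMS name and an MD5 digest. Below is a minimal helper for producing such entries, assuming you already have the raw bytes of a file (for example favicon.ico) taken from a site whose CMS is known. `make_rule` and `parse_rule` are hypothetical names, not part of the original code, and `ExampleCMS` is a placeholder.

```python
import hashlib


def make_rule(path, cms, content):
    """Build one 'path|cms|md5' entry in the layout md5_scan() splits on '|'."""
    return f"{path}|{cms}|{hashlib.md5(content).hexdigest()}"


def parse_rule(rule):
    """Inverse of make_rule(): return (path, cms, md5_hex)."""
    path, cms, digest = rule.split('|', 2)
    return path, cms, digest


if __name__ == '__main__':
    # Hypothetical input: in practice, content would be the downloaded bytes
    # of /favicon.ico from a site running a known CMS
    rule = make_rule('/favicon.ico', 'ExampleCMS', b'abc')
    print(rule)  # /favicon.ico|ExampleCMS|900150983cd24fb0d6963f7d28e17f72
    print(parse_rule(rule))
```

A collection of such strings, written out as a Python list literal, matches the list-of-strings layout that md5_scan() iterates over.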
