Setting up a Kubernetes cluster and deploying a highly available Ingress

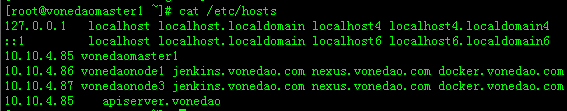

10.10.4.85 master

10.10.4.86 node

10.10.4.87 node

Configure the hostname and the hosts file on all three servers:

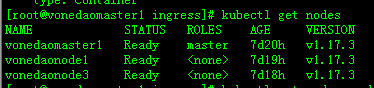
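The hosts entries can be added idempotently with a small helper. This is a minimal sketch. add_host_entry is a hypothetical name. The only mapping in the example is apiserver.vonedao to 10.10.4.85, and it is inferred from the kubeadm join command later in this guide. The real hostnames are the ones in the screenshot above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# The file defaults to /etc/hosts; a third argument selects another file.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if NAME already appears as a host field on a non-comment line.
  if [ -f "$file" ] && awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit found ? 0 : 1 }' "$file"; then
    return 0
  fi
  printf '%s    %s\n' "$ip" "$name" >> "$file"
}

# Run on all three servers (assumed mapping: the API server name points at the master):
# add_host_entry 10.10.4.85 apiserver.vonedao
```

Running the helper twice leaves a single entry, so it is safe to repeat on a node that was already configured.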

Kubernetes cluster setup

On 10.10.4.85 (the master node):

Install with kubeadm. Reference: Installing Kubernetes v1.17.x with kubeadm: https://kuboard.cn/install/install-k8s.html

#curl -sSL https://kuboard.cn/install-script/v1.17.x/init_master.sh | sh -s 1.17.3
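This is a minimal sketch. It assumes, per the kuboard v1.17.x guide, that init_master.sh reads the API server name and Pod subnet from the exported environment variables APISERVER_NAME and POD_SUBNET and aborts if they are unset. Verify that against the guide. The function name init_master is hypothetical. 10.100.0.1/16 is only an example Pod CIDR and must not overlap the physical network.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the kuboard init script. Assumption: the script reads
# APISERVER_NAME and POD_SUBNET from the environment, as in the kuboard v1.17.x guide.
init_master() {
  export APISERVER_NAME="$1"  # control-plane endpoint name, e.g. apiserver.vonedao
  export POD_SUBNET="$2"      # Pod CIDR; must not overlap any real network (example value only)
  local version="${3:-1.17.3}"
  if [ -z "$APISERVER_NAME" ] || [ -z "$POD_SUBNET" ]; then
    echo "usage: init_master APISERVER_NAME POD_SUBNET [VERSION]" >&2
    return 1
  fi
  # Same download-and-run pipeline as the command above, with the variables exported first.
  curl -sSL https://kuboard.cn/install-script/v1.17.x/init_master.sh | sh -s "$version"
}

# init_master apiserver.vonedao 10.100.0.1/16
```

APISERVER_NAME must also resolve to the master (for example through /etc/hosts) before the script runs.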

#kubectl get pod -n kube-system -o wide

Check that the kube-system pods have started. If a pod reports an error, inspect it, for example:

#kubectl describe pod -n kube-system calico-node-v6zdg
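Finding the failing pods can be scripted. This is a sketch with a hypothetical name, show_unhealthy_pods. It checks only the STATUS column, so a pod that is Running but not yet Ready is not reported. The real command `kubectl wait --for=condition=Ready pod --all -n kube-system --timeout=300s` covers that case instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list pods whose STATUS is not "Running" (e.g. ImagePullBackOff)
# and show the tail of "kubectl describe", where the Events section is printed.
show_unhealthy_pods() {
  local ns="${1:-kube-system}" pod
  kubectl get pod -n "$ns" --no-headers |
    awk '$3 != "Running" { print $1 }' |
    while read -r pod; do
      echo "== $pod"
      kubectl describe pod -n "$ns" "$pod" | tail -n 20
    done
}
```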

If the error is an image pull failure, pull the image manually, for example:

#docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1
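Instead of pulling images one by one, kubeadm can list every control-plane image for a version and image repository. This sketch assumes the Aliyun mirror used above and Kubernetes v1.17.3. pull_k8s_images is a hypothetical name. The Calico images, such as the one behind calico-node, are not in kubeadm's list and still have to be pulled separately.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull every image kubeadm needs for VERSION from the Aliyun mirror,
# reporting (but not stopping on) individual failures.
pull_k8s_images() {
  local version="${1:-v1.17.3}" image failed=0
  local repo="registry.cn-hangzhou.aliyuncs.com/google_containers"
  for image in $(kubeadm config images list --kubernetes-version "$version" --image-repository "$repo"); do
    if ! docker pull "$image"; then
      echo "failed: $image" >&2
      failed=1
    fi
  done
  return "$failed"
}
```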

Once all pods are running, package the images into one archive with docker save and copy it to each node:

#docker save -o k8s.tar <list of Kubernetes images>
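The image list for docker save does not have to be typed by hand. This sketch uses a hypothetical helper, save_k8s_images. Its repository filter (the Aliyun google_containers mirror plus calico/) is an assumption, so adjust it to what `docker images` shows on the master.

```shell
#!/usr/bin/env bash
# Hypothetical helper: save all local images from the assumed repositories into one archive.
save_k8s_images() {
  local out="${1:-k8s.tar}" images
  images=$(docker images --format '{{.Repository}}:{{.Tag}}' |
    grep -E '^(registry\.cn-hangzhou\.aliyuncs\.com/google_containers|calico)/' |
    grep -v ':<none>$')
  if [ -z "$images" ]; then
    echo "no matching images found" >&2
    return 1
  fi
  # Word splitting is intended here: one argument per image reference.
  docker save -o "$out" $images
}
```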

#scp k8s.tar 10.10.4.86:~/

#scp k8s.tar 10.10.4.87:~/

Get a token and the full join command (on the master):

#kubeadm token create --print-join-command

On 10.10.4.86 and 10.10.4.87 (the worker nodes):

Install with kubeadm. Reference: Initializing worker nodes: https://kuboard.cn/install/install-k8s.html#初始化-worker节点

Load the images:

#docker load < k8s.tar

Join the cluster, using the command printed by kubeadm token create on the master:

#kubeadm join apiserver.vonedao:6443 --token y4gc5t.2eafib5oebrl3rm6 --discovery-token-ca-cert-hash sha256:481641cfbb04c5330d3cf90377589d2e657153a5ec23cbe416131f30f60268ee
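A token from kubeadm token create is valid for 24 hours by default, so the join can also be driven from the master right after creating it. This sketch assumes key-based root SSH from the master to both workers, which this guide does not set up. It also assumes apiserver.vonedao already resolves to 10.10.4.85 on each worker. join_workers is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper, run on the master: create a fresh join command and execute it
# on each worker over SSH (assumes key-based root SSH to the workers).
join_workers() {
  local join_cmd ip rc=0
  join_cmd=$(kubeadm token create --print-join-command) || return 1
  for ip in "$@"; do
    echo "joining $ip"
    ssh "root@$ip" "$join_cmd" || { echo "join failed on $ip" >&2; rc=1; }
  done
  return "$rc"
}

# join_workers 10.10.4.86 10.10.4.87
```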

NFS setup

NFS provides shared data storage, for example for Jenkins.

Install the packages (on all machines):

#yum install -y nfs-utils rpcbind

On 10.10.4.85 (the NFS server), create /data if it does not exist and export it:

#vim /etc/exports

/data *(rw,sync,no_root_squash)

Also on 10.10.4.85, pin the NFS helper services (rquotad, lockd, mountd, statd) to fixed ports:

#vi /etc/sysconfig/nfs

RPCNFSDARGS=""

RPCMOUNTDOPTS=""

STATDARG=""

SMNOTIFYARGS=""

RPCIDMAPDARGS=""

RPCGSSDARGS=""

GSS_USE_PROXY="yes"

BLKMAPDARGS=""

RQUOTAD_PORT=4001

LOCKD_TCPPORT=4002

LOCKD_UDPPORT=4002

MOUNTD_PORT=4003

STATD_PORT=4004
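Pinning these ports matters mainly when a firewall sits between the NFS server and the nodes. rpcbind (111) and nfsd (2049) already use fixed ports, and the settings above fix the rest, which would otherwise be assigned dynamically. This sketch assumes CentOS 7 with firewalld running. open_nfs_ports is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: open rpcbind (111), nfsd (2049) and the ports pinned in
# /etc/sysconfig/nfs (4001-4004) in firewalld, for both TCP and UDP, then reload.
open_nfs_ports() {
  local port proto
  for port in 111 2049 4001-4004; do
    for proto in tcp udp; do
      firewall-cmd --permanent --add-port="${port}/${proto}" || return 1
    done
  done
  firewall-cmd --reload
}
```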

On 10.10.4.85, restart the services and enable them at boot:

#systemctl restart rpcbind && systemctl restart nfs

#systemctl enable rpcbind

#systemctl enable nfs

Verify the export from a worker node:

#showmount -e 10.10.4.85

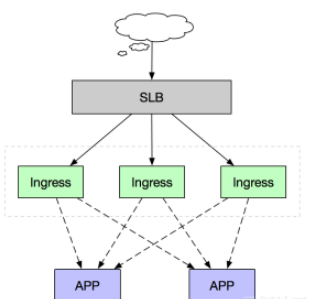
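showmount only shows that the export is visible. A short mount test on each node confirms that it can actually mount and write to the share. With no_root_squash, root writes as root. check_nfs_mount and the /mnt/nfs-test mount point are hypothetical names in this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: mount SERVER:DIR, write and delete a probe file, then unmount.
check_nfs_mount() {
  local server="$1" export_dir="$2" mnt="${3:-/mnt/nfs-test}" probe status
  mkdir -p "$mnt" || return 1
  mount -t nfs "${server}:${export_dir}" "$mnt" || return 1
  probe="$mnt/.nfs-write-test.$$"
  if touch "$probe" && rm -f "$probe"; then
    echo "ok: ${server}:${export_dir} is writable"
    status=0
  else
    echo "fail: cannot write to ${server}:${export_dir}" >&2
    status=1
  fi
  umount "$mnt"
  return "$status"
}

# check_nfs_mount 10.10.4.85 /data
```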

Deploying a highly available Ingress

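Several dedicated Ingress instances form a unified access layer that carries the cluster's incoming traffic, and Ingress nodes can be scaled out or in according to backend traffic. If the cluster is small at first, the Ingress service can also share nodes with business applications, but resource limits and isolation are recommended in that case.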

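That is the ideal. Here there are only a few servers, no SLB and no public network, so this is a practice setup of multiple Ingress controllers on an internal network.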

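To make Ingress highly available, run nginx-ingress-controller on several labeled nodes, add those nodes to a load balancer (LB), and point the relevant domain names at that LB. (Note: the number of labeled nodes should be greater than or equal to the number of Pod replicas, so that no two Pods run on the same node. Deploying the Ingress service on the master node is not recommended; label worker nodes instead.)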

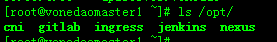

Note: all of the following steps are performed on 10.10.4.85. The base YAML files are kept under /opt/.

Label the nodes

Ingress is deployed on both worker nodes for redundancy. First, label the nodes on the master:

#kubectl label node vonedaonode1 ingresscontroller=true

#kubectl label node vonedaonode3 ingresscontroller=true

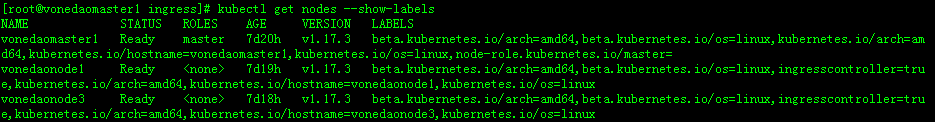
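The rule above (at least as many labeled nodes as replicas) can be checked before deploying. This sketch uses a hypothetical function, check_ingress_nodes, which takes the planned replica count as its argument.

```shell
#!/usr/bin/env bash
# Hypothetical check: the number of nodes labeled ingresscontroller=true must be at least
# the planned number of ingress controller replicas, so each replica can get its own node.
check_ingress_nodes() {
  local replicas="$1" count
  count=$(kubectl get nodes -l ingresscontroller=true -o name | wc -l | tr -d ' ')
  if [ "$count" -lt "$replicas" ]; then
    echo "only $count labeled node(s) for $replicas replica(s)" >&2
    return 1
  fi
  echo "ok: $count labeled node(s) for $replicas replica(s)"
}

# check_ingress_nodes 2
```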

View the labels:

#kubectl get nodes --show-labels

Remove a label:

#kubectl label node k8snode1 ingresscontroller-

Update a label:

#kubectl label node k8snode1 ingresscontroller=false --overwrite

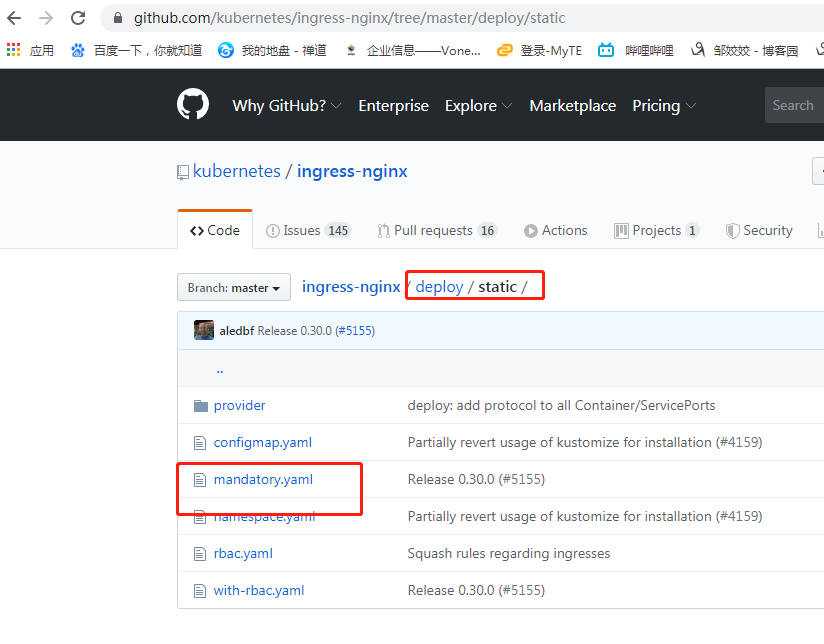

Download mandatory.yaml

Working directory: /opt/ingress

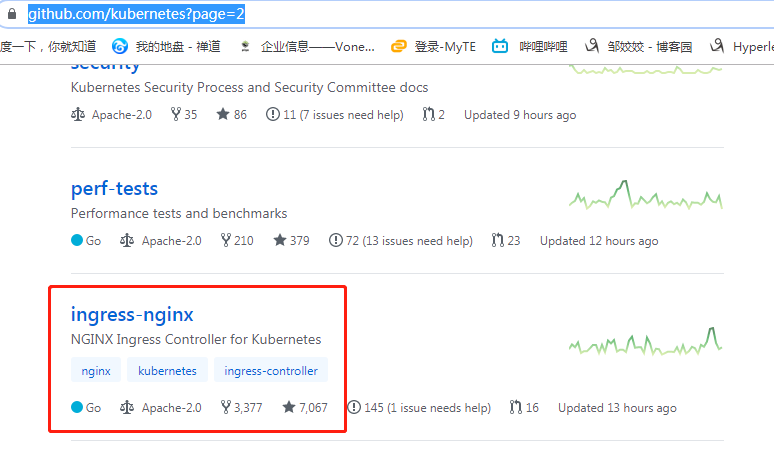

Download mandatory.yaml from the official kubernetes organization on GitHub (it is in the ingress-nginx repository):

https://github.com/kubernetes?page=2

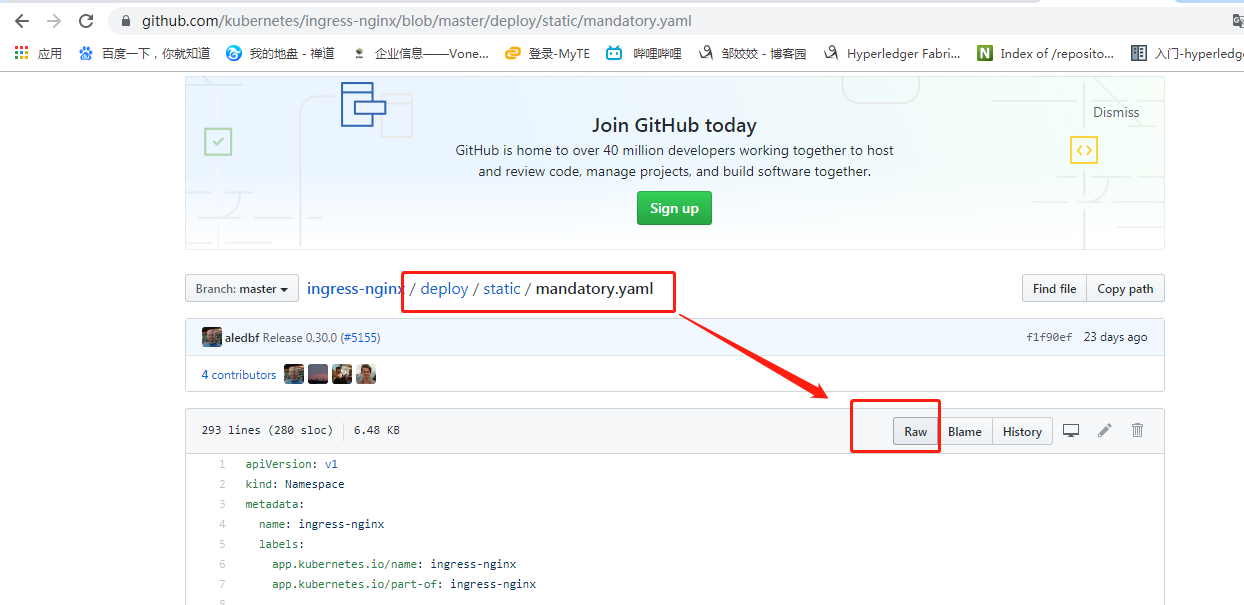

Open the file, then either copy its contents from the browser or fetch its raw URL with wget.
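For the older ingress-nginx releases (0.30.0 and earlier, as far as I know), mandatory.yaml sits under deploy/static/ in the kubernetes/ingress-nginx repository, so it can be fetched directly. This sketch assumes version 0.30.0, because the guide does not say which release was used. It also assumes the nginx-<version> tag naming. fetch_mandatory is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: download mandatory.yaml for one ingress-nginx release into DIR.
# Assumes the release still ships deploy/static/mandatory.yaml (0.30.0 and earlier).
fetch_mandatory() {
  local version="${1:-0.30.0}" dir="${2:-/opt/ingress}"
  local url="https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-${version}/deploy/static/mandatory.yaml"
  mkdir -p "$dir" || return 1
  wget -O "$dir/mandatory.yaml" "$url"
}

# fetch_mandatory 0.30.0 /opt/ingress
```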

Modify mandatory.yaml

apiVersion