EFK (Elasticsearch + Fluentd + Kibana) Log Analysis System

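EFK is not a single piece of software but a complete solution. The name is an acronym of three open-source projects: Elasticsearch, Fluentd, and Kibana. Elasticsearch stores and analyzes the logs, Fluentd collects them, and Kibana provides the web interface. The three components work well together and cover many use cases efficiently, which has made EFK one of the mainstream log-analysis stacks.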

In this article, Fluentd tails log files in real time, ships the entries to Elasticsearch, and Kibana displays them.

Deploying EFK with Docker

Docker images

$ docker pull elasticsearch:7.10.1

$ docker pull kibana:7.10.1

$ docker pull fluent/fluentd:v1.12-debian-armhf-1

Create the data directories used for persistence

$ mkdir -p elasticsearch/data kibana/data fluentd/conf

$ chmod 777 elasticsearch/data kibana/data fluentd/conf

Directory layout

├── docker-compose-ek.yml
├── docker-compose-f.yml
├── elasticsearch
│   └── data
├── fluentd
│   ├── conf
│   │   └── fluent.conf
│   └── Dockerfile
├── kibana
│   ├── data
│   │   └── uuid
│   └── kibana.yml

Raise vm.max_map_count on the host

$ vim /etc/sysctl.conf

vm.max_map_count = 2621440

$ sysctl -p
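Elastic's Docker documentation asks for a vm.max_map_count of at least 262144, so the value above leaves plenty of headroom. The following is a minimal Python sketch for checking the setting. The function names are illustrative, and reading /proc/sys/vm/max_map_count assumes a Linux host.

```python
from pathlib import Path

# Minimum value recommended in Elastic's Docker documentation.
MIN_MAX_MAP_COUNT = 262144


def parse_sysctl_line(line: str) -> int:
    """Return the integer from a line such as 'vm.max_map_count = 2621440'."""
    _key, _sep, value = line.partition("=")
    return int(value.strip())


def is_sufficient(value: int, minimum: int = MIN_MAX_MAP_COUNT) -> bool:
    """True when the setting meets the minimum Elasticsearch expects."""
    return value >= minimum


def current_max_map_count(proc_path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the live kernel value (Linux only)."""
    return int(Path(proc_path).read_text().strip())


if __name__ == "__main__":
    configured = parse_sysctl_line("vm.max_map_count = 2621440")
    print("configured value ok:", is_sufficient(configured))
    try:
        print("live value ok:", is_sufficient(current_max_map_count()))
    except OSError:
        print("live value unavailable (not a Linux host?)")
```

Running the script after `sysctl -p` is a quick way to confirm that the kernel actually picked up the new value.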

Configure the log files Fluentd collects

$ cat fluentd/conf/fluent.conf

<source>

@type forward

port 24224 <--Fluentd's default listening port>

bind 0.0.0.0

</source>

<source>

@type tail

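path /usr/local/var/log/access.log <--absolute path of the log file to collect>

tag access.log <--tag that identifies this log; it must match the pattern of the match block below>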

refresh_interval 5

pos_file /usr/local/var/log/fluent/access-log.pos

<parse>

@type none

</parse>

</source>

<match access.log>

@type copy

<store>

@type elasticsearch

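host 192.168.1.11 <--Elasticsearch address>

port 22131 <--Elasticsearch port>

user elastic <--Elasticsearch username; optional, delete this line if Elasticsearch has no authentication configured>

password elastic <--Elasticsearch password; optional, delete this line if Elasticsearch has no authentication configured>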

logstash_format true

logstash_prefix celery

logstash_dateformat %Y%m%d

include_tag_key true

type_name access_log

tag_key @log_name

flush_interval 10s

suppress_type_name true

</store>

<store>

@type stdout

</store>

</match>

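// To collect several log files, copy the tail <source> block and the <match> block above for each file. The first <source> block, which sets up Fluentd's own forward input, does not need to be copied.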
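With logstash_format true, fluent-plugin-elasticsearch writes events to date-stamped indices built from logstash_prefix and logstash_dateformat. The sketch below mimics that naming to show which index names to expect. It assumes the plugin's default "-" separator and UTC-based dates, and the function name is hypothetical rather than part of the plugin.

```python
from datetime import datetime, timedelta, timezone


def logstash_index_name(prefix: str,
                        event_time: datetime,
                        dateformat: str = "%Y%m%d",
                        separator: str = "-") -> str:
    """Build an index name the way logstash_format is assumed to.

    event_time should be timezone-aware; it is converted to UTC first
    because the plugin is assumed to use UTC dates by default.
    """
    utc_time = event_time.astimezone(timezone.utc)
    return f"{prefix}{separator}{utc_time.strftime(dateformat)}"


if __name__ == "__main__":
    # An event logged at 02:00 on 6 January in UTC+8 is still 5 January in UTC.
    local_tz = timezone(timedelta(hours=8))
    event = datetime(2021, 1, 6, 2, 0, tzinfo=local_tz)
    print(logstash_index_name("celery", event))
```

Under these assumptions, the configuration above produces one index per day, named like celery-20210105, so an index pattern such as celery-* covers all of them.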

Kibana configuration file

$ vim ./kibana/kibana.yml

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

# server.basePath: "/efk"

elasticsearch.hosts: [ "http://elasticsearch:9200" ]

monitoring.ui.container.elasticsearch.enabled: true

i18n.locale: "zh-CN"

# elasticsearch.username: "kibana_system"

# elasticsearch.password: "kibana_system"

The official Fluentd Docker image does not include the fluent-plugin-elasticsearch gem, so we build a custom image

$ cat fluentd/Dockerfile

FROM fluent/fluentd:v1.12-debian-armhf-1

USER root

RUN ["gem", "install", "fluent-plugin-elasticsearch"]

#USER fluent

# Build the image

docker build -f fluentd/Dockerfile -t fluentd:v1.12-debian-armhf-1.1 .

Docker compose

$ cat docker-compose-f.yml

version: '2'
services:
  fluentd:
    image: fluentd:v1.12-debian-armhf-1.1
    container_name: fluentd
    mem_limit: 8G
    volumes:
      - ./fluentd/conf:/fluentd/etc # Fluentd configuration files
      - /usr/local/var/log:/usr/local/var/log # directory containing the logs to collect
    ports:
      - "22130:24224"
      - "22130:24224/udp"
    networks:
      - monitoring
networks:
  monitoring:
    driver: bridge

$ cat docker-compose-ek.yml

version: '2'
services:
  elasticsearch:
    image: elasticsearch:7.10.1
    container_name: elasticsearch
    mem_limit: 8G
    environment:
      - discovery.type=single-node
      - xpack.security.enabled=true
    volumes:
      - /etc/localtime:/etc/localtime
      - ./elasticsearch/data:/usr/share/elasticsearch/data # Elasticsearch data directory
    ports:
      - "22131:9200"
    networks:
      - monitoring
  kibana:
    image: kibana:7.10.1
    container_name: kibana
    mem_limit: 4G
    volumes:
      - ./kibana/kibana.yml:/usr/share/kibana/config/kibana.yml # Kibana configuration file
      - ./kibana/data:/usr/share/kibana/data # Kibana data directory
      - /etc/localtime:/etc/localtime
    links:
      - "elasticsearch"
    depends_on:
      - "elasticsearch"
    ports:
      - "22132:5601"
    networks:
      - monitoring
networks:
  monitoring:
    driver: bridge

Start the services

$ docker-compose -f docker-compose-f.yml up -d <--Fluentd>

$ docker-compose -f docker-compose-ek.yml up -d <--elasticsearch+kibana>
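Elasticsearch can take a while to start accepting connections after `up -d` returns. The sketch below polls its HTTP port until something answers. It assumes the published port 22131 from docker-compose-ek.yml, and it treats an HTTP error such as 401 as "up", because with xpack.security.enabled=true an unauthenticated request is rejected but still proves the server is listening. The helper names are illustrative.

```python
import http.client
import time
import urllib.error
import urllib.request


def es_base_url(host: str, port: int) -> str:
    """Build the base URL of the Elasticsearch HTTP endpoint."""
    return f"http://{host}:{port}"


def es_is_listening(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if any HTTP response comes back, even an error status."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered (for example 401 before credentials are set).
        return True
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, or a broken response: not ready yet.
        return False


def wait_for_es(base_url: str, attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll until Elasticsearch answers or the attempts run out."""
    for _ in range(attempts):
        if es_is_listening(base_url):
            return True
        time.sleep(delay)
    return False


if __name__ == "__main__":
    # Host and port are the example values used in this article.
    print("would poll:", es_base_url("192.168.1.11", 22131))
```

Calling `wait_for_es(es_base_url("192.168.1.11", 22131))` before the password setup step avoids running `elasticsearch-setup-passwords` against a node that is still booting.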

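After the services are up, it is recommended to set passwords for Elasticsearch's built-in users so that access requires authentication (the container is named elasticsearch because of container_name in docker-compose-ek.yml)

docker exec -it elasticsearch bash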

./bin/elasticsearch-setup-passwords interactive

--- Please confirm that you would like to continue [y/N]y

Enter password for [elastic]: <--enter the password for the elastic user>

Reenter password for [elastic]: <--enter the same password again>

Enter password for [apm_system]: <--enter the password for the apm_system user>

Reenter password for [apm_system]: <--enter the same password again>

Enter password for [kibana_system]: <--enter the password for the kibana_system user>

Reenter password for [kibana_system]: <--enter the same password again>

Enter password for [logstash_system]: <--enter the password for the logstash_system user>

Reenter password for [logstash_system]: <--enter the same password again>

Enter password for [beats_system]: <--enter the password for the beats_system user>

Reenter password for [beats_system]: <--enter the same password again>

Enter password for [remote_monitoring_user]: <--enter the password for the remote_monitoring_user user>

Reenter password for [remote_monitoring_user]: <--enter the same password again>

Changed password for user [apm_system]

Changed password for user [kibana_system]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]

exit

Configure Fluentd's credentials for Elasticsearch

$ vim ./fluentd/conf/fluent.conf

user elastic <--the elastic username>

password elastic <--the password you set for the elastic user in Elasticsearch>

Configure Kibana's credentials for Elasticsearch

$ vim ./kibana/kibana.yml

elasticsearch.username: "kibana_system" <--the kibana_system username>

elasticsearch.password: "kibana_system" <--the password you set for the kibana_system user in Elasticsearch>

Restart the services

$ docker-compose -f docker-compose-f.yml down

$ docker-compose -f docker-compose-f.yml up -d

$ docker-compose -f docker-compose-ek.yml down

$ docker-compose -f docker-compose-ek.yml up -d
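Once everything has restarted, it can be useful to confirm that the credentials work before debugging Fluentd or Kibana. The sketch below builds an HTTP Basic request for Elasticsearch's `GET /_security/_authenticate` endpoint. The host, port, and the elastic / elastic credentials are the example values from this article, and the helper names are hypothetical.

```python
import base64
import json
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Encode credentials as an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def build_authenticate_request(base_url: str, user: str,
                               password: str) -> urllib.request.Request:
    """Prepare (but do not send) a request that asks 'who am I?'."""
    return urllib.request.Request(
        f"{base_url}/_security/_authenticate",
        headers={"Authorization": basic_auth_header(user, password)},
        method="GET",
    )


def whoami(base_url: str, user: str, password: str,
           timeout: float = 5.0) -> dict:
    """Send the request; raises urllib.error.HTTPError on bad credentials."""
    request = build_authenticate_request(base_url, user, password)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_authenticate_request("http://192.168.1.11:22131",
                                     "elastic", "elastic")
    print(req.get_method(), req.full_url)
```

Calling `whoami(...)` with the same user and password that were written into fluent.conf or kibana.yml shows quickly whether a login failure comes from the credentials or from something else.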

Configure an Nginx reverse proxy

$ vim nginx.conf

location /efk/ {

proxy_pass http://192.168.1.11:22132/;

}

// Configure Kibana

$ vim ./kibana/kibana.yml

server.basePath: "/efk" <--/efk is the URL path prefix>

Restart the services

$ docker-compose -f docker-compose-ek.yml down

$ docker-compose -f docker-compose-ek.yml up -d
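The Nginx location and Kibana's server.basePath have to agree, and a simplified model makes the interaction easier to see. Because proxy_pass ends with a URI ("/"), Nginx replaces the matched /efk/ prefix with that URI before forwarding, while server.basePath makes Kibana prepend /efk to the links it generates. The two functions below only model this string rewriting; they are illustrative helpers, not Nginx or Kibana APIs.

```python
def upstream_path(request_path: str,
                  location: str = "/efk/",
                  proxy_uri: str = "/") -> str:
    """Model how Nginx rewrites the path when proxy_pass carries a URI.

    The part of the request path that matched the location prefix is
    replaced by the URI given in proxy_pass.
    """
    if not request_path.startswith(location):
        raise ValueError(f"{request_path!r} does not match location {location!r}")
    return proxy_uri + request_path[len(location):]


def public_path(kibana_path: str, base_path: str = "/efk") -> str:
    """Model how Kibana prefixes generated links with server.basePath."""
    return base_path + kibana_path


if __name__ == "__main__":
    # Browser -> Nginx -> Kibana
    print(upstream_path("/efk/app/home"))
    # Kibana -> link shown in the browser
    print(public_path("/app/home"))
```

If the location prefix and server.basePath differ, the links Kibana generates no longer map back onto the proxied location, which typically shows up as missing assets or redirect loops.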

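Open the Nginx domain in a browser to reach the Kibana page

- Log in with the Elasticsearch user configured above: elastic / elastic (use the password you set for the elastic user)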

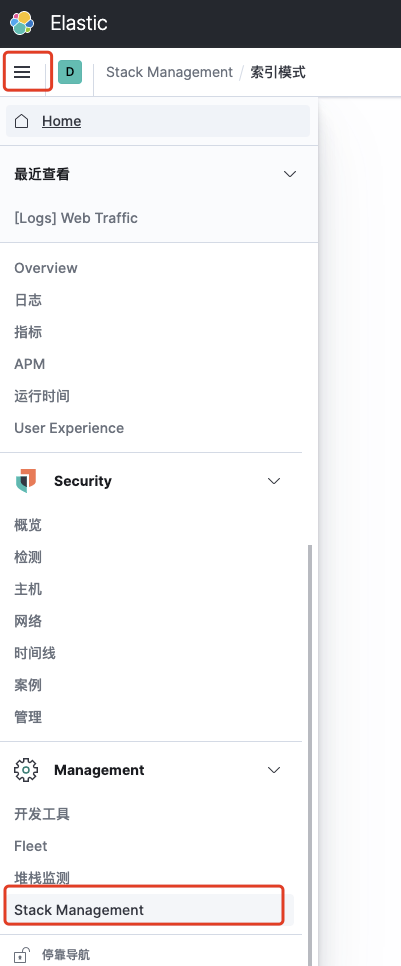

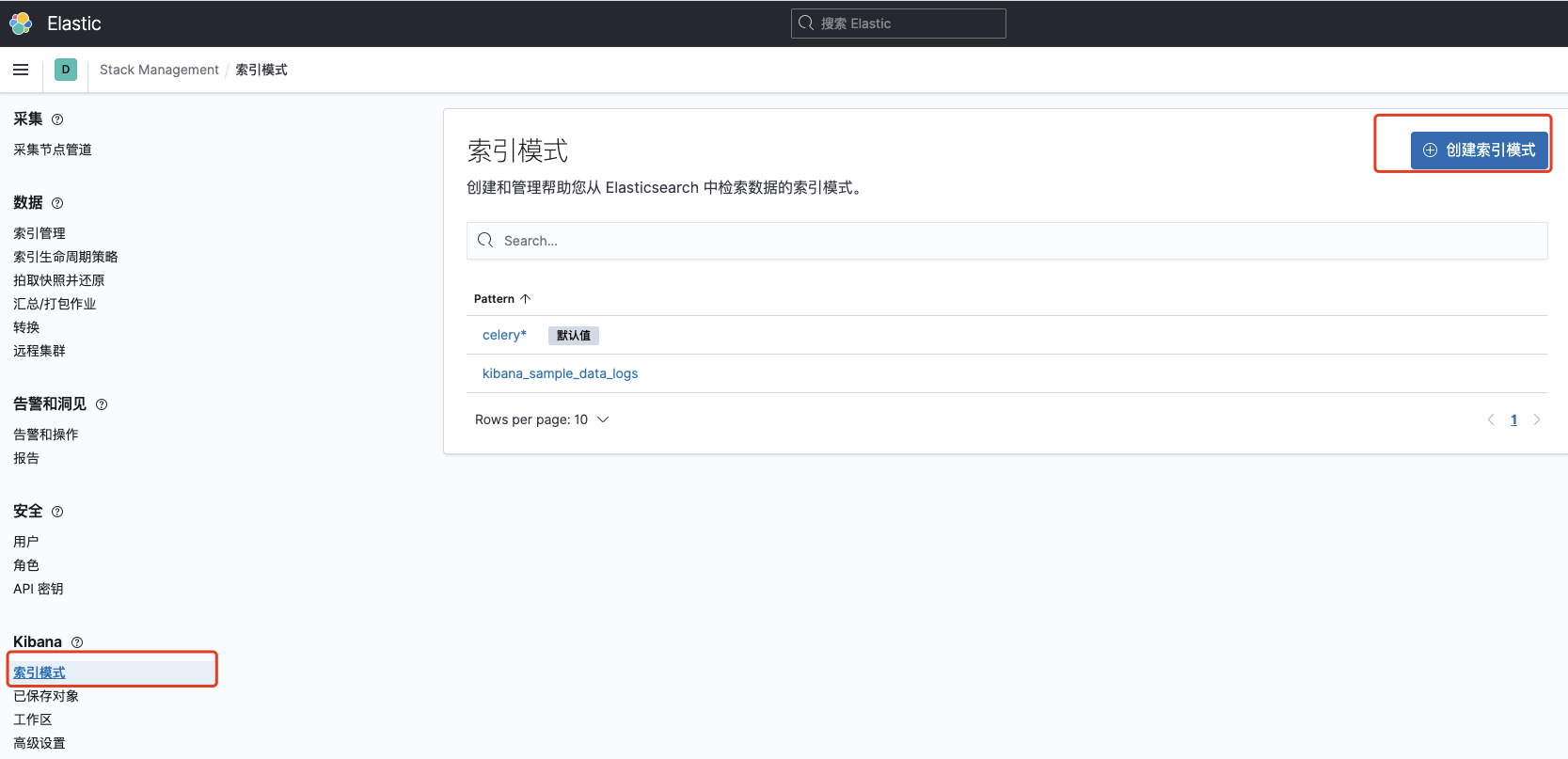

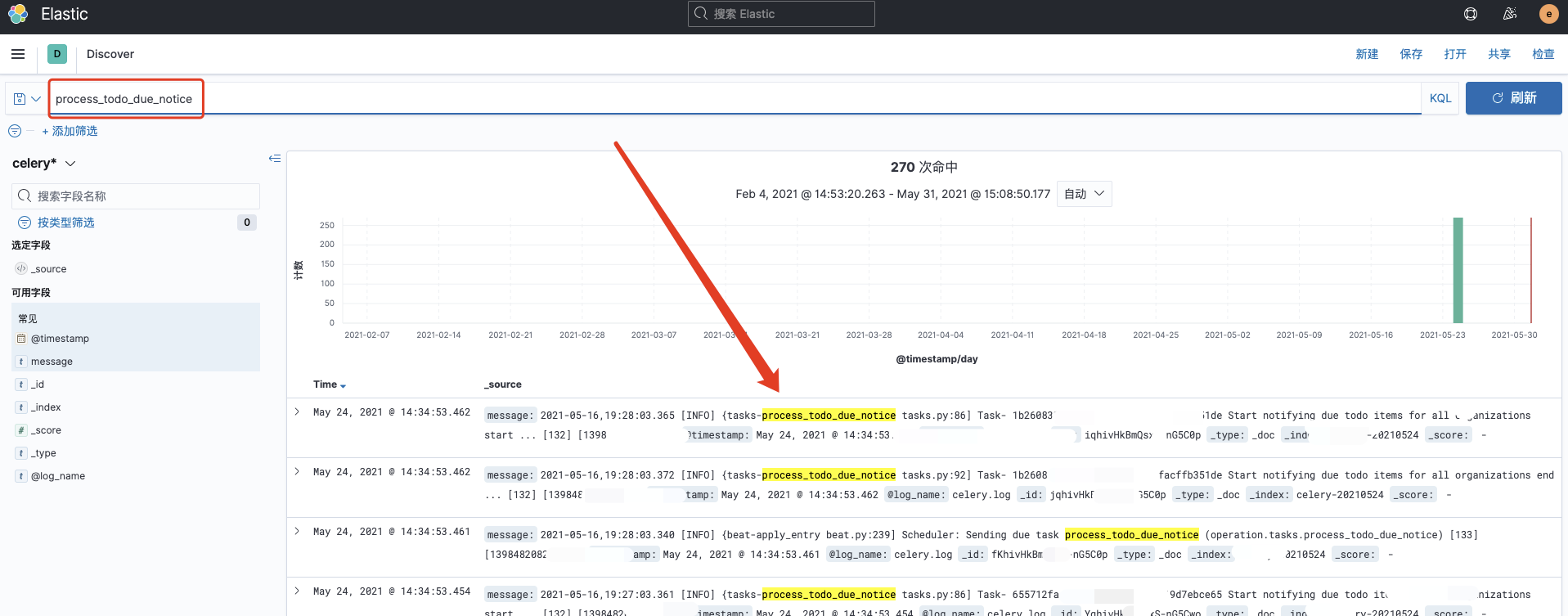

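After logging in, create an index pattern

Type a keyword and Kibana automatically matches the existing indices

In Index Management, type a keyword to see the index you just created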

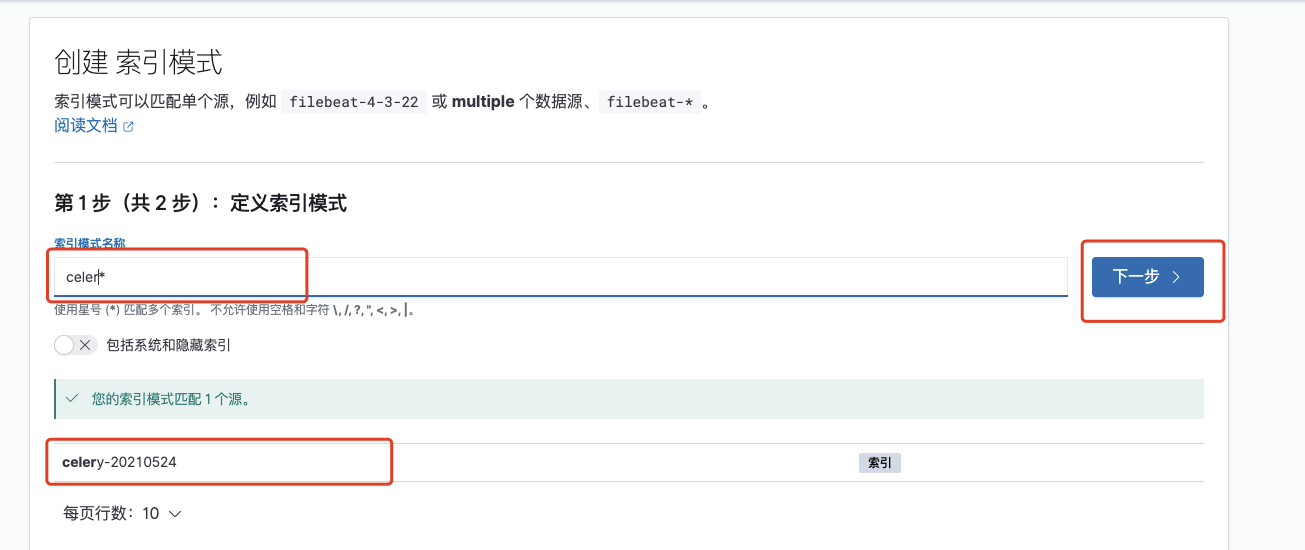

Home > Kibana (Visualize & Analyze) > Discover: type a keyword to filter the logs
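The same keyword filter can also be run directly against Elasticsearch, which helps when checking whether logs arrived at all. The sketch below builds a `_search` request with a match query. It assumes the index prefix celery from fluent.conf, that each log line sits in a `message` field (which is where the `none` parser is understood to put it), and the example credentials used in this article. The helper name is hypothetical.

```python
import base64
import json
import urllib.request


def build_search_request(base_url: str, index_pattern: str, keyword: str,
                         user: str, password: str,
                         size: int = 10) -> urllib.request.Request:
    """Prepare (but do not send) a keyword search over matching indices."""
    body = {
        "size": size,
        # Full-text match against the raw log line.
        "query": {"match": {"message": keyword}},
    }
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{base_url}/{index_pattern}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_search_request("http://192.168.1.11:22131", "celery-*",
                               "error", "elastic", "elastic")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` returns the JSON response, whose hits correspond to the documents Discover would show for the same keyword.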

- Miscellaneous

Changing the password of the elastic user, which is also the Kibana login password

// curl -H "Content-Type:application/json" -XPOST -u <elastic username>:<elastic password> 'http://192.168.1.11:22131/_security/user/elastic/_password' -d '{ "password" : "<new elastic password>" }'

Example (22131 is the host port mapped to Elasticsearch's 9200 in docker-compose-ek.yml): curl -H "Content-Type:application/json" -XPOST -u elastic:elastic 'http://192.168.1.11:22131/_security/user/elastic/_password' -d '{ "password" : "123456" }'
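For scripted password rotation, the same call can be made from Python with only the standard library. This is a sketch under a few assumptions: it uses the `/_security/user/<name>/_password` path (the current name of this endpoint in Elasticsearch 7.x), the host port 22131 published in docker-compose-ek.yml, and the example credentials from this article. The function name is hypothetical.

```python
import base64
import json
import urllib.request


def build_change_password_request(base_url: str, admin_user: str,
                                  admin_password: str, target_user: str,
                                  new_password: str) -> urllib.request.Request:
    """Prepare (but do not send) a change-password request."""
    token = base64.b64encode(
        f"{admin_user}:{admin_password}".encode("utf-8")
    ).decode("ascii")
    return urllib.request.Request(
        f"{base_url}/_security/user/{target_user}/_password",
        data=json.dumps({"password": new_password}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_change_password_request("http://192.168.1.11:22131",
                                        "elastic", "elastic",
                                        "elastic", "123456")
    print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` performs the change. After changing the elastic password, the password in fluent.conf has to be updated as well, since Fluentd connects as elastic.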
