DCN 实战 代码 数据集

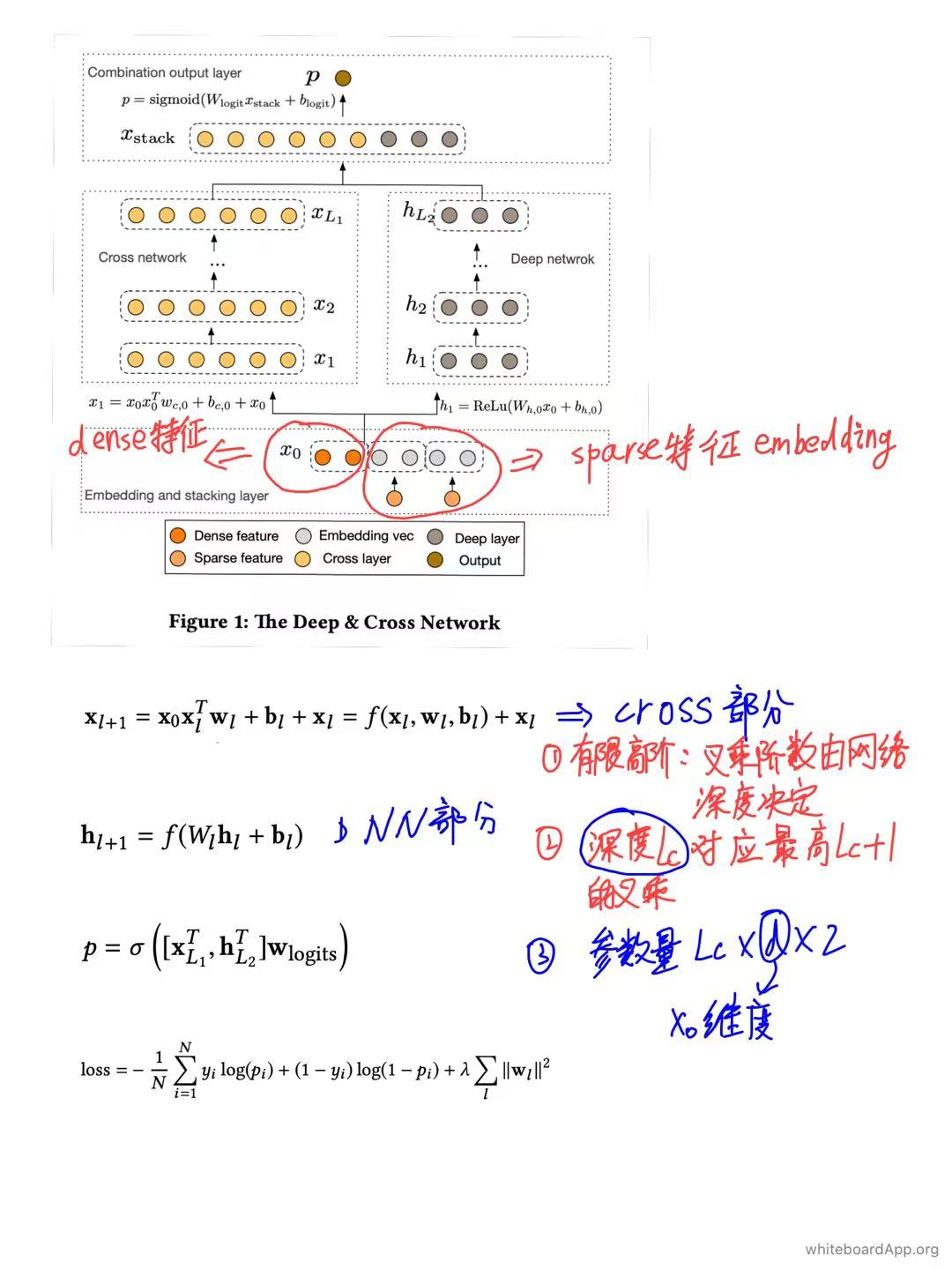

一、DCN 简介

之前已写过一篇文章介绍过DCN网络,感兴趣的小伙伴可以参考一下:推荐系统(五)DCN学习笔记

二、DCN网络实现

Criteo数据集下载,可关注公众号『后厂村搬砖工』,回复cirteo下载。代码参考链接。

# encoding=utf-8

import numpy as np

import pandas as pd

import tensorflow as tf

from tensorflow.keras.layers import *

from tensorflow.keras.models import Model

import tensorflow.keras.backend as K

import matplotlib.pyplot as plt

from sklearn.preprocessing import LabelEncoder

train_data = pd.read_csv('./data/criteo_train_sample.csv', sep='\t')

names = ["L"] + ["I{}".format(i) for i in range(1, 14)] + ["C{}".format(i) for i in range(1, 27)]

print("names length: {}".format(len(names)))

print("names: {}".format(names))

train_data.columns = names

print("train data shape: {}".format(train_data.shape))

print(train_data.head())

cols = train_data.columns.values

print("cols: {}".format(cols))

dense_feats = [f for f in cols if f[0] == "I"]

sparse_feats = [f for f in cols if f[0] == "C"]

print("dense feats: {}".format(dense_feats))

print("sparse feats: {}".format(sparse_feats))

def process_dense_feats(data, feats):

"""Process dense feats.

"""

d = data.copy()

d = d[feats].fillna(0.0)

for f in feats:

d[f] = d[f].apply(lambda x: np.log(x+1) if x > -1 else -1)

return d

def process_sparse_feats(data, feats):

"""Process sparse feats.

"""

d = data.copy()

d = d[feats].fillna("-1")

for f in feats:

# LabelEncoder是用来对分类型特征值进行编码,即对不连续的数值或文本进行编码

# fit(y): fit可看做一本空字典,y可看作要塞到字典中的词

# fit_transform(y):相当于先进行fit再进行transform,即把y塞到字典中去以后再进行transform得到索引值

label_encoder = LabelEncoder()

d[f] = label_encoder.fit_transform(d[f])

return d

data_dense = process_dense_feats(train_data, dense_feats)

data_sparse = process_sparse_feats(train_data, sparse_feats)

total_data = pd.concat([data_dense, data_sparse], axis=1)

print(data_dense.head(5))

print(data_sparse.head(5))

print(total_data.head(5))

total_data['label'] = train_data['L']

print(total_data.head(5))

# ========================

# 构造dense特征的输入

# ========================

dense_inputs = []

for f in dense_feats:

_input = Input([1], name=f)

dense_inputs.append(_input)

# 将输入拼接到一起,方便连接 Dense 层

concat_dense_inputs = Concatenate(axis=1)(dense_inputs) # ?, 13

# ========================

# 构造sparse特征输入

# ========================

sparse_inputs = []

for f in sparse_feats:

_input = Input([1], name=f)

sparse_inputs.append(_input)

# embedding size

k = 8

sparse_kd_embed = []

for i, _input in enumerate(sparse_inputs):

f = sparse_feats[i]

# 统计某特征取值个数

voc_size = total_data[f].nunique()

reg = tf.keras.regularizers.l2(0.7)

_embed = Embedding(voc_size, k, embeddings_regularizer=reg)(_input)

_embed = Flatten()(_embed)

sparse_kd_embed.append(_embed)

# 将sparse特征拼接在一起

concat_sparse_inputs = Concatenate(axis=1)(sparse_kd_embed)

# 拼接dense特征和sparse特征

embed_inputs = Concatenate(axis=1)([concat_sparse_inputs, concat_dense_inputs])

def cross_layer(x0, xl):

"""

单层 cross layer

x_{l+1} = x_0 x_l_T w_l + b_l + xl

"""

xl_dim = xl.shape[-1] # 221

w = tf.Variable(tf.random.truncated_normal(shape=(xl_dim,), stddev=0.01))

b = tf.Variable(tf.zeros(shape=(xl_dim,)))

# 下面的reshape操作相当于将列向量转换为行向量

xl_T = tf.reshape(xl, [-1, 1, xl_dim]) # (None, 1, 221)

# 行向量与列向量的乘积结果是一个标量

xlT_dot_wl = tf.tensordot(xl_T, w, axes=1) #(None, 1)

cross = x0 * xlT_dot_wl

return cross + b + xl

def build_cross_layer(x0, num_layer=3):

"""

多层 cross layer

"""

x1 = x0

for i in range(num_layer):

x1 = cross_layer(x0, x1)

return x1

# cross 部分

cross_layer_output = build_cross_layer(embed_inputs, 3)

# DNN 部分

fc_layer = Dropout(0.5)(Dense(128, activation='relu')(embed_inputs))

fc_layer = Dropout(0.3)(Dense(128, activation='relu')(fc_layer))

fc_layer_output = Dropout(0.1)(Dense(128, activation='relu')(fc_layer))

stack_layer = Concatenate()([cross_layer_output, fc_layer_output])

output_layer = Dense(1, activation='sigmoid', use_bias=True)(stack_layer)

model = Model(dense_inputs+sparse_inputs, output_layer)

model.compile(optimizer='adam',

loss='binary_crossentropy',

metrics=['binary_crossentropy',tf.keras.metrics.AUC(name='auc')])

# train_data = total_data.loc[:5000-1]

# valid_data = total_data.loc[5000:]

train_data = total_data.loc[:990000-1]

valid_data = total_data.loc[990000:]

train_dense_x = [train_data[f].values for f in dense_feats]

train_sparse_x = [train_data[f].values for f in sparse_feats]

train_label = [train_data['label'].values]

val_dense_x = [valid_data[f].values for f in dense_feats]

val_sparse_x = [valid_data[f].values for f in sparse_feats]

val_label = [valid_data['label'].values]

model.fit(

train_dense_x + train_sparse_x,

train_label,

epochs=5,

batch_size=128,

validation_data=(val_dense_x + val_sparse_x, val_label),

)

浙公网安备 33010602011771号

浙公网安备 33010602011771号