Environment: CentOS 7.6

Kernel version: 3.10.0-957.el7.x86_64 (upgrading the kernel is optional)

1. Create the virtual machines in VirtualBox

The centos7-kub01 virtual machine:

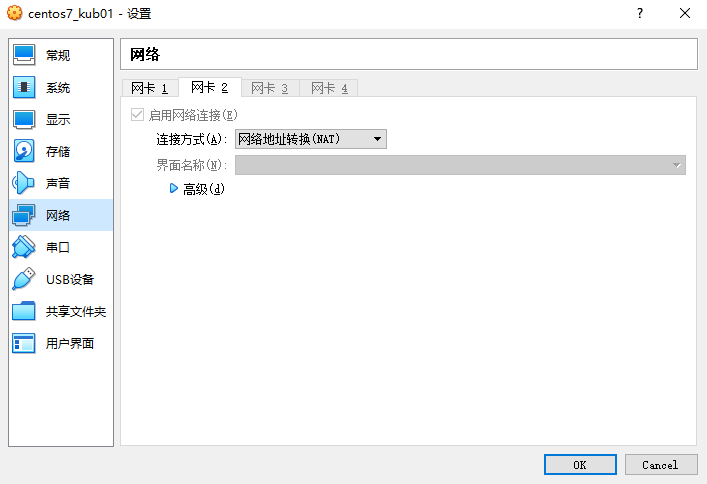

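Adapter 1 uses the host-only network. It carries traffic between the Kubernetes nodes and also lets the laptop (the host) connect to the virtual machines.

Adapter 2 uses network address translation (NAT). It provides internet access for downloading images.

The other virtual machines use a similar adapter configuration.

2. Install CentOS 7 (do this on your own)

To be added.

3. Upgrade the kernel (optional)

To be added.

4. Install Docker (tutorials are available online)

To be added.

5. Install kubeadm

To be added.

For installing kubeadm, see: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

6. Install Kubernetes with kubeadm

First confirm the version of kubeadm that is installed: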

[root@kub01 ~]# yum list installed|grep kube

Failed to set locale, defaulting to C

Repodata is over 2 weeks old. Install yum-cron? Or run: yum makecache fast

cri-tools.x86_64 1.13.0-0 @kubernetes

kubeadm.x86_64 1.17.0-0 @kubernetes

kubectl.x86_64 1.17.0-0 @kubernetes

kubelet.x86_64 1.17.0-0 @kubernetes

kubernetes-cni.x86_64 0.7.5-0 @kubernetes
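The version check above can also be scripted. The following is only a sketch: `kube_versions_match` is a hypothetical helper name, and it assumes the `yum list installed` output format shown above (package name in the first column, version in the second).

```shell
#!/bin/sh
# Hypothetical helper: reads `yum list installed` output on stdin, prints
# the kubeadm, kubectl and kubelet lines it finds, and fails unless all
# three packages are present with one and the same version.
kube_versions_match() {
    awk '
        $1 ~ /^(kubeadm|kubectl|kubelet)\./ {
            print $1, $2
            seen[$2] = 1
            count++
        }
        END {
            n = 0
            for (v in seen) n++
            # Exit status 0 only if three packages share a single version.
            exit !(count == 3 && n == 1)
        }
    '
}

# Usage on a live system (assumes yum is available):
#   yum list installed | kube_versions_match && echo "versions match"
```

A mismatch between kubeadm and kubelet is worth catching before running kubeadm init, which is why the helper returns a non-zero status in that case.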

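Download the required images in advance. This is very important: from mainland China, pulls from k8s.gcr.io time out unless you have your own way around that. Version v1.17 needs the following images: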

k8s.gcr.io/kube-apiserver:v1.17.0

k8s.gcr.io/kube-controller-manager:v1.17.0

k8s.gcr.io/kube-scheduler:v1.17.0

k8s.gcr.io/kube-proxy:v1.17.0

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

To work around this, first pull the images from a mirror inside China with docker pull, then retag them with docker tag (on the master node):

docker pull gcr.azk8s.cn/google_containers/kube-proxy:v1.17.0

docker pull gcr.azk8s.cn/google_containers/kube-apiserver:v1.17.0

docker pull gcr.azk8s.cn/google_containers/kube-controller-manager:v1.17.0

docker pull gcr.azk8s.cn/google_containers/kube-scheduler:v1.17.0

docker pull gcr.azk8s.cn/google_containers/etcd:3.4.3-0

docker pull gcr.azk8s.cn/google_containers/coredns:1.6.5

docker pull gcr.azk8s.cn/google_containers/pause:3.1

docker tag gcr.azk8s.cn/google_containers/kube-proxy:v1.17.0 k8s.gcr.io/kube-proxy:v1.17.0

docker tag gcr.azk8s.cn/google_containers/kube-apiserver:v1.17.0 k8s.gcr.io/kube-apiserver:v1.17.0

docker tag gcr.azk8s.cn/google_containers/kube-controller-manager:v1.17.0 k8s.gcr.io/kube-controller-manager:v1.17.0

docker tag gcr.azk8s.cn/google_containers/kube-scheduler:v1.17.0 k8s.gcr.io/kube-scheduler:v1.17.0

docker tag gcr.azk8s.cn/google_containers/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag gcr.azk8s.cn/google_containers/coredns:1.6.5 k8s.gcr.io/coredns:1.6.5

docker tag gcr.azk8s.cn/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

Worker nodes only need two of these images:

docker pull gcr.azk8s.cn/google_containers/kube-proxy:v1.17.0

docker pull gcr.azk8s.cn/google_containers/pause:3.1

docker tag gcr.azk8s.cn/google_containers/kube-proxy:v1.17.0 k8s.gcr.io/kube-proxy:v1.17.0

docker tag gcr.azk8s.cn/google_containers/pause:3.1 k8s.gcr.io/pause:3.1
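The pull-and-retag steps for the master and worker nodes follow one pattern, so they can be generated in a loop. This is a minimal sketch, not a definitive script: `print_mirror_commands` and the two `*_IMAGES` variables are hypothetical names, and the mirror host is simply the one used above, which may not be reachable from every network. The helper only prints the commands so they can be reviewed before anything is run.

```shell
#!/bin/sh
# Mirror and target registries as used in the text above.
MIRROR="gcr.azk8s.cn/google_containers"
TARGET="k8s.gcr.io"

# Hypothetical helper: for each image given as an argument, print the
# docker pull and docker tag commands instead of executing them.
print_mirror_commands() {
    for image in "$@"; do
        echo "docker pull $MIRROR/$image"
        echo "docker tag $MIRROR/$image $TARGET/$image"
    done
}

# Images needed on the master node for v1.17.0 (from the list above).
MASTER_IMAGES="kube-apiserver:v1.17.0 kube-controller-manager:v1.17.0 kube-scheduler:v1.17.0 kube-proxy:v1.17.0 pause:3.1 etcd:3.4.3-0 coredns:1.6.5"
# Worker nodes only need these two.
WORKER_IMAGES="kube-proxy:v1.17.0 pause:3.1"

# Word splitting of the unquoted variable is intentional here.
print_mirror_commands $WORKER_IMAGES
```

To actually run the generated commands on a node that has Docker installed, pipe them into a shell, for example `print_mirror_commands $MASTER_IMAGES | sh`.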

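If the mirror does not carry the version you need, you will have to find another source yourself.

Once the images are in place, install Kubernetes with kubeadm init: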

[root@kub01 ~]# kubeadm init --apiserver-advertise-address 192.168.56.107 --pod-network-cidr=10.244.0.0/16

Installation log (partially omitted):

[root@kub01 ~]# kubeadm init --apiserver-advertise-address 192.168.56.107 --pod-network-cidr=10.244.0.0/16

W0119 01:08:20.120396 20293 version.go:101] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

W0119 01:08:20.120526 20293 version.go:102] falling back to the local client version: v1.17.0

W0119 01:08:20.121144 20293 validation.go:28] Cannot validate kubelet config - no validator is available

W0119 01:08:20.121169 20293 validation.go:28] Cannot validate kube-proxy config - no validator is available

[init] Using Kubernetes version: v1.17.0

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

...

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.56.107:6443 --token 40yu1v.jb0rfmijbjwwp1mk \
--discovery-token-ca-cert-hash sha256:be3edcdd89b0a742a216a2a041633e57cbd4223e100edacc504785249499530c

Configure kubectl on the master node:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

If you are the root user, you can instead configure kubectl with the following command:

export KUBECONFIG=/etc/kubernetes/admin.conf

Worker nodes join the Kubernetes cluster with the following command (it is printed at the end of the installation log):

kubeadm join 192.168.56.107:6443 --token 40yu1v.jb0rfmijbjwwp1mk --discovery-token-ca-cert-hash sha256:be3edcdd89b0a742a216a2a041633e57cbd4223e100edacc504785249499530c
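If the kubeadm init output was saved to a file, the join command can be pulled out of it rather than copied by hand. The sketch below assumes the output format shown in the installation log above; `extract_join_command` is a hypothetical helper name. It joins the two-line command into a single line.

```shell
#!/bin/sh
# Hypothetical helper: reads saved `kubeadm init` output on stdin and prints
# the join command as a single line, with the trailing backslash removed.
extract_join_command() {
    awk '
        /^[ \t]*kubeadm join / { capture = 1; line = "" }
        capture {
            part = $0
            sub(/^[ \t]+/, "", part)            # drop leading indentation
            cont = sub(/[ \t]*\\$/, "", part)   # drop a trailing backslash
            line = (line == "" ? part : line " " part)
            if (!cont) { print line; capture = 0 }
        }
    '
}

# Demonstration on the tail of the log shown above.
extract_join_command <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.56.107:6443 --token 40yu1v.jb0rfmijbjwwp1mk \
    --discovery-token-ca-cert-hash sha256:be3edcdd89b0a742a216a2a041633e57cbd4223e100edacc504785249499530c
EOF
```

Bootstrap tokens expire (after 24 hours by default), so a saved command eventually stops working. In that case, running `kubeadm token create --print-join-command` on the master prints a fresh one.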

List all pods. The coredns pods stay in Pending:

[root@kub01 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6955765f44-k467p 0/1 Pending 0 6m55s

kube-system coredns-6955765f44-qrpv4 0/1 Pending 0 6m55s

kube-system etcd-kub01 1/1 Running 0 7m9s

kube-system kube-apiserver-kub01 1/1 Running 0 7m9s

kube-system kube-controller-manager-kub01 1/1 Running 0 7m9s

kube-system kube-proxy-mmv9x 1/1 Running 0 6m54s

kube-system kube-scheduler-kub01 1/1 Running 0 7m9s

List all nodes. They are all NotReady because no network plugin has been installed yet. Calico, flannel, or another plugin can be used.

[root@kub01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kub01 NotReady master 12m v1.17.0

kub02 NotReady <none> 41s v1.17.0
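With more than a couple of nodes it is easier to filter the node list than to read it. The following sketch assumes the default `kubectl get nodes` column layout shown above (name in the first column, status in the second); `not_ready_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get nodes` output on stdin and prints
# the name of every node whose STATUS column is not exactly "Ready".
# The header line (NR == 1) is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demonstration on the output shown above; prints kub01 and kub02.
not_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
kub01 NotReady master 12m v1.17.0
kub02 NotReady <none> 41s v1.17.0
EOF

# Usage on a live cluster (assumes kubectl is configured):
#   kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready.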

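The earlier kubeadm init used --pod-network-cidr=10.244.0.0/16, which matches the pod network configured in flannel's default manifest, so flannel is used here.

As before, first pull the image from a mirror inside China, then retag it with docker tag. Run this on both the master and the worker nodes:

docker pull quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64

docker tag quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

On the master node, install flannel with kubectl. First download the flannel manifest: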

wget https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

Edit kube-flannel.yml to add a startup argument. Because the virtual machines have two network adapters, flannel must be told which interface to use; otherwise DNS resolution may fail. First confirm the name of the interface on the host-only network (enp0s3 here):

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.56.107 netmask 255.255.255.0 broadcast 192.168.56.255

inet6 fe80::3270:547f:5509:7c24 prefixlen 64 scopeid 0x20<link>

ether 08:00:27:c6:e6:f1 txqueuelen 1000 (Ethernet)

RX packets 41219 bytes 3758617 (3.5 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 42864 bytes 21514707 (20.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

enp0s8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.3.15 netmask 255.255.255.0 broadcast 10.0.3.255

inet6 fe80::7653:3467:95b2:bf4b prefixlen 64 scopeid 0x20<link>

ether 08:00:27:3e:a2:7a txqueuelen 1000 (Ethernet)

RX packets 17180 bytes 20648597 (19.6 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3664 bytes 279458 (272.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
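The interface lookup can also be done by matching on the host-only subnet. This is a sketch that uses `ip -o -4 addr show` (from iproute2) instead of ifconfig, since its one-line-per-address output is easier to parse; `iface_for_prefix` is a hypothetical helper name, and the sample lines in the demonstration are illustrative, built from the addresses above.

```shell
#!/bin/sh
# Hypothetical helper: reads `ip -o -4 addr show` output on stdin and prints
# the name of each interface whose IPv4 address starts with the given prefix.
# In that output, field 2 is the interface name and field 4 is address/mask.
iface_for_prefix() {
    prefix=$1
    awk -v prefix="$prefix" '$3 == "inet" && index($4, prefix) == 1 { print $2 }'
}

# Demonstration on illustrative sample lines; prints enp0s3.
iface_for_prefix "192.168.56." <<'EOF'
2: enp0s3    inet 192.168.56.107/24 brd 192.168.56.255 scope global enp0s3
3: enp0s8    inet 10.0.3.15/24 brd 10.0.3.255 scope global enp0s8
EOF

# Usage on a live system:
#   ip -o -4 addr show | iface_for_prefix "192.168.56."
```

The trailing dot in the prefix keeps `192.168.56.` from also matching an address such as `192.168.560.x` in a stricter sense; it simply makes the match end on an octet boundary.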

The startup arguments are in the following part of the file; the added line is --iface=enp0s3:

containers:
- name: kube-flannel
  image: quay.io/coreos/flannel:v0.11.0-amd64
  command:
  - /opt/bin/flanneld
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface=enp0s3
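The same edit can be applied without opening an editor. This is a minimal sketch under the assumption that the manifest contains the `- --kube-subnet-mgr` argument line exactly as shown above; `add_flannel_iface` is a hypothetical helper name. It copies the manifest from stdin to stdout and adds the `--iface` argument, with matching indentation, after every such line.

```shell
#!/bin/sh
# Hypothetical helper: copies a flannel manifest from stdin to stdout and,
# after each "- --kube-subnet-mgr" line, inserts "- --iface=<name>" with
# the same leading indentation.
add_flannel_iface() {
    iface=$1
    awk -v iface="$iface" '
        { print }
        /^ *- --kube-subnet-mgr *$/ {
            match($0, /^ */)     # RLENGTH = number of leading spaces
            printf "%s- --iface=%s\n", substr($0, 1, RLENGTH), iface
        }
    '
}

# Usage (writes a new file and leaves the downloaded one untouched;
# the output file name is an arbitrary choice):
#   add_flannel_iface enp0s3 < kube-flannel.yml > kube-flannel-iface.yml
```

If the manifest defines several DaemonSets, the argument is added to each of them. The helper is not idempotent, so run it against the original download rather than an already edited file.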

Install flannel:

kubectl apply -f kube-flannel.yml

When it finishes, check the status of all pods and nodes:

[root@kub01 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6955765f44-k467p 1/1 Running 2 57m

kube-system coredns-6955765f44-qrpv4 1/1 Running 2 57m

kube-system etcd-kub01 1/1 Running 2 57m

kube-system kube-apiserver-kub01 1/1 Running 3 57m

kube-system kube-controller-manager-kub01 1/1 Running 2 57m

kube-system kube-flannel-ds-amd64-chb5z 1/1 Running 3 44m

kube-system kube-flannel-ds-amd64-dzwpr 1/1 Running 0 44m

kube-system kube-proxy-46jzs 1/1 Running 0 45m

kube-system kube-proxy-mmv9x 1/1 Running 3 57m

kube-system kube-scheduler-kub01 1/1 Running 2 57m

[root@kub01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kub01 Ready master 3h2m v1.17.0

kub02 Ready <none> 170m v1.17.0

The configuration is now complete.

For troubleshooting a kubeadm installation, see: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/troubleshooting-kubeadm/

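## Deploying ingress

Reference: https://docs.nginx.com/nginx-ingress-controller/installation/installation-with-manifests/

Troubleshooting:

1. During installation, coredns stayed in ContainerCreating. In my case this happened because I had added the node more than once and changed its hostname. The fix is to reset the node and delete the corresponding CNI configuration files, using the following commands:

kubeadm reset

rm -rf /var/lib/cni/ /var/lib/kubelet/* /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

reboot

Then join the cluster again: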

kubeadm join xxx

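2. The scheduler reports "1 node(s) had taints that the pod didn't tolerate":

By default, Kubernetes does not schedule ordinary pods on the master node. To allow it, remove the master taint:

kubectl taint nodes --all node-role.kubernetes.io/master-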
