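The most complete guide on the web to correctly downloading and installing the Scrapy crawler framework in Anaconda2 / Anaconda3 on Windows (offline and online methods) (illustrated)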

Without further ado, let's get straight to the good stuff!

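Reference post:

The most complete guide on the web to correctly downloading and installing OpenCV in Anaconda2 / Anaconda3 on Windows (offline and online methods) (illustrated)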

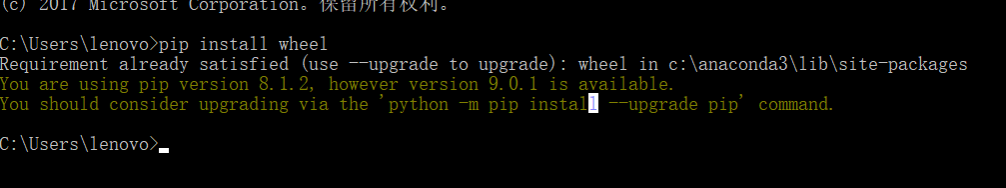

Step 1: First, upgrade pip when it prompts you to.
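A minimal sketch, assuming you want the upgrade to apply to the same interpreter that Anaconda runs: calling pip as `python -m pip` through `sys.executable` avoids upgrading some other pip that happens to come first on `PATH`. The helper names `build_pip_command` and `upgrade_pip` are hypothetical; by default the script only prints the command and runs nothing.

```python
import subprocess
import sys
from typing import List


def build_pip_command(*pip_args: str) -> List[str]:
    """Return a pip invocation bound to the current interpreter (hypothetical helper)."""
    # sys.executable is the python.exe running this script, e.g. C:\Anaconda3\python.exe
    return [sys.executable, "-m", "pip", *pip_args]


def upgrade_pip(dry_run: bool = True) -> List[str]:
    """Upgrade pip for this interpreter; with dry_run=True only print the command."""
    cmd = build_pip_command("install", "--upgrade", "pip")
    print(" ".join(cmd))
    if not dry_run:
        # check=True raises CalledProcessError if pip exits with a non-zero status
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    upgrade_pip(dry_run=True)
```

Pass `dry_run=False` once the printed command points at the Anaconda interpreter you expect.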

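Step 2: Download and install wheel

You can either download the file from the website first and install it offline, or install it online as shown above.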
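For the offline route you download the `.whl` file first and then point pip at the local file. The sketch below, assuming all wheels are saved in one download folder, lists the wheel files for a given project so you can pass the right one to `pip install`. The folder path and the helper `find_local_wheels` are hypothetical; the name normalization follows the wheel filename convention of writing `-` and `.` in project names as `_`.

```python
from pathlib import Path
from typing import List


def _normalize(name: str) -> str:
    # Compare project names case-insensitively, treating "-", "." and "_" as equal
    return name.lower().replace("-", "_").replace(".", "_")


def find_local_wheels(folder: str, project: str) -> List[Path]:
    """Return the .whl files in `folder` whose distribution name matches `project`."""
    wanted = _normalize(project)
    matches = []
    for path in sorted(Path(folder).glob("*.whl")):
        # The distribution name is everything before the first "-" in a wheel filename
        distribution = path.name.split("-", 1)[0]
        if _normalize(distribution) == wanted:
            matches.append(path)
    return matches


if __name__ == "__main__":
    # Hypothetical download folder; change it to wherever you saved the wheels
    for wheel in find_local_wheels(r"C:\Users\me\Downloads", "wheel"):
        print("pip install", wheel)
```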

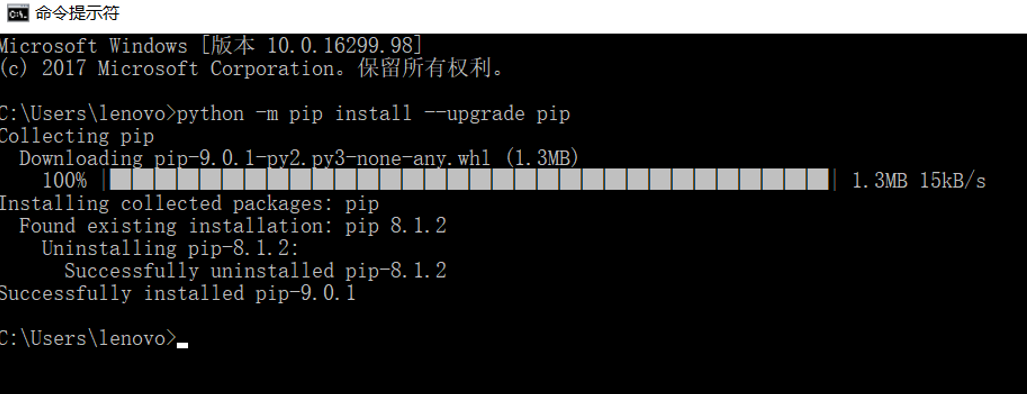

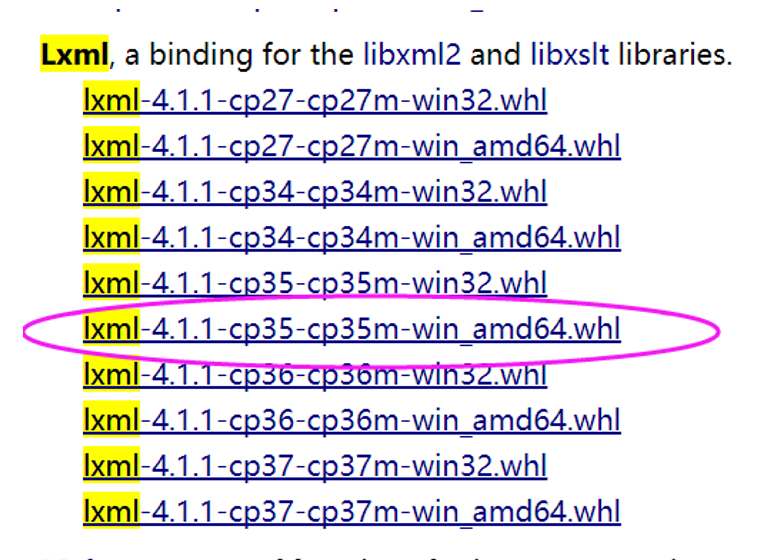

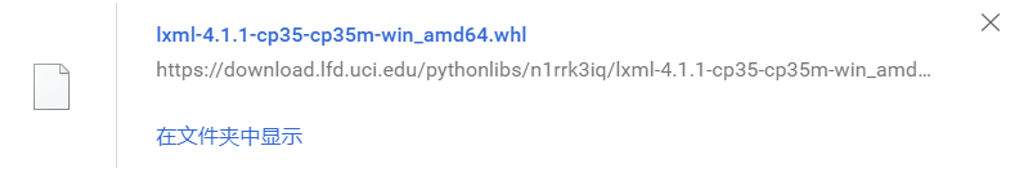

Step 3: Install lxml

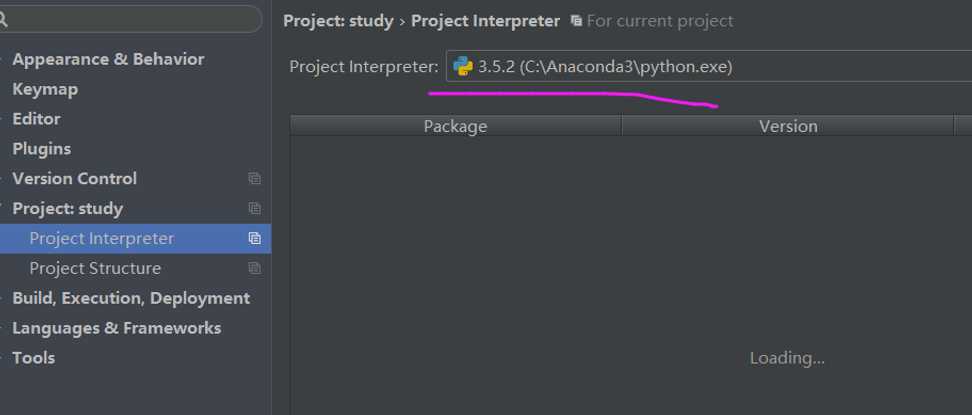

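This depends on your environment. Mine is Python 3.5, 64-bit (hence the cp35 / win_amd64 tags on the wheels used in this post).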

Success!

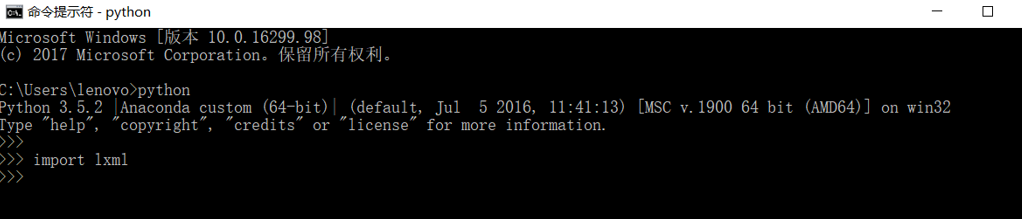
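To find out which build matches your own setup, you need the Python version and whether the interpreter is 32- or 64-bit. A small sketch using only the standard library; the function name `describe_interpreter` is hypothetical, and the `cpXY` tag assumes CPython, which is what Anaconda ships.

```python
import platform
import struct
import sys
import sysconfig
from typing import Dict


def describe_interpreter() -> Dict[str, object]:
    """Report the facts that decide which Windows wheel (cpXY, win32 or win_amd64) fits."""
    major, minor = sys.version_info[:2]
    return {
        "version": platform.python_version(),          # e.g. "3.5.2"
        "python_tag": "cp{}{}".format(major, minor),   # CPython tag used in wheel names
        "bits": struct.calcsize("P") * 8,               # pointer size in bits: 32 or 64
        # sysconfig reports e.g. "win-amd64"; wheel names use "_" instead of "-" and "."
        "platform_tag": sysconfig.get_platform().replace("-", "_").replace(".", "_"),
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(key, "=", value)
```

On a 64-bit Python 3.5 for Windows this should report `cp35` and `win_amd64`, which is why the wheels in this post carry those tags.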

Let's verify it:

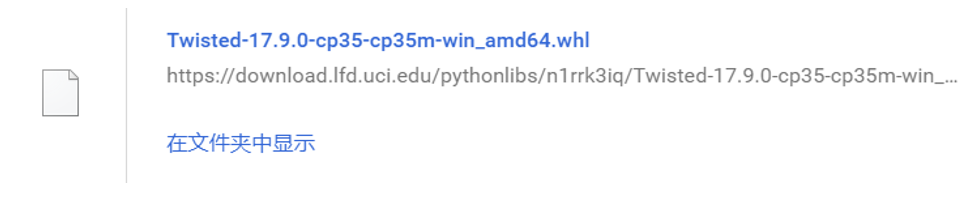

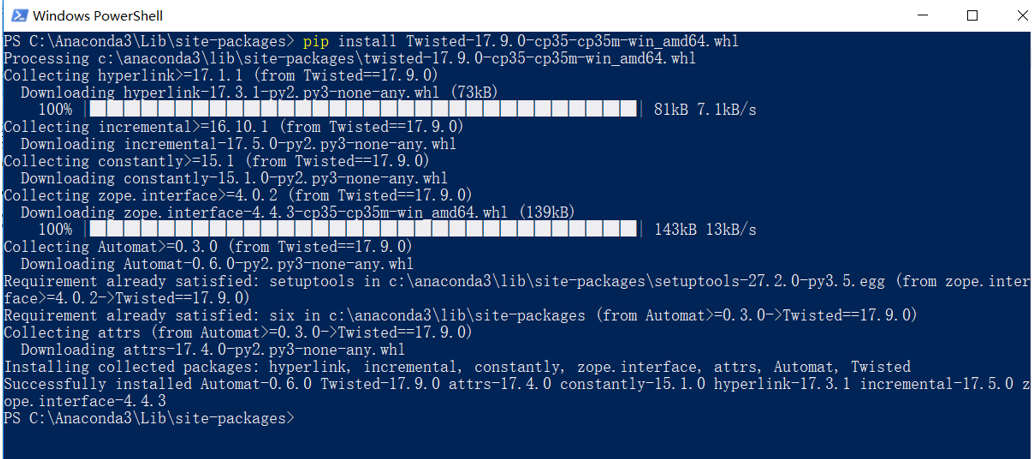
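The same check can be scripted. A minimal sketch, assuming lxml is imported from the Anaconda interpreter: it imports `lxml.etree`, parses a tiny XML string, and returns the lxml version, or `None` if the import fails (for example because the installed build does not match the interpreter). The function name `check_lxml` is hypothetical.

```python
from typing import Optional


def check_lxml() -> Optional[str]:
    """Return lxml's version if it imports and parses XML correctly, else None."""
    try:
        from lxml import etree
    except ImportError:
        # lxml is missing, or its compiled extension does not load in this interpreter
        return None
    root = etree.fromstring(b"<page><title>Scrapy</title></page>")
    if root.findtext("title") != "Scrapy":
        return None
    # etree.LXML_VERSION is a tuple such as (4, 1, 1, 0); keep major.minor.patch
    return ".".join(str(part) for part in etree.LXML_VERSION[:3])


if __name__ == "__main__":
    print("lxml:", check_lxml() or "not usable")
```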

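Step 4: Install Twisted. Here I installed it offline from a downloaded wheel file (the cp35-cp35m-win_amd64 build, for 64-bit Python 3.5), running pip in the folder that holds the file: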

```
PS C:\Anaconda3\Lib\site-packages> pip install Twisted-17.9.0-cp35-cp35m-win_amd64.whl
Processing c:\anaconda3\lib\site-packages\twisted-17.9.0-cp35-cp35m-win_amd64.whl
Collecting hyperlink>=17.1.1 (from Twisted==17.9.0)
  Downloading hyperlink-17.3.1-py2.py3-none-any.whl (73kB)
    100% |████████████████████████████████| 81kB 7.1kB/s
Collecting incremental>=16.10.1 (from Twisted==17.9.0)
  Downloading incremental-17.5.0-py2.py3-none-any.whl
Collecting constantly>=15.1 (from Twisted==17.9.0)
  Downloading constantly-15.1.0-py2.py3-none-any.whl
Collecting zope.interface>=4.0.2 (from Twisted==17.9.0)
  Downloading zope.interface-4.4.3-cp35-cp35m-win_amd64.whl (139kB)
    100% |████████████████████████████████| 143kB 13kB/s
Collecting Automat>=0.3.0 (from Twisted==17.9.0)
  Downloading Automat-0.6.0-py2.py3-none-any.whl
Requirement already satisfied: setuptools in c:\anaconda3\lib\site-packages\setuptools-27.2.0-py3.5.egg (from zope.interface>=4.0.2->Twisted==17.9.0)
Requirement already satisfied: six in c:\anaconda3\lib\site-packages (from Automat>=0.3.0->Twisted==17.9.0)
Collecting attrs (from Automat>=0.3.0->Twisted==17.9.0)
  Downloading attrs-17.4.0-py2.py3-none-any.whl
Installing collected packages: hyperlink, incremental, constantly, zope.interface, attrs, Automat, Twisted
Successfully installed Automat-0.6.0 Twisted-17.9.0 attrs-17.4.0 constantly-15.1.0 hyperlink-17.3.1 incremental-17.5.0 zope.interface-4.4.3
PS C:\Anaconda3\Lib\site-packages>
```

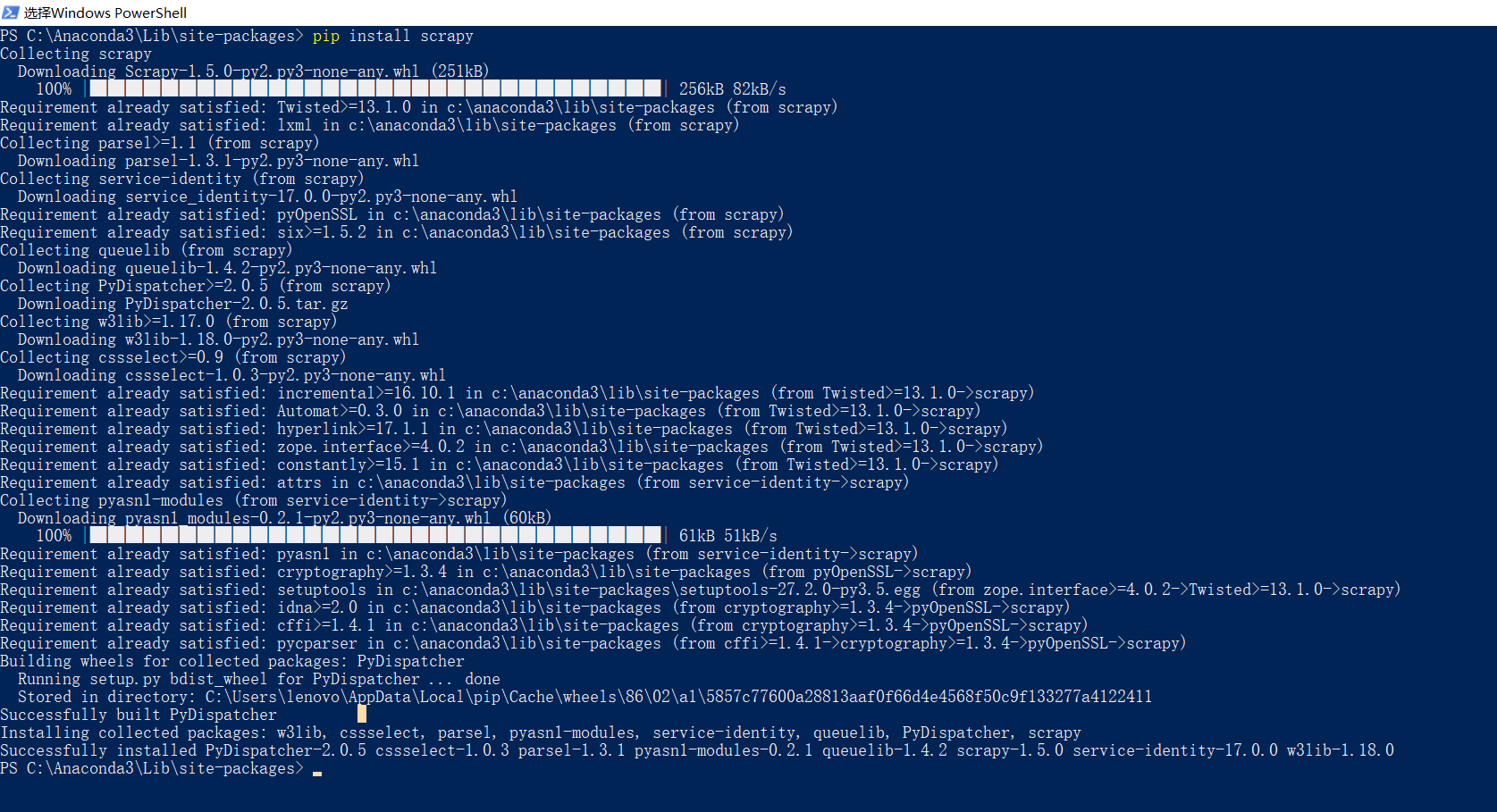
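The Twisted file name in the transcript encodes what it was built for: name, version, Python tag (`cp35`), ABI tag (`cp35m`) and platform tag (`win_amd64`), following the wheel filename layout from PEP 427. The sketch below splits a wheel name into those fields and does a deliberately rough compatibility check against the running interpreter. `WheelTags`, `parse_wheel_filename` and `matches_this_interpreter` are hypothetical names, and the check is not pip's real selection logic (it ignores ABI tags, `abi3`, manylinux and so on).

```python
import sys
import sysconfig
from typing import NamedTuple


class WheelTags(NamedTuple):
    name: str
    version: str
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelTags:
    """Split name-version[-build]-python-abi-platform.whl into its fields."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) not in (5, 6):
        raise ValueError("unexpected wheel filename: " + filename)
    # With 6 parts, parts[2] is the optional build tag; it is not needed here
    python_tag, abi_tag, platform_tag = parts[-3:]
    return WheelTags(parts[0], parts[1], python_tag, abi_tag, platform_tag)


def matches_this_interpreter(tags: WheelTags) -> bool:
    """Rough check of the Python and platform tags against the running interpreter."""
    major, minor = sys.version_info[:2]
    accepted_python = {"cp%d%d" % (major, minor), "py%d" % major, "py%d%d" % (major, minor)}
    here_platform = sysconfig.get_platform().replace("-", "_").replace(".", "_")
    # A tag field may list alternatives separated by dots, e.g. "py2.py3"
    python_ok = bool(accepted_python & set(tags.python_tag.split(".")))
    platform_ok = tags.platform_tag == "any" or here_platform in tags.platform_tag.split(".")
    return python_ok and platform_ok


if __name__ == "__main__":
    for name in ("Twisted-17.9.0-cp35-cp35m-win_amd64.whl",
                 "hyperlink-17.3.1-py2.py3-none-any.whl"):
        tags = parse_wheel_filename(name)
        print(tags, "->", "usable here" if matches_this_interpreter(tags) else "not for this Python")
```

Pure-Python wheels such as `hyperlink-17.3.1-py2.py3-none-any.whl` work everywhere, while a `cp35 ... win_amd64` wheel only fits 64-bit CPython 3.5 on Windows, which is why the Twisted file has to be chosen to match your interpreter.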

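Step 5: Install Scrapy. This time I installed it online from PyPI; the dependencies installed in the previous steps are reported as already satisfied: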

```
PS C:\Anaconda3\Lib\site-packages> pip install scrapy
Collecting scrapy
  Downloading Scrapy-1.5.0-py2.py3-none-any.whl (251kB)
    100% |████████████████████████████████| 256kB 82kB/s
Requirement already satisfied: Twisted>=13.1.0 in c:\anaconda3\lib\site-packages (from scrapy)
Requirement already satisfied: lxml in c:\anaconda3\lib\site-packages (from scrapy)
Collecting parsel>=1.1 (from scrapy)
  Downloading parsel-1.3.1-py2.py3-none-any.whl
Collecting service-identity (from scrapy)
  Downloading service_identity-17.0.0-py2.py3-none-any.whl
Requirement already satisfied: pyOpenSSL in c:\anaconda3\lib\site-packages (from scrapy)
Requirement already satisfied: six>=1.5.2 in c:\anaconda3\lib\site-packages (from scrapy)
Collecting queuelib (from scrapy)
  Downloading queuelib-1.4.2-py2.py3-none-any.whl
Collecting PyDispatcher>=2.0.5 (from scrapy)
  Downloading PyDispatcher-2.0.5.tar.gz
Collecting w3lib>=1.17.0 (from scrapy)
  Downloading w3lib-1.18.0-py2.py3-none-any.whl
Collecting cssselect>=0.9 (from scrapy)
  Downloading cssselect-1.0.3-py2.py3-none-any.whl
Requirement already satisfied: incremental>=16.10.1 in c:\anaconda3\lib\site-packages (from Twisted>=13.1.0->scrapy)
Requirement already satisfied: Automat>=0.3.0 in c:\anaconda3\lib\site-packages (from Twisted>=13.1.0->scrapy)
Requirement already satisfied: hyperlink>=17.1.1 in c:\anaconda3\lib\site-packages (from Twisted>=13.1.0->scrapy)
Requirement already satisfied: zope.interface>=4.0.2 in c:\anaconda3\lib\site-packages (from Twisted>=13.1.0->scrapy)
Requirement already satisfied: constantly>=15.1 in c:\anaconda3\lib\site-packages (from Twisted>=13.1.0->scrapy)
Requirement already satisfied: attrs in c:\anaconda3\lib\site-packages (from service-identity->scrapy)
Collecting pyasn1-modules (from service-identity->scrapy)
  Downloading pyasn1_modules-0.2.1-py2.py3-none-any.whl (60kB)
    100% |████████████████████████████████| 61kB 51kB/s
Requirement already satisfied: pyasn1 in c:\anaconda3\lib\site-packages (from service-identity->scrapy)
Requirement already satisfied: cryptography>=1.3.4 in c:\anaconda3\lib\site-packages (from pyOpenSSL->scrapy)
Requirement already satisfied: setuptools in c:\anaconda3\lib\site-packages\setuptools-27.2.0-py3.5.egg (from zope.interface>=4.0.2->Twisted>=13.1.0->scrapy)
Requirement already satisfied: idna>=2.0 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.4->pyOpenSSL->scrapy)
Requirement already satisfied: cffi>=1.4.1 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.4->pyOpenSSL->scrapy)
Requirement already satisfied: pycparser in c:\anaconda3\lib\site-packages (from cffi>=1.4.1->cryptography>=1.3.4->pyOpenSSL->scrapy)
Building wheels for collected packages: PyDispatcher
  Running setup.py bdist_wheel for PyDispatcher ... done
  Stored in directory: C:\Users\lenovo\AppData\Local\pip\Cache\wheels\86\02\a1\5857c77600a28813aaf0f66d4e4568f50c9f133277a4122411
Successfully built PyDispatcher
Installing collected packages: w3lib, cssselect, parsel, pyasn1-modules, service-identity, queuelib, PyDispatcher, scrapy
Successfully installed PyDispatcher-2.0.5 cssselect-1.0.3 parsel-1.3.1 pyasn1-modules-0.2.1 queuelib-1.4.2 scrapy-1.5.0 service-identity-17.0.0 w3lib-1.18.0
PS C:\Anaconda3\Lib\site-packages>
```
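To confirm which versions of Scrapy and the dependencies named in the pip log actually ended up installed, a short sketch with `importlib.metadata` can help. It needs Python 3.8+, so on the Python 3.5 used in this post `pip list` gives the same information. The tuple `SCRAPY_STACK` is a hand-picked subset of names from the log, not a complete dependency list, and `installed_versions` is a hypothetical helper.

```python
from importlib import metadata  # Python 3.8+
from typing import Dict, Iterable, Optional

# A subset of the distributions that appear in the pip log for Scrapy 1.5.0
SCRAPY_STACK = ("Scrapy", "Twisted", "lxml", "parsel", "w3lib", "cssselect",
                "queuelib", "PyDispatcher", "service-identity", "pyOpenSSL")


def installed_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is absent."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, version in installed_versions(SCRAPY_STACK).items():
        print("{:<18} {}".format(name, version or "NOT INSTALLED"))
```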

Let's verify it:

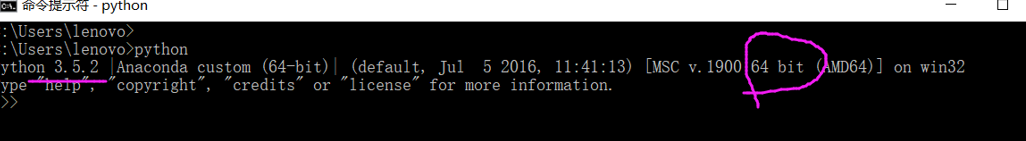

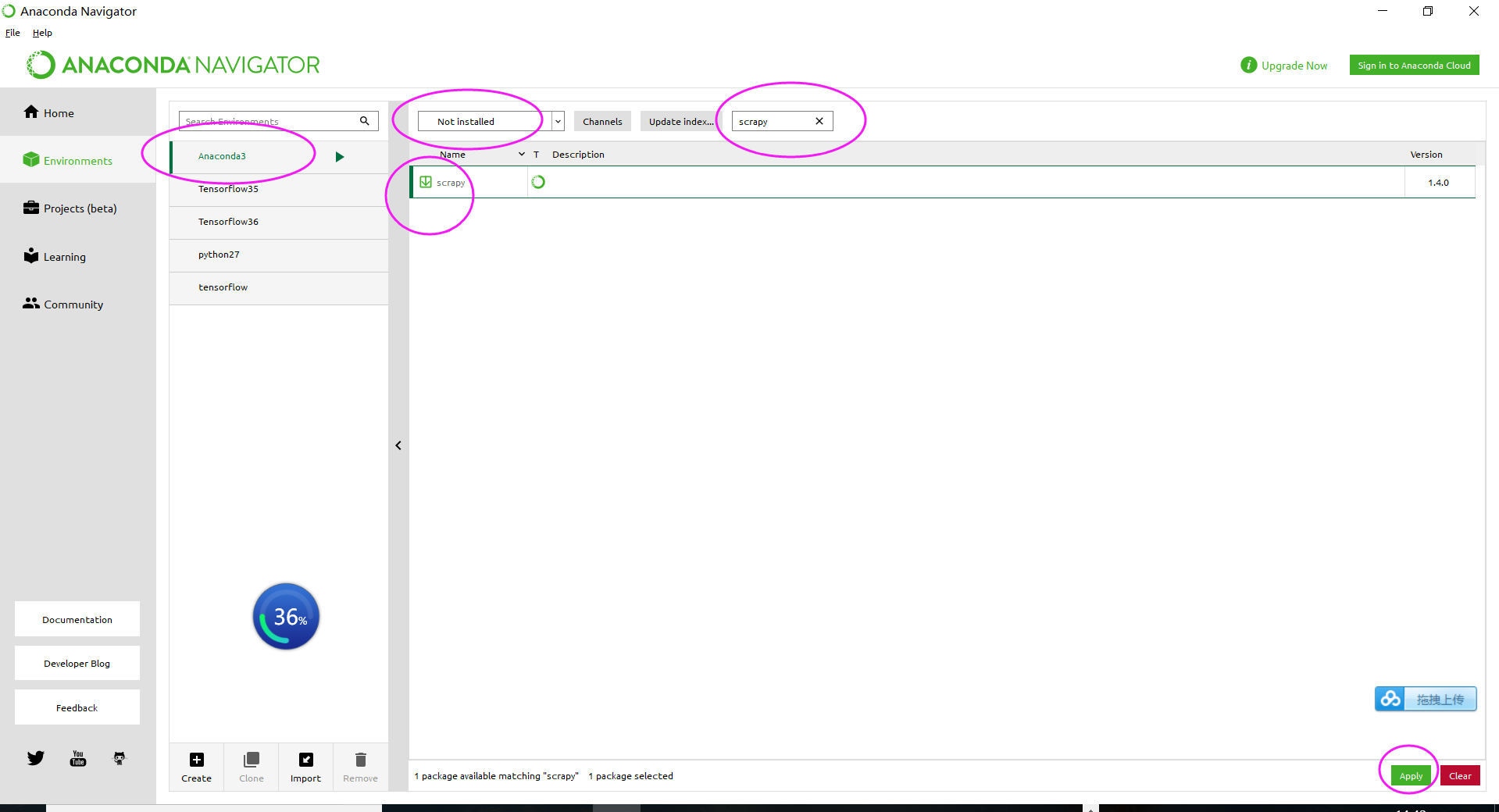
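A scripted version of this check, as a sketch: it tries to import `scrapy` and read `scrapy.__version__`, and uses `shutil.which` to see whether the `scrapy` command-line tool can be found on `PATH`. On Anaconda for Windows the console script usually lands in the `Scripts` folder of the Anaconda install, which must be on `PATH` for `scrapy` to work in PowerShell. The function name `check_scrapy` is hypothetical.

```python
import shutil
from typing import Optional, Tuple


def check_scrapy() -> Tuple[Optional[str], Optional[str]]:
    """Return (Scrapy version or None, path of the `scrapy` command or None)."""
    try:
        import scrapy
        version = scrapy.__version__
    except ImportError:
        version = None
    # shutil.which searches PATH (and PATHEXT on Windows) like the shell would
    command = shutil.which("scrapy")
    return version, command


if __name__ == "__main__":
    version, command = check_scrapy()
    print("scrapy module :", version or "not importable")
    print("scrapy command:", command or "not on PATH")
```

If both lines report something, running `scrapy version` in PowerShell should also work.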

Of course, you can also install it directly here.

You are also welcome to follow my personal blogs:

http://www.cnblogs.com/zlslch/, http://www.cnblogs.com/lchzls/ and http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html


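Author: 大数据和人工智能躺过的坑

Source: http://www.cnblogs.com/zlslch/

The copyright of this article belongs to the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this statement must be retained and a link to the original article must be given in a prominent place on the page; otherwise the author reserves the right to pursue legal liability.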
