[Web Crawler] Scraping News from the China Meteorological Science Popularization Network (中国气象科普网) with Python

The code is as follows:

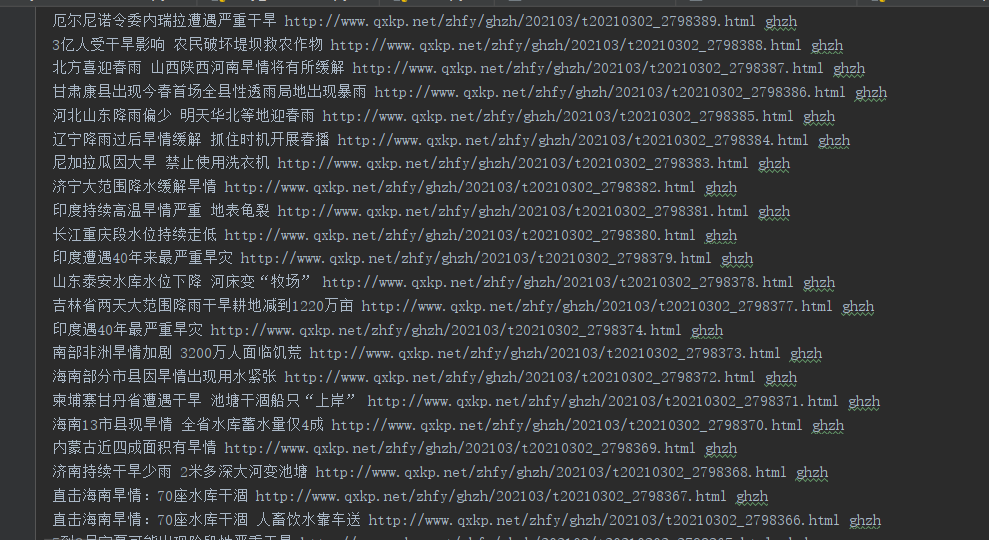

    import requests
    from bs4 import BeautifulSoup

    import News.IO as io

    url = "http://www.qxkp.net/zhfy/"

    # Request cookies and headers
    cookie = {
        "cityPy": "UM_distinctid=171f2280ef23fb-02a4939f3c1bd4-335e4e71-144000-171f2280ef3dab; Hm_lvt_"
                  + "ab6a683aa97a52202eab5b3a9042a8d2=1588905651; CNZZDATA1275796416=871124600-1588903268-%7C1588990372; "
                  + "Hm_lpvt_ab6a683aa97a52202eab5b3a9042a8d2=1588994046"}
    header = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
                      + "(KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3756.400 QQBrowser/10.5.4039.400"}

    # Categories fetched page by page (index_1.html, index_2.html, ...)
    Type = ["ghzh", "byhl", "scb", "tffy", "flaq", "hc", "jdtqsj", "gwfh"]


    def get_url_addtion():
        # The "bb" and "dw" categories are fetched from their index page only
        for category in ["bb", "dw"]:
            # Fetch the page
            html = requests.get(url="http://www.qxkp.net/zhfy/" + category + "/", headers=header, cookies=cookie)
            # Set the encoding
            html.encoding = "utf-8"
            soup = BeautifulSoup(html.text, 'html.parser')
            # Find the news entries
            divs = soup.find_all("div", class_='con_list_news_zt')
            for d in divs:
                div = BeautifulSoup(d.text, "html.parser")
                # The relative link sits between "./" and the target attribute
                href = "http://www.qxkp.net/zhfy/" + category + "/" + str(d).split("./")[1].split("\" tar")[0]
                print(div)
                print(href)
                print(category)
                # First text line is the title, second is the date
                title = str(div).split("\n")[0]
                date = str(div).split("\n")[1]
                # End each record with a newline so records do not run together
                io.cw("news", title + " " + date + " " + href + " " + category + "\n")


    def get_url(url):
        for category in Type:
            # Pages index_1.html through index_9.html
            for i in range(1, 10):
                # Fetch the page
                html = requests.get(url=url + category + "/index_" + str(i) + ".html", headers=header, cookies=cookie)
                # Set the encoding
                html.encoding = "utf-8"
                soup = BeautifulSoup(html.text, 'html.parser')
                # Find the news entries
                divs = soup.find_all("div", class_='con_list_news_zt')
                for d in divs:
                    div = BeautifulSoup(d.text, "html.parser")
                    href = "http://www.qxkp.net/zhfy/" + category + "/" + str(d).split("./")[1].split("\" tar")[0]
                    print(div)
                    print(href)
                    print(category)
                    title = str(div).split("\n")[0]
                    date = str(div).split("\n")[1]
                    io.cw("news", title + " " + date + " " + href + " " + category + "\n")


    if __name__ == '__main__':
        get_url(url)
        get_url_addtion()
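
The script builds article links by splitting the raw tag text on `"./"` and `" tar`, which depends on the exact attribute order in the page markup. As a minimal sketch of an alternative, the standard library's `urllib.parse.urljoin` can resolve both the paginated index URLs and the relative links. The helper names `page_url` and `article_url` and the sample href are hypothetical and not part of the original script.

```python
from urllib.parse import urljoin

BASE_URL = "http://www.qxkp.net/zhfy/"


def page_url(category, page):
    """Return the URL of one paginated index page of a category."""
    return urljoin(BASE_URL, f"{category}/index_{page}.html")


def article_url(category, href):
    """Resolve a relative href (for example './dir/page.html') against the category directory."""
    category_dir = urljoin(BASE_URL, category + "/")
    return urljoin(category_dir, href)


if __name__ == "__main__":
    print(page_url("ghzh", 1))
    # The href below is a made-up example of the "./..." form the script expects
    print(article_url("ghzh", "./202005/example.html"))
```

With the link taken from the tag's `href` attribute (for instance via `d.find("a")["href"]` in BeautifulSoup, assuming each entry contains an `<a>` tag), `article_url` could replace the chained `split` calls.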

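    # Write to a file (this is the helper imported above as News.IO)
    def cw(file, con):
        # Open in append mode so existing content is kept
        f = open(file, "a+", encoding='utf-8')
        f.write(con)
        f.close()


    if __name__ == "__main__":
        cw("sss.txt", "aaaaa")  # appends to the file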
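
The `cw` helper opens and closes the file by hand, so the handle stays open if the write raises. A minimal sketch of the same append operation with a `with` block is shown here. The name `append_line`, the automatic trailing newline, and the demo file name are assumptions for illustration, not part of the original module.

```python
import os
import tempfile


def append_line(path, text):
    """Append text to path as UTF-8, adding a trailing newline if it is missing."""
    if not text.endswith("\n"):
        text += "\n"
    # The with block closes the file even if write() raises
    with open(path, "a", encoding="utf-8") as f:
        f.write(text)


if __name__ == "__main__":
    # Write a sample record to a file in the system temp directory
    demo_path = os.path.join(tempfile.gettempdir(), "news_demo.txt")
    append_line(demo_path, "title date href category")
```

Adding the newline inside the helper means callers cannot forget it, which keeps each news record on its own line.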