GLUE

一、简介

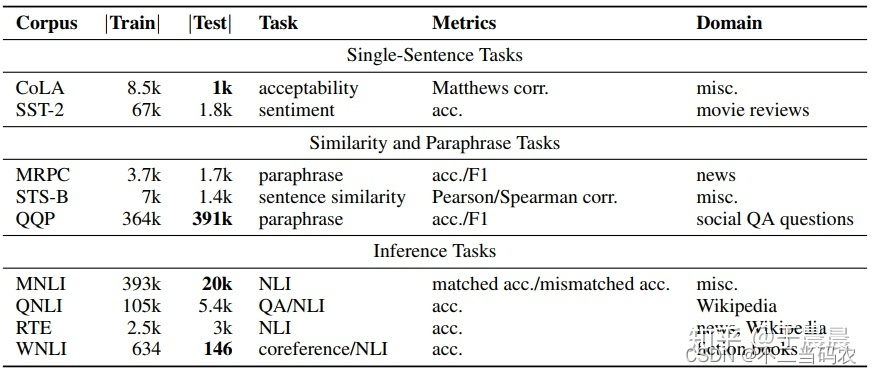

GLUE(General Language Understanding Evaluation)由来自纽约大学、华盛顿大学等机构创建的一个多任务的自然语言理解基准和分析平台。

GLUE共有九个任务,分别是CoLA、SST-2、MRPC、STS-B、QQP、MNLI、QNLI、RTE、WNLI。如下图图2所示,可以分为三类,分别是单句任务,相似性和释义任务。

二、任务介绍

单句任务-CoLA、SST-2

2.1 CoLA

CoLA(The Corpus of Linguistic Acceptability,语言可接受性语料库),单句子分类任务,语料来自语言理论的书籍和期刊,每个句子被标注为是否合乎语法的单词序列。本任务是一个二分类任务,标签共两个,分别是0和1,其中0表示不合乎语法,1表示合乎语法。

样本个数:训练集8, 551个,开发集1, 043个,测试集1, 063个。

任务:可接受程度,合乎语法与不合乎语法二分类。

评价准则:Matthews correlation coefficient。

CoLA下载

Data Format

Each line in the .tsv files consists of 4 tab-separated columns.

Column 1: the code representing the source of the sentence.

Column 2: the acceptability judgment label (0=unacceptable, 1=acceptable).

Column 3: the acceptability judgment as originally notated by the author.

Column 4: the sentence.

Corpus Sample

clc95 0 * In which way is Sandy very anxious to see if the students will be able to solve the homework problem?

c-05 1 The book was written by John.

c-05 0 * Books were sent to each other by the students.

swb04 1 She voted for herself.

swb04 1 I saw that gas can explode.- 1

- 2

- 3

- 4

- 5

2.2 SST-2

SST-2(The Stanford Sentiment Treebank,斯坦福情感树库),单句子分类任务,包含电影评论中的句子和它们情感的人类注释。这项任务是给定句子的情感,类别分为两类正面情感(positive,样本标签对应为1)和负面情感(negative,样本标签对应为0),并且只用句子级别的标签。也就是,本任务也是一个二分类任务,针对句子级别,分为正面和负面情感。

样本个数:训练集67, 350个,开发集873个,测试集1, 821个。

任务:情感分类,正面情感和负面情感二分类。

评价准则:accuracy。

sentence label

a stirring , funny and finally transporting re-imagining of beauty and the beast and 1930s horror films 1

apparently reassembled from the cutting-room floor of any given daytime soap . 0

they presume their audience wo n't sit still for a sociology lesson , however entertainingly presented , so they trot out the conventional science-fiction elements of bug-eyed monsters and futuristic women in skimpy clothes . 0- 1

- 2

- 3

- 4

SST-5:

4,"a stirring , funny and finally transporting re-imagining of beauty and the beast and 1930s horror films"

1,apparently reassembled from the cutting-room floor of any given daytime soap .

1,"they presume their audience wo n't sit still for a sociology lesson , however entertainingly presented , so they trot out the conventional science-fiction elements of bug-eyed monsters and futuristic women in skimpy clothes ."

2,the entire movie is filled with deja vu moments .- 1

- 2

- 3

- 4

相似性任务-MRPC、STSB、QQP

2.3 MRPC

MRPC下载

MRPC(The Microsoft Research Paraphrase Corpus,微软研究院释义语料库),相似性和释义任务,是从在线新闻源中自动抽取句子对语料库,并人工注释句子对中的句子是否在语义上等效。类别并不平衡,其中68%的正样本,所以遵循常规的做法,报告准确率(accuracy)和F1值。

样本个数:训练集3, 668个,开发集408个,测试集1, 725个。

任务:是否释义二分类,是释义,不是释义两类。

评价准则:准确率(accuracy)和F1值。

Quality #1 ID #2 ID #1 String #2 String

1 702876 702977 Amrozi accused his brother , whom he called " the witness " , of deliberately distorting his evidence . Referring to him as only " the witness " , Amrozi accused his brother of deliberately distorting his evidence .- 1

- 2

2.4 STSB

STSB(The Semantic Textual Similarity Benchmark,语义文本相似性基准测试),相似性和释义任务,是从新闻标题、视频标题、图像标题以及自然语言推断数据中提取的句子对的集合,每对都是由人类注释的,其相似性评分为0-5(大于等于0且小于等于5的浮点数,原始paper里写的是1-5,可能是作者失误)。任务就是预测这些相似性得分,本质上是一个回归问题,但是依然可以用分类的方法,可以归类为句子对的文本五分类任务。

样本个数:训练集5, 749个,开发集1, 379个,测试集1, 377个。

任务:回归任务,预测为1-5之间的相似性得分的浮点数。但是依然可以使用分类的方法,作为五分类。

评价准则:Pearson and Spearman correlation coefficients。

STSB下载

index genre filename year old_index source1 source2 sentence1 sentence2 score

0 main-captions MSRvid 2012test 0001 none none A plane is taking off. An air plane is taking off. 5.000

1 main-captions MSRvid 2012test 0004 none none A man is playing a large flute. A man is playing a flute. 3.800

2 main-captions MSRvid 2012test 0005 none none A man is spreading shreded cheese on a pizza. A man is spreading shredded cheese on an uncooked pizza. 3.800- 1

- 2

- 3

- 4

2.5 QQP

QQP(The Quora Question Pairs, Quora问题对数集),相似性和释义任务,是社区问答网站Quora中问题对的集合。任务是确定一对问题在语义上是否等效。与MRPC一样,QQP也是正负样本不均衡的,不同是的QQP负样本占63%,正样本是37%,所以我们也是报告准确率和F1值。我们使用标准测试集,为此我们从作者那里获得了专用标签。我们观察到测试集与训练集分布不同。

样本个数:训练集363, 870个,开发集40, 431个,测试集390, 965个。

任务:判定句子对是否等效,等效、不等效两种情况,二分类任务。

评价准则:准确率(accuracy)和F1值。

暂无专用下载链接

id qid1 qid2 question1 question2 is_duplicate

133273 213221 213222 How is the life of a math student? Could you describe your own experiences? Which level of prepration is enough for the exam jlpt5? 0

402555 536040 536041 How do I control my horny emotions? How do you control your horniness? 1

360472 364011 490273 What causes stool color to change to yellow? What can cause stool to come out as little balls? 0

150662 155721 7256 What can one do after MBBS? What do i do after my MBBS ? 1- 1

- 2

- 3

- 4

- 5

语义推断型任务——MNLI、QNLI、RTE、WNLI

2.6 MNLI

MNLI(The Multi-Genre Natural Language Inference Corpus, 多类型自然语言推理数据库),自然语言推断任务,是通过众包方式对句子对进行文本蕴含标注的集合。给定前提(premise)语句和假设(hypothesis)语句,任务是预测前提语句是否包含假设(蕴含, entailment),与假设矛盾(矛盾,contradiction)或者两者都不(中立,neutral)。前提语句是从数十种不同来源收集的,包括转录的语音,小说和政府报告。

样本个数:训练集392, 702个,开发集dev-matched 9, 815个,开发集dev-mismatched9, 832个,测试集test-matched 9, 796个,测试集test-dismatched9, 847个。因为MNLI是集合了许多不同领域风格的文本,所以又分为了matched和mismatched两个版本的数据集,matched指的是训练集和测试集的数据来源一致,mismached指的是训练集和测试集来源不一致。

任务:句子对,一个前提,一个是假设。前提和假设的关系有三种情况:蕴含(entailment),矛盾(contradiction),中立(neutral)。句子对三分类问题。

评价准则:matched accuracy/mismatched accuracy。

MNLI下载

index promptID pairID genre sentence1_binary_parse sentence2_binary_parse sentence1_parse sentence2_parse sentence1 sentence2 label1 gold_label

0 31193 31193n government ( ( Conceptually ( cream skimming ) ) ( ( has ( ( ( two ( basic dimensions ) ) - ) ( ( product and ) geography ) ) ) . ) ) ( ( ( Product and ) geography ) ( ( are ( what ( make ( cream ( skimming work ) ) ) ) ) . ) ) (ROOT (S (NP (JJ Conceptually) (NN cream) (NN skimming)) (VP (VBZ has) (NP (NP (CD two) (JJ basic) (NNS dimensions)) (: -) (NP (NN product) (CC and) (NN geography)))) (. .))) (ROOT (S (NP (NN Product) (CC and) (NN geography)) (VP (VBP are) (SBAR (WHNP (WP what)) (S (VP (VBP make) (NP (NP (NN cream)) (VP (VBG skimming) (NP (NN work)))))))) (. .))) Conceptually cream skimming has two basic dimensions - product and geography. Product and geography are what make cream skimming work. neutral neutral- 1

- 2

2.7 QNLI

QNLI(Qusetion-answering NLI,问答自然语言推断),自然语言推断任务。QNLI是从另一个数据集The Stanford Question Answering Dataset(斯坦福问答数据集, SQuAD 1.0)[3]转换而来的。SQuAD 1.0是有一个问题-段落对组成的问答数据集,其中段落来自维基百科,段落中的一个句子包含问题的答案。这里可以看到有个要素,来自维基百科的段落,问题,段落中的一个句子包含问题的答案。通过将问题和上下文(即维基百科段落)中的每一句话进行组合,并过滤掉词汇重叠比较低的句子对就得到了QNLI中的句子对。相比原始SQuAD任务,消除了模型选择准确答案的要求;也消除了简化的假设,即答案适中在输入中并且词汇重叠是可靠的提示。

样本个数:训练集104, 743个,开发集5, 463个,测试集5, 461个。

任务:判断问题(question)和句子(sentence,维基百科段落中的一句)是否蕴含,蕴含和不蕴含,二分类。

评价准则:准确率(accuracy)。

QNLI下载

index question sentence label

0 When did the third Digimon series begin? Unlike the two seasons before it and most of the seasons that followed, Digimon Tamers takes a darker and more realistic approach to its story featuring Digimon who do not reincarnate after their deaths and more complex character development in the original Japanese. not_entailment

1 Which missile batteries often have individual launchers several kilometres from one another? When MANPADS is operated by specialists, batteries may have several dozen teams deploying separately in small sections; self-propelled air defence guns may deploy in pairs. not_entailment

2 What two things does Popper argue Tarski's theory involves in an evaluation of truth? He bases this interpretation on the fact that examples such as the one described above refer to two things: assertions and the facts to which they refer. entailment- 1

- 2

- 3

- 4

2.8 RTE

RTE(The Recognizing Textual Entailment datasets,识别文本蕴含数据集),自然语言推断任务,它是将一系列的年度文本蕴含挑战赛的数据集进行整合合并而来的,包含RTE1[4],RTE2,RTE3[5],RTE5等,这些数据样本都从新闻和维基百科构建而来。将这些所有数据转换为二分类,对于三分类的数据,为了保持一致性,将中立(neutral)和矛盾(contradiction)转换为不蕴含(not entailment)。

样本个数:训练集2, 491个,开发集277个,测试集3, 000个。

任务:判断句子对是否蕴含,句子1和句子2是否互为蕴含,二分类任务。

评价准则:准确率(accuracy)。

RTE下载

index sentence1 sentence2 label

0 No Weapons of Mass Destruction Found in Iraq Yet. Weapons of Mass Destruction Found in Iraq. not_entailment

1 A place of sorrow, after Pope John Paul II died, became a place of celebration, as Roman Catholic faithful gathered in downtown Chicago to mark the installation of new Pope Benedict XVI. Pope Benedict XVI is the new leader of the Roman Catholic Church. entailment

2 Herceptin was already approved to treat the sickest breast cancer patients, and the company said, Monday, it will discuss with federal regulators the possibility of prescribing the drug for more breast cancer patients. Herceptin can be used to treat breast cancer. entailment- 1

- 2

- 3

- 4

2.9 WNLI

WNLI(Winograd NLI,Winograd自然语言推断),自然语言推断任务,数据集来自于竞赛数据的转换。Winograd Schema Challenge[6],该竞赛是一项阅读理解任务,其中系统必须读一个带有代词的句子,并从列表中找到代词的指代对象。这些样本都是都是手动创建的,以挫败简单的统计方法:每个样本都取决于句子中单个单词或短语提供的上下文信息。为了将问题转换成句子对分类,方法是通过用每个可能的列表中的每个可能的指代去替换原始句子中的代词。任务是预测两个句子对是否有关(蕴含、不蕴含)。训练集两个类别是均衡的,测试集是不均衡的,65%是不蕴含。

样本个数:训练集635个,开发集71个,测试集146个。

任务:判断句子对是否相关,蕴含和不蕴含,二分类任务。

评价准则:准确率(accuracy)。

index sentence1 sentence2 label

0 I stuck a pin through a carrot. When I pulled the pin out, it had a hole. The carrot had a hole. 1

1 John couldn't see the stage with Billy in front of him because he is so short. John is so short. 1

2 The police arrested all of the gang members. They were trying to stop the drug trade in the neighborhood. The police were trying to stop the drug trade in the neighborhood. 1

3 Steve follows Fred's example in everything. He influences him hugely. Steve influences him hugely. 0- 1

- 2

- 3

- 4

- 5