ZooKeeper distributed lock principles and implementing a distributed lock with Curator

This is an original post by the author; reproduction without permission is prohibited.

1. ZooKeeper distributed locks: use cases and characteristics

2. How ZooKeeper distributed locks work

3. Implementing a distributed lock with Curator

1. ZooKeeper distributed locks:

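(1) Advantages: ZooKeeper distributed locks (such as Curator's InterProcessMutex) effectively solve the distributed mutual-exclusion problem. They also solve the non-reentrancy problem, because a thread that already holds the lock can acquire it again. They are also fairly simple to use.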

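(2) Disadvantages: ZooKeeper distributed locks do not perform especially well. Why?

Every lock acquisition and release works by dynamically creating and deleting an ephemeral node. In ZooKeeper, node creation and deletion can only be carried out by the Leader server, and the Leader must then replicate the change to the Follower servers. This frequent network communication makes performance a pronounced weak point.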

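In short, ZooKeeper distributed locks are not recommended for high-performance, high-concurrency scenarios. Because ZooKeeper is highly available, however, they are a good choice where concurrency is moderate.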
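Point (1) mentions that InterProcessMutex is reentrant: Curator documents that a thread already holding it may call `acquire()` again, and that every `acquire()` must be balanced by a `release()`. The sketch below only illustrates the per-thread counting idea behind reentrancy on top of a distributed lock; it is not Curator's code. `ReentrantZkLockSketch` and its `createLockNode`/`deleteLockNode` methods are hypothetical placeholders that merely count calls instead of talking to ZooKeeper, and the sketch does not actually block other threads.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Hypothetical sketch: reentrancy layered over a non-reentrant distributed lock.
 * The lock node is "created" only on a thread's first acquire and "deleted" on its last release.
 * createLockNode/deleteLockNode are placeholders that just count; no ZooKeeper is involved.
 */
class ReentrantZkLockSketch {
    private final ConcurrentMap<Thread, AtomicInteger> holdCounts = new ConcurrentHashMap<>();
    private int nodesCreated = 0; // plain counters: fine for this single-threaded demo only
    private int nodesDeleted = 0;

    void acquire() {
        AtomicInteger count = holdCounts.get(Thread.currentThread());
        if (count != null) {
            count.incrementAndGet(); // re-entry: no new node, no round trip to ZooKeeper
            return;
        }
        createLockNode(); // first entry: a real lock would create its node and wait here
        holdCounts.put(Thread.currentThread(), new AtomicInteger(1));
    }

    void release() {
        AtomicInteger count = holdCounts.get(Thread.currentThread());
        if (count == null) {
            throw new IllegalMonitorStateException("current thread does not hold the lock");
        }
        if (count.decrementAndGet() > 0) {
            return; // still held by an outer acquire()
        }
        holdCounts.remove(Thread.currentThread());
        deleteLockNode(); // last release: deleting the node is what notifies the next waiter
    }

    boolean isHeldByCurrentThread() {
        return holdCounts.containsKey(Thread.currentThread());
    }

    int nodesCreated() { return nodesCreated; }

    int nodesDeleted() { return nodesDeleted; }

    private void createLockNode() { nodesCreated++; } // placeholder for a ZooKeeper create

    private void deleteLockNode() { nodesDeleted++; } // placeholder for a ZooKeeper delete

    public static void main(String[] args) {
        ReentrantZkLockSketch lock = new ReentrantZkLockSketch();
        lock.acquire();
        lock.acquire(); // the same thread re-enters
        lock.release();
        System.out.println(lock.isHeldByCurrentThread()); // prints true
        lock.release();
        System.out.println(lock.nodesCreated() + " " + lock.nodesDeleted()); // prints 1 1
    }
}
```

Because re-entry is resolved locally, nested acquisitions cost no extra ZooKeeper writes, which matters given the write cost described in (2).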

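Comparison with Redis distributed locks:

(1) ZooKeeper-based distributed locks suit scenarios that need high reliability (high availability) and whose concurrency is not very high;

(2) Redis-based distributed locks suit scenarios with very high concurrency and strict performance requirements, where reliability concerns can be covered by other measures.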

2. How ZooKeeper distributed locks work:

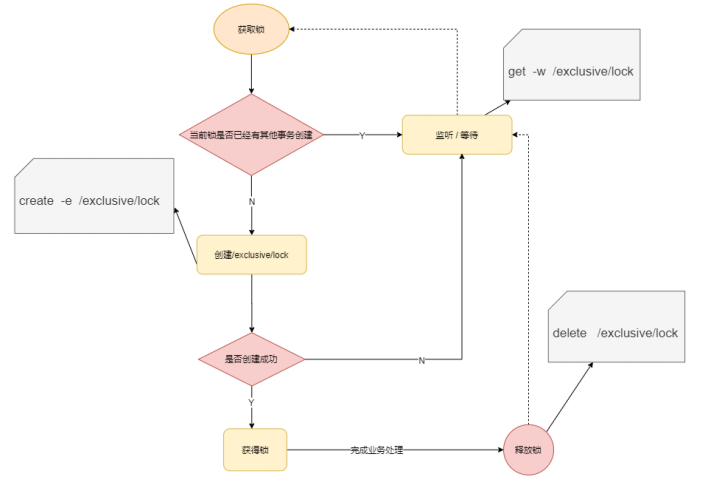

Non-fair lock implementation flow:

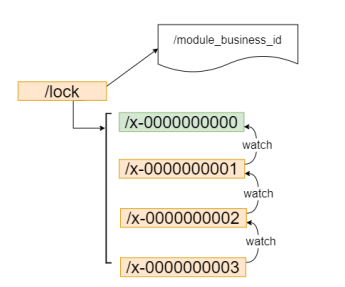
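The figure is not reproduced in text. Assuming it shows the common non-fair scheme (every client tries to create the same ephemeral node `/lock`; clients that fail watch it, and all of them race again when it is deleted), the sketch below models that behaviour in memory. `NonFairLockModel` is a hypothetical, single-threaded simulation, not ZooKeeper client code, and the seeded `Random` is an assumption standing in for network timing, which decides who wins each race.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/**
 * Hypothetical in-memory model of the non-fair scheme: one shared ephemeral node /lock.
 * No real ZooKeeper is involved; the Random only models which notified client retries first.
 */
class NonFairLockModel {
    private String lockOwner;                                // client that created /lock, or null
    private final List<String> watchers = new ArrayList<>(); // clients watching /lock for deletion
    private final Random networkTiming;
    private int notifications = 0;

    NonFairLockModel(long seed) {
        this.networkTiming = new Random(seed);
    }

    /** Try to create /lock; if it already exists, register a watch on it and report failure. */
    boolean tryAcquire(String client) {
        if (lockOwner == null) {
            lockOwner = client;
            return true;
        }
        watchers.add(client);
        return false;
    }

    /** Delete /lock: every watcher is notified and races to recreate it. */
    void release() {
        lockOwner = null;
        List<String> notified = new ArrayList<>(watchers);
        watchers.clear(); // watches are one-shot, so losers must watch again
        notifications += notified.size();
        Collections.shuffle(notified, networkTiming); // arrival order does not decide the winner
        for (String client : notified) {
            tryAcquire(client); // the first retry wins; the rest fail and re-watch
        }
    }

    String holder() { return lockOwner; }

    int waiting() { return watchers.size(); }

    int notifications() { return notifications; }

    public static void main(String[] args) {
        NonFairLockModel lock = new NonFairLockModel(42L);
        for (String client : new String[] {"A", "B", "C", "D"}) {
            lock.tryAcquire(client);
        }
        lock.release();
        lock.release();
        lock.release();
        System.out.println("notifications = " + lock.notifications()); // prints notifications = 6
    }
}
```

Every release wakes all waiters (the herd effect), so three releases with three waiters cost 3 + 2 + 1 notifications, and the winner of each round depends on timing rather than on who waited longest. The fair scheme below avoids both problems.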

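ZooKeeper fair lock implementation:

Flow:

1. When a request arrives, it creates an ephemeral sequential node directly under the /lock node.

2. It checks whether its node is the smallest child of /lock: if so, it acquires the lock; otherwise it sets a watch on the node immediately before its own.

3. The request holding the lock releases it when finished by deleting its node; the next node in sequence is then notified and repeats step 2.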
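The three steps above can be modelled in memory as follows. This is a hypothetical, single-threaded simulation (`FairLockModel` is not a ZooKeeper or Curator API): a sorted set stands in for the children of `/lock`, and a map from watched node to waiting node stands in for ZooKeeper's one-shot watches. Node names use a 10-digit zero-padded counter, mirroring the suffix ZooKeeper appends to sequential nodes, so string order equals creation order.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

/**
 * Hypothetical in-memory model of the fair lock: ephemeral sequential children of /lock,
 * each waiter watching only its immediate predecessor. No real ZooKeeper is involved.
 */
class FairLockModel {
    private final TreeSet<String> children = new TreeSet<>();      // children of /lock, in sequence order
    private final Map<String, String> ownerOf = new HashMap<>();   // node -> requesting client
    private final Map<String, String> watcherOf = new HashMap<>(); // watched node -> node waiting on it
    private final List<String> grantOrder = new ArrayList<>();     // clients in the order they got the lock
    private int nextSequence = 0;
    private int notifications = 0;

    /** Step 1: create an "ephemeral sequential" node under /lock, then run step 2. */
    String request(String client) {
        String node = String.format("lock-%010d", nextSequence++);
        children.add(node);
        ownerOf.put(node, client);
        check(node);
        return node;
    }

    /** Step 2: the smallest node holds the lock; otherwise watch only the node just before it. */
    private void check(String node) {
        String predecessor = children.lower(node);
        if (predecessor == null) {
            grantOrder.add(ownerOf.get(node));
        } else {
            watcherOf.put(predecessor, node);
        }
    }

    /** Step 3: delete the node; only its direct successor is notified. */
    void release(String node) {
        children.remove(node);
        watcherOf.values().remove(node);           // a deleted node's own watch disappears with it
        String successor = watcherOf.remove(node); // the node that was watching this one, if any
        if (successor != null) {
            notifications++;
            check(successor);                      // the successor repeats step 2
        }
    }

    String holder() {
        return children.isEmpty() ? null : ownerOf.get(children.first());
    }

    List<String> grantOrder() { return grantOrder; }

    int notifications() { return notifications; }

    public static void main(String[] args) {
        FairLockModel lock = new FairLockModel();
        String a = lock.request("A");
        String b = lock.request("B");
        lock.request("C");
        lock.release(a);
        lock.release(b);
        System.out.println(lock.grantOrder()); // prints [A, B, C]
    }
}
```

Each release notifies at most one client, its direct successor, so clients obtain the lock in creation order. If a waiting node disappears (for example, because its session expires), its successor simply repeats step 2 and watches the new predecessor.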

3. Implementing a distributed lock with Curator:

Maven dependencies:

```xml
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-recipes</artifactId>
    <version>5.0.0</version>
    <exclusions>
        <!-- exclude the ZooKeeper version pulled in by Curator so the one declared below is used -->
        <exclusion>
            <groupId>org.apache.zookeeper</groupId>
            <artifactId>zookeeper</artifactId>
        </exclusion>
    </exclusions>
</dependency>

<dependency>
    <groupId>org.apache.zookeeper</groupId>
    <artifactId>zookeeper</artifactId>
    <version>3.5.8</version>
</dependency>
```

Wrapped utility class (note that it uses Curator's LeaderLatch leader-election recipe as the lock):

```java
import org.apache.commons.lang3.exception.ExceptionUtils;
import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.curator.RetryPolicy;
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.framework.recipes.leader.LeaderLatch;
import org.apache.curator.retry.ExponentialBackoffRetry;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Component;

import java.util.concurrent.TimeUnit;

@Component
public class BaseJob {

    private final Log log = LogFactory.getLog(this.getClass());

    // ZooKeeper connection string, e.g. "host1:2181,host2:2181"
    @Value("${zookeeper.connectstring}")
    private String zookeeperHost;

    // Parent path of the lock nodes; the value after ':' is used when the property is not set
    @Value("${zookeeper.parent.path:/dlocks/zk/demo}")
    private String zookeeperPath;

    // Maximum time (ms) to wait for the lock
    @Value("${curator.leadership.wait:60000}")
    private int leaderShipWait;

    // Time (ms) the lock is held before it is released automatically
    @Value("${curator.leadership.release:360000}")
    private int leaderShipRelease;

    protected static String Zookeeper_KEY = "zk/lag/count";

    public boolean getZkLock(String tokenKey) {
        return getZkLock(tokenKey, leaderShipRelease);
    }

    /**
     * Tries to become leader of the latch at {zookeeperPath}/ppcloud/{tokenKey}/lock.
     * Returns true if leadership is obtained within leaderShipWait ms. There is no explicit
     * unlock: a background thread closes the latch and client after lShipRelease ms.
     */
    public boolean getZkLock(String tokenKey, final int lShipRelease) {
        CuratorFramework client = null;
        LeaderLatch ll = null;
        try {
            // Exponential backoff retry: base sleep 4000 ms, at most 3 retries
            RetryPolicy policy = new ExponentialBackoffRetry(4000, 3);
            // Note: every call creates and starts a new client (and ZooKeeper session)
            client = CuratorFrameworkFactory.newClient(zookeeperHost, policy);
            client.start();

            // tokenKey is passed as a format argument instead of being concatenated into the pattern
            ll = new LeaderLatch(client, String.format("%s/ppcloud/%s/lock", zookeeperPath, tokenKey));
            ll.start();

            boolean get = ll.await(leaderShipWait, TimeUnit.MILLISECONDS);
            if (get) {
                final CuratorFramework client2 = client;
                final LeaderLatch ll2 = ll;
                // Hold the lock for lShipRelease ms, then release it by closing the latch and the client
                new Thread(() -> {
                    try {
                        Thread.sleep(lShipRelease);
                    } catch (Throwable ignored) {
                        // interrupted (or invalid duration): release right away
                    }
                    try {
                        ll2.close();
                    } catch (Throwable ignored) {
                    }
                    try {
                        client2.close();
                    } catch (Throwable ignored) {
                    }
                }).start();
                return true;
            } else {
                // Lock not obtained within the wait time: clean up immediately
                ll.close();
                client.close();
                log.error(String.format("Zookeeper return:%s", get));
            }
        } catch (Throwable e) {
            log.error(String.format("Zookeeper failed:%s", ExceptionUtils.getStackTrace(e)));
            try {
                if (ll != null) {
                    ll.close();
                }
            } catch (Exception ignored) {
            }
            try {
                if (client != null) {
                    client.close();
                }
            } catch (Exception ignored) {
            }
        }
        return false;
    }
}
```

