Enterprise GitLab CI/CD in Practice

Preface

A bit of a rant

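When I joined the company on June 2, 2020, my first impression was that the cluster setup was one big pit! There was one environment on the intranet and another on the public network. The intranet ran a single-node Kubernetes; the public network ran an Alibaba Cloud managed Kubernetes cluster with X nodes (some of them only 2-core...). Almost nobody used the intranet Kubernetes (backend developers perhaps touched it occasionally). The X-node public cluster could be called the production cluster, or just as well the test cluster: a stroke of genius, separating environments by namespace!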

The problem

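Developers push code to the GitLab repository running on the public Kubernetes cluster; a gitlab-runner on a Hong Kong server then runs CI/CD, and the container images are stored in GitLab, that is, also on the public Kubernetes cluster. Well... rebuilding the clusters was clearly unavoidable.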

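Put bluntly, the cluster rebuild is really a rebuild of CI/CD: put the intranet environment to use, add a pre-release environment, and remove the test environment from the public Kubernetes cluster.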

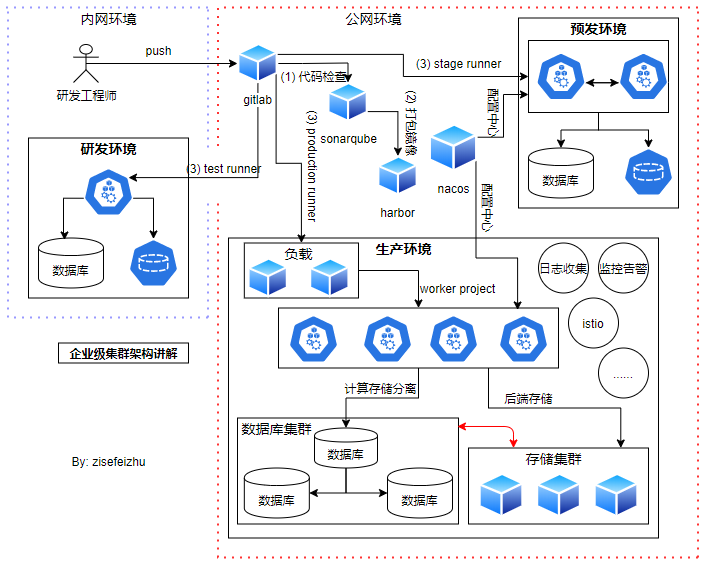

Architecture

Enterprise cluster architecture

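As the diagram shows, there are three environments: development (the intranet environment), pre-release (in the same VPC as production), and production (the original public environment, upgraded).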

Development environment

Developers: day-to-day development happens in this environment; most of the foundational development work is done here.

Testers: mainly used to verify that the features under development behave as expected.

Pre-release environment

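This is effectively the real production environment: almost all configuration is identical, including but not limited to the OS version, software configuration, language runtimes and database configuration. It is the last verification environment before release; apart from not being open to users, its data matches production. Product, operations, QA and developers can all do a final production-like check here and catch problems early.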

Environment comparison

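| Environment | Use case | Users | When used | Notes |
|---|---|---|---|---|
| Development | Day-to-day development, testing and verification | Developers and testers | After a feature has been developed | |
| Pre-release | Final verification under production conditions | Developers, QA, product, operations, etc. | Before release | |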

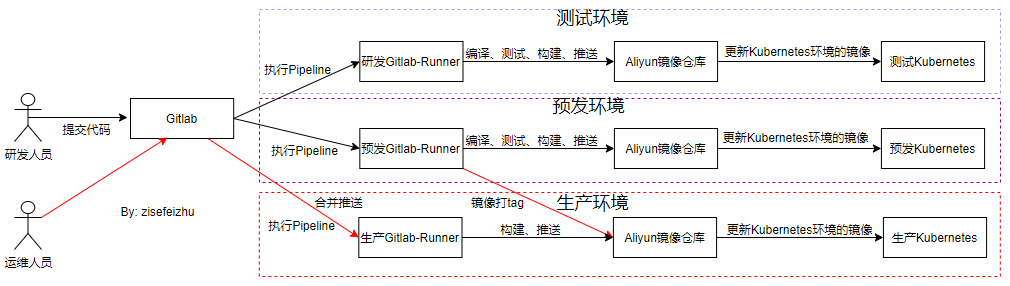

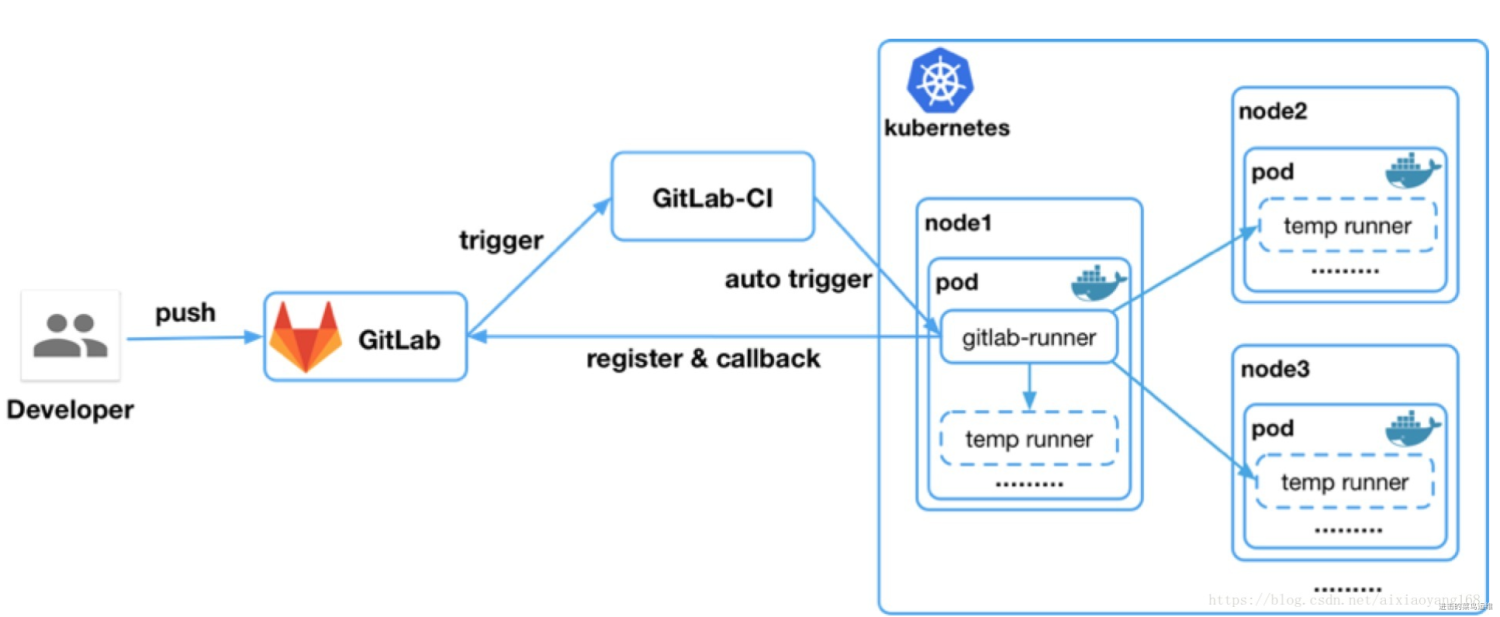

GitLab CI architecture

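As shown above, once a developer pushes code to GitLab, a CI script can be triggered to run on GitLab Runner. CI scripts can implement many useful steps: compiling, testing, building Docker images, pushing them to the Aliyun image registry, and so on.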
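The stage flow above can be pictured with a toy bash model (an illustration only, not how GitLab Runner is implemented): stages run in the order they are listed, and a failing stage stops every later stage. In GitLab, jobs inside one stage may also run in parallel, which this model ignores. The stage functions are hypothetical placeholders for real job scripts.

```bash
#!/usr/bin/env bash
# Toy model of GitLab CI stage semantics (illustration only):
# stages run strictly in order and the first failure skips all later stages.
run_pipeline() {
  local stage
  for stage in "$@"; do
    echo "==> stage: ${stage}"
    if ! "$stage"; then
      echo "stage '${stage}' failed; skipping the remaining stages" >&2
      return 1
    fi
  done
  echo "pipeline succeeded"
}

# Hypothetical placeholders for the real job scripts.
compile()     { echo "go build ..."; }
unit_test()   { echo "go test ..."; }
build_image() { echo "docker build ..."; }
push_image()  { echo "docker push ..."; }

# Example: run_pipeline compile unit_test build_image push_image
```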

- 🔲 How can GitLab, deployed on the public network, control the Kubernetes cluster on the intranet?

- 🔲 How can a GitLab Runner deployed on Kubernetes implement caching?

Deployment environments

Deploying Kubernetes

The public network simply uses an Alibaba Cloud ACK managed Kubernetes cluster.

- 🔲 ingress-nginx, LB, domain names???

The intranet cluster is deployed as follows:

Clean up any previous Kubernetes environment

```bash
rm -rf ~/.kube/
rm -rf /etc/kubernetes/
rm -rf /etc/systemd/system/kubelet.service.d
rm -rf /etc/systemd/system/kubelet.service
rm -rf /usr/bin/kube*
rm -rf /etc/cni
rm -rf /opt/cni
rm -rf /var/lib/etcd
rm -rf /var/etcd
```
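The cleanup list can be wrapped in a function so it can be rehearsed against a scratch directory before touching a real node. This is a sketch that assumes the commands run as root (so `~/.kube` is `/root/.kube`); on a real node, running `kubeadm reset -f` first is a common companion step.

```bash
#!/usr/bin/env bash
# Remove leftover Kubernetes state under ROOT (default: the real filesystem root).
# Assumption: run as root, so ~/.kube is /root/.kube.
cleanup_k8s_state() {
  local root="${1%/}"   # "/" or no argument -> "" -> operate on the real root
  local rel bin
  for rel in root/.kube etc/kubernetes etc/systemd/system/kubelet.service.d \
             etc/systemd/system/kubelet.service etc/cni opt/cni var/lib/etcd var/etcd; do
    rm -rf -- "${root}/${rel}"
  done
  # kubeadm, kubectl and kubelet binaries (the /usr/bin/kube* glob)
  shopt -s nullglob
  for bin in "${root}"/usr/bin/kube*; do
    rm -f -- "$bin"
  done
  shopt -u nullglob
}

# Rehearse on a scratch tree first, then on the real node (destructive!):
#   cleanup_k8s_state "$(mktemp -d)"
#   kubeadm reset -f && cleanup_k8s_state /
```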

Deploy a single-node Kubernetes

```bash
docker info    # make sure Docker is installed and running

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

yum list kubelet kubeadm kubectl --showduplicates | sort -r
yum install -y kubelet-1.17.5 kubeadm-1.17.5 kubectl-1.17.5
systemctl enable kubelet && systemctl start kubelet
systemctl status kubelet

kubeadm init --apiserver-advertise-address=10.17.1.44 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=1.17.5 \
  --pod-network-cidr=10.244.0.0/16

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
kubectl get cs

# single node: allow ordinary pods to be scheduled on the control-plane node
kubectl taint nodes --all node-role.kubernetes.io/master-

# local copies of the network plugin, metrics-server and ingress controller manifests
cd linkun/zujian/
kubectl apply -f calico.yaml
kubectl get pods -A
kubectl apply -f metrice-server.yaml
kubectl apply -f nginx-ingress.yaml
kubectl get pods -A
```

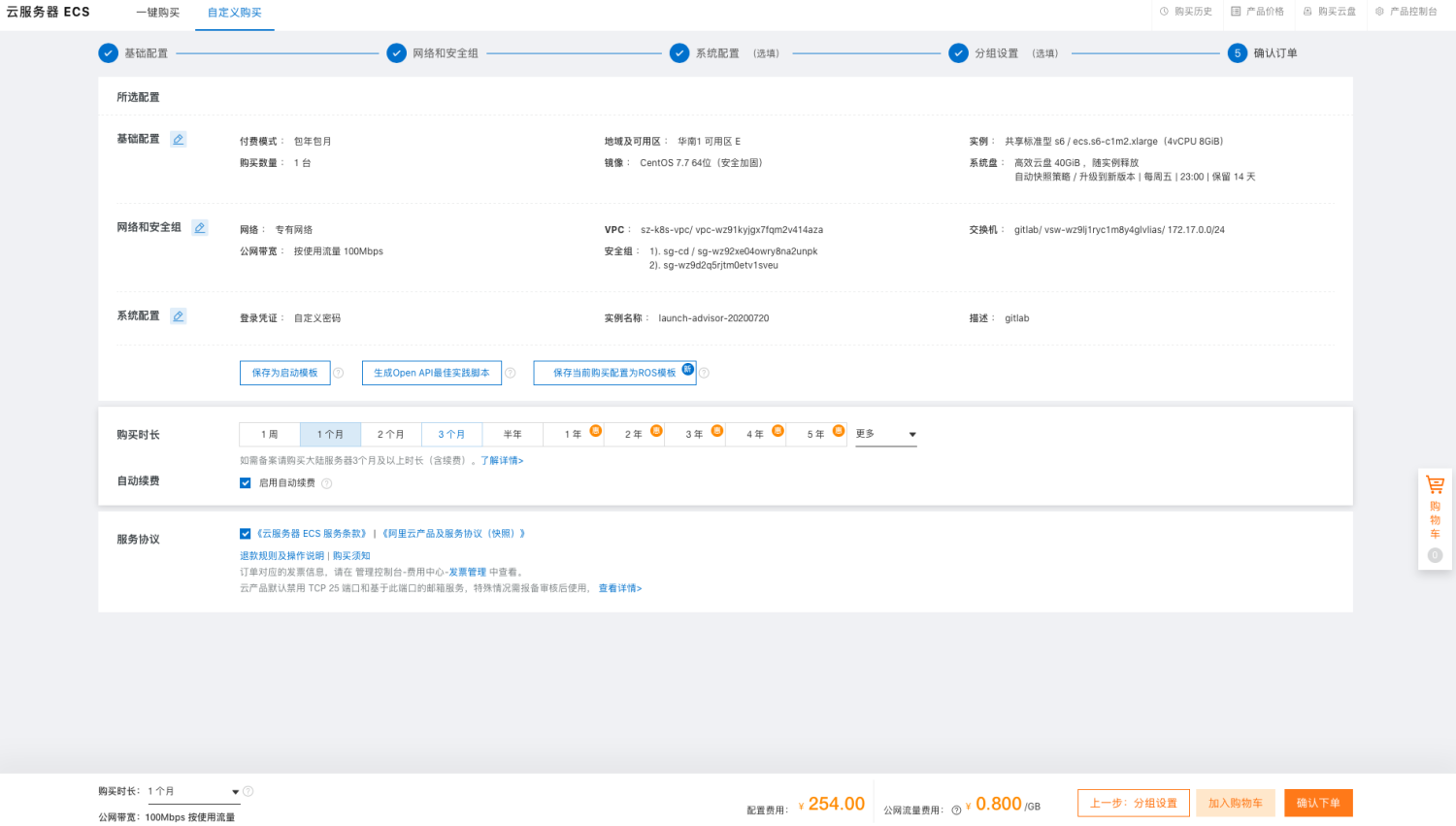
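`kubeadm init` is given `--pod-network-cidr=10.244.0.0/16`, while the Calico manifest carries its own pool setting (`CALICO_IPV4POOL_CIDR`, `192.168.0.0/16` in the stock file). A small check can confirm the two agree before `kubectl apply -f calico.yaml`. It is a sketch that assumes the usual `- name:` / `value:` layout of calico.yaml and only looks at an uncommented entry.

```bash
#!/usr/bin/env bash
# Print the value of an uncommented CALICO_IPV4POOL_CIDR env entry in a calico manifest.
# Assumes the common layout:
#   - name: CALICO_IPV4POOL_CIDR
#     value: "10.244.0.0/16"
calico_pool_cidr() {
  awk '
    /^[ \t]*-[ \t]*name:[ \t]*CALICO_IPV4POOL_CIDR[ \t]*$/ { found = 1; next }
    found && /value:/ {
      sub(/.*value:[ \t]*/, "")
      gsub(/"/, "")
      gsub(/[ \t]+$/, "")
      print
      exit
    }
  ' "$1"
}

# Compare the manifest pool with the CIDR passed to kubeadm init.
check_pod_cidr() {
  local manifest="$1" expected="$2" actual
  actual="$(calico_pool_cidr "$manifest")"
  if [ -z "$actual" ]; then
    echo "CALICO_IPV4POOL_CIDR is not set explicitly in ${manifest}; check which pool your Calico version uses" >&2
    return 1
  fi
  if [ "$actual" != "$expected" ]; then
    echo "mismatch: calico pool ${actual} vs --pod-network-cidr ${expected}" >&2
    return 1
  fi
  echo "ok: ${actual}"
}

# Usage: check_pod_cidr calico.yaml 10.244.0.0/16 && kubectl apply -f calico.yaml
```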

Deploying GitLab

On an ECS server with 4 vCPUs and 8 GB of RAM:

```bash
yum install vim gcc gcc-c++ wget net-tools lrzsz iotop lsof bash-completion -y
yum install curl policycoreutils openssh-server openssh-clients postfix -y
systemctl stop firewalld && systemctl disable firewalld
setenforce 0    # switch SELinux to permissive now; the sed below keeps it off after a reboot
sed -i '/SELINUX/s/enforcing/disabled/' /etc/sysconfig/selinux
wget https://mirrors.tuna.tsinghua.edu.cn/gitlab-ce/yum/el7/gitlab-ce-13.1.4-ce.0.el7.x86_64.rpm
yum localinstall -y gitlab-ce-13.1.4-ce.0.el7.x86_64.rpm
cp /etc/gitlab/gitlab.rb{,.bak}    # keep a backup of the default configuration
vim /etc/gitlab/gitlab.rb          # adjust settings such as external_url
gitlab-ctl reconfigure
ss -lntup                          # check the listening ports
```

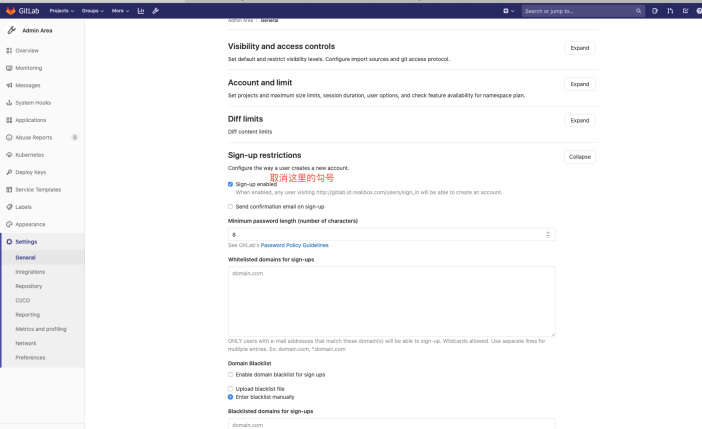

Disable user sign-up
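The `vim /etc/gitlab/gitlab.rb` step is where settings such as `external_url` (the address GitLab uses in clone links and that runners connect to) get changed. If you prefer a scripted, repeatable edit, a minimal sketch could look like this; it assumes GNU `sed -i` (as on CentOS) and a URL without `|` or `&`, and the example URL is a placeholder.

```bash
#!/usr/bin/env bash
# Set (or append) external_url in an Omnibus gitlab.rb, e.g. before `gitlab-ctl reconfigure`.
# Assumption: the URL contains no "|" or "&" (both are special in this sed replacement).
set_external_url() {
  local file="$1" url="$2"
  if grep -q '^external_url ' "$file"; then
    sed -i "s|^external_url .*|external_url '${url}'|" "$file"
  else
    printf "external_url '%s'\n" "$url" >> "$file"
  fi
}

# Usage (placeholder URL):
#   cp /etc/gitlab/gitlab.rb{,.bak}
#   set_external_url /etc/gitlab/gitlab.rb "http://gitlab.example.com"
#   gitlab-ctl reconfigure
```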

Deploying gitlab-runner

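GitLab CI is the continuous integration system that GitLab provides; since GitLab 8.0 it is built in and enabled by default. GitLab Runner works together with GitLab CI: every project in GitLab defines its own CI script, which can contain one or more stages such as build, compile, lint, test and deploy. When the project's code changes, GitLab triggers GitLab CI, which finds a previously registered GitLab Runner and notifies it to run the predefined script.

Traditionally, GitLab Runner is installed on one or a few machines, either in Docker or from source, and that setup has several pain points. A single machine failure takes down every runner on it. Runners on different machines are configured differently so they can handle different kinds of stages, which makes them tedious to manage. Resources are unevenly allocated: some runners are congested while others sit idle. And idle runners do not release the resources they hold. To address these problems we can run GitLab Runner inside a Kubernetes cluster and execute GitLab CI jobs dynamically.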

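The benefits are:

- High availability: when a node fails, Kubernetes automatically creates a new GitLab Runner container and mounts the same runner configuration, so the service stays available.

- Dynamic scaling and efficient resource use: for each job, GitLab Runner creates one or more temporary runner pods; when the job finishes, the temporary runner is cleaned up, its container deleted and its resources released. Kubernetes schedules temporary runners onto nodes with spare capacity, which reduces the chance of jobs queuing on an already overloaded node.

- Easy scaling out: when the cluster runs short of resources and temporary runners start queuing, simply add another Kubernetes node to scale horizontally.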

Deploying with Helm

https://www.cnblogs.com/bolingcavalry/p/13200977.html

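```bash
wget https://get.helm.sh/helm-v3.1.2-linux-amd64.tar.gz
tar xf helm-v3.1.2-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/
chmod +x /usr/local/bin/helm
helm version

helm repo add gitlab https://charts.gitlab.io
helm fetch gitlab/gitlab-runner --untar    # unpack the chart so its values can be edited
cd gitlab-runner/                           # edit values.yaml (gitlabUrl, runnerRegistrationToken, ...)
kubectl create namespace gitlab-runner      # Helm 3.1 does not create the namespace itself
helm install --name-template gitlab-runner -f values.yaml . --namespace gitlab-runner

# remove the release again
helm uninstall gitlab-runner --namespace gitlab-runner
```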
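`helm install ... -f values.yaml .` needs a values file that at least points the chart at GitLab and carries the registration token. Instead of editing the chart's own `values.yaml`, a small override file is enough. A sketch that writes one; the keys follow the gitlab-runner chart's `values.yaml` of that era (`gitlabUrl`, `runnerRegistrationToken`, `concurrent`, `rbac.create`), so check them against the chart version you fetched, and the token is a placeholder.

```bash
#!/usr/bin/env bash
# Write a minimal override file for the gitlab/gitlab-runner chart.
# Keys mirror the chart's values.yaml; verify them for your chart version.
write_runner_values() {
  local file="$1" url="$2" token="$3" concurrent="${4:-4}"
  cat > "$file" <<EOF
gitlabUrl: ${url}
runnerRegistrationToken: "${token}"
concurrent: ${concurrent}
rbac:
  create: true
EOF
}

# Usage (REPLACE_ME is a placeholder for the real registration token):
#   write_runner_values my-values.yaml "http://gitlab.st.zisefeizhubox.com/" "REPLACE_ME" 4
#   helm install --name-template gitlab-runner -f my-values.yaml . --namespace gitlab-runner
```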

Deploying as a StatefulSet

Namespace

```
# cat gitlab-runner-namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: gitlab-runner

# kubectl apply -f gitlab-runner-namespace.yaml
namespace/gitlab-runner created
```

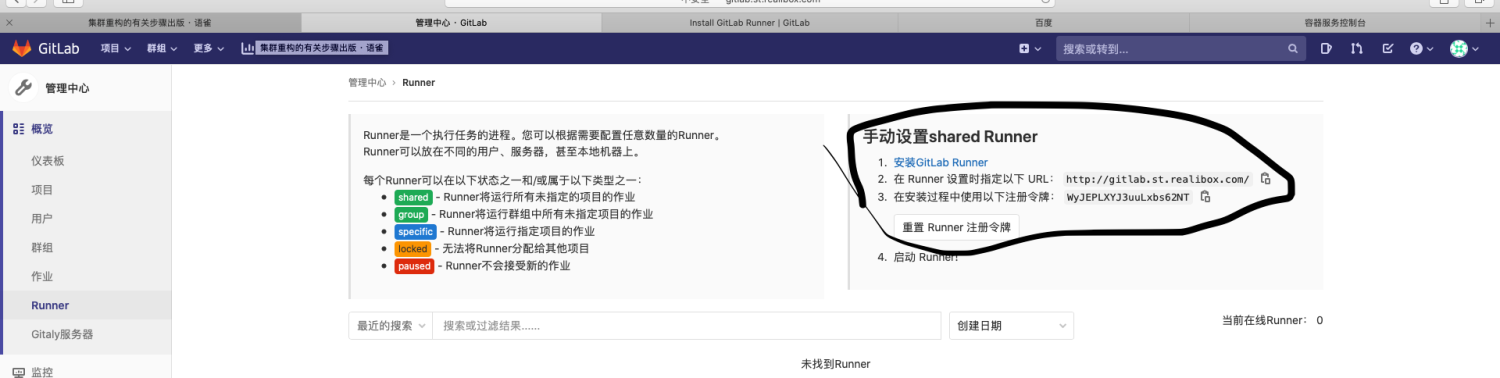

The token used to register, run and unregister the GitLab CI runner, stored in a Secret. Encode it with `echo -n` so that no trailing newline ends up inside the value:

```
# echo -n "WyJEPLXYJ3uuLxbs62NT" | base64
V3lKRVBMWFlKM3V1THhiczYyTlQ=

# cat gitlab-runner-token-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: gitlab-ci-token
  namespace: gitlab-runner
  labels:
    app: gitlab-ci-token
data:
  GITLAB_CI_TOKEN: V3lKRVBMWFlKM3V1THhiczYyTlQ=

# kubectl apply -f gitlab-runner-token-secret.yaml
secret/gitlab-ci-token created
```
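Secret `data` values are the base64 encoding of the exact bytes. Plain `echo` appends a newline, which then becomes part of the decoded token; `printf '%s'` (or `echo -n`) avoids that. A tiny helper, plus the `kubectl create secret` alternative, which does the encoding itself:

```bash
#!/usr/bin/env bash
# Base64-encode a token without the trailing newline that plain `echo` would add.
b64_token() {
  printf '%s' "$1" | base64
}

# Alternative: let kubectl encode the value (names match the Secret manifest):
#   kubectl create secret generic gitlab-ci-token -n gitlab-runner \
#     --from-literal=GITLAB_CI_TOKEN='<registration token>'
```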

Store a small script in a ConfigMap that registers, runs and unregisters the GitLab CI runner:

```
# cat gitlab-runner-scripts-cm.yaml
apiVersion: v1
data:
  run.sh: |
    #!/bin/bash
    unregister() {
      kill %1
      echo "Unregistering runner ${RUNNER_NAME} ..."
      /usr/bin/gitlab-ci-multi-runner unregister -t "$(/usr/bin/gitlab-ci-multi-runner list 2>&1 | tail -n1 | awk '{print $4}' | cut -d'=' -f2)" -n ${RUNNER_NAME}
      exit $?
    }
    trap 'unregister' EXIT HUP INT QUIT PIPE TERM
    echo "Registering runner ${RUNNER_NAME} ..."
    /usr/bin/gitlab-ci-multi-runner register -r ${GITLAB_CI_TOKEN}
    sed -i 's/^concurrent.*/concurrent = '"${RUNNER_REQUEST_CONCURRENCY}"'/' /home/gitlab-runner/.gitlab-runner/config.toml
    echo "Starting runner ${RUNNER_NAME} ..."
    /usr/bin/gitlab-ci-multi-runner run -n ${RUNNER_NAME} &
    wait
kind: ConfigMap
metadata:
  labels:
    app: gitlab-ci-runner
  name: gitlab-ci-runner-scripts
  namespace: gitlab-runner

# kubectl apply -f gitlab-runner-scripts-cm.yaml
configmap/gitlab-ci-runner-scripts created
```
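The `sed` line in `run.sh` rewrites the top-level `concurrent` setting that `register` writes into `config.toml`, so that `RUNNER_REQUEST_CONCURRENCY` from the ConfigMap takes effect. Isolated as a function, with an input check that is my own addition, it can be tried on a scratch file:

```bash
#!/usr/bin/env bash
# Replace the global "concurrent = N" line in a gitlab-runner config.toml.
set_concurrency() {
  local config="$1" n="$2"
  # Extra guard (not in the original run.sh): only accept positive integers.
  case "$n" in
    ''|*[!0-9]*|0) echo "concurrency must be a positive integer, got '${n}'" >&2; return 1 ;;
  esac
  sed -i "s/^concurrent.*/concurrent = ${n}/" "$config"
}

# Usage: set_concurrency /home/gitlab-runner/.gitlab-runner/config.toml "$RUNNER_REQUEST_CONCURRENCY"
```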

Use a ConfigMap to pass the environment variables the runner image needs:

```
# cat gitlab-runner-cm.yaml
apiVersion: v1
data:
  REGISTER_NON_INTERACTIVE: "true"
  REGISTER_LOCKED: "false"
  METRICS_SERVER: "0.0.0.0:9100"
  CI_SERVER_URL: "http://gitlab.st.zisefeizhubox.com/ci"
  RUNNER_REQUEST_CONCURRENCY: "4"
  RUNNER_EXECUTOR: "kubernetes"
  KUBERNETES_NAMESPACE: "gitlab-runner"
  KUBERNETES_PRIVILEGED: "true"
  KUBERNETES_CPU_LIMIT: "1"
  KUBERNETES_CPU_REQUEST: "500m"
  KUBERNETES_MEMORY_LIMIT: "1Gi"
  KUBERNETES_SERVICE_CPU_LIMIT: "1"
  KUBERNETES_SERVICE_MEMORY_LIMIT: "1Gi"
  KUBERNETES_HELPER_CPU_LIMIT: "500m"
  KUBERNETES_HELPER_MEMORY_LIMIT: "100Mi"
  KUBERNETES_PULL_POLICY: "if-not-present"
  KUBERNETES_TERMINATIONGRACEPERIODSECONDS: "10"
  KUBERNETES_POLL_INTERVAL: "5"
  KUBERNETES_POLL_TIMEOUT: "360"
kind: ConfigMap
metadata:
  labels:
    app: gitlab-ci-runner
  name: gitlab-ci-runner-cm
  namespace: gitlab-runner

# kubectl apply -f gitlab-runner-cm.yaml
configmap/gitlab-ci-runner-cm created
```
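Every value in that `data:` block is quoted because ConfigMap data must be strings; an unquoted `4` or `true` would be parsed as a number or boolean and rejected. If the variables live in a plain `KEY=VALUE` file, a sketch like this can generate the block with the quoting applied consistently. It assumes values contain no double quotes or backslashes, and `runner.env` is a hypothetical file name. (`kubectl create configmap ... --from-env-file=runner.env` is the built-in route.)

```bash
#!/usr/bin/env bash
# Turn KEY=VALUE lines (stdin) into a ConfigMap "data:" block with every value quoted.
# Blank lines and lines starting with "#" are skipped.
# Assumption: values contain no double quotes or backslashes.
env_to_configmap_data() {
  local line key value
  echo "data:"
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      ''|'#'*) continue ;;
    esac
    key="${line%%=*}"     # text before the first "="
    value="${line#*=}"    # text after the first "="
    printf '  %s: "%s"\n' "$key" "$value"
  done
}

# Usage: env_to_configmap_data < runner.env
```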

The RBAC manifest that grants the runner its Kubernetes permissions:

```
# cat gitlab-runner-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: gitlab-ci
  namespace: gitlab-runner
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: gitlab-ci
  namespace: gitlab-runner
rules:
  - apiGroups: [""]
    resources: ["*"]
    verbs: ["*"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: gitlab-ci
  namespace: gitlab-runner
subjects:
  - kind: ServiceAccount
    name: gitlab-ci
    namespace: gitlab-runner
roleRef:
  kind: Role
  name: gitlab-ci
  apiGroup: rbac.authorization.k8s.io

# kubectl apply -f gitlab-runner-rbac.yaml
serviceaccount/gitlab-ci created
role.rbac.authorization.k8s.io/gitlab-ci created
rolebinding.rbac.authorization.k8s.io/gitlab-ci created
```
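The Role grants every verb on every core-API resource in the `gitlab-runner` namespace. To see what the ServiceAccount can actually do, `kubectl auth can-i` with impersonation (`--as`) is handy. The operations checked below are my guess at what the Kubernetes executor needs, not an authoritative list.

```bash
#!/usr/bin/env bash
# Username Kubernetes assigns to a ServiceAccount, for use with `kubectl --as`.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the runner's ServiceAccount may perform a few
# operations the executor relies on (illustrative, not exhaustive).
check_runner_rbac() {
  local ns="$1" sa="$2" who
  who="$(sa_user "$ns" "$sa")"
  kubectl auth can-i create pods --as="$who" -n "$ns"
  kubectl auth can-i create pods/exec --as="$who" -n "$ns"
  kubectl auth can-i delete pods --as="$who" -n "$ns"
  kubectl auth can-i create secrets --as="$who" -n "$ns"
}

# Usage: check_runner_rbac gitlab-runner gitlab-ci   # each check should print "yes"
```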


Create the GitLab Runner StatefulSet in the cluster:

```
# cat gitlab-runner-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: gitlab-ci-runner
  namespace: gitlab-runner
  labels:
    app: gitlab-ci-runner
spec:
  updateStrategy:
    type: RollingUpdate
  replicas: 2
  selector:
    matchLabels:
      app: gitlab-ci-runner
  serviceName: gitlab-ci-runner
  template:
    metadata:
      labels:
        app: gitlab-ci-runner
    spec:
      volumes:
      - name: gitlab-ci-runner-scripts
        projected:
          sources:
          - configMap:
              name: gitlab-ci-runner-scripts
              items:
              - key: run.sh
                path: run.sh
                mode: 0755
      serviceAccountName: gitlab-ci
      securityContext:
        runAsNonRoot: true
        runAsUser: 999
        supplementalGroups: [999]
      containers:
      - image: gitlab/gitlab-runner:latest
        name: gitlab-ci-runner
        command:
        - /scripts/run.sh
        envFrom:
        - configMapRef:
            name: gitlab-ci-runner-cm
        - secretRef:
            name: gitlab-ci-token
        env:
        - name: RUNNER_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        ports:
        - containerPort: 9100
          name: http-metrics
          protocol: TCP
        volumeMounts:
        - name: gitlab-ci-runner-scripts
          mountPath: "/scripts"
          readOnly: true
      restartPolicy: Always

# kubectl apply -f gitlab-runner-statefulset.yaml
statefulset.apps/gitlab-ci-runner created

# kubectl get pods -n gitlab-runner -w
NAME                 READY   STATUS              RESTARTS   AGE
gitlab-ci-runner-0   0/1     ContainerCreating   0          10s
gitlab-ci-runner-0   1/1     Running             0          26s
```
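`RUNNER_NAME` comes from the downward API (`metadata.name`), and StatefulSet pods get stable ordinal names, so each replica registers and later unregisters under a predictable name such as `gitlab-ci-runner-0`. By default (`podManagementPolicy: OrderedReady`) the replicas are also created one at a time in ordinal order. The naming rule as a tiny function:

```bash
#!/usr/bin/env bash
# StatefulSet pods are named <statefulset-name>-<ordinal>, ordinals starting at 0.
sts_pod_names() {
  local name="$1" replicas="$2" i
  for ((i = 0; i < replicas; i++)); do
    printf '%s-%d\n' "$name" "$i"
  done
}

# Usage: sts_pod_names gitlab-ci-runner 2
```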

The test environment uses the same configuration.

Test environment

Pre-release environment

```
# tar cvf gitlab-runner.tar.gz ./*
a ./gitlab-runner-cm.yaml
a ./gitlab-runner-namespace.yaml
a ./gitlab-runner-rbac.yaml
a ./gitlab-runner-scripts-cm.yaml
a ./gitlab-runner-statefulset.yaml
a ./gitlab-runner-token-secret.yaml

[root@localhost gitlab-runner]# tar xf gitlab-runner.tar.gz
[root@localhost gitlab-runner]# ll
total 36
-rw-r--r--. 1 root wheel  831 Jul 20 23:27 gitlab-runner-cm.yaml
-rw-r--r--. 1 root wheel   63 Jul 20 23:05 gitlab-runner-namespace.yaml
-rw-r--r--. 1 root wheel  547 Jul 20 23:31 gitlab-runner-rbac.yaml
-rw-r--r--. 1 root wheel  853 Jul 20 23:17 gitlab-runner-scripts-cm.yaml
-rw-r--r--. 1 root wheel 1315 Jul 20 23:54 gitlab-runner-statefulset.yaml
-rw-r--r--. 1 root root  9728 Jul 21 03:24 gitlab-runner.tar.gz
-rw-r--r--. 1 root wheel  178 Jul 20 23:14 gitlab-runner-token-secret.yaml
[root@localhost gitlab-runner]# kubectl apply -f .
namespace/gitlab-runner created
serviceaccount/gitlab-ci created
role.rbac.authorization.k8s.io/gitlab-ci created
rolebinding.rbac.authorization.k8s.io/gitlab-ci created
configmap/gitlab-ci-runner-scripts created
statefulset.apps/gitlab-ci-runner created
secret/gitlab-ci-token created
configmap/gitlab-ci-runner-cm created
[root@localhost gitlab-runner]# kubectl get pods -n gitlab-runner -w
NAME                 READY   STATUS    RESTARTS   AGE
gitlab-ci-runner-0   1/1     Running   0          36s
gitlab-ci-runner-1   1/1     Running   0          1s
```

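GitLab CI with the Aliyun image registry & debugging against different Kubernetes clusters

GitLab CI documentation

1. GitLab CI/CD build implementation (basics): https://www.jianshu.com/p/b69304279c5f

2. Configuring a GitLab pipeline with gitlab-ci.yml for continuous integration (parameter reference): https://www.jianshu.com/p/617f035f01b8

3. Continuous integration with gitlab-ci.yml (commands and examples): https://segmentfault.com/a/1190000019540360

4. Official GitLab documentation: https://docs.gitlab.com/ee/ci/yaml/README.html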

Integrating GitLab CI with the Aliyun image registry

Note: this job runs into the Docker-in-Docker problem: the `docker` client in the job container needs a Docker daemon to talk to (the later `image_build` job adds a `docker:18.03-dind` service for this).

```yaml
# cat .gitlab-ci.yml
# default image for all jobs
image: busybox:latest

before_script:
  - export REGISTRY_IMAGE="${ALI_IMAGE_REGISTRY}"/gitlab-test/"${CI_PROJECT_NAME}":"${CI_COMMIT_REF_NAME//\.}"-"${CI_PIPELINE_ID}"

stages:
  - build
  - deploy

docker_build_job:
  stage: build
  tags:
    - advance
  image: docker:latest
  script:
    - docker login -u "${ALI_IMAGE_USER}" -p "${ALI_IMAGE_PASSWORD}" "${ALI_IMAGE_REGISTRY}"
    - docker build . -t "$REGISTRY_IMAGE" --build-arg CI_COMMIT_SHORT_SHA=$CI_COMMIT_SHORT_SHA --build-arg NODE_DEPLOY_ENV=$NODE_DEPLOY_ENV --build-arg DEPLOY_VERSION=$DEPLOY_VERSION --build-arg API_SERVER_HOST=$API_SERVER_HOST --build-arg COOKIE_DOMAIN=$COOKIE_DOMAIN --build-arg LOGIN_HOST=$LOGIN_HOST
    - docker push "${REGISTRY_IMAGE}"
```
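The `before_script` builds the image tag from `${CI_COMMIT_REF_NAME//\.}` (the branch or tag name with every dot removed) plus the pipeline ID. Here is the same logic as a bash function, with one addition of mine: `/` is replaced by `-`, because a branch such as `feature/login` would otherwise produce an invalid image tag. GitLab's predefined `CI_COMMIT_REF_SLUG` is a built-in alternative that is already safe to use in tags.

```bash
#!/usr/bin/env bash
# Build an image tag like "<ref-without-dots>-<pipeline-id>".
image_tag() {
  local ref="${1//./}"   # same effect as ${CI_COMMIT_REF_NAME//\.}: drop every "."
  ref="${ref//\//-}"     # addition: "/" is not allowed in image tags
  printf '%s-%s\n' "$ref" "$2"
}

# Usage inside a job (bash assumed):
#   export REGISTRY_IMAGE="${ALI_IMAGE_REGISTRY}/gitlab-test/${CI_PROJECT_NAME}:$(image_tag "$CI_COMMIT_REF_NAME" "$CI_PIPELINE_ID")"
```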

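Integrating GitLab CI with the Kubernetes clusters

Note: the test environment is on the intranet, so its Kubernetes cluster cannot be reached from the public network. A gitlab-runner installed on an external bare-metal machine could not talk to the test cluster at all; with gitlab-runner running inside Kubernetes, the question becomes how to make each runner job operate on the cluster that belongs to its branch.

My solution is to build three kubectl images, one per cluster, and reference them in the GitLab CI scripts.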

Building the kubectl image, using the test environment as the example (built on the Hong Kong server):

```
# pwd
/root/linkun/gitlab-runner/test
# ll
total 20
drwxr-xr-x 2 root root 4096 Jul 23 16:38 ./
drwxr-xr-x 4 root root 4096 Jul 23 15:55 ../
-rw-r--r-- 1 root root 5451 Jul 23 16:31 config
-rw-r--r-- 1 root root  545 Jul 23 16:38 Dockerfile
```

Here `config` is the cluster's `/root/.kube/config`; it is confidential, so it is not shown.

```dockerfile
# cat Dockerfile
FROM alpine:3.8
MAINTAINER cnych <icnych@gmail.com>
ENV KUBE_LATEST_VERSION="v1.17.5"
RUN apk add --update ca-certificates \
 && apk add --update -t deps curl \
 && apk add --update gettext \
 && apk add --update git \
 && curl -L https://storage.googleapis.com/kubernetes-release/release/${KUBE_LATEST_VERSION}/bin/linux/amd64/kubectl -o /usr/local/bin/kubectl \
 && chmod +x /usr/local/bin/kubectl \
 && apk del --purge deps \
 && rm /var/cache/apk/*
RUN mkdir /root/.kube
COPY config /root/.kube/
ENTRYPOINT ["kubectl"]
CMD ["--help"]
```

```
# docker build -t registry.cn-shenzhen.aliyuncs.com/zisefeizhu/kubectl:test .
# docker push registry.cn-shenzhen.aliyuncs.com/zisefeizhu/kubectl:test
```

Aliyun image registry

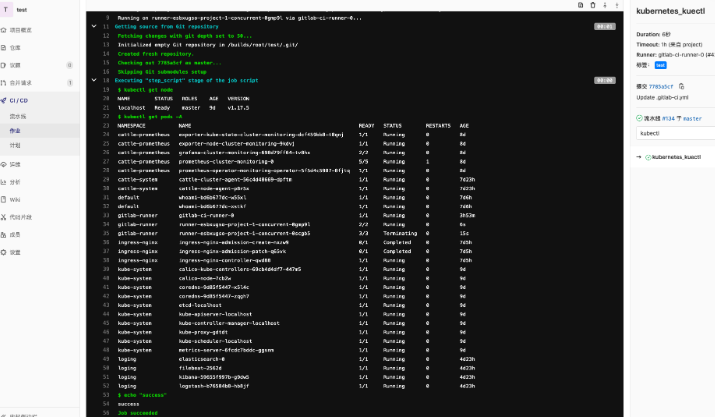
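With one kubectl image per cluster, each deploy job only has to pick the right image for its branch. A sketch of that mapping; the branch names and the `:advance`/`:prod` tags are hypothetical (only `:test` is built above). In `.gitlab-ci.yml` the same choice is usually expressed declaratively, with one deploy job per environment, each with its own `image:` and an `only:`/`rules:` condition. Keep in mind that these images contain a full kubeconfig, so anyone who can pull them can reach the cluster; the registry namespace must stay private.

```bash
#!/usr/bin/env bash
# Hypothetical branch -> kubectl image mapping (one image per cluster).
KUBECTL_REPO="registry.cn-shenzhen.aliyuncs.com/zisefeizhu/kubectl"

kubectl_image_for_ref() {
  case "$1" in
    master)            echo "${KUBECTL_REPO}:prod" ;;     # hypothetical production image
    release/*|advance) echo "${KUBECTL_REPO}:advance" ;;  # hypothetical pre-release image
    *)                 echo "${KUBECTL_REPO}:test" ;;     # intranet test cluster
  esac
}

# Usage: kubectl_image_for_ref "$CI_COMMIT_REF_NAME"
```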

GitLab CI script

```yaml
stages:
  - kubectl

kubernetes_kubectl:
  tags:
    - test
  stage: kubectl
  image: "${KUBECTL_ADVANCE}"
  script:
    - kubectl get node
    - kubectl get pods -A
    # - sleep 500
    - echo "success"
```

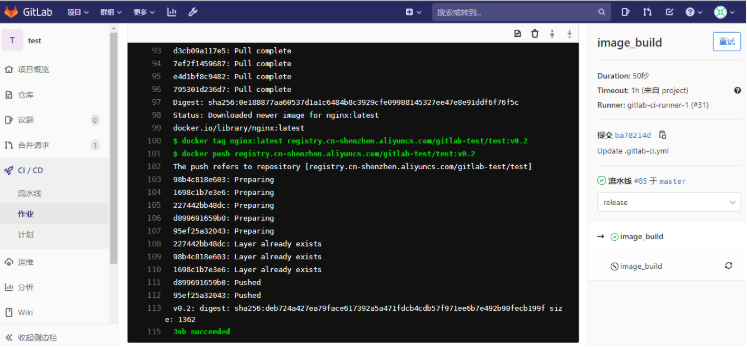

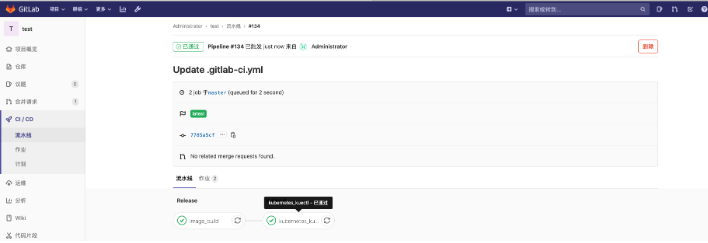

Inspecting the job

Inspecting the kubectl job

This confirms that GitLab CI works with the Aliyun image registry and with the different Kubernetes clusters 👌

Environment variables

Predefined variables

Predefined `.gitlab-ci.yml` variables (system level)

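| Name | Description |
|---|---|
| $CI_PROJECT_NAME | Project name |
| $CI_PROJECT_NAMESPACE | Group (namespace) name |
| $CI_PROJECT_PATH | Project path including the namespace |
| $CI_PROJECT_URL | Project URL |
| $GITLAB_USER_NAME | Name of the user who started the job |
| $GITLAB_USER_EMAIL | Email of the user who started the job |
| $CI_PROJECT_DIR | Absolute path where the repository is cloned |
| $CI_PIPELINE_ID | Pipeline ID |
| $CI_COMMIT_REF_NAME | Branch or tag being built |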

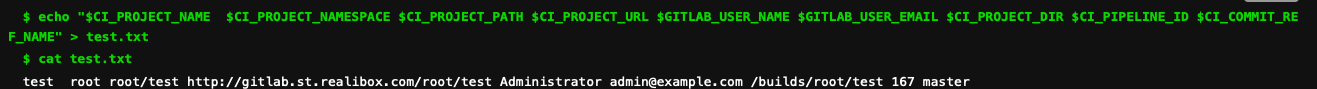

Demo

Custom variables

Demo

```yaml
stages:
  - neizhi
  - release
  - kubectl

neizhibianliang:
  tags:
    - advance
  stage: neizhi
  script:
    - echo "$CI_PROJECT_NAME $CI_PROJECT_NAMESPACE $CI_PROJECT_PATH $CI_PROJECT_URL $GITLAB_USER_NAME $GITLAB_USER_EMAIL $CI_PROJECT_DIR $CI_PIPELINE_ID $CI_COMMIT_REF_NAME" > test.txt
    - cat test.txt
```

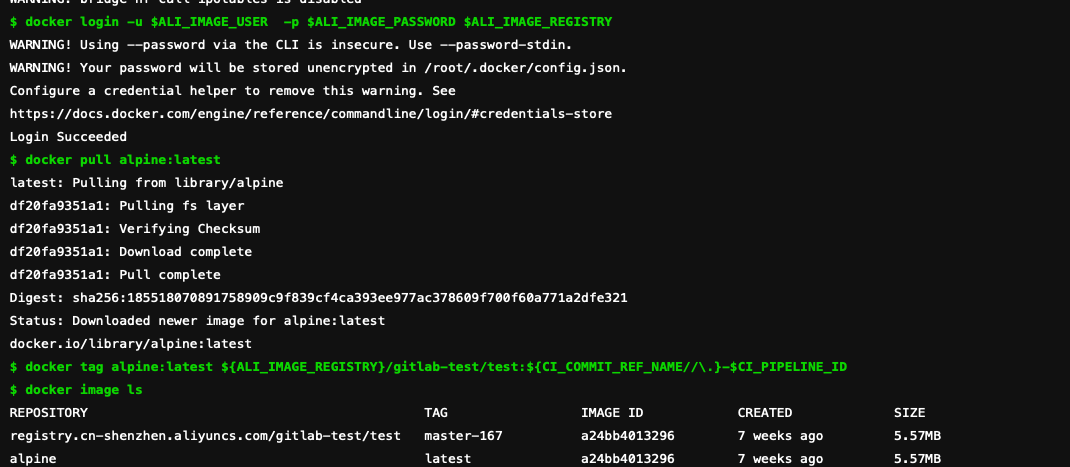

```yaml
image_build:
  tags:
    - advance
  stage: release
  image: docker:latest
  variables:
    DOCKER_DRIVER: overlay
    DOCKER_HOST: tcp://localhost:2375
  services:
    - name: docker:18.03-dind
      command: ["--insecure-registry=registry.cn-shenzhen.aliyuncs.com"]
  script:
    - docker info
    - docker login -u $ALI_IMAGE_USER -p $ALI_IMAGE_PASSWORD $ALI_IMAGE_REGISTRY
    - docker pull alpine:latest
    - docker tag alpine:latest ${ALI_IMAGE_REGISTRY}/gitlab-test/test:${CI_COMMIT_REF_NAME//\.}-$CI_PIPELINE_ID
    - docker image ls

kubernetes_kubectl:
  tags:
    - advance
  stage: kubectl
  image: $KUBECTL_ADVANCE
  script:
    - kubectl get node
    - kubectl get pods -A
    - echo "success"
```
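In `image_build`, the `docker:18.03-dind` service runs as an extra container in the same job pod, which is why `DOCKER_HOST: tcp://localhost:2375` reaches it. The daemon can take a few seconds to come up, so the first `docker` command may fail with a connection error. A generic retry helper (my addition, written with POSIX `sh` features plus `local`, since the stock `docker` image ships busybox `sh` rather than bash) could guard it:

```bash
#!/usr/bin/env bash
# retry ATTEMPTS DELAY COMMAND [ARGS...]
# Run COMMAND until it succeeds or ATTEMPTS runs are used up, sleeping DELAY seconds in between.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while :; do
    "$@" && return 0
    if [ "$i" -ge "$attempts" ]; then
      echo "giving up after ${attempts} attempts: $*" >&2
      return 1
    fi
    echo "attempt ${i}/${attempts} failed: $*; retrying in ${delay}s" >&2
    i=$((i + 1))
    sleep "$delay"
  done
}

# Usage as the first line of the image_build script:
#   retry 30 2 docker info >/dev/null
```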

Defining credentials as custom variables keeps sensitive information out of the repository and prevents it from leaking!
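Custom variables such as `ALI_IMAGE_USER`, `ALI_IMAGE_PASSWORD`, `ALI_IMAGE_REGISTRY` and `KUBECTL_ADVANCE` are defined under the project's Settings > CI/CD > Variables rather than in the repository (marking secrets as Masked also keeps them out of job logs). When one is missing, the failure tends to surface later as a confusing `docker login` or image-pull error. A fail-fast check at the top of a job is one option; this sketch avoids bash-only syntax so it also runs under busybox `sh`.

```bash
#!/usr/bin/env bash
# Fail fast when required CI/CD variables are missing or empty.
require_vars() {
  local name value missing=0
  for name in "$@"; do
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "not a valid variable name: '${name}'" >&2
        missing=1
        continue ;;
    esac
    eval "value=\${${name}:-}"   # safe: the name was validated above
    if [ -z "$value" ]; then
      echo "missing CI/CD variable: ${name}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage at the top of a job script:
#   require_vars ALI_IMAGE_USER ALI_IMAGE_PASSWORD ALI_IMAGE_REGISTRY || exit 1
```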

GitLab CI keywords

Advanced GitLab CI tips: https://www.jianshu.com/p/3c0cbb6c2936

Example project

.gitlab-ci.yml

```yaml
image:
  name: golang:1.13.2-stretch
  entrypoint: ["/bin/sh", "-c"]

# The problem is that to be able to use go get, one needs to put
# the repository in the $GOPATH. So for example if your gitlab domain
# is mydomainperso.com, and that your repository is repos/projectname, and
# the default GOPATH being /go, then you'd need to have your
# repository in /go/src/mydomainperso.com/repos/projectname
# Thus, making a symbolic link corrects this.
before_script:
  - pwd
  - ls -al
  - mkdir -p "/go/src/git.qikqiak.com/${CI_PROJECT_NAMESPACE}"
  - ls -al "/go/src/git.qikqiak.com/"
  - ln -sf "${CI_PROJECT_DIR}" "/go/src/git.qikqiak.com/${CI_PROJECT_PATH}"
  - ls -la "${CI_PROJECT_DIR}"
  - pwd
  - cd "/go/src/git.qikqiak.com/${CI_PROJECT_PATH}/"
  - pwd
  - export REGISTRY_IMAGE="${ALI_IMAGE_REGISTRY}"/gitlab-test/"${CI_PROJECT_NAME}":"${CI_COMMIT_REF_NAME//\.}"-"${CI_PIPELINE_ID}"

variables:
  NAMESPACE: gitlab-runner
  PORT: 8000

stages:
  - test
  - build
  - release
  - review

test:
  tags:
    - advance
  stage: test
  script:
    - make test

test2:
  tags:
    - advance
  stage: test
  script:
    - echo "We did it! Something else runs in parallel!"

compile:
  tags:
    - advance
  stage: build
  script:
    # Add here all the dependencies, or use glide/govendor/...
    # to get them automatically.
    - make build
  artifacts:
    paths:
      - app

image_build:
  tags:
    - advance
  stage: release
  image: docker:latest
  script:
    - docker info
    - docker login -u "${ALI_IMAGE_USER}" -p "${ALI_IMAGE_PASSWORD}" "${ALI_IMAGE_REGISTRY}"
    - docker build -t "${REGISTRY_IMAGE}" .
    - docker push "${REGISTRY_IMAGE}"

deploy_review:
  tags:
    - advance
  image: "${KUBECTL_ADVANCE}"
  stage: review
  environment:
    name: stage-studio
    url: http://studio.advance.zisefeizhubox.com/api-ews/
  script:
    - kubectl version
    - cd manifests/
    - sed -i "s/__ALI_IMAGE_REGISTRY__/${ALI_IMAGE_REGISTRY}/" secret-namespace-advance.sh
    - sed -i "s/__ALI_IMAGE_USER__/${ALI_IMAGE_USER}/" secret-namespace-advance.sh
    - sed -i "s/__ALI_IMAGE_PASSWORD__/${ALI_IMAGE_PASSWORD}/" secret-namespace-advance.sh
    - sed -i "s/__NAMESPACE__/${NAMESPACE}/g" secret-namespace-advance.sh deployment.yaml svc.yaml ingress.yaml zisefeizhubox-namespace.yaml
    - sed -i "s/__CI_PROJECT_NAME__/${CI_PROJECT_NAME}/g" deployment.yaml svc.yaml ingress.yaml
    - sed -i "s/__VERSION__/${CI_COMMIT_REF_NAME//\.}-${CI_PIPELINE_ID}/" deployment.yaml
    - sed -i "s/__PORT__/${PORT}/g" deployment.yaml svc.yaml ingress.yaml
    # note: after the sed above, this prints the registry password into the job log
    - cat secret-namespace-advance.sh
    - cat deployment.yaml
    - cat svc.yaml
    - cat ingress.yaml
    - |
      if kubectl apply -f zisefeizhubox-namespace.yaml | grep -q unchanged; then
        echo "=> The namespace already exists."
      else
        echo "=> The namespace is created"
      fi
    - |
      # assumes the script exits non-zero when the secret already exists
      if sh -x secret-namespace-advance.sh; then
        echo "=> The secret is created"
      else
        echo "=> The secret already exists."
      fi
    - |
      if kubectl apply -f deployment.yaml | grep -q unchanged; then
        echo "=> Patching deployment to force image update."
        kubectl patch -f deployment.yaml -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"ci-last-updated\":\"$(date +'%s')\"}}}}}"
      else
        echo "=> Deployment apply has changed the object, no need to force image update."
      fi
    - kubectl apply -f svc.yaml
    - kubectl apply -f ingress.yaml
    - kubectl rollout status -f deployment.yaml
    - kubectl get all -l name=${CI_PROJECT_NAME} -n ${NAMESPACE}
```
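The `deploy_review` job fills `__PLACEHOLDER__` markers with `sed -i "s/.../${VALUE}/"`, which breaks as soon as a value contains `/`, `&` or `\` (quite possible for a registry password). Below is a sketch that escapes the value first, plus the annotation patch used to force a new rollout when the manifest itself is unchanged. (`kubectl rollout restart deployment/<name>`, available since kubectl 1.15, achieves the same.) It assumes GNU `sed`, single-line values, and placeholders made only of letters, digits and underscores.

```bash
#!/usr/bin/env bash
# Escape "\", "/" and "&" so sed's s/// replacement treats the value literally.
sed_escape() {
  printf '%s\n' "$1" | sed -e 's|[/&\\]|\\&|g'
}

# Replace every occurrence of a placeholder (e.g. __ALI_IMAGE_PASSWORD__) in a file.
render_placeholder() {
  local file="$1" key="$2" value
  value="$(sed_escape "$3")"
  sed -i "s/${key}/${value}/g" "$file"
}

# Patch body for `kubectl patch` (default strategic merge patch) that bumps a
# pod-template annotation, which makes the Deployment roll out new pods.
rollout_patch() {
  printf '{"spec":{"template":{"metadata":{"annotations":{"ci-last-updated":"%s"}}}}}\n' "$1"
}

# Usage inside the job:
#   render_placeholder secret-namespace-advance.sh __ALI_IMAGE_PASSWORD__ "$ALI_IMAGE_PASSWORD"
#   kubectl patch -f deployment.yaml -p "$(rollout_patch "$(date +%s)")"
```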

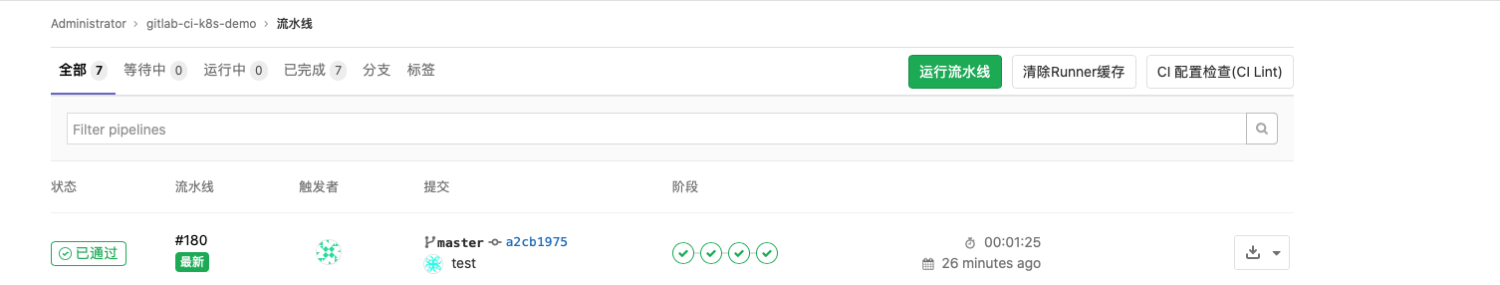

Project pipeline

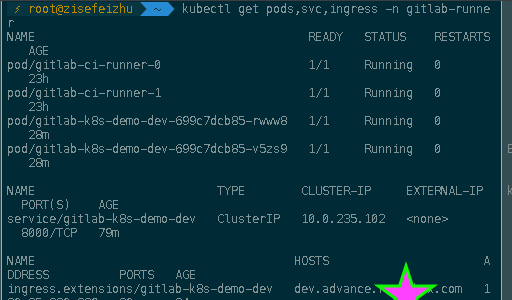

Inspecting the project's resources

Accessing the project's web page

👌!
