Ceph deployment

Cluster time synchronization

In the earlier post on deploying highly available Kubernetes 1.17.2 with kubeasz and setting up traefik 2.1.2, we already configured chrony-based time synchronization.

For the bs-k8s-ceph and bs-k8s-gitlab nodes:

[root@bs-k8s-master01 ~]# scp /etc/chrony.conf 20.0.0.208:/etc/chrony.conf

[root@bs-k8s-master01 ~]# scp /etc/chrony.conf 20.0.0.209:/etc/chrony.conf

# systemctl restart chronyd.service

# chronyc sources -v

===============================================================================

^* 20.0.0.202 3 6 17 9 +2890ns[ -24us] +/- 21ms
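A quick way to confirm that a node has locked onto a time source is to look for the `^*` marker that chronyc prints in front of the currently selected source. The sketch below is only an illustration: the function name `has_selected_source` is made up here, and it reads `chronyc sources` output from standard input.

```shell
#!/bin/bash
# has_selected_source: hypothetical helper, not part of chrony.
# Reads `chronyc sources` output on stdin and succeeds if some line
# starts with "^*", the marker chronyc uses for the selected source.
has_selected_source() {
    grep -q '^\^\*'
}

# Sample line copied from the output above.
sample='^* 20.0.0.202 3 6 17 9 +2890ns[ -24us] +/- 21ms'
if printf '%s\n' "$sample" | has_selected_source; then
    echo "synchronized"
else
    echo "not synchronized"
fi
```

On a live node the same check would be `chronyc sources | has_selected_source`.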

Passwordless SSH authentication

bs-k8s-ceph

# cat /service/scripts/ssh-key.sh

#!/bin/bash

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-03-17

#FileName: /service/scripts/ssh-key.sh

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

##########################################################################

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

export PATH

# Target host list

IP="

20.0.0.209

bs-k8s-ceph

20.0.0.207

bs-k8s-harbor

20.0.0.208

bs-k8s-gitlab

"

for node in ${IP};do

sshpass -p 1 ssh-copy-id -o StrictHostKeyChecking=no ${node}

if [ $? -eq 0 ];then

echo "${node}: key copied"

else

echo "${node}: key copy failed"

fi

done

# yum install -y sshpass

# ssh-keygen -t rsa

# sh -x /service/scripts/ssh-key.sh

Note: the steps above only work if the hosts entries resolve each hostname to the correct IP address.
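Since a wrong hosts entry is easy to miss, it may help to check the mappings before copying keys. The following is a minimal sketch, assuming a hosts-format file with one IP followed by one or more names per line; `check_hosts_entry` is a hypothetical helper, and the demo runs against a scratch file rather than the real /etc/hosts.

```shell
#!/bin/bash
# check_hosts_entry: hypothetical helper for illustration.
# Usage: check_hosts_entry HOSTS_FILE IP HOSTNAME
# Succeeds if HOSTS_FILE has a non-comment line whose first field is IP
# and one of whose remaining fields is HOSTNAME.
check_hosts_entry() {
    awk -v ip="$2" -v name="$3" '
        /^[[:space:]]*#/ { next }
        $1 == ip {
            for (i = 2; i <= NF; i++) if ($i == name) found = 1
        }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Demo against a throwaway file that mirrors the three nodes used here.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
20.0.0.209 bs-k8s-ceph
20.0.0.207 bs-k8s-harbor
20.0.0.208 bs-k8s-gitlab
EOF
for pair in 20.0.0.209:bs-k8s-ceph 20.0.0.207:bs-k8s-harbor 20.0.0.208:bs-k8s-gitlab; do
    if check_hosts_entry "$tmp" "${pair%%:*}" "${pair##*:}"; then
        echo "${pair##*:} ok"
    else
        echo "${pair##*:} MISSING"
    fi
done
rm -f "$tmp"
```

On a real node the first argument would be /etc/hosts.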

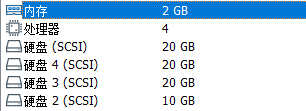

Prepare the data disks

# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 1G 0 part [SWAP]

└─sda3 8:3 0 18G 0 part /

sdb 8:16 0 10G 0 disk

sdc 8:32 0 20G 0 disk

sdd 8:48 0 20G 0 disk

sr0 11:0 1 918M 0 rom

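On every node, split the journal disk into two partitions, sdb1 and sdb2, one for each of the node's two OSDs. Each journal partition is 25% of the size of its OSD disk (5G for a 20G disk).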

Use the parted command to create the partitions.

# parted /dev/sdb

GNU Parted 3.1

Using /dev/sdb

Welcome to GNU Parted! Type 'help' to view a list of commands.

(parted) mklabel gpt

(parted) mkpart primary xfs 0% 50%

(parted) mkpart primary xfs 50% 100%

(parted) q

Information: You may need to update /etc/fstab.

# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

├─sda2 8:2 0 1G 0 part [SWAP]

└─sda3 8:3 0 18G 0 part /

sdb 8:16 0 10G 0 disk

├─sdb1 8:17 0 5G 0 part

└─sdb2 8:18 0 5G 0 part

sdc 8:32 0 20G 0 disk

sdd 8:48 0 20G 0 disk

sr0 11:0 1 918M 0 rom
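Because the same partitioning has to be repeated on every node, the interactive session can be expressed as a single command using parted's script mode (`-s`). The sketch below only prints that command so it can be reviewed first; `journal_parted_cmd` is a hypothetical helper, and nothing is written to disk by running this block.

```shell
#!/bin/bash
# journal_parted_cmd: hypothetical helper that only PRINTS a command.
# It builds the non-interactive equivalent of the parted session above
# for the device given as $1. Nothing is written to disk.
journal_parted_cmd() {
    local dev=$1
    echo "parted -s ${dev} mklabel gpt mkpart primary xfs 0% 50% mkpart primary xfs 50% 100%"
}

journal_parted_cmd /dev/sdb
```

Running the printed command destroys any existing partition table on the device, so it is worth checking the device name with lsblk on each node beforehand.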

Configure the package repository

[root@bs-ceph-ceph ~]# cat /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

# scp /etc/yum.repos.d/ceph.repo 20.0.0.207:/etc/yum.repos.d/ceph.repo

ceph.repo 100% 558 166.9KB/s 00:00

[root@bs-ceph-ceph01 ~]# scp /etc/yum.repos.d/ceph.repo 20.0.0.208:/etc/yum.repos.d/ceph.repo

ceph.repo 100% 558 167.2KB/s 00:00

Build the metadata cache

# yum clean all && yum makecache

Install the required libraries

# yum install snappy leveldb gdisk python-argparse gperftools-libs -y

Deploy Ceph

Unless stated otherwise, all commands are run on the bs-k8s-ceph node.

# yum install -y ceph-deploy python-pip

# ceph-deploy --version

2.0.1

# mkdir /etc/ceph

# cd /etc/ceph

# ceph-deploy new bs-k8s-ceph bs-k8s-harbor bs-k8s-gitlab

# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

# cp ceph.conf ceph.conf-`date +%F` # always back up a configuration file before editing it

# vim ceph.conf

# diff ceph.conf ceph.conf-2020-03-17

8,9d7

< public network = 20.0.0.0/24

< cluster network = 20.0.0.0/24
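The edit shown by the diff can also be scripted so that it is safe to repeat. This is a minimal sketch, assuming the freshly generated ceph.conf contains only a [global] section (so appending to the end of the file lands in that section); `ensure_line` is a hypothetical helper, and the demo works on a scratch file.

```shell
#!/bin/bash
# ensure_line: hypothetical helper. Appends LINE to FILE unless an
# identical line is already present, so repeated runs are harmless.
ensure_line() {
    local file=$1 line=$2
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Demo on a scratch file; on the real node the file would be /etc/ceph/ceph.conf.
conf=$(mktemp)
printf '[global]\nauth_client_required = cephx\n' > "$conf"
ensure_line "$conf" 'public network = 20.0.0.0/24'
ensure_line "$conf" 'cluster network = 20.0.0.0/24'
ensure_line "$conf" 'public network = 20.0.0.0/24'   # second call is a no-op
cat "$conf"
rm -f "$conf"
```

Once the file has more than one section, appending is no longer enough and the lines would need to be placed under [global] by hand.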

Install the Ceph packages on all nodes

# yum install -y ceph

On bs-k8s-ceph, initialize the monitors and gather the keys

# ceph-deploy mon create-initial

Distribute the keys to the bs-k8s-harbor and bs-k8s-gitlab nodes

# ceph-deploy admin bs-k8s-ceph bs-k8s-harbor bs-k8s-gitlab

Configure the OSDs

# ceph-deploy osd create bs-k8s-ceph --data /dev/sdc --journal /dev/sdb1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy osd create bs-k8s-ceph --data /dev/sdc --journal /dev/sdb1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] bluestore : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fe10c1bb8c0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] block_wal : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] journal : /dev/sdb1

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] host : bs-k8s-ceph

[ceph_deploy.cli][INFO ] filestore : None

[ceph_deploy.cli][INFO ] func : <function osd at 0x7fe10c4058c0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.cli][INFO ] data : /dev/sdc

[ceph_deploy.cli][INFO ] block_db : None

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdc

[bs-k8s-ceph][DEBUG ] connected to host: bs-k8s-ceph

[bs-k8s-ceph][DEBUG ] detect platform information from remote host

[bs-k8s-ceph][DEBUG ] detect machine type

[bs-k8s-ceph][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.6.1810 Core

[ceph_deploy.osd][DEBUG ] Deploying osd to bs-k8s-ceph

[bs-k8s-ceph][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[bs-k8s-ceph][WARNIN] osd keyring does not exist yet, creating one

[bs-k8s-ceph][DEBUG ] create a keyring file

[bs-k8s-ceph][DEBUG ] find the location of an executable

[bs-k8s-ceph][INFO ] Running command: /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdc

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe

[bs-k8s-ceph][WARNIN] Running command: /usr/sbin/vgcreate -s 1G --force --yes ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470 /dev/sdc

[bs-k8s-ceph][WARNIN] stdout: Physical volume "/dev/sdc" successfully created.

[bs-k8s-ceph][WARNIN] stdout: Volume group "ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470" successfully created

[bs-k8s-ceph][WARNIN] Running command: /usr/sbin/lvcreate --yes -l 100%FREE -n osd-block-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470

[bs-k8s-ceph][WARNIN] stdout: Logical volume "osd-block-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe" created.

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph-authtool --gen-print-key

[bs-k8s-ceph][WARNIN] Running command: /bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0

[bs-k8s-ceph][WARNIN] Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-0

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -h ceph:ceph /dev/ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470/osd-block-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0

[bs-k8s-ceph][WARNIN] Running command: /bin/ln -s /dev/ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470/osd-block-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe /var/lib/ceph/osd/ceph-0/block

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap

[bs-k8s-ceph][WARNIN] stderr: got monmap epoch 1

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQB5mHBeloJpKBAAfwSp+ooYl7IYgghhVNzIYw==

[bs-k8s-ceph][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring

[bs-k8s-ceph][WARNIN] added entity osd.0 auth auth(auid = 18446744073709551615 key=AQB5mHBeloJpKBAAfwSp+ooYl7IYgghhVNzIYw== with 0 caps)

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe --setuser ceph --setgroup ceph

[bs-k8s-ceph][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdc

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[bs-k8s-ceph][WARNIN] Running command: /bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470/osd-block-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe --path /var/lib/ceph/osd/ceph-0 --no-mon-config

[bs-k8s-ceph][WARNIN] Running command: /bin/ln -snf /dev/ceph-7e353b38-0fe1-4b7c-b70f-72ab945c4470/osd-block-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe /var/lib/ceph/osd/ceph-0/block

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -R ceph:ceph /dev/dm-0

[bs-k8s-ceph][WARNIN] Running command: /bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-0

[bs-k8s-ceph][WARNIN] Running command: /bin/systemctl enable ceph-volume@lvm-0-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe

[bs-k8s-ceph][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-dacac7e3-82b8-4d9d-a3dd-47c109f2b2fe.service to /usr/lib/systemd/system/ceph-volume@.service.

[bs-k8s-ceph][WARNIN] Running command: /bin/systemctl enable --runtime ceph-osd@0

[bs-k8s-ceph][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.

[bs-k8s-ceph][WARNIN] Running command: /bin/systemctl start ceph-osd@0

[bs-k8s-ceph][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0

[bs-k8s-ceph][WARNIN] --> ceph-volume lvm create successful for: /dev/sdc

[bs-k8s-ceph][INFO ] checking OSD status...

[bs-k8s-ceph][DEBUG ] find the location of an executable

[bs-k8s-ceph][INFO ] Running command: /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host bs-k8s-ceph is now ready for osd use.

# ceph-deploy osd create bs-k8s-ceph --data /dev/sdd --journal /dev/sdb2

# ceph-deploy osd create bs-k8s-harbor --data /dev/sdc --journal /dev/sdb1

# ceph-deploy osd create bs-k8s-harbor --data /dev/sdd --journal /dev/sdb2

# ceph-deploy osd create bs-k8s-gitlab --data /dev/sdc --journal /dev/sdb1

# ceph-deploy osd create bs-k8s-gitlab --data /dev/sdd --journal /dev/sdb2
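The six invocations follow one pattern: on each host, sdc pairs with sdb1 and sdd pairs with sdb2. The sketch below prints them from a loop so the list can be reviewed before anything is run; `print_osd_cmds` is a hypothetical helper. One thing that may be worth checking: the log above shows ceph-volume being called with `--bluestore --data /dev/sdc` and no journal argument, so the journal partitions may not actually be used in this setup.

```shell
#!/bin/bash
# print_osd_cmds: hypothetical helper that only PRINTS commands.
# For every host it pairs data disk sdc with journal sdb1 and sdd with sdb2,
# matching the six invocations above.
print_osd_cmds() {
    local host
    for host in "$@"; do
        echo "ceph-deploy osd create ${host} --data /dev/sdc --journal /dev/sdb1"
        echo "ceph-deploy osd create ${host} --data /dev/sdd --journal /dev/sdb2"
    done
}

print_osd_cmds bs-k8s-ceph bs-k8s-harbor bs-k8s-gitlab
```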

# ceph -s

cluster:

id: ed4d59da-c861-4da0-bbe2-8dfdea5be796

health: HEALTH_WARN

no active mgr //no mgr has been deployed yet

services:

mon: 3 daemons, quorum bs-k8s-harbor,bs-k8s-gitlab,bs-k8s-ceph

mgr: no daemons active

osd: 6 osds: 6 up, 6 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.11151 root default

-3 0.03717 host bs-k8s-ceph

0 hdd 0.01859 osd.0 up 1.00000 1.00000

1 hdd 0.01859 osd.1 up 1.00000 1.00000

-7 0.03717 host bs-k8s-gitlab

4 hdd 0.01859 osd.4 up 1.00000 1.00000

5 hdd 0.01859 osd.5 up 1.00000 1.00000

-5 0.03717 host bs-k8s-harbor

2 hdd 0.01859 osd.2 up 1.00000 1.00000

3 hdd 0.01859 osd.3 up 1.00000 1.00000

# ll

total 164

-rw------- 1 root root 113 Mar 17 17:28 ceph.bootstrap-mds.keyring

-rw------- 1 root root 113 Mar 17 17:28 ceph.bootstrap-mgr.keyring

-rw------- 1 root root 113 Mar 17 17:28 ceph.bootstrap-osd.keyring

-rw------- 1 root root 113 Mar 17 17:28 ceph.bootstrap-rgw.keyring

-rw------- 1 root root 151 Mar 17 17:28 ceph.client.admin.keyring

-rw-r--r-- 1 root root 310 Mar 17 17:30 ceph.conf

-rw-r--r-- 1 root root 251 Mar 17 17:24 ceph.conf-2020-03-17

-rw-r--r-- 1 root root 90684 Mar 17 17:32 ceph-deploy-ceph.log

-rw------- 1 root root 73 Mar 17 17:24 ceph.mon.keyring

-rw-r--r-- 1 root root 92 Dec 13 06:01 rbdmap

# chmod +r ceph.client.admin.keyring

# ceph-deploy mgr create bs-k8s-ceph bs-k8s-harbor bs-k8s-gitlab

# ceph -s

cluster:

id: ed4d59da-c861-4da0-bbe2-8dfdea5be796

health: HEALTH_OK

services:

mon: 3 daemons, quorum bs-k8s-harbor,bs-k8s-gitlab,bs-k8s-ceph

mgr: bs-k8s-ceph(active), standbys: bs-k8s-harbor, bs-k8s-gitlab

osd: 6 osds: 6 up, 6 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 6.0 GiB used, 108 GiB / 114 GiB avail

pgs:
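Reading the status by eye works for a one-off check; for repeated checks the two facts that matter here (HEALTH_OK, and every OSD both up and in) can be pulled out of the text. The sketch below is an assumption-laden illustration: `cluster_ready` is a hypothetical helper, and it depends on the plain-text `ceph -s` layout shown above, which may differ in other releases.

```shell
#!/bin/bash
# cluster_ready: hypothetical helper. Reads `ceph -s` text on stdin and
# succeeds only if health is HEALTH_OK and the "osd:" line reports the
# same number for total, up and in.
cluster_ready() {
    awk '
        $1 == "health:" { health = $2 }
        $1 == "osd:"    { total = $2; up = $4; osd_in = $6 }
        END {
            ok = (health == "HEALTH_OK" && total != "" && total == up && total == osd_in)
            exit ok ? 0 : 1
        }
    '
}

# Sample lines copied from the status output above.
if cluster_ready <<'EOF'
health: HEALTH_OK
mgr: bs-k8s-ceph(active), standbys: bs-k8s-harbor, bs-k8s-gitlab
osd: 6 osds: 6 up, 6 in
EOF
then
    echo "ready"
else
    echo "not ready"
fi
```

On a live node the check would be `ceph -s | cluster_ready`.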

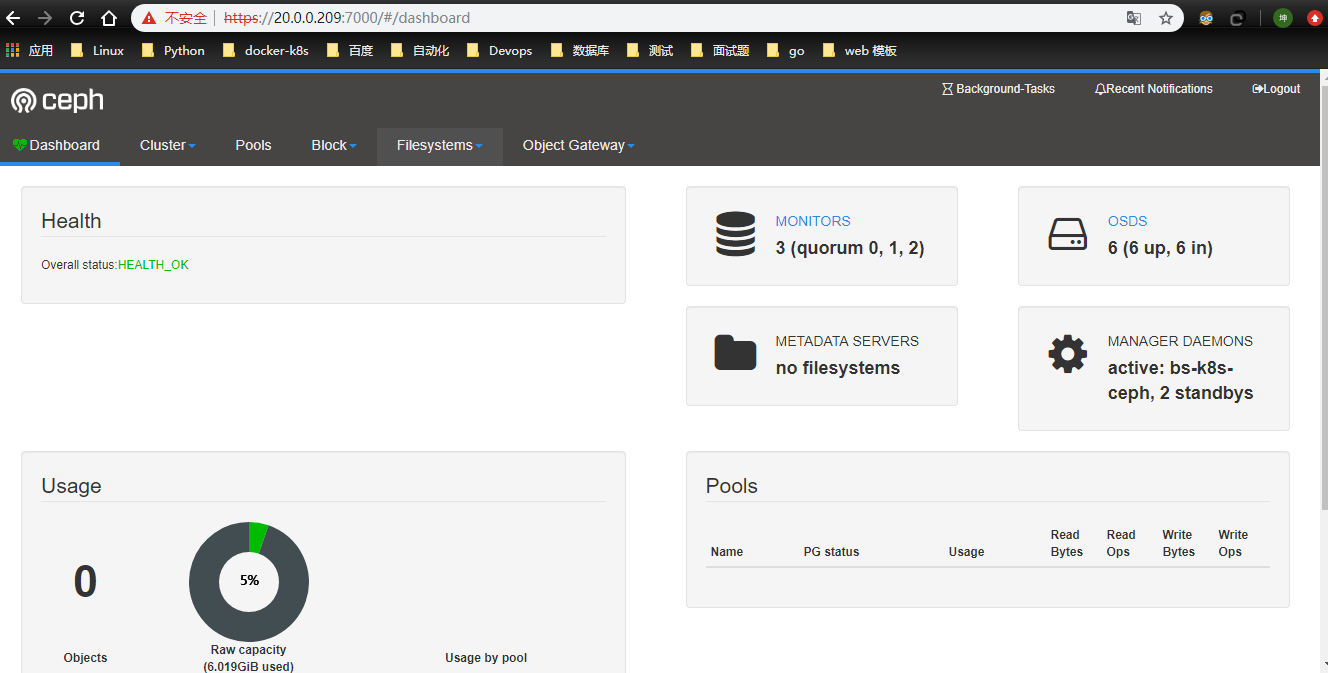

Enable the dashboard

# vim ceph.conf

[mgr]

mgr_modules = dashboard

# ceph mgr module enable dashboard

# ceph dashboard create-self-signed-cert

Self-signed certificate created

Self-signed certificate

# openssl req -new -nodes -x509 -subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca

Generating a 2048 bit RSA private key

...............................................+++

.................+++

writing new private key to 'dashboard.key'

-----

Configure the service address and port. The default port is 8443; here it is changed to 7000.

# ceph config set mgr mgr/dashboard/server_addr 0.0.0.0

# ceph config set mgr mgr/dashboard/server_port 7000

# ceph dashboard set-login-credentials admin zisefeizhu

Username and password updated

# ceph mgr services

{

"dashboard": "https://bs-k8s-ceph:8443/"

}

Sync the Ceph configuration file across the cluster

# ceph-deploy --overwrite-conf config push bs-k8s-ceph bs-k8s-gitlab bs-k8s-harbor

# systemctl restart ceph-mgr@bs-k8s-ceph.service

# ceph -s

cluster:

id: ed4d59da-c861-4da0-bbe2-8dfdea5be796

health: HEALTH_OK

services:

mon: 3 daemons, quorum bs-k8s-harbor,bs-k8s-gitlab,bs-k8s-ceph

mgr: bs-k8s-ceph(active), standbys: bs-k8s-harbor, bs-k8s-gitlab

osd: 6 osds: 6 up, 6 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 6.0 GiB used, 108 GiB / 114 GiB avail

pgs:

# ceph mgr services

{

"dashboard": "https://0.0.0.0:7000/"

}
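To confirm that the new port took effect without reading the JSON by hand, the dashboard URL can be extracted from the `ceph mgr services` output. This is a small sketch, not a robust JSON parser: `dashboard_url` is a hypothetical helper that assumes the simple one-key-per-line layout shown above.

```shell
#!/bin/bash
# dashboard_url: hypothetical helper. Reads `ceph mgr services` JSON on
# stdin and prints the value of the "dashboard" key. It uses sed rather
# than a JSON parser, so it assumes the simple layout shown above.
dashboard_url() {
    sed -n 's/.*"dashboard":[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Sample input copied from the output above.
printf '{\n"dashboard": "https://0.0.0.0:7000/"\n}\n' | dashboard_url
```

On a live node: `ceph mgr services | dashboard_url`.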

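Note: when the deployment above fails, the main causes are incorrect hostnames and unsynchronized clocks. Web access now works from any node; that is, use the IP of whichever node currently has the active mgr.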

This completes the Ceph deployment.

Tune the Ceph configuration

My understanding of Ceph is honestly still limited, so I only apply a few modest optimizations here. Keep going; I can't stay this weak.

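My cluster runs kernel 4.4, and the kernel on the machines that need to map images is too old to support feature flag 400000000000000; mapping only succeeds on machines with kernel >= 4.5. So I make the following adjustment.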

On all three nodes

# ceph osd crush tunables hammer

adjusted tunables profile to hammer

Allow the Ceph cluster to delete pools

# cat ceph.conf

[global]

fsid = ed4d59da-c861-4da0-bbe2-8dfdea5be796

mon_initial_members = bs-k8s-ceph, bs-k8s-harbor, bs-k8s-gitlab

mon_host = 20.0.0.209,20.0.0.207,20.0.0.208

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 20.0.0.0/24

cluster network = 20.0.0.0/24

[mgr]

mgr_modules = dashboard

[mon]

mon_allow_pool_delete = true
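Before pushing the file to the other nodes, it can be useful to confirm that the new key really sits under the intended section. The sketch below assumes a simple INI layout with `key = value` lines whose values contain no `=`; `ini_get` is a hypothetical helper, and the demo uses a scratch file that mirrors the relevant parts of the configuration above.

```shell
#!/bin/bash
# ini_get: hypothetical helper. Prints the value of KEY inside [SECTION]
# of an INI-style FILE; prints nothing if the key is absent.
# Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -F'=' -v section="[$2]" -v key="$3" '
        /^[[:space:]]*\[/ { gsub(/[[:space:]]/, ""); current = $0; next }
        current == section {
            k = $1; v = $2
            gsub(/^[[:space:]]+|[[:space:]]+$/, "", k)
            gsub(/^[[:space:]]+|[[:space:]]+$/, "", v)
            if (k == key) print v
        }
    ' "$1"
}

# Demo on a scratch file; the real file would be /etc/ceph/ceph.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[global]
public network = 20.0.0.0/24
[mgr]
mgr_modules = dashboard
[mon]
mon_allow_pool_delete = true
EOF
ini_get "$conf" mon mon_allow_pool_delete
rm -f "$conf"
```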

Sync the configuration file

# ceph-deploy --overwrite-conf config push bs-k8s-ceph bs-k8s-gitlab bs-k8s-harbor

Test

# systemctl restart ceph-mon.target

# ceph osd pool create rbd 128

pool 'rbd' created

# ceph osd pool rm rbd rbd --yes-i-really-really-mean-it
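The pool above is created with 128 placement groups. For reference, a commonly quoted rule of thumb for the total PG count of a cluster is (number of OSDs x 100) / replica count, rounded up to a power of two. The sketch below just does that arithmetic; `suggest_pg_num` is a hypothetical helper, and the replica count of 3 is an assumption (it is not stated in this post).

```shell
#!/bin/bash
# suggest_pg_num: hypothetical helper implementing the common rule of
# thumb (OSDs * 100 / replicas, rounded up to a power of two).
# Usage: suggest_pg_num OSD_COUNT REPLICA_COUNT
suggest_pg_num() {
    local target=$(( $1 * 100 / $2 ))
    local pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

# Six OSDs as in this cluster; replica count 3 is assumed.
suggest_pg_num 6 3
```

The result is a budget for the whole cluster rather than for a single pool, so a smaller value such as 128 for one test pool is not unreasonable.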

Deployment complete

Learning by doing is like climbing a mountain: every step reaches a new height.
