Deploying highly available Kubernetes 1.17.2 with kubeasz, then deploying Traefik 2.1.2

Template VM preparation

```bash
# cat /etc/redhat-release
CentOS Linux release 7.6.1810 (Core)
# uname -a      ## the kernel has been upgraded to 4.4.x or later; how to upgrade it is not covered here
Linux bs-k8s-master01 4.4.186-1.el7.elrepo.x86_64 #1 SMP Sun Jul 21 04:06:52 EDT 2019 x86_64 x86_64 x86_64 GNU/Linux
# yum install python epel-release -y
# yum install git python-pip -y
## on Python 2.7, pip 21.0 and later no longer work; if the upgrade breaks pip, pin it with "pip<21"
# pip install pip --upgrade -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com
# pip install ansible==2.6.12 -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com
# pip install netaddr -i http://pypi.douban.com/simple --trusted-host pypi.douban.com
# reboot
```
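The `uname -a` note above requires a 4.4.x or newer kernel before cloning. Below is a minimal sketch of a pre-clone check. `kernel_at_least` is a hypothetical helper name, and it compares only the major.minor part of the release string.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) when a kernel release string
# such as "4.4.186-1.el7.elrepo.x86_64" is at least the given major.minor.
kernel_at_least() {
  local release=$1 want_major=$2 want_minor=$3
  local major minor
  # Drop everything after the first "-", then split "4.4.186" on dots.
  IFS=. read -r major minor _ <<< "${release%%-*}"
  if (( major > want_major )); then
    return 0
  fi
  (( major == want_major && minor >= want_minor ))
}
```

On the template VM, run `kernel_at_least "$(uname -r)" 4 4 && echo "kernel OK" || echo "upgrade the kernel first"`. The stock CentOS 7.6 kernel (3.10.x) fails this check.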

Take a snapshot, then clone the template VM.

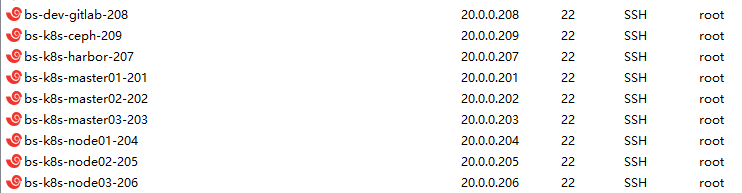

Cloned host layout

| Host IP | Hostname | Roles | CPU & memory |
|---|---|---|---|
| 20.0.0.201 | bs-k8s-master01 | master etcd | 4C & 2G |
| 20.0.0.202 | bs-k8s-master02 | master etcd traefik | 2C & 2G |
| 20.0.0.203 | bs-k8s-master03 | master etcd traefik | 2C & 2G |
| 20.0.0.204 | bs-k8s-node01 | worker prometheus efk | 4C & 10G |
| 20.0.0.205 | bs-k8s-node02 | worker jenkins f | 4C & 3G |
| 20.0.0.206 | bs-k8s-node03 | worker myweb f | 4C & 2G |
| 20.0.0.207 | bs-k8s-harbor | ceph harbor haproxy keepalived | 2C & 1.5G |
| 20.0.0.208 | bs-k8s-gitlab | ceph gitlab | 2C & 6G |
| 20.0.0.209 | bs-k8s-ceph | ceph haproxy keepalived | 2C & 1.5G |

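The table above is the planned layout, and it was verified in February to be largely workable. Add or remove hosts and resources as needed to fit your own physical machine.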

Initialize each host. bs-k8s-master01 is used as the example:

```bash
# hostnamectl set-hostname bs-k8s-master01
# vimn
# cat /etc/sysconfig/network-scripts/ifcfg-eth0
TYPE=Ethernet
BOOTPROTO=none
NAME=eth0
DEVICE=eth0
ONBOOT=yes
IPADDR=20.0.0.201
PREFIX=24
GATEWAY=20.0.0.2
DNS1=223.5.5.5
# init 0
```

Take another snapshot.

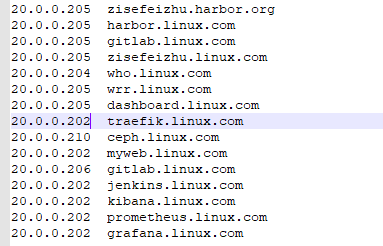

Configure name resolution in /etc/hosts:

```bash
# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
20.0.0.201 bs-k8s-master01
20.0.0.202 bs-k8s-master02
20.0.0.203 bs-k8s-master03
20.0.0.204 bs-k8s-node01
20.0.0.205 bs-k8s-node02
20.0.0.206 bs-k8s-node03
20.0.0.207 bs-k8s-harbor harbor.linux.com
20.0.0.208 bs-k8s-gitlab
20.0.0.209 bs-k8s-ceph
```
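Every host needs the same nine cluster entries. Below is a minimal sketch that keeps them in one list and appends only the missing lines, so it is safe to run twice. `hosts_entries` and `merge_hosts` are hypothetical helper names. The list simply mirrors the file above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the cluster's /etc/hosts entries from one list,
# so the host table and the hosts file do not drift apart.
hosts_entries() {
  local -a hosts=(
    "20.0.0.201 bs-k8s-master01"
    "20.0.0.202 bs-k8s-master02"
    "20.0.0.203 bs-k8s-master03"
    "20.0.0.204 bs-k8s-node01"
    "20.0.0.205 bs-k8s-node02"
    "20.0.0.206 bs-k8s-node03"
    "20.0.0.207 bs-k8s-harbor harbor.linux.com"
    "20.0.0.208 bs-k8s-gitlab"
    "20.0.0.209 bs-k8s-ceph"
  )
  printf '%s\n' "${hosts[@]}"
}

# Append only the lines not already present (exact whole-line match) in the
# target file, which defaults to /etc/hosts, so repeated runs add nothing.
merge_hosts() {
  local target=${1:-/etc/hosts} line
  touch "$target"
  while IFS= read -r line; do
    grep -qxF "$line" "$target" || printf '%s\n' "$line" >> "$target"
  done < <(hosts_entries)
}
```

Running `merge_hosts` as root updates /etc/hosts in place. Running `merge_hosts /tmp/hosts.test` first lets you inspect the result.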

Set up passwordless SSH login:

```bash
# ssh-keygen -t rsa
# yum install -y sshpass
# mkdir /service/scripts -p
# cat /service/scripts/ssh-key.sh
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: /service/scripts/ssh-key.sh
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
##########################################################################
# Target hosts: each machine is listed by IP and by hostname,
# so that both names end up in known_hosts
IP="
20.0.0.201
bs-k8s-master01
20.0.0.202
bs-k8s-master02
20.0.0.203
bs-k8s-master03
20.0.0.204
bs-k8s-node01
20.0.0.205
bs-k8s-node02
20.0.0.206
bs-k8s-node03
20.0.0.207
bs-k8s-harbor
20.0.0.208
bs-k8s-gitlab
20.0.0.209
bs-k8s-ceph
"
for node in ${IP};do
    # "1" is the root password of these lab VMs; replace it with yours.
    # Report success only if both the key copy and the hosts copy succeed.
    if sshpass -p 1 ssh-copy-id -o StrictHostKeyChecking=no "${node}" && \
       scp /etc/hosts "${node}":/etc/hosts; then
        echo "${node}: key copied"
    else
        echo "${node}: key copy failed"
    fi
done
# sh -x /service/scripts/ssh-key.sh
```

bs-k8s-master01 serves as the deployment machine.

Download the helper script easzup. This example uses kubeasz 2.2.0.

```bash
# pwd
/data
# export release=2.2.0
# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup
# chmod +x ./easzup
# cat easzup
......
export DOCKER_VER=19.03.5
export KUBEASZ_VER=2.2.0
export K8S_BIN_VER=v1.17.2
export EXT_BIN_VER=0.4.0
export SYS_PKG_VER=0.3.3
......
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://docker.mirrors.ustc.edu.cn",
    "http://hub-mirror.c.163.com"
  ],
  "max-concurrent-downloads": 10,
  "log-driver": "json-file",
  "log-level": "warn",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  },
  "data-root": "/var/lib/docker"
}
......
# images needed by k8s cluster
calicoVer=v3.4.4
corednsVer=1.6.6
dashboardVer=v2.0.0-rc3
dashboardMetricsScraperVer=v1.0.3
flannelVer=v0.11.0-amd64
metricsVer=v0.3.6
pauseVer=3.1
traefikVer=v1.7.20
......
```

```text
# ./easzup -D
[INFO] Action begin : download_all
Unit docker.service could not be found.
Unit containerd.service could not be found.
which: no docker in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)
[INFO] downloading docker binaries 19.03.5
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 60.3M  100 60.3M    0     0  2240k      0  0:00:27  0:00:27 --:--:-- 1881k
[INFO] generate docker service file
[INFO] generate docker config file
[INFO] prepare register mirror for CN
[INFO] turn off selinux in CentOS/Redhat
Disabled
[INFO] enable and start docker
Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /etc/systemd/system/docker.service.
[INFO] downloading kubeasz 2.2.0
[INFO] run a temporary container
Unable to find image 'easzlab/kubeasz:2.2.0' locally
2.2.0: Pulling from easzlab/kubeasz
9123ac7c32f7: Pull complete
837e3bfc1a1b: Pull complete
Digest: sha256:a1fc4a75fde5aee811ff230e88ffa80d8bfb66e9c1abc907092abdbff073735e
Status: Downloaded newer image for easzlab/kubeasz:2.2.0
60c5bc91b52996f009ebab89e1daf6db77fd7a8b3313843fb7fba7ef6f207014
[INFO] cp kubeasz code from the temporary container
[INFO] stop&remove temporary container
temp_easz
[INFO] downloading kubernetes v1.17.2 binaries
v1.17.2: Pulling from easzlab/kubeasz-k8s-bin
9123ac7c32f7: Already exists
fa197cdd54ac: Pull complete
Digest: sha256:d9fdc65a79a2208f48d5bf9a7e51cf4a4719c978742ef59b507bc8aaca2564f5
Status: Downloaded newer image for easzlab/kubeasz-k8s-bin:v1.17.2
docker.io/easzlab/kubeasz-k8s-bin:v1.17.2
[INFO] run a temporary container
e452799cb2a32cd82c3b976e47c9c8f9fa67079143a29783cb8e4223dc1011e6
[INFO] cp k8s binaries
[INFO] stop&remove temporary container
temp_k8s_bin
[INFO] downloading extral binaries kubeasz-ext-bin:0.4.0
0.4.0: Pulling from easzlab/kubeasz-ext-bin
9123ac7c32f7: Already exists
96aeb45eaf70: Pull complete
Digest: sha256:cb7c51e9005a48113086002ae53b805528f4ac31e7f4c4634e22c98a8230a5bb
Status: Downloaded newer image for easzlab/kubeasz-ext-bin:0.4.0
docker.io/easzlab/kubeasz-ext-bin:0.4.0
[INFO] run a temporary container
7cba170c92b590f787b364ce4996b99a26d53f3a2eb6222ac483fb2f1ec01a43
[INFO] cp extral binaries
[INFO] stop&remove temporary container
temp_ext_bin
[INFO] downloading system packages kubeasz-sys-pkg:0.3.3
0.3.3: Pulling from easzlab/kubeasz-sys-pkg
e7c96db7181b: Pull complete
291d9a0e6c41: Pull complete
5f5b83293598: Pull complete
376121b0ab94: Pull complete
1c7cd77764e9: Pull complete
d8d58def0f00: Pull complete
Digest: sha256:342471d786ba6d9bb95c15c573fd7d24a6fd30de51049c2c0b543d09d28b5d9f
Status: Downloaded newer image for easzlab/kubeasz-sys-pkg:0.3.3
docker.io/easzlab/kubeasz-sys-pkg:0.3.3
[INFO] run a temporary container
a5e4078ebf08b40834aed8db62b63aa131064425ddd1f9cc2abcb3b592ea2b40
[INFO] cp system packages
[INFO] stop&remove temporary container
temp_sys_pkg
[INFO] downloading offline images
v3.4.4: Pulling from calico/cni
c87736221ed0: Pull complete
5c9ca5efd0e4: Pull complete
208ecfdac035: Pull complete
4112fed29204: Pull complete
Digest: sha256:bede24ded913fb9f273c8392cafc19ac37d905017e13255608133ceeabed72a1
Status: Downloaded newer image for calico/cni:v3.4.4
docker.io/calico/cni:v3.4.4
v3.4.4: Pulling from calico/kube-controllers
c87736221ed0: Already exists
e90e29149864: Pull complete
5d1329dbb1d1: Pull complete
Digest: sha256:b2370a898db0ceafaa4f0b8ddd912102632b856cc010bb350701828a8df27775
Status: Downloaded newer image for calico/kube-controllers:v3.4.4
docker.io/calico/kube-controllers:v3.4.4
v3.4.4: Pulling from calico/node
c87736221ed0: Already exists
07330e865cef: Pull complete
d4d8bb3c8ac5: Pull complete
870dc1a5d2d5: Pull complete
af40827f5487: Pull complete
76fa1069853f: Pull complete
Digest: sha256:1582527b4923ffe8297d12957670bc64bb4f324517f57e4fece3f6289d0eb6a1
Status: Downloaded newer image for calico/node:v3.4.4
docker.io/calico/node:v3.4.4
1.6.6: Pulling from coredns/coredns
c6568d217a00: Pull complete
967f21e47164: Pull complete
Digest: sha256:41bee6992c2ed0f4628fcef75751048927bcd6b1cee89c79f6acb63ca5474d5a
Status: Downloaded newer image for coredns/coredns:1.6.6
docker.io/coredns/coredns:1.6.6
v2.0.0-rc3: Pulling from kubernetesui/dashboard
d8fcb18be2fe: Pull complete
Digest: sha256:c5d991d02937ac0f49cb62074ee0bd1240839e5814d6d7b51019f08bffd871a6
Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0-rc3
docker.io/kubernetesui/dashboard:v2.0.0-rc3
v0.11.0-amd64: Pulling from easzlab/flannel
cd784148e348: Pull complete
04ac94e9255c: Pull complete
e10b013543eb: Pull complete
005e31e443b1: Pull complete
74f794f05817: Pull complete
Digest: sha256:bd76b84c74ad70368a2341c2402841b75950df881388e43fc2aca000c546653a
Status: Downloaded newer image for easzlab/flannel:v0.11.0-amd64
docker.io/easzlab/flannel:v0.11.0-amd64
v1.0.3: Pulling from kubernetesui/metrics-scraper
75d12d4b9104: Pull complete
fcd66fda0b81: Pull complete
53ff3f804bbd: Pull complete
Digest: sha256:40f1d5785ea66609b1454b87ee92673671a11e64ba3bf1991644b45a818082ff
Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.3
docker.io/kubernetesui/metrics-scraper:v1.0.3
v0.3.6: Pulling from mirrorgooglecontainers/metrics-server-amd64
e8d8785a314f: Pull complete
b2f4b24bed0d: Pull complete
Digest: sha256:c9c4e95068b51d6b33a9dccc61875df07dc650abbf4ac1a19d58b4628f89288b
Status: Downloaded newer image for mirrorgooglecontainers/metrics-server-amd64:v0.3.6
docker.io/mirrorgooglecontainers/metrics-server-amd64:v0.3.6
3.1: Pulling from mirrorgooglecontainers/pause-amd64
67ddbfb20a22: Pull complete
Digest: sha256:59eec8837a4d942cc19a52b8c09ea75121acc38114a2c68b98983ce9356b8610
Status: Downloaded newer image for mirrorgooglecontainers/pause-amd64:3.1
docker.io/mirrorgooglecontainers/pause-amd64:3.1
v1.7.20: Pulling from library/traefik
42e7d26ec378: Pull complete
8a753f02eeff: Pull complete
ab927d94d255: Pull complete
Digest: sha256:5ec34caf19d114f8f0ed76f9bc3dad6ba8cf6d13a1575c4294b59b77709def39
Status: Downloaded newer image for traefik:v1.7.20
docker.io/library/traefik:v1.7.20
2.2.0: Pulling from easzlab/kubeasz
Digest: sha256:a1fc4a75fde5aee811ff230e88ffa80d8bfb66e9c1abc907092abdbff073735e
Status: Image is up to date for easzlab/kubeasz:2.2.0
docker.io/easzlab/kubeasz:2.2.0
[INFO] Action successed : download_all
```

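After the download finishes:

- /etc/ansible contains the kubeasz release code for version ${release}
- /etc/ansible/bin contains the k8s/etcd/docker/cni and other binaries
- /etc/ansible/down contains the offline container images needed to install the cluster
- /etc/ansible/down/packages contains the base system packages needed to install the cluster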
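Before moving on, you may want to confirm that `easzup -D` left this layout in place. Below is a minimal sketch. `check_kubeasz_layout` is a hypothetical helper name, and it checks only that the four directories listed above exist.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm that "easzup -D" left the directory layout
# described above under the kubeasz base directory (default /etc/ansible).
# Prints each missing directory; exit status 1 if any is missing.
check_kubeasz_layout() {
  local base=${1:-/etc/ansible} dir missing=0
  for dir in "$base" "$base/bin" "$base/down" "$base/down/packages"; do
    if [ ! -d "$dir" ]; then
      echo "missing: $dir"
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_kubeasz_layout` on the deployment machine should print nothing and return 0.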

Configure the inventory

```text
# cd /etc/ansible/
# ll
total 92
-rw-rw-r--  1 root root   395 Feb  1 10:35 01.prepare.yml
-rw-rw-r--  1 root root    58 Feb  1 10:35 02.etcd.yml
-rw-rw-r--  1 root root   149 Feb  1 10:35 03.containerd.yml
-rw-rw-r--  1 root root   137 Feb  1 10:35 03.docker.yml
-rw-rw-r--  1 root root   470 Feb  1 10:35 04.kube-master.yml
-rw-rw-r--  1 root root   140 Feb  1 10:35 05.kube-node.yml
-rw-rw-r--  1 root root   408 Feb  1 10:35 06.network.yml
-rw-rw-r--  1 root root    77 Feb  1 10:35 07.cluster-addon.yml
-rw-rw-r--  1 root root  3686 Feb  1 10:35 11.harbor.yml
-rw-rw-r--  1 root root   431 Feb  1 10:35 22.upgrade.yml
-rw-rw-r--  1 root root  1975 Feb  1 10:35 23.backup.yml
-rw-rw-r--  1 root root   113 Feb  1 10:35 24.restore.yml
-rw-rw-r--  1 root root  1752 Feb  1 10:35 90.setup.yml
-rw-rw-r--  1 root root  1127 Feb  1 10:35 91.start.yml
-rw-rw-r--  1 root root  1120 Feb  1 10:35 92.stop.yml
-rw-rw-r--  1 root root   337 Feb  1 10:35 99.clean.yml
-rw-rw-r--  1 root root 10283 Feb  1 10:35 ansible.cfg
drwxrwxr-x  3 root root  4096 Mar 16 09:55 bin
drwxrwxr-x  2 root root    23 Feb  1 10:55 dockerfiles
drwxrwxr-x  8 root root    92 Feb  1 10:55 docs
drwxrwxr-x  3 root root   322 Mar 16 09:57 down
drwxrwxr-x  2 root root    52 Feb  1 10:55 example
drwxrwxr-x 14 root root   218 Feb  1 10:55 manifests
drwxrwxr-x  2 root root   322 Feb  1 10:55 pics
-rw-rw-r--  1 root root  5607 Feb  1 10:35 README.md
drwxrwxr-x 23 root root  4096 Feb  1 10:55 roles
drwxrwxr-x  2 root root   294 Feb  1 10:55 tools
```

```ini
# cp example/hosts.multi-node ./hosts
# cat hosts
# 'etcd' cluster should have odd member(s) (1,3,5,...)
# variable 'NODE_NAME' is the distinct name of a member in 'etcd' cluster
[etcd]
20.0.0.201 NODE_NAME=etcd1
20.0.0.202 NODE_NAME=etcd2
20.0.0.203 NODE_NAME=etcd3

# master node(s)
[kube-master]
20.0.0.201
20.0.0.202
20.0.0.203

# work node(s)
[kube-node]
20.0.0.204
20.0.0.205
20.0.0.206

# [optional] harbor server, a private docker registry
# 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one
# 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'
[harbor]
#192.168.1.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no SELF_SIGNED_CERT=yes

# [optional] loadbalance for accessing k8s from outside
[ex-lb]
20.0.0.209 LB_ROLE=backup EX_APISERVER_VIP=20.0.0.250 EX_APISERVER_PORT=8443
20.0.0.207 LB_ROLE=master EX_APISERVER_VIP=20.0.0.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster
[chrony]
20.0.0.202

[all:vars]
# --------- Main Variables ---------------
# Cluster container-runtime supported: docker, containerd
CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn
CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'
PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking
SERVICE_CIDR="10.68.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking
CLUSTER_CIDR="172.20.0.0/16"

# NodePort Range
NODE_PORT_RANGE="20000-40000"

# Cluster DNS Domain
CLUSTER_DNS_DOMAIN="cluster.local."

# -------- Additional Variables (don't change the default value right now) ---
# Binaries Directory
bin_dir="/opt/kube/bin"

# CA and other components cert/key Directory
ca_dir="/etc/kubernetes/ssl"

# Deploy Directory (kubeasz workspace)
base_dir="/etc/ansible"
```
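The inventory comment requires an odd number of etcd members. With 3 members the quorum is 2, so one failure is tolerated, and a 4th member would not raise that tolerance. Below is a minimal sketch that counts the hosts under `[etcd]` in an inventory file. `etcd_member_count` and `check_etcd_odd` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count the hosts listed under [etcd] in a kubeasz
# inventory file, skipping blank lines and comment lines.
etcd_member_count() {
  awk '
    /^\[/ { in_etcd = ($1 == "[etcd]"); next }   # a new [section] starts
    in_etcd && NF && $1 !~ /^#/ { n++ }          # count real host lines only
    END { print n + 0 }
  ' "$1"
}

# Report the member count and fail when it is even.
check_etcd_odd() {
  local n
  n=$(etcd_member_count "$1")
  if (( n % 2 == 1 )); then
    echo "etcd members: $n (odd, OK)"
  else
    echo "etcd members: $n (should be odd)"
    return 1
  fi
}
```

For example, `check_etcd_odd /etc/ansible/hosts` reports 3 members for the inventory above.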

```text
# ansible all -m ping
20.0.0.202 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.209 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.207 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.203 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.201 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.204 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.206 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
20.0.0.205 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
```
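Before running the playbooks, you can check the inventory comments' requirement that SERVICE_CIDR (10.68.0.0/16), CLUSTER_CIDR (172.20.0.0/16) and the host network do not overlap. The host network is 20.0.0.0/24, from PREFIX=24 in the NIC config. Below is a minimal pure-bash sketch. `cidr_overlap` and `check_cidrs` are hypothetical helper names, and only IPv4 is handled.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed when two CIDR blocks overlap. Two blocks overlap exactly when
# their network addresses agree on the shorter of the two prefixes.
cidr_overlap() {
  local n1=${1%/*} p1=${1#*/} n2=${2%/*} p2=${2#*/}
  local i1 i2 p mask
  i1=$(ip_to_int "$n1")
  i2=$(ip_to_int "$n2")
  p=$(( p1 < p2 ? p1 : p2 ))
  # bash arithmetic is 64-bit, so trim the shifted mask back to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( (i1 & mask) == (i2 & mask) ))
}

# Check the service CIDR, pod CIDR and host network pairwise.
check_cidrs() {
  local svc=$1 pod=$2 host=$3 pair a b
  for pair in "$svc $pod" "$svc $host" "$pod $host"; do
    read -r a b <<< "$pair"
    if cidr_overlap "$a" "$b"; then
      echo "overlap: $a and $b"
      return 1
    fi
  done
  echo "no overlap"
}
```

For the inventory above, `check_cidrs 10.68.0.0/16 172.20.0.0/16 20.0.0.0/24` prints `no overlap`.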

Deploy the highly available Kubernetes 1.17.2 cluster

```bash
# ansible-playbook 01.prepare.yml
# ansible-playbook 02.etcd.yml
# ansible-playbook 03.docker.yml
# ansible-playbook 04.kube-master.yml
# ansible-playbook 05.kube-node.yml
# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
# ansible-playbook 06.network.yml
# kubectl get pods --all-namespaces
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-6cf5b744d7-7wnlw   1/1     Running   0          6m38s
kube-system   calico-node-25dlc                          1/1     Running   0          6m37s
kube-system   calico-node-49q4n                          1/1     Running   0          6m37s
kube-system   calico-node-4gmcp                          1/1     Running   0          6m37s
kube-system   calico-node-gt4bt                          1/1     Running   0          6m37s
kube-system   calico-node-svcdj                          1/1     Running   0          6m38s
kube-system   calico-node-tkrqt                          1/1     Running   0          6m37s
```
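After 06.network.yml, the calico pods may need a few minutes to become ready. Below is a minimal sketch that filters `kubectl get pods --all-namespaces` output down to pods that are not fully ready. `not_ready_pods` is a hypothetical helper name. It assumes the default column order shown above, where READY is column 3 and STATUS is column 4, and it will also list Completed job pods.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get pods --all-namespaces" output on
# stdin and print pods whose READY count is incomplete or whose STATUS is
# not Running. No output means every pod is up.
not_ready_pods() {
  awk 'NR > 1 {
    split($3, r, "/")                      # READY column, e.g. "1/1"
    if (r[1] != r[2] || $4 != "Running") print $1 "/" $2 " " $3 " " $4
  }'
}
```

Typical use is `kubectl get pods --all-namespaces | not_ready_pods`, repeated until it prints nothing.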

Enable kubectl command completion:

```bash
# source /usr/share/bash-completion/bash_completion
# source <(kubectl completion bash)
# echo "source <(kubectl completion bash)" >> ~/.bashrc
```

Deploy metrics-server from the manifests shipped with kubeasz:

```text
# pwd
/etc/ansible/manifests/metrics-server
# ll
total 24
-rw-rw-r-- 1 root root  303 Feb  1 10:35 auth-delegator.yaml
-rw-rw-r-- 1 root root  324 Feb  1 10:35 auth-reader.yaml
-rw-rw-r-- 1 root root  293 Feb  1 10:35 metrics-apiservice.yaml
-rw-rw-r-- 1 root root 1107 Mar 16 12:33 metrics-server-deployment.yaml
-rw-rw-r-- 1 root root  291 Feb  1 10:35 metrics-server-service.yaml
-rw-rw-r-- 1 root root  517 Feb  1 10:35 resource-reader.yaml
```

Pin the pod to a specific host (see the host layout above for the reason):

```bash
# cat metrics-server-deployment.yaml    ## excerpt: add the following under spec.template.spec
      nodeSelector:                     ## schedule only on nodes carrying this label
        metricsserver: "true"
# kubectl label nodes 20.0.0.204 metricsserver=true
# kubectl apply -f .
# kubectl get pods --all-namespaces -o wide | grep metrics
kube-system   metrics-server-6694c7dd66-p6x6n   1/1   Running   1   148m   172.20.46.70   20.0.0.204   <none>   <none>
```

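Metrics Server is a cluster-wide aggregator of resource usage data, and it runs in the cluster as an ordinary application. Once it is running, `kubectl top` works: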

```text
# kubectl top pods --all-namespaces
NAMESPACE     NAME                                       CPU(cores)   MEMORY(bytes)
kube-system   calico-kube-controllers-6cf5b744d7-7wnlw   1m           14Mi
kube-system   calico-node-25dlc                          60m          53Mi
kube-system   calico-node-49q4n                          39m          52Mi
kube-system   calico-node-4gmcp                          20m          50Mi
kube-system   calico-node-gt4bt                          80m          52Mi
kube-system   calico-node-svcdj                          21m          51Mi
kube-system   calico-node-tkrqt                          37m          55Mi
kube-system   coredns-76b74f549-km72p                    9m           13Mi
kube-system   metrics-server-6694c7dd66-p6x6n            5m           17Mi
# kubectl top nodes
NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
20.0.0.201   297m         7%     829Mi           64%
20.0.0.202   282m         15%    790Mi           61%
20.0.0.203   307m         17%    799Mi           62%
20.0.0.204   182m         4%     411Mi           5%
20.0.0.205   293m         7%     384Mi           17%
20.0.0.206   168m         4%     322Mi           25%
```
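The `kubectl top nodes` output can be filtered to spot memory pressure before more workloads land on a node. Below is a minimal sketch. `high_mem_nodes` is a hypothetical helper name, and the default threshold of 80% is an arbitrary assumption. It expects MEMORY% in column 5, as shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl top nodes" output on stdin and print the
# nodes whose MEMORY% (column 5) is above a threshold (default 80).
high_mem_nodes() {
  local threshold=${1:-80}
  awk -v t="$threshold" 'NR > 1 {
    pct = $5; sub(/%$/, "", pct)           # "64%" -> "64"
    if (pct + 0 > t + 0) print $1 " " $5
  }'
}
```

With the output above, `kubectl top nodes | high_mem_nodes 60` lists 20.0.0.201, 20.0.0.202 and 20.0.0.203. These are the 2 GB masters, and two of them are also the planned Traefik hosts.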

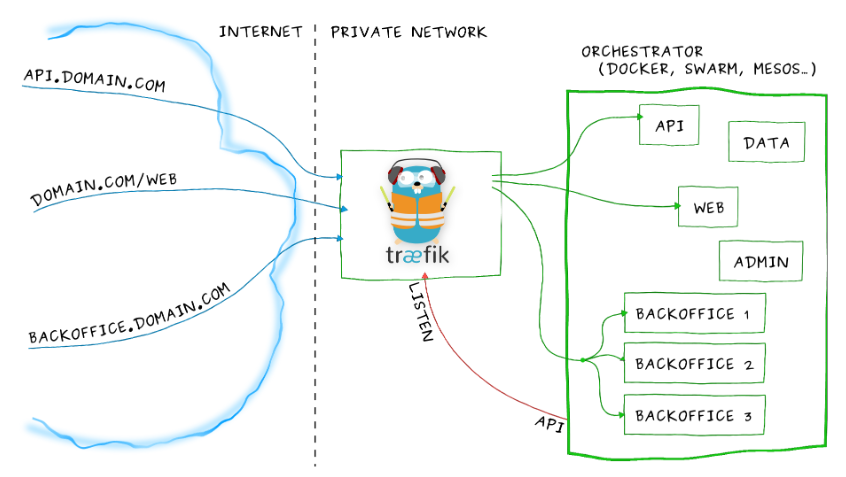

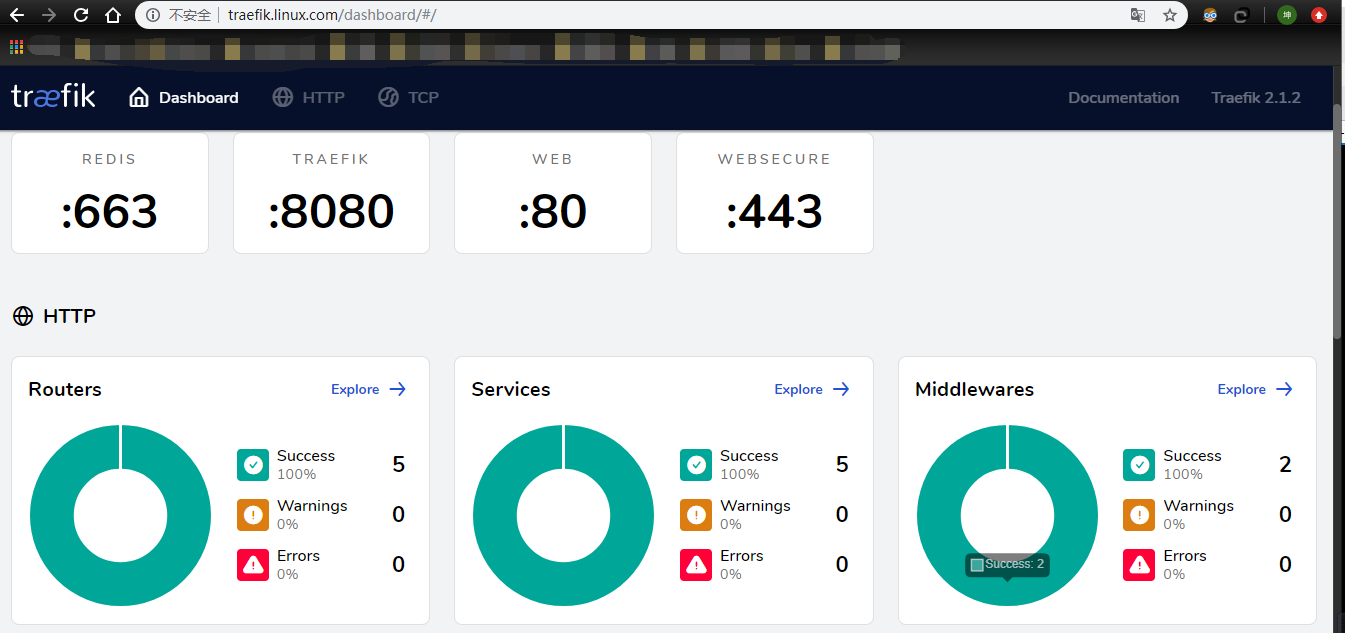

Deploy the Traefik 2.1 proxy

Traefik v2 (2.1 here) configures routing through CRDs (Custom Resource Definitions), so the CRD resources have to be created first.

Create the traefik-crd.yaml file:

```yaml
# cat traefik-crd.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: traefik-crd.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
## IngressRoute
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressroutes.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRoute
    plural: ingressroutes
    singular: ingressroute
---
## IngressRouteTCP
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: ingressroutetcps.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: IngressRouteTCP
    plural: ingressroutetcps
    singular: ingressroutetcp
---
## Middleware
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: middlewares.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: Middleware
    plural: middlewares
    singular: middleware
---
## TLSOption
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: tlsoptions.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TLSOption
    plural: tlsoptions
    singular: tlsoption
---
## TraefikService
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: traefikservices.traefik.containo.us
spec:
  scope: Namespaced
  group: traefik.containo.us
  version: v1alpha1
  names:
    kind: TraefikService
    plural: traefikservices
    singular: traefikservice
```

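Create the RBAC permissions

Kubernetes introduced role-based access control (RBAC) in version 1.6, allowing fine-grained control over Kubernetes resources and the API. Traefik needs certain permissions, so create a Traefik ServiceAccount up front and grant it those permissions.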

```yaml
# cat traefik-rbac.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: traefik-rbac.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
## ServiceAccount
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: kube-system
  name: traefik-ingress-controller
---
## ClusterRole
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups: [""]
    resources: ["services","endpoints","secrets"]
    verbs: ["get","list","watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses"]
    verbs: ["get","list","watch"]
  - apiGroups: ["extensions"]
    resources: ["ingresses/status"]
    verbs: ["update"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["middlewares"]
    verbs: ["get","list","watch"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["ingressroutes"]
    verbs: ["get","list","watch"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["ingressroutetcps"]
    verbs: ["get","list","watch"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["tlsoptions"]
    verbs: ["get","list","watch"]
  - apiGroups: ["traefik.containo.us"]
    resources: ["traefikservices"]
    verbs: ["get","list","watch"]
---
## ClusterRoleBinding
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: kube-system
```
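Once the RBAC manifest is applied, `kubectl auth can-i` with `--as` impersonation shows whether the Traefik ServiceAccount really received the grants listed in the ClusterRole. Below is a minimal sketch. `check_traefik_rbac` is a hypothetical helper name. The `KUBECTL` override is an assumption added so the loop can be dry-run with `KUBECTL=echo`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ask the API server whether the traefik ServiceAccount
# may "list" each resource granted by the ClusterRole above. Set KUBECTL=echo
# to print the commands instead of running them.
check_traefik_rbac() {
  local kubectl=${KUBECTL:-kubectl}
  local sa=system:serviceaccount:kube-system:traefik-ingress-controller
  local res
  for res in services endpoints secrets \
             ingresses.extensions \
             ingressroutes.traefik.containo.us ingressroutetcps.traefik.containo.us \
             middlewares.traefik.containo.us tlsoptions.traefik.containo.us \
             traefikservices.traefik.containo.us; do
    printf '%s: ' "$res"
    "$kubectl" auth can-i list "$res" --as="$sa"
  done
}
```

Run with cluster-admin credentials, `check_traefik_rbac` should print `yes` for every resource. A `no` points to a typo in the ClusterRole or the binding.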

Create the Traefik configuration file:

```yaml
# cat traefik-config.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: traefik-config.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
kind: ConfigMap
apiVersion: v1
metadata:
  name: traefik-config
  namespace: kube-system
data:
  traefik.yaml: |-
    ping: ""                    ## enable ping
    serversTransport:
      insecureSkipVerify: true  ## Traefik skips verifying the TLS certificates of proxied services
    api:
      insecure: true            ## allow access to the API over plain HTTP
      dashboard: true           ## enable the dashboard
      debug: false              ## debug mode (disabled here)
    metrics:
      prometheus: ""            ## expose Prometheus metrics with the default settings
    entryPoints:
      web:
        address: ":80"          ## listen on port 80; entryPoint name "web"
      websecure:
        address: ":443"         ## listen on port 443; entryPoint name "websecure"
      redis:
        address: ":6379"        ## must match the redis containerPort/hostPort (6379) in the DaemonSet
    providers:
      kubernetesCRD: ""         ## enable routing rules via Kubernetes CRDs
      kubernetesIngress: ""     ## enable routing rules via Kubernetes Ingress
    log:
      filePath: ""              ## log file path; empty means log to the console
      level: error              ## log level
      format: json              ## log format
    accessLog:
      filePath: ""              ## access log file path; empty means log to the console
      format: json              ## access log format
      bufferingSize: 0          ## number of access log lines to buffer
      filters:
        #statusCodes: ["200"]   ## keep only access logs whose status code is in this range
        retryAttempts: true     ## keep access logs for requests whose proxy retries failed
        minDuration: 20         ## keep access logs for requests that took longer than this
      fields:                   ## whether to keep access log fields (keep, drop)
        defaultMode: keep       ## keep fields by default
        names:                  ## per-field overrides
          ClientUsername: drop
        headers:                ## whether to keep header fields
          defaultMode: keep     ## keep header fields by default
          names:                ## per-header overrides
            User-Agent: redact
            Authorization: drop
            Content-Type: keep
```

Deploy Traefik:

```yaml
# cat traefik-deploy.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: traefik-deploy.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: v1
kind: Service
metadata:
  name: traefik
  namespace: kube-system
spec:
  ports:
    - name: web
      port: 80
    - name: websecure
      port: 443
    - name: admin
      port: 8080
  selector:
    app: traefik
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    app: traefik
spec:
  selector:
    matchLabels:
      app: traefik
  template:
    metadata:
      name: traefik
      labels:
        app: traefik
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 1
      containers:
        - image: traefik:v2.1.2
          name: traefik-ingress-lb
          ports:
            - name: web
              containerPort: 80
              hostPort: 80          ## bind the container port to port 80 on the host
            - name: websecure
              containerPort: 443
              hostPort: 443         ## bind the container port to port 443 on the host
            - name: redis
              containerPort: 6379
              hostPort: 6379
            - name: admin
              containerPort: 8080   ## Traefik dashboard port
          resources:
            limits:
              cpu: 2000m
              memory: 1024Mi
            requests:
              cpu: 1000m
              memory: 1024Mi
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
          args:
            - --configfile=/config/traefik.yaml
          volumeMounts:
            - mountPath: "/config"
              name: "config"
      volumes:
        - name: config
          configMap:
            name: traefik-config
      tolerations:                  ## tolerate all taints, so the pods still run if a node gets tainted
        - operator: "Exists"
      nodeSelector:                 ## run only on nodes carrying this label
        IngressProxy: "true"
```
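Every entryPoint port in traefik-config.yaml needs a matching containerPort (and hostPort) in traefik-deploy.yaml. Otherwise routes on that entryPoint cannot be reached from outside, for example when the redis entryPoint says `:663` but the DaemonSet exposes 6379. Below is a minimal grep-based sketch that lists entryPoint ports with no matching containerPort. The function names are hypothetical, and the patterns assume the `address: ":PORT"` and `containerPort: PORT` spellings used in these two files.

```bash
#!/usr/bin/env bash
# address: ":443"  ->  443
entrypoint_ports() {
  grep -oE 'address: *"?:[0-9]+' "$1" | grep -oE '[0-9]+$' | LC_ALL=C sort -u
}

# containerPort: 443  ->  443
container_ports() {
  grep -oE 'containerPort: *[0-9]+' "$1" | grep -oE '[0-9]+$' | LC_ALL=C sort -u
}

# Print entryPoint ports (from the ConfigMap file, $1) that no containerPort
# (in the DaemonSet file, $2) declares. No output means they line up.
unexposed_entrypoints() {
  LC_ALL=C comm -23 <(entrypoint_ports "$1") <(container_ports "$2")
}
```

Run it as `unexposed_entrypoints traefik-config.yaml traefik-deploy.yaml` before `kubectl apply -f .`.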

Configure the Traefik routing rules:

```yaml
# cat traefik-dashboard-route.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: traefik-dashboard-route.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: traefik-dashboard-route
  namespace: kube-system
spec:
  entryPoints:
    - web
  routes:
    - match: Host(`traefik.linux.com`)
      kind: Rule
      services:
        - name: traefik
          port: 8080
```

Label the nodes:

```bash
# kubectl label nodes 20.0.0.202 IngressProxy=true
# kubectl label nodes 20.0.0.203 IngressProxy=true
```

To remove the label, run `kubectl label nodes <nodeIP> IngressProxy-`.
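When the same label goes onto several nodes, a small loop avoids typos and shows the result right away. Below is a minimal sketch. `label_nodes` is a hypothetical helper name. `--overwrite` lets a re-run succeed if the label already exists, and the `KUBECTL` override is an assumption added for dry runs with `KUBECTL=echo`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: apply one key=value label to several nodes, then list
# the nodes that match it.
label_nodes() {
  local kubectl=${KUBECTL:-kubectl} label=$1 node
  shift
  for node in "$@"; do
    "$kubectl" label nodes "$node" "$label" --overwrite
  done
  # A key=value label is also a valid label selector.
  "$kubectl" get nodes -l "$label"
}
```

For this layout, `label_nodes IngressProxy=true 20.0.0.202 20.0.0.203` replaces the two commands above.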

Deploy Traefik to Kubernetes:

```bash
# kubectl apply -f .
```

Traefik is now deployed:

```text
# kubectl get pods --all-namespaces -o wide
kube-system   traefik-ingress-controller-m8jf9   1/1   Running   0   7m34s   172.20.177.130   20.0.0.202   <none>   <none>
kube-system   traefik-ingress-controller-r7cgl   1/1   Running   0   7m25s   172.20.194.130   20.0.0.203   <none>   <none>
```

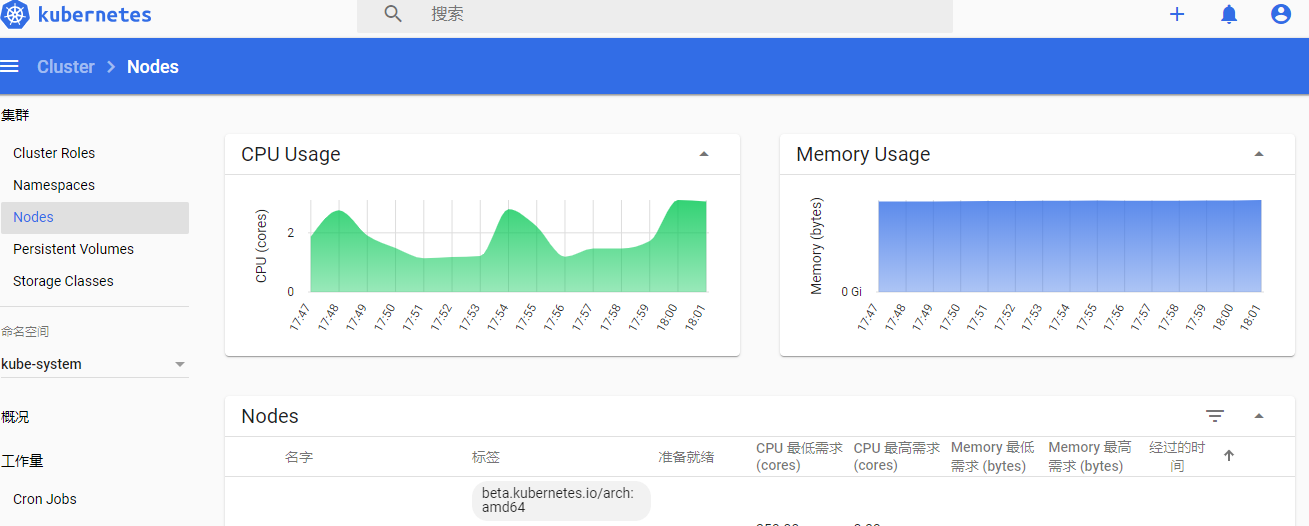

Deploy the dashboard

Resource manifests:

```bash
# pwd
/data/k8s/dashboard
```

```yaml
# cat admin-user-sa-rbac.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-02-05
#FileName: admin-user-sa-rbac.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kube-system
```
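Logging in to the dashboard as admin-user requires that ServiceAccount's token. On Kubernetes 1.17 the token is stored in an automatically created Secret named `admin-user-token-<random suffix>`. Below is a minimal sketch that picks that Secret's name out of `kubectl get secret` output. `admin_token_secret` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl -n kube-system get secret" output on
# stdin and print the name of the first admin-user token Secret.
admin_token_secret() {
  awk 'NR > 1 && $1 ~ /^admin-user-token-/ { print $1; exit }'
}
```

Typical use: `kubectl -n kube-system describe secret "$(kubectl -n kube-system get secret | admin_token_secret)"`. The `token:` field in that output is what the dashboard login page asks for.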

# cat kubernetes-dashboard.yaml

##########################################################################

#Author: zisefeizhu

#QQ: 2********0

#Date: 2020-02-05

#FileName: kubernetes-dashboard.yaml

#URL: https://www.cnblogs.com/zisefeizhu/

#Description: The test script

#Copyright (C): 2020 All rights reserved

###########################################################################

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

name: kubernetes-dashboard

namespace: kube-system

spec:

ports:

- port: 443

targetPort: 8443

nodePort: 30001

selector:

k8s-app: kubernetes-dashboard

type: NodePort

---

#注释掉 使用自己创建的certs

#apiVersion: v1

#kind: Secret

#metadata:

# labels:

# k8s-app: kubernetes-dashboard

# name: kubernetes-dashboard-certs

# namespace: kube-system

#type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kube-system

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kube-system

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kube-system

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/dashboard:v2.0.0-rc3
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kube-system
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Node selector: only start on Linux nodes that carry the label dashboard=true.
      # Keep both labels in one map: YAML does not allow a second "nodeSelector:" key,
      # and a duplicate would at best silently replace the first one.
      nodeSelector:
        "beta.kubernetes.io/os": linux
        dashboard: "true"
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-scraper:v1.0.3
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      # Node selector: only start on Linux nodes that carry the label metricsscraper=true
      # (one map; a duplicate "nodeSelector:" key is not valid YAML).
      nodeSelector:
        "beta.kubernetes.io/os": linux
        metricsscraper: "true"
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}
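Both Deployments above pin their Pods with a nodeSelector, so they stay Pending until at least one node carries the matching label. A minimal sketch of labeling a node, assuming you want both components on bs-k8s-node01 (a name taken from the host table; any node works). `label_dashboard_node` is a hypothetical helper, not something kubeasz provides:

```shell
# Hypothetical helper: add the two labels that the nodeSelectors of the
# kubernetes-dashboard and dashboard-metrics-scraper Deployments look for.
# --overwrite lets you re-run it on a node that already has these labels
# (without it, kubectl label refuses to touch an existing key).
label_dashboard_node() {
  kubectl label node "$1" dashboard=true metricsscraper=true --overwrite
}
```

After `label_dashboard_node bs-k8s-node01`, `kubectl get nodes -l dashboard=true,metricsscraper=true` should list that node. Putting the two labels on different nodes also works; each Deployment only needs one node with its own label.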

# cat read-user-sa-rbac.yaml

##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-02-05
#FileName: read-user-sa-rbac.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-read-user
  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-read-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: dashboard-read-clusterrole
subjects:
  - kind: ServiceAccount
    name: dashboard-read-user
    namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: dashboard-read-clusterrole
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - persistentvolumes
      - persistentvolumeclaims
      - persistentvolumeclaims/status
      - pods
      - replicationcontrollers
      - replicationcontrollers/scale
      - serviceaccounts
      - services
      - services/status
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - bindings
      - events
      - limitranges
      - namespaces/status
      - pods/log
      - pods/status
      - replicationcontrollers/status
      - resourcequotas
      - resourcequotas/status
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - namespaces
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - apps
    resources:
      - controllerrevisions
      - daemonsets
      - daemonsets/status
      - deployments
      - deployments/scale
      - deployments/status
      - replicasets
      - replicasets/scale
      - replicasets/status
      - statefulsets
      - statefulsets/scale
      - statefulsets/status
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - autoscaling
    resources:
      - horizontalpodautoscalers
      - horizontalpodautoscalers/status
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - batch
    resources:
      - cronjobs
      - cronjobs/status
      - jobs
      - jobs/status
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - daemonsets
      - daemonsets/status
      - deployments
      - deployments/scale
      - deployments/status
      - ingresses
      - ingresses/status
      - replicasets
      - replicasets/scale
      - replicasets/status
      - replicationcontrollers/scale
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - policy
    resources:
      - poddisruptionbudgets
      - poddisruptionbudgets/status
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
      - ingresses/status
      - networkpolicies
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - storage.k8s.io
    resources:
      - storageclasses
      - volumeattachments
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - rbac.authorization.k8s.io
    resources:
      - clusterrolebindings
      - clusterroles
      - roles
      - rolebindings
    verbs:
      - get
      - list
      - watch
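Before handing out a token for `dashboard-read-user`, you can ask the API server whether the account really is read-only by impersonating it with `kubectl auth can-i`. A small sketch; `read_user_can` is a hypothetical helper name, and it has to run with credentials that are allowed to impersonate (the cluster-admin kubeconfig is):

```shell
# The username Kubernetes gives the ServiceAccount from read-user-sa-rbac.yaml
READ_SA="system:serviceaccount:kube-system:dashboard-read-user"

# read_user_can VERB RESOURCE
# Prints "yes" or "no"; kubectl auth can-i exits 0 for yes and non-zero for no.
read_user_can() {
  kubectl auth can-i "$1" "$2" --as="$READ_SA"
}
```

Given the ClusterRole above, `read_user_can list pods` should answer yes, while `read_user_can delete pods` and `read_user_can get secrets` should answer no, because secrets are deliberately absent from the rules. The token for this account can be exported the same way as the admin-user token, grepping for `dashboard-read-user` instead.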

# cat traefik-dashboard-ingressroute.yaml

##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-16
#FileName: traefik-dashboard-ingressroute.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: kubernetes-dashboard-route
  namespace: kube-system
spec:
  entryPoints:
    - websecure
  tls:
    secretName: k8s-dashboard-tls
  routes:
    - match: Host(`dashboard.linux.com`)
      kind: Rule
      services:
        - name: kubernetes-dashboard
          port: 443
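Once the IngressRoute is applied, curl's `--resolve` option gives a quick test without editing /etc/hosts, because it pins dashboard.linux.com to an IP of your choice. This sketch assumes Traefik's websecure entrypoint answers on port 443 at 20.0.0.202 (one of the Traefik nodes in the host table); use the keepalived VIP instead if traffic enters through haproxy. `probe_dashboard` is a hypothetical helper:

```shell
# probe_dashboard IP: request https://dashboard.linux.com/ from the given IP and
# print only the HTTP status code. -k is needed because k8s-dashboard-tls holds
# a self-signed certificate.
probe_dashboard() {
  curl -sk -o /dev/null -w '%{http_code}\n' \
    --resolve "dashboard.linux.com:443:$1" https://dashboard.linux.com/
}
```

If `probe_dashboard 20.0.0.202` prints 200, Traefik most likely matched the Host rule and reached the dashboard Service; a 404 is Traefik's answer when no router matched. Browser access still needs a hosts entry for dashboard.linux.com on the workstation.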

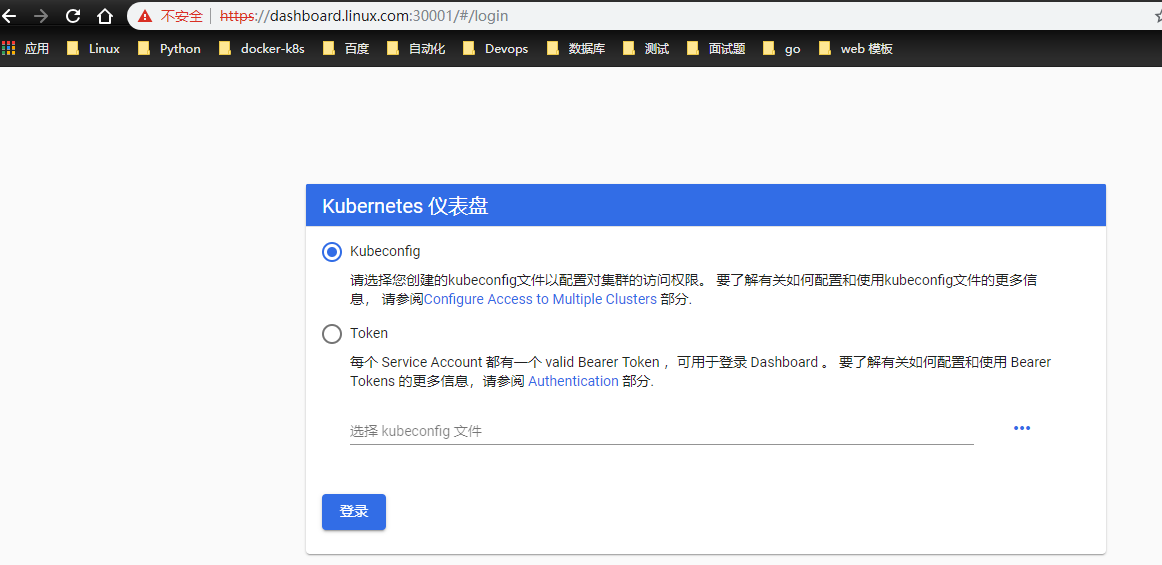

Certificates

mkdir -p /etc/kubernetes/pki/

cd /etc/kubernetes/pki/

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=20.0.0.204'

openssl x509 -req -days 3650 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

kubectl create secret generic kubernetes-dashboard-certs --from-file=/etc/kubernetes/pki/dashboard.key --from-file=/etc/kubernetes/pki/dashboard.crt -n kube-system

openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=dashboard.linux.com"

kubectl create secret generic k8s-dashboard-tls --from-file=tls.crt --from-file=tls.key -n kube-system
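Note that `openssl x509 -req` issues a certificate for its default of 30 days unless `-days` is passed to that command; the `-days` option of `openssl req` is ignored when `-x509` is not used. A minimal way to check how long the generated files stay valid, built on `openssl x509 -checkend`; `cert_valid_for_days` is a hypothetical helper name:

```shell
# cert_valid_for_days CERT DAYS
# Exit status 0 if CERT will still be valid DAYS days from now, non-zero otherwise.
# -checkend expects seconds, so DAYS is converted first.
cert_valid_for_days() {
  openssl x509 -in "$1" -noout -checkend $(( $2 * 86400 ))
}
```

For example, `openssl x509 -in dashboard.crt -noout -subject -enddate` shows the CN and expiry date, and `cert_valid_for_days dashboard.crt 365` should succeed for a certificate signed with `-days 3650`, but fail for one that fell back to the 30-day default.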

Deploy the dashboard

# kubectl apply -f .

Export the login token

# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-cj5l4

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: d4a13fad-f427-435b-86a7-6dfc534e926d

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1350 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Il9wNERQb2tOU2pMRkdoTXlDSDRIOVh5R3pLdnA2ektIMHhXQVBucEdldFUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWNqNWw0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkNGExM2ZhZC1mNDI3LTQzNWItODZhNy02ZGZjNTM0ZTkyNmQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.T65yeuBa2ExprRigERC-hPG-WSdaW7B-04O5qRcXn7SLKpK_4tMM8rlraClGmc-ppSDIi35ZjK0SVb8YGDeUnt2psJlRLYVEPsJXHwYiNUfrigVs67Uo3aMGhSdjPEaqdZxsnRrReSW_rfX8odjXF0-wGKx7uA8GelUJuRNIZ0eBSu_iGJchpZxU_K3AdU_dmcyHidKzDxbPLVgAb8m7wE9wcelWVK9g6UOeg71bO0gJtlXrjWrBMfBjvnC4oLDBYs9ze96KmeOLwjWTOlwXaYg4nIuVRL13BaqmBJB9lcRa3jrCDsRT0oBZrBymvqxbCCN2VVjDmz-kZXh7BcWVLg
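The token is a JWT, so before pasting it anywhere you can confirm which service account it belongs to by decoding its middle (payload) segment, which is base64url-encoded without padding. A sketch using only coreutils, assuming GNU `base64`; `jwt_payload` is a hypothetical helper name, and it only decodes the payload without verifying the signature:

```shell
# jwt_payload TOKEN: print the decoded JSON payload of a JWT.
jwt_payload() {
  local payload
  # Take the second dot-separated segment and map base64url back to base64
  # ('-' -> '+', '_' -> '/').
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it so base64 -d accepts the input.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

For the token above, the output should contain a `sub` field of `system:serviceaccount:kube-system:admin-user`.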

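# vim /root/.kube/config //add the following line under the "user:" entry in the users section, indented with four spaces so it lines up with client-certificate-data (YAML does not accept tab characters for indentation)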

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Il9wNERQb2tOU2pMRkdoTXlDSDRIOVh5R3pLdnA2ektIMHhXQVBucEdldFUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWNqNWw0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJkNGExM2ZhZC1mNDI3LTQzNWItODZhNy02ZGZjNTM0ZTkyNmQiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.T65yeuBa2ExprRigERC-hPG-WSdaW7B-04O5qRcXn7SLKpK_4tMM8rlraClGmc-ppSDIi35ZjK0SVb8YGDeUnt2psJlRLYVEPsJXHwYiNUfrigVs67Uo3aMGhSdjPEaqdZxsnRrReSW_rfX8odjXF0-wGKx7uA8GelUJuRNIZ0eBSu_iGJchpZxU_K3AdU_dmcyHidKzDxbPLVgAb8m7wE9wcelWVK9g6UOeg71bO0gJtlXrjWrBMfBjvnC4oLDBYs9ze96KmeOLwjWTOlwXaYg4nIuVRL13BaqmBJB9lcRa3jrCDsRT0oBZrBymvqxbCCN2VVjDmz-kZXh7BcWVLg
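Hand-editing the kubeconfig is easy to get wrong, since YAML parsers reject tab indentation. An alternative sketch lets kubectl write the token itself with `kubectl config set-credentials`, and does so on a copy so /root/.kube/config stays untouched. The user name `admin` is an assumption about the kubeconfig kubeasz generates (check yours with `kubectl config view`), and `make_dashboard_kubeconfig` is a hypothetical helper:

```shell
# make_dashboard_kubeconfig SRC DST USER TOKEN
# Copy SRC to DST, then add TOKEN to the credentials of USER inside DST only.
# set-credentials merges the token into an existing user entry.
make_dashboard_kubeconfig() {
  cp "$1" "$2" || return 1
  kubectl config set-credentials "$3" --token="$4" --kubeconfig="$2"
}
```

Usage: `make_dashboard_kubeconfig /root/.kube/config /data/k8s/dashboard/k8s-dashboard.kubeconfig admin "$TOKEN"`, with TOKEN set to the token exported above.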

# cp /root/.kube/config /data/k8s/

# cp /root/.kube/config /data/k8s/dashboard/k8s-dashboard.kubeconfig

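# sz /data/k8s/dashboard/k8s-dashboard.kubeconfig //download it to your workstation (requires lrzsz) and select it on the dashboard's Kubeconfig login page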

Learning by doing is like climbing a mountain: every step opens up a new sky.