Deploying Harbor in HTTPS mode and integrating it with Kubernetes

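In the earlier post on deploying highly available Kubernetes 1.17.2 with kubeasz and Traefik 2.1.2, we already set up chrony-based time synchronization. The Harbor host (bs-k8s-harbor01, 20.0.0.207) simply reuses the masters' chrony.conf: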

```shell
[root@bs-k8s-master01 ~]# cat /etc/chrony.conf
# Use public servers from the pool.ntp.org project.
server 20.0.0.202 iburst
[root@bs-k8s-master01 ~]# chronyc sources -v
210 Number of sources = 1

  .-- Source mode  '^' = server, '=' = peer, '#' = local clock.
 / .- Source state '*' = current synced, '+' = combined , '-' = not combined,
| /   '?' = unreachable, 'x' = time may be in error, '~' = time too variable.
||                                                 .- xxxx [ yyyy ] +/- zzzz
||      Reachability register (octal) -.           |  xxxx = adjusted offset,
||      Log2(Polling interval) --.      |          |  yyyy = measured offset,
||                                \     |          |  zzzz = estimated error.
||                                 |    |           \
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* bs-k8s-master02               3   6   377     4    -15ms[  -17ms] +/-   21ms
[root@bs-k8s-master01 ~]# scp /etc/chrony.conf 20.0.0.207:/etc/chrony.conf
root@20.0.0.207's password:
chrony.conf                                   100% 1011   662.7KB/s   00:00
[root@bs-k8s-harbor01 ~]# systemctl restart chronyd.service
[root@bs-k8s-harbor01 ~]# chronyc sources -v
210 Number of sources = 1
......
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* 20.0.0.202                    3   6     7     1   +25us[ -546us] +/-   36ms
```
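To check this from a script instead of reading the table by eye, one option is to look for the line that starts with `^*`, which the legend above defines as a server source that is currently synced. A minimal sketch; the function name `chrony_synced_source` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `chronyc sources` output on stdin and prints the
# name/IP of the source marked "^*" (server mode, currently synced).
# Exits non-zero when no source is synced yet.
chrony_synced_source() {
  awk '/^\^\*/ { print $2; found = 1 } END { exit !found }'
}

# Usage on the Harbor host:
#   chronyc sources | chrony_synced_source || echo "chrony is not synced yet" >&2
```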

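Note: time synchronization should be second nature to everyone working in IT. For Harbor it matters in practice: TLS certificates and registry login tokens are time-bounded, so clock skew between clients and the registry can cause otherwise puzzling authentication failures.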

Deploying Docker

```shell
# Install commonly used server tools
# yum -y install wget vim iftop iotop net-tools nmon telnet lsof iptraf nmap httpd-tools lrzsz mlocate ntp ntpdate strace libpcap nethogs bridge-utils bind-utils nc nfs-utils rpcbind dnsmasq python python-devel yum-utils device-mapper-persistent-data lvm2 tcpdump tree
# Add the Docker CE repository
[root@bs-k8s-harbor01 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# List all docker-ce versions and pick the one the Kubernetes cluster runs
# (I have not tried whether a mismatched version works)
[root@bs-k8s-harbor01 ~]# yum list docker-ce --showduplicates | sort -r
# Docker version used by the Kubernetes cluster
[root@bs-k8s-master01 ~]# docker -v
Docker version 19.03.5, build 633a0ea838
# Install the matching version
[root@bs-k8s-harbor01 ~]# yum -y install docker-ce-19.03.5-3.el7
# Keep daemon.json identical to the rest of the cluster
[root@bs-k8s-master01 docker]# cat daemon.json
{
  "registry-mirrors": ["https://dockerhub.azk8s.cn", "https://docker.mirrors.ustc.edu.cn"],
  "insecure-registries": ["127.0.0.1/8"],
  "max-concurrent-downloads": 10,
  "log-driver": "json-file",
  "log-level": "warn",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  },
  "data-root": "/var/lib/docker"
}
[root@bs-k8s-harbor01 ~]# mkdir -p /etc/docker
[root@bs-k8s-master01 docker]# scp daemon.json 20.0.0.207:/etc/docker/
root@20.0.0.207's password:
[root@bs-k8s-harbor01 docker]# systemctl restart docker && systemctl enable docker
[root@bs-k8s-harbor01 docker]# docker version
Client: Docker Engine - Community
 Version:           19.03.5
......
Server: Docker Engine - Community
 Engine:
  Version:          19.03.5
```
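Matching the cluster's Docker version by eye in the `sort -r` listing is easy to get wrong. The sketch below derives the `yum install` argument from a node's `docker -v` output and the `yum list docker-ce --showduplicates` listing. It assumes the usual three-column listing whose version column carries an epoch prefix such as `3:`; the function name `docker_pkg_for` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: given the `docker -v` string from a cluster node ($1) and
# the `yum list docker-ce --showduplicates` output on stdin, print the matching
# package spec for `yum install` with the epoch stripped.
docker_pkg_for() {
  local want
  # "Docker version 19.03.5, build 633a0ea838" -> "19.03.5"
  want="$(sed -E 's/^Docker version ([^,]+),.*/\1/' <<<"$1")"
  awk -v want="$want" '
    $1 ~ /^docker-ce\./ {
      ver = $2
      sub(/^[0-9]+:/, "", ver)        # drop the epoch, e.g. "3:"
      split(ver, parts, "-")          # "19.03.5-3.el7" -> "19.03.5", "3.el7"
      if (parts[1] == want) { print "docker-ce-" ver; found = 1; exit }
    }
    END { exit !found }'
}

# Usage on the Harbor host:
#   yum list docker-ce --showduplicates | docker_pkg_for "Docker version 19.03.5, build 633a0ea838"
```

If nothing matches (for example, the repository no longer carries that version), the function prints nothing and returns non-zero.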

Deploying Harbor

Harbor is managed with docker-compose, so install that first (on CentOS 7 the docker-compose package comes from EPEL):

```shell
# yum install -y docker-compose
# docker-compose version
docker-compose version 1.18.0, build 8dd22a9
docker-py version: 2.6.1
CPython version: 3.6.8
OpenSSL version: OpenSSL 1.0.2k-fips  26 Jan 2017
```

Harbor on GitHub: https://github.com/goharbor/harbor

The version used here is 1.8 (the v1.8.0 offline installer):

```shell
[root@bs-k8s-harbor01 data]# pwd
/data
[root@bs-k8s-harbor01 data]# ll
total 539940
-rw-r--r-- 1 root root 552897681 May 31  2019 harbor-offline-installer-v1.8.0.tgz
[root@bs-k8s-harbor01 data]# tar xf harbor-offline-installer-v1.8.0.tgz
[root@bs-k8s-harbor01 data]# mv harbor /usr/local/
[root@bs-k8s-harbor01 data]# cd /usr/local/harbor/
[root@bs-k8s-harbor01 harbor]# ls
harbor.v1.8.0.tar.gz  harbor.yml  install.sh  LICENSE  prepare
# Create the certificate
# mkdir /data/ca
# openssl genrsa -out /data/ca/harbor-ca.key
Generating RSA private key, 2048 bit long modulus
....................+++
..................................................................................+++
e is 65537 (0x10001)
# openssl req -x509 -new -nodes -key /data/ca/harbor-ca.key -subj "/CN=harbor.linux.com" -days 7120 -out /data/ca/harbor-ca.crt
# Edit the configuration file
# cp harbor.yml{,.bak}
# vim harbor.yml
# diff harbor.yml{,.bak}
5c5
< hostname: harbor.linux.com
---
> hostname: reg.mydomain.com
8c8
< #http:
---
> http:
10c10
< #  port: 80
---
>   port: 80
13c13
< https:
---
> # https:
15c15
<   port: 443
---
> #   port: 443
17,18c17,18
<   certificate: /data/ca/harbor-ca.crt
<   private_key: /data/ca/harbor-ca.key
---
> #   certificate: /your/certificate/path
> #   private_key: /your/private/key/path
27c27
< harbor_admin_password: zisefeizhu
---
> harbor_admin_password: Harbor12345
35c35
< data_volume: /data/harbor
---
> data_volume: /data
# Deploy
# mkdir -pv /etc/docker/certs.d/harbor.linux.com/
mkdir: created directory '/etc/docker/certs.d'
mkdir: created directory '/etc/docker/certs.d/harbor.linux.com/'
# cp /data/ca/harbor-ca.crt /etc/docker/certs.d/harbor.linux.com/
# ./install.sh
# docker-compose start
Starting log         ... done
Starting registry    ... done
Starting registryctl ... done
Starting postgresql  ... done
Starting core        ... done
Starting portal      ... done
Starting redis       ... done
Starting jobservice  ... done
Starting proxy       ... done
# docker ps
CONTAINER ID        IMAGE                                               COMMAND                  CREATED             STATUS                    PORTS                                      NAMES
287136c60b95        goharbor/nginx-photon:v1.8.0                        "nginx -g 'daemon of…"   38 seconds ago      Up 37 seconds (healthy)   0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp   nginx
66a07d42818c        goharbor/harbor-jobservice:v1.8.0                   "/harbor/start.sh"       42 seconds ago      Up 38 seconds                                                        harbor-jobservice
e4bb415fd236        goharbor/harbor-portal:v1.8.0                       "nginx -g 'daemon of…"   42 seconds ago      Up 38 seconds (healthy)   80/tcp                                     harbor-portal
1530c4b4c604        goharbor/harbor-core:v1.8.0                         "/harbor/start.sh"       43 seconds ago      Up 41 seconds (healthy)                                              harbor-core
adc160874fef        goharbor/redis-photon:v1.8.0                        "docker-entrypoint.s…"   44 seconds ago      Up 42 seconds             6379/tcp                                   redis
300165f93782        goharbor/harbor-db:v1.8.0                           "/entrypoint.sh post…"   44 seconds ago      Up 42 seconds (healthy)   5432/tcp                                   harbor-db
a81c3d53eb2e        goharbor/registry-photon:v2.7.1-patch-2819-v1.8.0   "/entrypoint.sh /etc…"   44 seconds ago      Up 43 seconds (healthy)   5000/tcp                                   registry
1a7cf72c6433        goharbor/harbor-registryctl:v1.8.0                  "/harbor/start.sh"       44 seconds ago      Up 42 seconds (healthy)                                              registryctl
6be2b10b733d        goharbor/harbor-log:v1.8.0                          "/bin/sh -c /usr/loc…"   45 seconds ago      Up 44 seconds (healthy)   127.0.0.1:1514->10514/tcp                  harbor-log
# ss -lntup
Netid  State   Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
udp    UNCONN  0       0       *:111               *:*        users:(("systemd",pid=1,fd=28))
udp    UNCONN  0       0       *:123               *:*        users:(("chronyd",pid=1558,fd=3))
udp    UNCONN  0       0       127.0.0.1:323       *:*        users:(("chronyd",pid=1558,fd=1))
udp    UNCONN  0       0       ::1:323             :::*       users:(("chronyd",pid=1558,fd=2))
tcp    LISTEN  0       128     *:22                *:*        users:(("sshd",pid=956,fd=3))
tcp    LISTEN  0       128     127.0.0.1:1514      *:*        users:(("docker-proxy",pid=6568,fd=4))
tcp    LISTEN  0       128     *:111               *:*        users:(("systemd",pid=1,fd=27))
tcp    LISTEN  0       128     :::80               :::*       users:(("docker-proxy",pid=7254,fd=4))
tcp    LISTEN  0       128     :::22               :::*       users:(("sshd",pid=956,fd=4))
tcp    LISTEN  0       128     :::443              :::*       users:(("docker-proxy",pid=7243,fd=4))
```
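The certificate above carries harbor.linux.com only in its subject CN. The Docker 19.03.5 used here accepts that, but newer Docker releases built with Go 1.15 or later no longer fall back to the CN when verifying the hostname, so a CN-only certificate fails verification there. If you regenerate the certificate, a sketch like the following adds a subjectAltName while staying compatible with CentOS 7's OpenSSL 1.0.2k, which has no `req -addext` option. The function name and the temporary config file are illustrative, not part of the original setup:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a self-signed key/cert pair for a Harbor hostname,
# putting the name in both the subject CN and the subjectAltName.
# Usage: make_harbor_cert HOSTNAME OUTDIR [DAYS]
make_harbor_cert() {
  local host="$1" out="$2" days="${3:-7120}" cnf rc
  mkdir -p "$out"
  cnf="$(mktemp)"
  # Minimal OpenSSL config; x509_extensions is used because of `req -x509`.
  cat >"$cnf" <<EOF
[req]
distinguished_name = dn
x509_extensions    = v3_ext
prompt             = no

[dn]
CN = ${host}

[v3_ext]
subjectAltName   = DNS:${host}
basicConstraints = CA:TRUE
EOF
  openssl genrsa -out "$out/harbor-ca.key" 2048 2>/dev/null &&
    openssl req -x509 -new -nodes -key "$out/harbor-ca.key" \
      -days "$days" -config "$cnf" -out "$out/harbor-ca.crt"
  rc=$?
  rm -f "$cnf"
  return "$rc"
}

# Usage, matching the paths in harbor.yml:
#   make_harbor_cert harbor.linux.com /data/ca
```

The harbor.yml paths stay the same; only the new harbor-ca.crt has to be copied into /etc/docker/certs.d/harbor.linux.com/ on every client again.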

```shell
# docker login harbor.linux.com
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
```

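Start Harbor at boot

```shell
# cat /etc/rc.d/rc.local
cd /usr/local/harbor && docker-compose start
```

On CentOS 7, /etc/rc.d/rc.local is only executed at boot when the file is executable, so also run `chmod +x /etc/rc.d/rc.local`.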
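rc.local works, but nothing in CentOS 7's rc-local.service orders it after docker.service, so `docker-compose start` can run before the Docker daemon is ready. A systemd unit with an explicit dependency on docker.service avoids that race. A sketch, assuming Harbor lives in /usr/local/harbor and docker-compose in /usr/bin/docker-compose (where the CentOS 7 package puts it); the unit name harbor.service and the function `harbor_unit` are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a systemd unit that starts an existing Harbor
# docker-compose project once the Docker daemon is up.
# Usage: harbor_unit [HARBOR_DIR] [COMPOSE_BIN]
harbor_unit() {
  local dir="${1:-/usr/local/harbor}" compose="${2:-/usr/bin/docker-compose}"
  cat <<EOF
[Unit]
Description=Harbor registry (docker-compose)
Requires=docker.service
After=docker.service

[Service]
Type=oneshot
RemainAfterExit=yes
WorkingDirectory=${dir}
ExecStart=${compose} start
ExecStop=${compose} stop

[Install]
WantedBy=multi-user.target
EOF
}

# Usage (as root), instead of the rc.local line:
#   harbor_unit > /etc/systemd/system/harbor.service
#   systemctl daemon-reload && systemctl enable harbor.service
```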

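Note: if `docker login` fails, the likely causes are (1) harbor.linux.com does not resolve because it has no entry in /etc/hosts, or (2) a wrong password.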
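For the first cause, every machine that talks to Harbor, including the Harbor host itself, needs harbor.linux.com to resolve to 20.0.0.207. An idempotent way to add that mapping, as a sketch; the function name is hypothetical, and the optional file argument only exists so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless HOSTNAME is
# already mapped on a non-comment line. An existing mapping is left untouched.
# Usage: ensure_host_entry IP HOSTNAME [HOSTS_FILE]
ensure_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >>"$file"
}

# Usage on the Harbor host and on every cluster node:
#   ensure_host_entry 20.0.0.207 harbor.linux.com
```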

Client configuration

Using bs-k8s-master01 as an example:

```shell
# mkdir -pv /etc/docker/certs.d/harbor.linux.com/ && scp 20.0.0.207:/data/ca/harbor-ca.crt /etc/docker/certs.d/harbor.linux.com/ && docker login harbor.linux.com
mkdir: created directory '/etc/docker/certs.d'
mkdir: created directory '/etc/docker/certs.d/harbor.linux.com/'
The authenticity of host '20.0.0.207 (20.0.0.207)' can't be established.
ECDSA key fingerprint is SHA256:EqqNfQ6sVyEO5yRX8E2plLlEaaeTyLbXhocH4uxhvJw.
ECDSA key fingerprint is MD5:a2:3a:03:bc:e7:7a:f8:c3:ef:db:6c:d5:d2:34:e1:3c.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '20.0.0.207' (ECDSA) to the list of known hosts.
root@20.0.0.207's password:
harbor-ca.crt                                 100% 1115   512.7KB/s   00:00
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
# mkdir -pv /etc/docker/certs.d/harbor.linux.com/
mkdir: created directory '/etc/docker/certs.d'
mkdir: created directory '/etc/docker/certs.d/harbor.linux.com/'
# scp 20.0.0.207:/data/ca/harbor-ca.crt /etc/docker/certs.d/harbor.linux.com/
root@20.0.0.207's password:
harbor-ca.crt                                 100% 1115   690.7KB/s   00:00
# docker login harbor.linux.com
Username: admin
Password:
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
```

Next, list the registry under insecure-registries in /etc/docker/daemon.json. The IP form 20.0.0.207:443 needs to be there because the certificate names only harbor.linux.com, so certificate verification would fail when the registry is addressed by IP.

```shell
# cat /etc/docker/daemon.json
{
  "registry-mirrors": ["https://dockerhub.azk8s.cn", "https://docker.mirrors.ustc.edu.cn"],
  "insecure-registries": ["harbor.linux.com", "20.0.0.207:443"],
  "max-concurrent-downloads": 10,
  "log-driver": "json-file",
  "log-level": "warn",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  },
  "data-root": "/var/lib/docker"
}
# systemctl restart docker
# docker login harbor.linux.com
# docker login 20.0.0.207:443
# cat /root/.docker/config.json
{
  "auths": {
    "20.0.0.207:443": {
      "auth": "YWRtaW46emlzZWZlaXpodQ=="
    },
    "harbor.linux.com": {
      "auth": "YWRtaW46emlzZWZlaXpodQ=="
    }
  },
  "HttpHeaders": {
    "User-Agent": "Docker-Client/19.03.5 (linux)"
  }
}
```
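As the login warning says, these credentials are not encrypted: each `auth` value is just base64 of `username:password`. Two illustrative helpers (the names are hypothetical) that show the round trip:

```shell
#!/usr/bin/env bash
# Illustrative helpers: the "auth" field in ~/.docker/config.json is plain
# base64 of "username:password", not encryption.
encode_docker_auth() { # $1=username $2=password
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
  echo
}

decode_docker_auth() { # $1=auth value from config.json
  printf '%s' "$1" | base64 -d
  echo
}

# Usage, with the value from the config.json above:
#   decode_docker_auth YWRtaW46emlzZWZlaXpodQ==   # prints admin:zisefeizhu
```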

Note: repeat the same steps on every other machine in the cluster.
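With more than a few nodes, the certificate step can be pushed out from the Harbor host, which already holds /data/ca/harbor-ca.crt. A sketch that assumes root SSH access to each node; the function names are hypothetical, HARBOR_HOST and CA_SRC default to the values used in this article, and DRY_RUN=1 prints the commands instead of running them:

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the Harbor CA into dockerd's per-registry trust
# directory (/etc/docker/certs.d/<host>/) on each node.
HARBOR_HOST="${HARBOR_HOST:-harbor.linux.com}"
CA_SRC="${CA_SRC:-/data/ca/harbor-ca.crt}"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: distribute_ca NODE...
distribute_ca() {
  local node dir="/etc/docker/certs.d/${HARBOR_HOST}"
  for node in "$@"; do
    run ssh "root@${node}" "mkdir -p ${dir}" || return 1
    run scp "$CA_SRC" "root@${node}:${dir}/" || return 1
  done
}

# Example (20.0.0.204 is the worker used later; add the rest of your nodes):
#   DRY_RUN=1 distribute_ca 20.0.0.204
```

It only covers the CA copy; the daemon.json change, the /etc/hosts entry and `docker login` still have to be done on each node.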

Testing

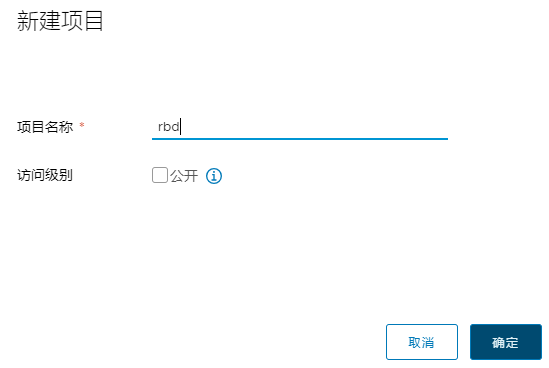

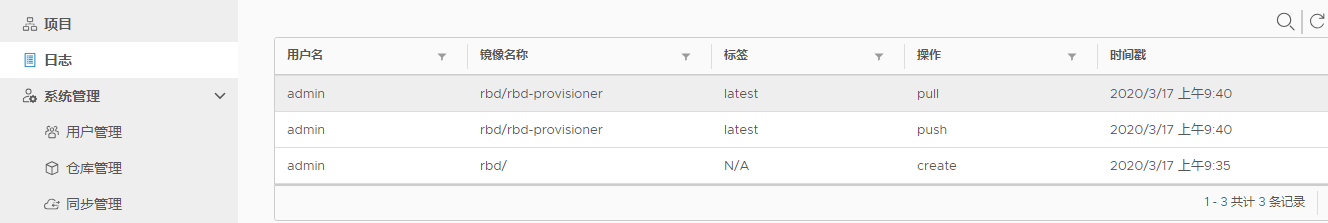

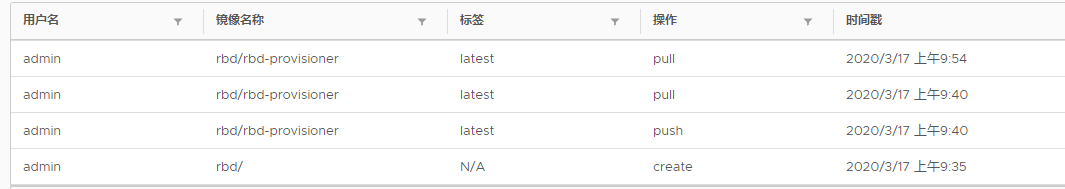

Using Ceph RBD (the rbd-provisioner image) as an example:

```shell
# Pull the image on a Kubernetes master, push it to the Harbor registry, then pull it on a worker node
[root@bs-k8s-master01 k8s]# docker pull quay.io/external_storage/rbd-provisioner:latest
[root@bs-k8s-master01 k8s]# docker tag quay.io/external_storage/rbd-provisioner:latest harbor.linux.com/rbd/rbd-provisioner:latest
[root@bs-k8s-master01 k8s]# docker push harbor.linux.com/rbd/rbd-provisioner:latest
[root@bs-k8s-node01 ~]# docker pull harbor.linux.com/rbd/rbd-provisioner:latest
```
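The pull, tag and push steps above follow a fixed pattern, so they can be wrapped for other upstream images. A sketch, assuming a tag-based source reference (not `@digest`), a prior `docker login`, and that the target project (rbd here) already exists in Harbor, since Harbor rejects pushes into a project that does not exist. Both function names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the Harbor reference for an upstream image,
# keeping only the last path component (name:tag), e.g.
#   quay.io/external_storage/rbd-provisioner:latest
#   -> harbor.linux.com/rbd/rbd-provisioner:latest
# Usage: harbor_ref SOURCE_REF PROJECT [HARBOR_HOST]
harbor_ref() {
  local src="$1" project="$2" host="${3:-harbor.linux.com}"
  local name="${src##*/}"            # strip registry and namespace
  case "$name" in
    *:*) ;;                          # already tagged
    *) name="${name}:latest" ;;      # Docker's implicit default tag
  esac
  printf '%s/%s/%s\n' "$host" "$project" "$name"
}

# Pull from upstream, retag for Harbor and push.
# Usage: mirror_to_harbor SOURCE_REF PROJECT [HARBOR_HOST]
mirror_to_harbor() {
  local src="$1" dst
  dst="$(harbor_ref "$@")" || return 1
  docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}

# Usage:
#   mirror_to_harbor quay.io/external_storage/rbd-provisioner:latest rbd
```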

```shell
# Store the registry username/password as base64
# cat ~/.docker/config.json | base64 -w 0
ewoJImF1dGhzIjogewoJCSJoYXJib3IubGludXguY29tIjogewoJCQkiYXV0aCI6ICJZV1J0YVc0NmVtbHpaV1psYVhwb2RRPT0iCgkJfQoJfSwKCSJIdHRwSGVhZGVycyI6IHsKCQkiVXNlci1BZ2VudCI6ICJEb2NrZXItQ2xpZW50LzE5LjAzLjUgKGxpbnV4KSIKCX0KfQ==
# Create the secret
# cat secret-harbor.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-17
#FileName: secret-harbor.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: v1
kind: Secret
metadata:
  name: k8s-harbor-login
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: ewoJImF1dGhzIjogewoJCSJoYXJib3IubGludXguY29tIjogewoJCQkiYXV0aCI6ICJZV1J0YVc0NmVtbHpaV1psYVhwb2RRPT0iCgkJfQoJfSwKCSJIdHRwSGVhZGVycyI6IHsKCQkiVXNlci1BZ2VudCI6ICJEb2NrZXItQ2xpZW50LzE5LjAzLjUgKGxpbnV4KSIKCX0KfQ==
# pwd
/data/k8s/harbor
# kubectl apply -f secret-harbor.yaml
secret/k8s-harbor-login created
# Deploy an rbd-provisioner pod as a test
[root@bs-k8s-master01 harbor]# cat external-storage-rbd-provisioner.yaml
##########################################################################
#Author: zisefeizhu
#QQ: 2********0
#Date: 2020-03-13
#FileName: external-storage-rbd-provisioner.yaml
#URL: https://www.cnblogs.com/zisefeizhu/
#Description: The test script
#Copyright (C): 2020 All rights reserved
###########################################################################
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns"]
    verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
  namespace: default
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
- kind: ServiceAccount
  name: rbd-provisioner
  namespace: default
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rbd-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
      - name: rbd-provisioner
        image: "harbor.linux.com/rbd/rbd-provisioner:latest"
        imagePullPolicy: IfNotPresent
        env:
        - name: PROVISIONER_NAME
          value: ceph.com/rbd
      imagePullSecrets:
        - name: k8s-harbor-login
      serviceAccount: rbd-provisioner
      nodeSelector:        ## node selector: only schedule onto nodes carrying this label
        rbd: "true"
# Label the node
[root@bs-k8s-master01 harbor]# kubectl label nodes 20.0.0.204 rbd=true
node/20.0.0.204 labeled
# Delete the rbd-provisioner image on bs-k8s-node01 first, so the pod has to pull it from Harbor
[root@bs-k8s-master01 harbor]# kubectl apply -f external-storage-rbd-provisioner.yaml
serviceaccount/rbd-provisioner created
clusterrole.rbac.authorization.k8s.io/rbd-provisioner created
clusterrolebinding.rbac.authorization.k8s.io/rbd-provisioner created
role.rbac.authorization.k8s.io/rbd-provisioner created
rolebinding.rbac.authorization.k8s.io/rbd-provisioner created
deployment.apps/rbd-provisioner created
[root@bs-k8s-master01 harbor]# kubectl get pods -o wide -w
NAME                              READY   STATUS              RESTARTS   AGE   IP             NODE         NOMINATED NODE   READINESS GATES
rbd-provisioner-9cf46c856-bl454   0/1     ContainerCreating   0          6s    <none>         20.0.0.204   <none>           <none>
rbd-provisioner-9cf46c856-bl454   1/1     Running             0          37s   172.20.46.82   20.0.0.204   <none>           <none>
```
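Encoding the whole ~/.docker/config.json works, but the secret then also carries the HttpHeaders block and any other registries that machine has logged in to. A narrower sketch builds the `.dockerconfigjson` value for Harbor alone. The function name is hypothetical, and it assumes the username and password contain no double quote or backslash, because no JSON escaping is done:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the base64 value for a kubernetes.io/dockerconfigjson
# Secret that holds credentials for a single registry.
# Assumes USER and PASSWORD contain no '"' or '\' (no JSON escaping is done).
# Usage: dockerconfigjson_b64 REGISTRY USER PASSWORD
dockerconfigjson_b64() {
  local registry="$1" user="$2" pass="$3" auth
  auth="$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"auth":"%s"}}}' "$registry" "$auth" | base64 | tr -d '\n'
  echo
}

# Usage: paste the output into .dockerconfigjson in secret-harbor.yaml, or let
# kubectl build an equivalent Secret directly:
#   kubectl create secret docker-registry k8s-harbor-login \
#     --docker-server=harbor.linux.com --docker-username=admin --docker-password='<password>'
```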

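The test is complete: the rbd-provisioner pod was scheduled onto 20.0.0.204 and is Running with the image harbor.linux.com/rbd/rbd-provisioner:latest.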

浙公网安备 33010602011771号
